Innovations in Data Center Power and Cooling Solutions

Data Centre Infrastructure News & Trends

STULZ updates CyberRack Active Rear Door cooling

STULZ, a manufacturer of mission-critical air conditioning technology, has launched an updated version of its CyberRack Active Rear Door, aimed at high-density data centre cooling applications where space is limited and heat loads are increasing.

The rear-mounted heat exchanger is designed to capture heat directly at rack level, using electronically commutated (EC) fans to remove heat at the point of generation. The updated unit is intended for use in both air-cooled and liquid-cooled data centre environments.

Integrated sensors monitor return and supply air temperatures within the rack. Cooling output is then adjusted automatically in line with server heat load, aiming to maintain consistent thermal performance as workloads fluctuate.

Designed for high-density and retrofit environments

Valeria Mercante, Product Manager at STULZ, explains, “The tremendous growth of high-performance computing and artificial intelligence has driven server power densities higher than ever, creating significant heat challenges.

“With data centre space often at a premium, the CyberRack Active Rear Door is precision engineered to deliver maximum cooling capacity in a footprint depth of just 274mm.

"Delivering up to 49kW chilled water cooling with large heat exchanger surfaces and EC fans, it also supports higher water temperatures and can extend free cooling hours. This helps reduce overall energy consumption and operating costs.”

The compact footprint means the unit can be installed without rack repositioning, making it suitable for retrofit projects and sites with limited floorspace.
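The 49 kW figure can be related to chilled-water flow with the standard sensible-heat balance Q = ṁ · c_p · ΔT. The sketch below is a minimal illustration that assumes plain water (c_p ≈ 4.186 kJ/kg·K, about 1 kg per litre) and illustrative supply/return temperature differences; it does not reflect STULZ's own sizing data.

```python
# Sensible-heat balance for a chilled-water coil: Q = m_dot * c_p * delta_T.
# Assumptions (not STULZ data): plain water, c_p = 4.186 kJ/(kg*K), 1 kg per litre.
WATER_CP_KJ_PER_KG_K = 4.186
WATER_DENSITY_KG_PER_L = 1.0


def chilled_water_flow_l_per_min(heat_kw: float, delta_t_k: float) -> float:
    """Water flow (L/min) needed to absorb heat_kw with a supply/return rise of delta_t_k."""
    if heat_kw < 0 or delta_t_k <= 0:
        raise ValueError("heat_kw must be >= 0 and delta_t_k must be > 0")
    mass_flow_kg_s = heat_kw / (WATER_CP_KJ_PER_KG_K * delta_t_k)  # kW / (kJ/kg) = kg/s
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Illustrative temperature rises only.
    for dt in (4.0, 6.0, 8.0):
        flow = chilled_water_flow_l_per_min(49.0, dt)
        print(f"49 kW at dT = {dt:.0f} K needs about {flow:.0f} L/min")
```

Because flow scales with 1/ΔT, doubling the supply/return temperature difference halves the water flow required for the same heat load.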

Custom adaptor frames are available to support a range of rack sizes and deployment models, including standalone use, supplemental precision air conditioning, and hybrid configurations alongside direct-to-chip liquid cooling.

For maintenance, the system includes a two-step door opening of more than 90°, providing access to fans and coils. Hot-swappable axial fans with plug connectors are also designed to simplify servicing and reduce downtime.

Differential pressure control adjusts fan speed in line with server airflow requirements, and the unit is specified for low-noise operation.
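Differential pressure control of this kind can be pictured as a simple feedback loop. The sketch below is a minimal, hypothetical controller that changes fan speed each control cycle in proportion to the pressure error (integral action) and clamps the result. The setpoint, gain, speed limits, and sign convention are all assumptions, not STULZ E² parameters.

```python
# Hypothetical differential-pressure fan controller (not the STULZ E² algorithm).
# Convention assumed here: dp_pa > 0 means the space between servers and the door
# is pressurised relative to the room, i.e. the door fans are moving less air than
# the servers push out, so fan speed should rise.
from dataclasses import dataclass


@dataclass
class DpFanController:
    setpoint_pa: float = 0.0        # target pressure difference (illustrative)
    gain_pct_per_pa: float = 5.0    # speed change per Pa of error per step (illustrative)
    min_speed_pct: float = 20.0
    max_speed_pct: float = 100.0
    speed_pct: float = 50.0         # current fan speed command

    def update(self, measured_dp_pa: float) -> float:
        """Nudge fan speed by gain * error (integral action) and clamp to limits."""
        error = measured_dp_pa - self.setpoint_pa
        self.speed_pct += self.gain_pct_per_pa * error
        self.speed_pct = max(self.min_speed_pct, min(self.max_speed_pct, self.speed_pct))
        return self.speed_pct


if __name__ == "__main__":
    ctrl = DpFanController()
    for dp in (2.0, 1.0, 0.0, -1.5):
        print(f"dp = {dp:+.1f} Pa -> fan speed {ctrl.update(dp):.1f}%")
```

A production controller would normally add signal filtering and rate limiting; both are omitted here to keep the loop readable.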

The CyberRack Active Rear Door includes the STULZ E² intelligent control system, featuring a 4.3-inch touchscreen interface. The controller supports functions such as redundancy management, cross-unit parallel operation, standby mode with emergency operation, and integration with building management systems.

Valeria continues, “The updated CyberRack Active Rear Door embodies our commitment to providing air conditioning solutions that combine cutting edge technology with intelligent design, user friendliness, energy efficiency, flexibility, and reliability.

“In environments where space is tight, heat loads are high, or there’s no raised floor, these advanced units can deliver highly efficient cooling, regardless of the server load.”

Joe Peck - 23 January 2026

Motivair introduces scalable CDU for AI data centres

Motivair, a Schneider Electric-owned provider of liquid cooling systems for data centres, has announced a new coolant distribution unit (CDU) designed to support high-density data centre cooling requirements, including large-scale AI and high-performance computing deployments.

The new CDU, MCDU-70, has a nominal capacity of 2.5 MW and is intended for use in liquid-cooled environments where compute density continues to increase.

Motivair says the system can be deployed as part of a centralised cooling architecture and scaled beyond 10 MW through multiple units operating together.
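As a rough illustration of how 2.5 MW building blocks combine, the sketch below counts the units needed for a given liquid-cooled load. It assumes an N+1 redundancy scheme and full nominal capacity per unit; Motivair has not specified either, so treat both as illustrative assumptions.

```python
import math


def cdus_required(it_load_mw: float, unit_capacity_mw: float = 2.5, redundant_units: int = 1) -> int:
    """Duty units needed to carry the load, plus a number of redundant (standby) units.

    Assumes every CDU delivers its full nominal capacity; real capacity depends on
    supply temperature, approach, and flow conditions.
    """
    if it_load_mw <= 0 or unit_capacity_mw <= 0 or redundant_units < 0:
        raise ValueError("loads and capacities must be positive; redundancy must be >= 0")
    duty_units = math.ceil(it_load_mw / unit_capacity_mw)
    return duty_units + redundant_units


if __name__ == "__main__":
    for load in (2.5, 7.6, 10.0, 12.0):
        print(f"{load} MW -> {cdus_required(load)} CDUs (N+1)")
```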

According to the company, the CDU is designed to support current and future GPU-based workloads, where heat output is significantly higher than traditional CPU-based infrastructure.

It notes that rack power densities in AI environments are expected to reach one megawatt and beyond, increasing the need for liquid cooling approaches.

Designed for scalable, high-density cooling

Motivair states that the new CDU integrates with Schneider Electric’s EcoStruxure platform, allowing multiple units to operate as part of a coordinated system. The design is intended to support phased expansion as cooling demand grows, without requiring major redesign of the wider plant.

Rich Whitmore, CEO of Motivair by Schneider Electric, comments, “Our solutions are designed to keep pace with chip and silicon evolution. Data centre success now depends on delivering scalable, reliable infrastructure that aligns with next-generation AI factory deployments.”

The CDU forms part of Schneider Electric’s wider liquid cooling portfolio, which includes systems ranging from lower-capacity deployments through to multi-megawatt installations.

Motivair says the units are designed as modular building blocks, enabling operators to select and combine systems based on specific performance and redundancy requirements.

The system is manufactured at Schneider Electric's facilities in North America, Europe, and Asia, and is designed to deliver high flow rates and pressure within a compact footprint.

The company adds that the design supports parallel filtration, real-time monitoring, and integration with other cooling components to support efficient operation across the data centre.

The MCDU-70 is now available to order globally.

Joe Peck - 21 January 2026

Vertiv expands perimeter cooling range in EMEA

Vertiv, a global provider of critical digital infrastructure, has expanded its CoolPhase Perimeter PAM air-cooled perimeter cooling range with additional capacity options and the introduction of the CoolPhase Condenser, now available across Europe, the Middle East, and Africa (EMEA).

The update is aimed at small, medium, and edge data centre environments, with Vertiv stating that the expanded range is intended to improve energy efficiency and operational resilience while reducing overall operating costs and extending equipment life.

The CoolPhase Perimeter PAM has been developed for modern data centre requirements and now incorporates the EconoPhase Pumped Refrigerant Economizer, integrated within the CoolPhase Condenser system.

Vertiv says the approach is designed to increase free-cooling operation by using a pumped refrigerant circuit that consumes less power than conventional compressor-based systems and reduces space requirements.
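The free-cooling behaviour described above can be pictured as a mode selector driven by the margin between indoor return-air temperature and outdoor temperature. The sketch below is hypothetical: the three modes, the temperature margins, and the function names are illustrative assumptions, not the Liebert iCOM control algorithm.

```python
# Hypothetical mode selection for a pumped-refrigerant economiser (not Vertiv's
# Liebert iCOM logic). The temperature margins are illustrative assumptions.
from enum import Enum


class Mode(Enum):
    COMPRESSOR = "compressor"                  # mechanical cooling only
    MIXED = "mixed"                            # pump assists, compressor trims
    PUMPED_REFRIGERANT = "pumped_refrigerant"  # full economiser, compressors off


def select_mode(outdoor_c: float, return_air_c: float,
                full_econ_margin_k: float = 15.0,
                partial_econ_margin_k: float = 5.0) -> Mode:
    """Pick a mode from the margin between indoor return air and outdoor air."""
    margin = return_air_c - outdoor_c
    if margin >= full_econ_margin_k:
        return Mode.PUMPED_REFRIGERANT
    if margin >= partial_econ_margin_k:
        return Mode.MIXED
    return Mode.COMPRESSOR


def economiser_hours(hourly_outdoor_c: list[float], return_air_c: float) -> int:
    """Count hours in which the pump contributes (full or mixed economiser)."""
    return sum(1 for t in hourly_outdoor_c
               if select_mode(t, return_air_c) is not Mode.COMPRESSOR)
```

Running the counter over a year of hourly weather data for a site gives a rough sense of how climate affects free-cooling potential under these assumed thresholds.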

The range uses R-513A refrigerant, which has a lower global warming potential than R-410A and is non-flammable with low toxicity. The company notes that this aligns the system with EU F-Gas Regulation 2024/573 and supports operators seeking to reduce emissions while maintaining cooling capacity.

Designed for efficiency and regulatory compliance

Sam Bainborough, VP Thermal Management, EMEA at Vertiv, explains, “With this latest addition to the Vertiv CoolPhase Perimeter PAM range, we're making our direct expansion offering more flexible while addressing two critical challenges faced by data centre operators today: environmental compliance and operational efficiency.

“The new air-cooled models boost free-cooling capabilities to lower PUE, demonstrating our commitment to providing energy-efficient and environmentally responsible options.”

The CoolPhase Perimeter PAM includes variable-speed compressors, staged coils, and patented filtration technology, and integrates with CoolPhase Condenser units using the Liebert iCOM control platform.

The range forms part of Vertiv’s wider thermal portfolio and is supported by the company’s service organisation, covering design, commissioning, and ongoing operational support.

Joe Peck - 21 January 2026

Sabey Data Centers partners with OptiCool Technologies

Sabey Data Centers, a data centre developer, owner, and operator, has announced a partnership with OptiCool Technologies, a US manufacturer of refrigerant-based cooling systems for data centres, to support higher-density computing requirements across its US facilities.

The collaboration sits within Sabey’s integrated cooling programme, which aims to ease adoption of liquid cooling approaches as processing demand increases, particularly for AI applications.

Sabey says the partnership will broaden the range of cooling technologies available to customers across its portfolio, providing a practical route to denser deployments.

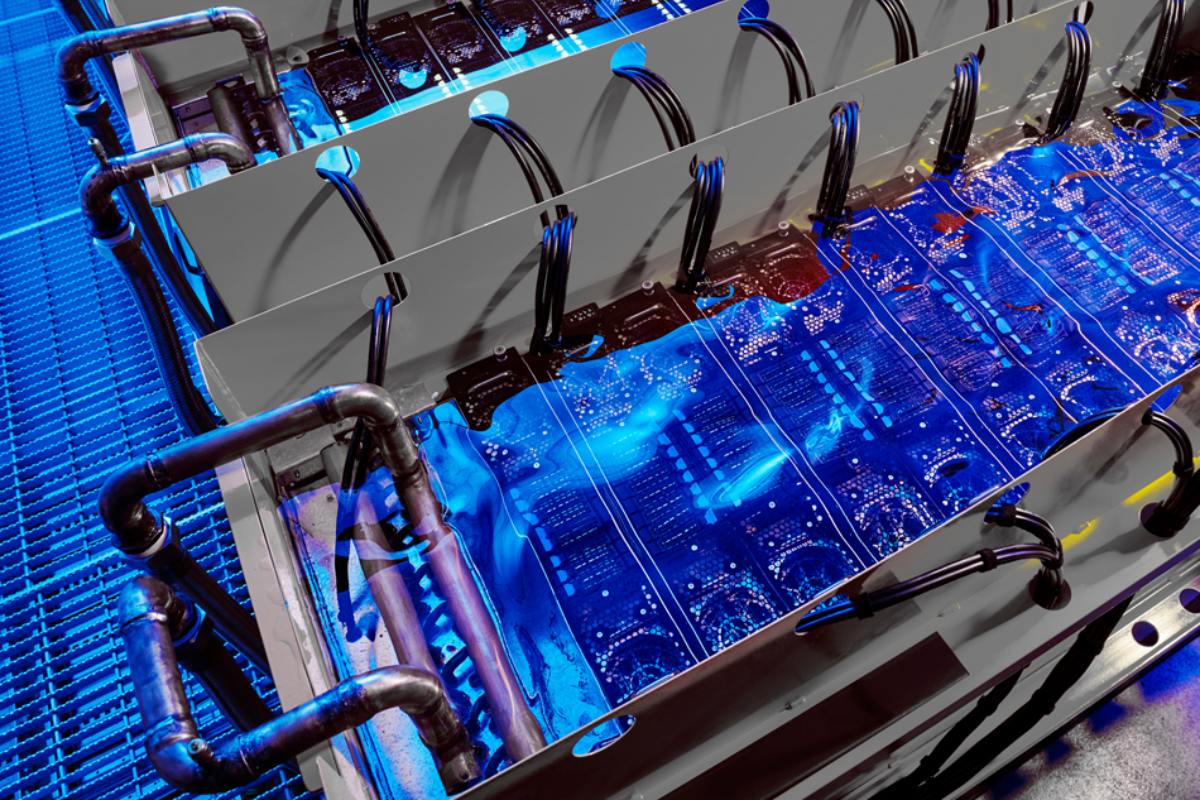

OptiCool supplies two-phase refrigerant pumped systems designed for data centre use. The non-conductive refrigerant absorbs heat at the rack through phase change, removing heat without chilled water, large mechanical infrastructure, or significant data hall changes.

Sabey states that the method can support increased density while reducing energy use and simplifying plant design.
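Phase change is what lets a modest refrigerant flow carry a large heat load. The sketch below compares the energy balance for evaporating flow (Q = ṁ · x · h_fg) with a single-phase dielectric liquid that only warms up (Q = ṁ · c_p · ΔT). The 100 kW rack, latent heat of 150 kJ/kg, exit vapour quality of 0.5, c_p of 1.4 kJ/kg·K, and 10 K rise are illustrative assumptions, not OptiCool specifications.

```python
# Mass flow needed to absorb a heat load via phase change versus via sensible heating.
# Illustrative property values only; they do not describe OptiCool's refrigerant.


def two_phase_mass_flow_kg_s(heat_kw: float, latent_heat_kj_per_kg: float,
                             exit_vapour_quality: float) -> float:
    """Q = m_dot * x_out * h_fg, for liquid entering saturated and leaving at quality x_out."""
    if not 0.0 < exit_vapour_quality <= 1.0:
        raise ValueError("exit vapour quality must be in (0, 1]")
    return heat_kw / (latent_heat_kj_per_kg * exit_vapour_quality)


def single_phase_mass_flow_kg_s(heat_kw: float, cp_kj_per_kg_k: float, delta_t_k: float) -> float:
    """Q = m_dot * c_p * delta_T, for a liquid that only warms up."""
    if cp_kj_per_kg_k <= 0 or delta_t_k <= 0:
        raise ValueError("c_p and delta_T must be positive")
    return heat_kw / (cp_kj_per_kg_k * delta_t_k)


if __name__ == "__main__":
    rack_kw = 100.0  # hypothetical rack load
    print(f"two-phase:    {two_phase_mass_flow_kg_s(rack_kw, 150.0, 0.5):.2f} kg/s")
    print(f"single-phase: {single_phase_mass_flow_kg_s(rack_kw, 1.4, 10.0):.2f} kg/s")
```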

Supporting higher-density and liquid cooling uptake

John Sasser, Chief Technical Officer at Sabey Data Centers, comments, “Partnering with OptiCool allows us to offer a cooling pathway that is both efficient and flexible.

"Together, we’re making it easier for customers to deploy advanced liquid cooling while maintaining the operational clarity and reliability they expect.”

Lawrence Lee, Chief Channel Officer at OptiCool, adds, “By working with Sabey, we’re able to bring our two-phase refrigerant systems into facilities designed to support the next generation of compute.

"This partnership helps customers move forward with confidence as they transition to more advanced cooling architectures.”

Joe Peck - 19 January 2026

Prism expands into the US market

Prism Power Group, a UK manufacturer of electrical switchgear and critical power systems for data centres, is looking to acquire a US business that already holds UL certification (covering compliance, safety, and quality assurance requirements) and is reportedly raising $40 million (£29.8 million) to fund the acquisition.

With surging data centre demand straining power infrastructure and outpacing domestic capacity, US developers are actively seeking trusted overseas suppliers to keep pace.

Prism says it is well placed to take advantage of the current climate, having forged its reputation in mechanical and electrical infrastructure for modular data centre initiatives in the UK and across Europe since 2005.

It adds that its engineers have executed a variety of end-to-end installations, from high-voltage substations and backup generators to low-voltage switchboards that safeguard servers, in "tightly scheduled" data centre projects.

Expansion to meet ongoing supply strain

Adhum Carter Wolde-Lule, Director at Prism Power Group, explains, “The scale and urgency is such that America’s data centre expansion has become an international endeavour, and we’re again able to punch well above our weight in providing the niche expertise that’s missing and will augment strained local supply chains on the ground, straight away.

“Major power manufacturers in the United States are ramping up production, while global giants have announced new stateside factories for transformers and switchgear components, aiming to cut lead times and ease the backlog - but those investments will take years to bear fruit and that is time the US data centre market simply doesn’t have.”

Keith Hall, CEO at Prism Power Group, adds, “For overseas engineering companies like us [...], the time is now and represents an exceptional opening into the world’s fastest-growing infrastructure market.

"Equally, for the US sector, the willingness to look globally for critical power systems excellence will prove vital in keeping ambitious build-outs on schedule and preventing the data centre explosion from hitting a capacity wall.”

Joe Peck - 19 January 2026

Multi-million pound Heathrow data centre upgrade completed

Managed IT provider Redcentric has completed a multi-million pound electrical infrastructure upgrade at its Heathrow Corporate Park data centre in London.

The project was partly funded through the Industrial Energy Transformation Fund, which supports high-energy organisations adopting lower-carbon technologies. The programme included replacement of legacy uninterruptible power supplies (UPS).

As part of the upgrade, Centiel supplied StratusPower modular UPS equipment to protect an existing 7 MW critical load. Redcentric states the system design allows the facility to increase capacity to 10.5 MW without additional infrastructure work.

The site reports a rise in UPS operating efficiency from below 90% to more than 97%, which could reduce future emissions over the expected lifecycle of the equipment.
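To put the efficiency change in context, UPS losses at a steady load follow directly from P_loss = P_out × (1/η - 1). The sketch below applies this to the 7 MW critical load using the 90% and 97% bounds reported above. It assumes the load runs at a constant 7 MW all year and that efficiency does not vary with load, which real UPS systems do not strictly satisfy, so the result is indicative only.

```python
HOURS_PER_YEAR = 8760


def ups_loss_mw(load_mw: float, efficiency: float) -> float:
    """Input power minus delivered power: P_in - P_out = P_out * (1/eta - 1)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return load_mw * (1.0 / efficiency - 1.0)


def annual_saving_mwh(load_mw: float, eta_old: float, eta_new: float) -> float:
    """Energy saved per year if the load and both efficiencies stay constant (assumption)."""
    return (ups_loss_mw(load_mw, eta_old) - ups_loss_mw(load_mw, eta_new)) * HOURS_PER_YEAR


if __name__ == "__main__":
    load = 7.0  # MW critical load quoted for the site
    print(f"losses at 90%: {ups_loss_mw(load, 0.90):.3f} MW")
    print(f"losses at 97%: {ups_loss_mw(load, 0.97):.3f} MW")
    print(f"indicative saving: {annual_saving_mwh(load, 0.90, 0.97):,.0f} MWh/year")
```

Under these assumptions, losses fall from about 0.78 MW to about 0.22 MW, or roughly 4,900 MWh per year.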

Modular UPS deployment and installation

Paul Hone, Data Centre Facilities Director at Redcentric, comments, “Our London West colocation data centre is a strategically located facility that offers cost effective ISO-certified racks, cages, private suites, and complete data halls, as well as significant on-site office space. The data centre is powered by 100% renewable energy, sourced solely from solar, wind, and hydro.

“In 2023 we embarked on the start of a full upgrade across the facility which included the electrical infrastructure and live replacement of legacy UPS before they reached end of life. This part of the project has now been completed with zero downtime or disruption.

“In addition, for 2026, we are also planning a further deployment of 12 MW of power protection from two refurbished data halls being configured to support AI workloads of the future.”

Aaron Oddy, Sales Manager at Centiel, adds, “A critical component of the project was the strategic removal of 22 MW of inefficient, legacy UPS systems. By replacing outdated technology with the latest innovation, we have dramatically improved efficiency delivering immediate and substantial cost savings.

“StratusPower offers an exceptional 97.6% efficiency, dramatically increasing power utilisation and reducing the data centre's overall carbon footprint - a key driver for Redcentric.

“The legacy equipment was replaced by Centiel’s StratusPower UPS system, featuring 14x500kW Modular UPS Systems. This delivered a significant reduction in physical size, while delivering greater resilience as a direct result of StratusPower’s award-winning, unique architecture.”

Durata carried out the installation work.

Paul Hone concludes, “Environmental considerations were a key driver for us. StratusPower is a truly modular solution, ensuring efficient running and maintenance of systems. Reducing the requirement for major midlife service component replacements further adds to its green credentials.

“With no commissioning issues [and] zero reliability challenges or problems with the product, we are already talking to the Centiel team about how they can potentially support us with power protection at our other sites.”

Joe Peck - 16 January 2026

APR increases power generation capacity to 1.1 GW

APR Energy, a US provider of fast-track mobile gas turbine power generation for data centres and utilities, has expanded its mobile power generation fleet after acquiring eight gas turbines, increasing its owned capacity from 850 MW to more than 1.1 GW.

The company says the investment reflects rising demand from data centre developers and utilities that require short-term power to support growth while permanent grid connections are delayed.

APR currently provides generation for several global customers, including a major artificial intelligence data centre operator.

Across multiple regions, new transmission and grid reinforcement projects are taking years to deliver, creating a gap between available power and the needs of electricity-intensive facilities.

APR reports growing enquiries from data centre operators that require capacity within months rather than years.

Rapid deployment for interim power

The company says its turbines can typically be delivered, installed, and brought online within 30 to 90 days, enabling organisations to progress construction schedules and maintain service reliability while longer-term infrastructure is built.

Chuck Ferry, Executive Chairman and Chief Executive Officer of APR Energy, comments, “The demand we are seeing is immediate and substantial.

“Data centres and utilities need dependable power now. Expanding our capacity allows us to meet that demand with speed, certainty, and proven execution.”

APR states that the expanded fleet positions it to support data centre growth at a time when grid access remains constrained, combining rapid deployment with operational experience across international markets.

Joe Peck - 16 January 2026

Vertiv launches new MegaMod HDX configurations

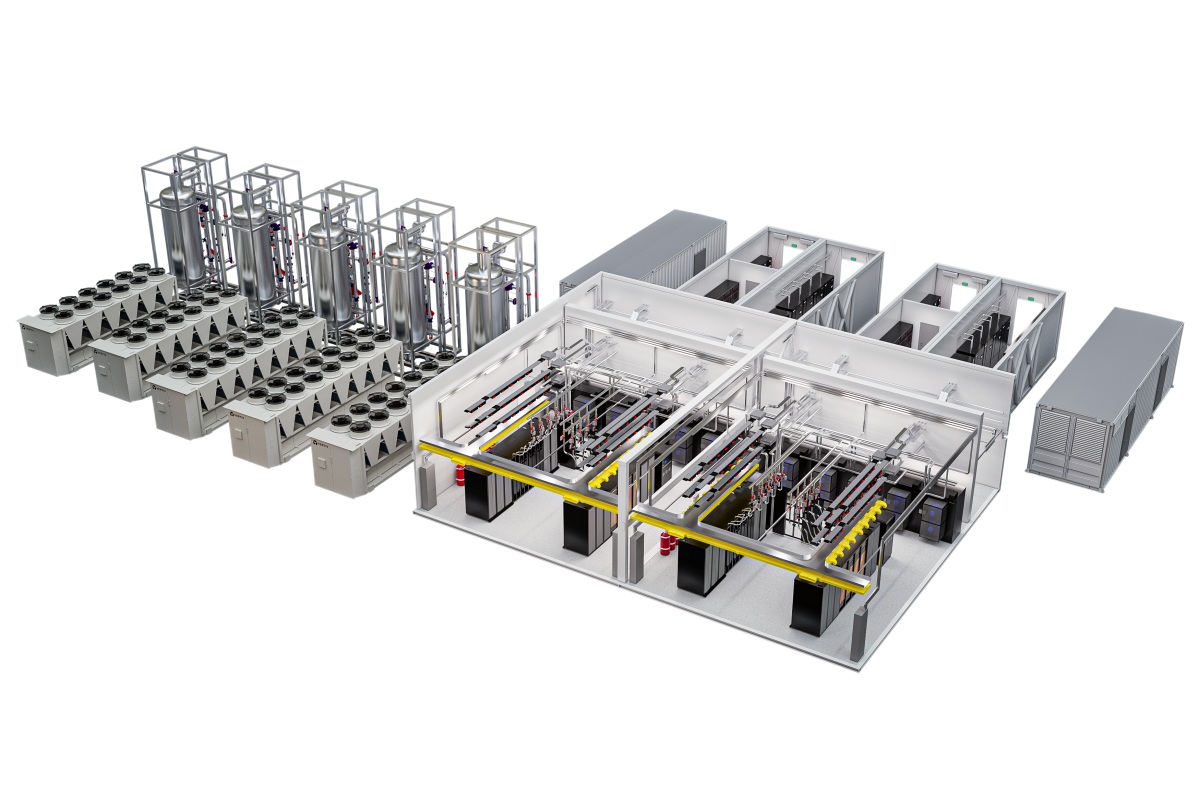

Vertiv, a global provider of critical digital infrastructure, has introduced new configurations of its MegaMod HDX prefabricated power and liquid cooling system for high-density computing deployments in North America and EMEA.

The units are designed for artificial intelligence (AI) and high-performance computing (HPC) environments and allow operators to increase power and cooling capacity as requirements rise.

Vertiv states the configurations give organisations a way to manage greater thermal loads while maintaining deployment speed and reducing space requirements.

The MegaMod HDX integrates direct-to-chip liquid cooling with air-cooled systems to meet the demands of pod-based AI and GPU clusters.

The compact configuration supports up to 13 racks with a maximum capacity of 1.25 MW, while the larger combo design supports up to 144 racks and power capacities up to 10 MW.

Both are intended for rack densities from 50 kW to above 100 kW.
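Dividing the stated maximum capacity by the rack count gives a quick sanity check against the 50 kW to 100 kW+ density range. The sketch assumes, for illustration only, that the full stated capacity is available to IT racks and spread evenly, which ignores cooling and auxiliary loads inside the pod.

```python
import math


def average_rack_kw(total_mw: float, racks: int) -> float:
    """Average power per rack if the stated capacity were spread evenly (assumption)."""
    if racks <= 0:
        raise ValueError("racks must be positive")
    return total_mw * 1000.0 / racks


def racks_at_density(total_mw: float, rack_kw: float) -> int:
    """Whole racks that fit within the stated capacity at a uniform rack density."""
    return math.floor(total_mw * 1000.0 / rack_kw)


if __name__ == "__main__":
    configs = {"compact": (1.25, 13), "combo": (10.0, 144)}
    for name, (mw, racks) in configs.items():
        print(f"{name}: {average_rack_kw(mw, racks):.1f} kW per rack on average")
    print(f"compact at 100 kW/rack: {racks_at_density(1.25, 100.0)} racks")
```

On these assumptions the compact configuration averages about 96 kW per rack and the combo design about 69 kW, both inside the quoted range.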

Prefabricated scaling for high-density sites

The hybrid architecture combines direct-to-chip cooling with air cooling as part of a prefabricated pod.

According to Vertiv, a distributed redundant power design allows the system to continue operating if a module goes offline, and a buffer-tank thermal backup feature helps stabilise GPU clusters during maintenance or changes in load.
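A buffer tank's contribution can be estimated from the heat its water can absorb before exceeding an allowed temperature rise: t = V · ρ · c_p · ΔT / Q. The sketch assumes plain water, a fully mixed tank, and no contribution from pipework; the tank volumes, allowed rise, and load are illustrative, not Vertiv figures.

```python
WATER_CP_KJ_PER_KG_K = 4.186   # assumption: plain water, no glycol
WATER_DENSITY_KG_PER_L = 1.0


def buffer_ride_through_s(tank_litres: float, allowed_rise_k: float, heat_load_kw: float) -> float:
    """Seconds until a fully mixed tank warms by allowed_rise_k while absorbing heat_load_kw."""
    if heat_load_kw <= 0:
        raise ValueError("heat_load_kw must be positive")
    stored_kj = tank_litres * WATER_DENSITY_KG_PER_L * WATER_CP_KJ_PER_KG_K * allowed_rise_k
    return stored_kj / heat_load_kw  # kJ / (kJ/s) = s


if __name__ == "__main__":
    for litres in (1_000, 2_000, 5_000):
        t = buffer_ride_through_s(litres, allowed_rise_k=5.0, heat_load_kw=1_000.0)
        print(f"{litres} L tank, 5 K rise, 1 MW load: about {t:.0f} s")
```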

The company positions the factory-assembled approach as a way to standardise deployment and planning, and to support incremental build-outs as data centre requirements evolve.

The MegaMod HDX configurations draw on Vertiv’s existing power, cooling, and management portfolio, including the Liebert APM2 UPS (uninterruptible power supply), CoolChip CDU (coolant distribution unit), PowerBar busway system, and Unify infrastructure monitoring.

Vertiv also offers compatible racks, including OCP-compliant designs, CoolLoop RDHx rear door heat exchangers, CoolChip in-rack CDUs, rack power distribution units, PowerDirect in-rack DC power systems, and CoolChip Fluid Network Rack Manifolds.

Viktor Petik, Senior Vice President, Infrastructure Solutions at Vertiv, says, “Today’s AI workloads demand cooling solutions that go beyond traditional approaches.

"With the Vertiv MegaMod HDX available in both compact and combo solution configurations, organisations can match their facility requirements while supporting high-density, liquid-cooled environments at scale."

Joe Peck - 15 January 2026

Janitza launches UMG 801 power analyser

Modern data centres often face a choice between designing electrical monitoring systems far beyond immediate needs or replacing equipment as sites expand.

Janitza, a German manufacturer of energy measurement and power quality monitoring equipment, says its UMG 801 power analyser is designed to avoid this issue by allowing users to increase capacity from eight to 92 current measuring channels without taking systems offline.

The analyser is suited to compact switchboards, with a fully expanded installation occupying less DIN rail space than traditional designs that rely on transformer disconnect terminals.

Each add-on module introduces eight additional measuring channels within a single sub-unit, reducing physical footprint within crowded cabinets.

Expandable monitoring with fewer installation constraints

The core UMG 801 unit supports ten virtual module slots that can be populated in any mix. These include conventional transformer modules, low-power modules, and digital input modules.

Bridge modules allow measurement points to be located up to 100 metres away without consuming module capacity, reducing wiring impact and installation complexity.

The analyser samples voltage at 51.2 kHz and provides Class 0.2 accuracy across voltage, current, and energy readings. This level of precision supports applications such as calculating power usage effectiveness (PUE) to two decimal places and assessing harmonic distortion that may affect uninterruptible power supplies (UPS).
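PUE is total facility energy divided by IT equipment energy, so meter error on both readings feeds straight into the result. The sketch below treats Class 0.2 as a simple ±0.2% worst-case error on each energy reading, which simplifies how accuracy classes are actually specified; the energy totals are hypothetical.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power usage effectiveness: total facility energy divided by IT equipment energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def pue_bounds(total_facility_kwh: float, it_kwh: float, rel_error: float = 0.002) -> tuple[float, float]:
    """Worst-case PUE range if each reading may be off by +/- rel_error (0.2% assumed here)."""
    low = pue(total_facility_kwh * (1 - rel_error), it_kwh * (1 + rel_error))
    high = pue(total_facility_kwh * (1 + rel_error), it_kwh * (1 - rel_error))
    return low, high


if __name__ == "__main__":
    total, it = 1_480_000.0, 1_000_000.0  # hypothetical monthly energy totals in kWh
    lo, hi = pue_bounds(total, it)
    print(f"PUE = {pue(total, it):.2f} (worst case {lo:.3f} to {hi:.3f})")
```

With these assumptions the worst-case spread is roughly ±0.006 around 1.48, small enough for the second decimal place to be meaningful.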

Voltage harmonic analysis extends to the 127th order, and transient events as short as 18 microseconds can be recorded. An onboard 4 GB memory also preserves data continuity during network disruptions.
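Harmonic analysis rests on splitting each voltage cycle into its frequency components and comparing harmonic content with the fundamental. The sketch below shows the idea with a plain discrete Fourier transform over one 50 Hz cycle sampled at 51.2 kHz (1,024 samples), then computes total harmonic distortion (THD) up to the 127th order. It is an illustration, not Janitza's implementation: standards-based analysers typically follow IEC 61000-4-7, which uses multi-cycle measurement windows, and the 50 Hz supply and synthetic test waveform here are assumptions.

```python
import cmath
import math

SAMPLE_RATE_HZ = 51_200
MAINS_HZ = 50  # assumption: 50 Hz supply, so one cycle is exactly 1,024 samples


def harmonic_amplitudes(one_cycle: list[float], max_order: int = 127) -> dict[int, float]:
    """Peak amplitude of harmonics 1..max_order from exactly one fundamental cycle.

    With an integer number of cycles in the window, harmonic h falls on DFT bin h.
    """
    n = len(one_cycle)
    if max_order >= n // 2:
        raise ValueError("max_order must be below the Nyquist bin")
    amps = {}
    for h in range(1, max_order + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * h * k / n) for k, x in enumerate(one_cycle))
        amps[h] = 2.0 * abs(acc) / n
    return amps


def thd(amps: dict[int, float]) -> float:
    """Total harmonic distortion: root-sum-square of harmonics 2..H over the fundamental."""
    return math.sqrt(sum(a * a for h, a in amps.items() if h >= 2)) / amps[1]


if __name__ == "__main__":
    n = SAMPLE_RATE_HZ // MAINS_HZ
    peak = 325.0  # roughly 230 V RMS
    # Synthetic signal: 3% fifth and 2% seventh harmonic (illustrative values).
    wave = [peak * (math.sin(2 * math.pi * k / n)
                    + 0.03 * math.sin(2 * math.pi * 5 * k / n)
                    + 0.02 * math.sin(2 * math.pi * 7 * k / n)) for k in range(n)]
    print(f"THD = {100 * thd(harmonic_amplitudes(wave)):.2f}%")
```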

The system is compatible with ISO 50001 energy management frameworks and includes two Ethernet interfaces that can operate simultaneously to provide redundant communication paths.

Native OPC UA and Modbus TCP/IP support enables direct communication with energy management platforms and legacy supervisory control systems, while whitelisting functions restrict access to approved devices. An RS-485 interface provides further support for older infrastructure.
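For readers integrating with a Modbus TCP interface, the sketch below shows how a standard "read holding registers" (function 0x03) request is framed and how a response is decoded, using only Python's struct module. The register address, unit ID, and big-endian float layout are hypothetical; they are not taken from Janitza's register map.

```python
import struct

READ_HOLDING_REGISTERS = 0x03


def build_read_request(transaction_id: int, unit_id: int, start_address: int, quantity: int) -> bytes:
    """Modbus TCP frame: 7-byte MBAP header followed by a function 0x03 PDU."""
    if not 1 <= quantity <= 125:
        raise ValueError("function 0x03 allows 1-125 registers per request")
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_address, quantity)
    # MBAP: transaction id, protocol id (always 0), length (unit id + PDU), unit id
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_response(frame: bytes) -> list[int]:
    """Return register values from a function 0x03 response frame."""
    function = frame[7]
    if function & 0x80:  # high bit set means an exception response
        raise RuntimeError(f"Modbus exception code {frame[8]}")
    byte_count = frame[8]
    data = frame[9:9 + byte_count]
    return list(struct.unpack(f">{byte_count // 2}H", data))


def registers_to_float32(high_word: int, low_word: int) -> float:
    """Combine two registers into an IEEE 754 float (high word first; devices vary)."""
    return struct.unpack(">f", struct.pack(">HH", high_word, low_word))[0]
```

In practice the request would be sent over a TCP socket to port 502, and the actual register addresses and word order must come from the device documentation.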

Configuration is carried out through an integrated web server rather than proprietary software, and an optional remote display allows monitoring without opening energised cabinets.

Installations typically start with a single base unit at the primary distribution level, with additional modules added gradually as demand grows, reducing the need for upfront expenditure and avoiding replacement activity that risks downtime.

Janitza’s remote display connects via USB and mirrors the analyser’s interface, providing visibility of all measurement channels from the switchboard front panel. Physical push controls enable parameter navigation, helping users access configuration and measurement information without opening the enclosure.

The company notes that carrying out upgrades without interrupting operations may support facilities that cannot accommodate downtime windows.

Joe Peck - 13 January 2026

Data centre cooling options

Modern data centres require advanced cooling methods to maintain performance as power densities rise and workloads intensify. In light of this, BAC (Baltimore Aircoil Company), a provider of data centre cooling equipment, has shared guidance from its experts on the cooling options available.

This comes as the sector continues to expand rapidly, with some analysts estimating an 8.5% annual growth rate over the next five years, pushing the market beyond $600 billion (£445 billion) by 2029.

AI and machine learning are accelerating this trajectory. Goldman Sachs Research forecasts an increase of nearly 200 TWh in annual power demand between 2024 and 2030, with AI projected to represent almost a fifth of global data centre load by 2028.

This growth places exceptional pressure on cooling infrastructure. Higher rack densities and more compact layouts generate significant heat, making reliable heat rejection essential to prevent equipment damage, downtime, and performance degradation. The choice of cooling system directly influences efficiency and Total-power Usage Effectiveness (TUE).
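TUE extends PUE inside the IT equipment. It is usually defined as ITUE × PUE, where ITUE is the energy entering IT equipment divided by the energy reaching compute components (after power supply, fan, and other in-server overheads), so TUE equals total facility energy divided by compute energy. The sketch below uses hypothetical energy figures to show why the metric matters for cooling choices: in this example, removing server fan load leaves PUE unchanged while TUE improves.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Total facility energy / energy delivered to IT equipment."""
    return total_facility_kwh / it_equipment_kwh


def itue(it_equipment_kwh: float, compute_kwh: float) -> float:
    """Energy into IT equipment / energy reaching compute components (CPU, GPU, memory)."""
    return it_equipment_kwh / compute_kwh


def tue(total_facility_kwh: float, it_equipment_kwh: float, compute_kwh: float) -> float:
    """TUE = ITUE x PUE, i.e. total facility energy / energy reaching compute components."""
    return itue(it_equipment_kwh, compute_kwh) * pue(total_facility_kwh, it_equipment_kwh)


if __name__ == "__main__":
    compute = 1000.0  # hypothetical compute energy, kWh
    # Air-cooled servers with fans (hypothetical) versus the same servers with fan load removed.
    for label, it, total in (("air-cooled", 1200.0, 1560.0), ("liquid-cooled", 1100.0, 1430.0)):
        print(f"{label}: PUE {pue(total, it):.2f}, TUE {tue(total, it, compute):.2f}")
```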

Cooling technologies inside the facility

Two primary approaches dominate internal cooling: air-based systems and liquid-based systems.

Air-cooled racks have long been the standard, especially in traditional enterprise environments or facilities with lower compute loads. However, rising heat output, hotspots, and increased energy consumption are testing the limits of air-only designs, contributing to higher TUE and emissions.

Liquid cooling offers substantially greater heat-removal capacity. Different approaches to this include:

• Immersion cooling, which submerges IT hardware in non-conductive dielectric fluid, enabling efficient heat rejection without reliance on ambient airflow. Immersion tanks are commonly paired with evaporative or dry coolers outdoors, maximising output while reducing energy use. The method also enables denser layouts by limiting thermal constraints.

• Direct-to-chip cooling, which channels coolant through cold plates on high-load components such as CPUs and GPUs. While effective, it is less efficient than immersion and can introduce additional complexity. Rear door heat exchangers offer a hybrid path for legacy sites, removing heat at rack level without overhauling the entire cooling architecture.

Heat rejection outside the white space

Once captured inside the building, heat must be expelled efficiently. A range of outdoor systems supports differing site priorities, including energy, water, and climate considerations. Approaches include:

• Dry coolers — These are increasingly used in water-sensitive regions. By using ambient air, they eliminate evaporative loss and offer strong Water Usage Effectiveness (WUE), though typically with higher power draw than evaporative systems. In cooler climates, they benefit from free cooling, reducing operational energy.

• Hybrid and adiabatic systems — These offer variable modes, balancing energy and water use. They switch between dry operation and wet operation as conditions change, helping operators reduce water consumption while still tapping evaporative efficiencies during peaks.

• Evaporative cooling — Through cooling towers or closed-circuit fluid coolers, this remains one of the most energy-efficient options where water is available. Towers evaporate water to remove heat, while fluid coolers maintain cleaner internal circuits, protecting equipment from contaminants.
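Water Usage Effectiveness, as defined by The Green Grid, is annual site water use in litres divided by IT equipment energy in kWh. The sketch below compares the three approaches using hypothetical water volumes and assumes, for simplicity, that a hybrid system's water use scales linearly with the fraction of hours it runs wet; it ignores the extra fan energy that dry operation typically costs.

```python
def wue_l_per_kwh(site_water_litres: float, it_energy_kwh: float) -> float:
    """Water usage effectiveness: annual site water use / IT equipment energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return site_water_litres / it_energy_kwh


def hybrid_water_litres(full_wet_litres: float, wet_fraction: float) -> float:
    """Assumption: water use scales linearly with the share of hours run in wet mode."""
    if not 0.0 <= wet_fraction <= 1.0:
        raise ValueError("wet_fraction must be between 0 and 1")
    return full_wet_litres * wet_fraction


if __name__ == "__main__":
    it_kwh = 1_000.0 * 8_760  # hypothetical 1 MW IT load for a year
    evaporative = 15_000_000.0  # hypothetical litres per year if always wet
    for label, litres in (("evaporative", evaporative),
                          ("hybrid, 30% wet", hybrid_water_litres(evaporative, 0.3)),
                          ("dry cooler", 0.0)):
        print(f"{label}: WUE = {wue_l_per_kwh(litres, it_kwh):.2f} L/kWh")
```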

With data centre deployment expanding across diverse climates, operators increasingly weigh water scarcity, power constraints, and sustainability targets. Selecting the appropriate external cooling approach requires evaluating both consumption profiles and regulatory pressures.

Joe Peck - 12 January 2026

Head office & Accounts:

Suite 14, 6-8 Revenge Road, Lordswood

Kent ME5 8UD

T: +44 (0)1634 673163

F: +44 (0)1634 673173