Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Cyber Security Insights for Resilient Digital Defence

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Events

Sponsored

ISE 2026 launches inaugural CyberSecurity Summit

Integrated Systems Europe (ISE), a Barcelona-based annual trade show for audiovisual (AV) and systems integration professionals, has announced the launch of the CyberSecurity Summit, a major new addition to its 2026 content programme.

Scheduled for Thursday, 5 February 2026, the Summit will tackle the escalating cybersecurity challenges confronting the professional AV and systems integration industries as digital threats increasingly impact critical infrastructure, smart buildings, venues, and public services.

The announcement comes during European Cybersecurity Awareness Month, a continent-wide initiative coordinated by ENISA and the European Commission to promote safer digital practices across businesses, institutions, and individuals.

With cybercrime surging across Europe and globally, the timing of ISE’s new Summit couldn’t be more relevant.

Cybersecurity: A business-critical priority for AV

As AV systems become increasingly networked and embedded in enterprise, public sector, and venue environments, they are directly exposed to the same vulnerabilities as traditional IT infrastructure.

From control rooms and conferencing platforms to digital signage, smart buildings, and event venues, AV solutions are now high-value targets for ransomware, data breaches, social engineering, and denial-of-service attacks.

At ISE’s CyberSecurity Summit, AV professionals will learn how to safeguard critical systems, navigate evolving regulations and standards such as NIS2 and ISO 27001, and transform cybersecurity from a vulnerability into a strategic advantage, before it’s too late.

“Cybersecurity is no longer a technical afterthought; it’s a business-critical factor,” says Mike Blackman, Managing Director of Integrated Systems Events. “For AV manufacturers, integrators, and technology users, it’s essential for accessing public tenders, ensuring regulatory compliance, and building long-term trust with clients.”

Pere Ferrer i Sastre, Summit Chair and former Director General of the Catalan Police (Mossos d’Esquadra), with extensive experience in public security, digital transformation, regulatory frameworks, and critical infrastructure management, will facilitate discussions addressing emerging digital threats to the AV and systems integration sectors.

He explains, “Cybersecurity is no longer optional; it lies at the heart of every AV innovation. ISE’s CyberSecurity Summit brings together the brightest minds in our industry to confront today’s digital threats head-on and turn them into strategic advantages.

"By sharing actionable insights, proven strategies, and real-world experience, we will empower AV professionals to protect critical systems, lead with confidence, and build a safer, smarter future for the entire industry.”

The CyberSecurity Summit at ISE 2026 will unite AV and cybersecurity leaders to tackle the most pressing challenges facing connected AV systems in critical infrastructure, smart buildings, and corporate environments.

Opening with Pere Ferrer, the Summit features keynotes from Shaun Reardon (DNV Cyber) on building cyber resilience, Timo Kosig and Andrew Dowsett (Barco Control Rooms) on secure operations, and Pedro Pablo Pérez (TRC) on protecting corporate communications.

Roundtables with Laura Caballero (Cybersecurity Agency of Catalonia), Folly Farrel (TÜV SÜD), and Sergi Carmona (Veolia España) will explore compliance, governance, and best practices for securing critical AV environments.

Cybersecurity: A strategic imperative for AV

The Summit is part of ISE 2026’s overarching theme, "Push Beyond", which challenges the global AV and systems integration community to redefine what’s possible.

By introducing the CyberSecurity Summit, ISE is pushing beyond traditional boundaries to address one of the most urgent and complex issues facing the industry today.

Don’t miss your chance to be part of what’s next

Registration for ISE 2026 is now open, so take your place among the visionaries, trailblazers, and creative minds from every corner of the globe.

Whether you're an AV integrator, manufacturer, IT manager, or facilities director, the CyberSecurity Summit offers essential knowledge and networking opportunities to help you navigate the evolving threat landscape.

It’s a chance to learn from leading voices in cybersecurity and discover how to protect your business, your clients, and your reputation.

Reserve your spot at the event where tomorrow’s innovations are unveiled, and let’s Push Beyond what’s possible, together: Click here to register for free using the code ‘dcnnews’ to Push Beyond.

Joe Peck - 27 January 2026

Artificial Intelligence in Data Centre Operations

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Security Risk Management for Data Centre Infrastructure

Vertiv launches AI predictive maintenance service

Vertiv, a global provider of critical digital infrastructure, has launched a new AI-powered predictive maintenance service, Vertiv Next Predict, aimed at modern data centres and facilities supporting AI workloads, including AI factories.

The managed service is designed to move maintenance away from time-based and reactive models, using data analysis to identify potential issues before they affect operations.

Vertiv says the service supports power, cooling, and IT systems with the aim of improving visibility and supporting more consistent infrastructure performance.

The company notes that, as AI workloads increase compute intensity, data centre operators are under pressure to maintain uptime and performance across increasingly complex environments. In this respect, predictive maintenance and advanced analytics are positioned as a way to support more informed operational decisions.

Ryan Jarvis, Vice President of the Global Services Business Unit at Vertiv, says, “Data centre operators need innovative technologies to stay ahead of potential risks as compute intensity rises and infrastructures evolve.

“Vertiv Next Predict helps data centres unlock uptime, shifting maintenance from traditional calendar-based routines to a proactive, data-driven strategy. We move from assumptions to informed decisions by continuously monitoring equipment condition and enabling risk mitigation before potential impacts to operations.”

AI-based monitoring and anomaly detection

Vertiv Next Predict uses AI-based anomaly detection to analyse operating conditions and identify deviations from expected behaviour at an early stage. A predictive algorithm then assesses potential operational impact to determine risk and prioritise responses.
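As a rough illustration of the two steps described above (flagging deviations from expected behaviour, then ranking them by potential impact), the following minimal sketch uses a rolling z-score. It is not Vertiv's algorithm: the function names, the window size, the threshold, the impact scores, and the temperature readings are all hypothetical and chosen only for the example.

```python
from statistics import mean, stdev


def find_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate strongly from the preceding window.

    A reading is flagged when it lies more than `threshold` standard
    deviations from the mean of the previous `window` readings.
    """
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any change as a deviation.
            if readings[i] != mu:
                anomalies.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


def prioritise(anomalies, impact_by_index):
    """Order flagged indices by a (hypothetical) impact score, highest first."""
    return sorted(anomalies, key=lambda i: impact_by_index.get(i, 0.0), reverse=True)


# Hypothetical coolant supply temperatures in degrees Celsius.
temps = [18.0, 18.1, 17.9, 18.0, 18.2, 18.1, 24.5, 18.0, 18.1]
flagged = find_anomalies(temps)
print(flagged)
```

A production service would presumably draw on far richer models and site context; the sketch only shows the general shape of "detect, then rank".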

The service also includes root cause analysis to help isolate contributing factors, supporting more targeted resolution. Based on system data and site context, prescriptive actions are defined and carried through to execution, with corrective measures carried out by Vertiv Services personnel.

According to Vertiv, this approach is intended to support earlier intervention and reduce the likelihood of unplanned outages by addressing issues before they escalate.

The service currently supports a range of Vertiv power and cooling platforms, including battery energy storage systems (BESS) and liquid cooling components. Vertiv says the platform is designed to expand over time to support additional technologies as data centre infrastructure evolves.

Vertiv Next Predict is intended to integrate as part of a broader grid-to-chip service architecture, with the aim of supporting long-term scalability and alignment with future data centre technologies.

Joe Peck - 27 January 2026

Cyber Security Insights for Resilient Digital Defence

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Security Risk Management for Data Centre Infrastructure

Warnings of drone‑enabled cyber threats to critical infrastructure

As drone technology becomes more sophisticated and accessible across the globe, researchers from Innovation Central Canberra (ICC) at the University of Canberra have teamed up with Australian tech company DroneShield to understand the risk profile of drone-enabled cyber attacks on critical infrastructure.

With the rapid expansion of drone tech reshaping Australia’s security landscape, Defence, national security, and critical infrastructure are facing new challenges; meeting these requires capability that is not only technologically advanced, but also assessed and refined through rigorous, independent research environments.

“We know how drones have changed traditional warfare, but are we oblivious of the role they play in cyber security?" questions Professor Frank den Hartog, Cisco Research Chair in Critical Infrastructure at the University of Canberra. "That's a worry, and an opportunity for our drone and cyber industry.”

The project began with a team comprising Professor den Hartog and ICC students Andrew Giumelli and Simone Chitsinde, who undertook targeted analysis and interviewed critical infrastructure operators to better understand the cyber threats that drones enable.

Increasing threats to critical infrastructure

In the independent report, researchers found no recorded domestic cyber incidents using drones to date, but also noted that limited drone detection capabilities and awareness, minimal government guidance, and rising drone use are creating vulnerabilities.

This highlights a gap in reporting on drone-enabled cyber threats in Australia. The findings warn that the combination of steadily increasing drone capability, limited awareness across industries, and a lack of targeted government guidance is creating a widening gap.

The report emphasises that drones are no longer emerging technology. Their capability, affordability, and accessibility have increased dramatically in recent years, and malicious actors are experimenting with drone-borne cyber techniques overseas.

Within the next five years, as drone and cyber capabilities continue to evolve, operators may need to reassess the likelihood and relevance of drone-enabled cyber threats.

Professor den Hartog continues, “This research highlights the need for greater education, more industry collaboration, improved knowledge sharing, and broader consideration of counter-drone capabilities across critical infrastructure sectors.

“We need to encourage operators to periodically and critically review how drones are used within their operations, assess the cybersecurity implications of increased adoption, and explore strategies to integrate drone risk into existing security and resilience programs.”

DroneShield’s engagement with ICC highlights the broader importance of research-industry collaboration in strengthening countries' sovereign capabilities. Acknowledging this, both organisations say they are exploring opportunities to continue the partnership.

Joe Peck - 22 January 2026

Cyber Security Insights for Resilient Digital Defence

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Events

ISE 2026 returns to Barcelona

Integrated Systems Europe (ISE) 2026 returns to Fira de Barcelona, Gran Via from 3–6 February, inviting attendees to ‘Push Beyond’ the boundaries of cyber security and intelligence. The organisers state that this event is "where visionaries, creators, and innovators unite to shape the future, foster collaboration, and spark new ideas."

As AV systems become more integrated within enterprise, public sector, and venue settings, they are increasingly subject to the same security risks as conventional IT infrastructures. Whether deployed in control rooms, conferencing platforms, digital signage, smart buildings, or event venues, AV solutions have become prominent targets for threats such as ransomware, data breaches, social engineering, and denial-of-service attacks.

In keeping with its theme, ISE 2026 aims to dive deeper into this defining megatrend, emphasising the importance of collaboration and innovation and preparing AV professionals to safeguard the future against emerging digital threats.

CyberSecurity Summit

On Thursday, 5 February, 09:00–12:00 in CC5.1, ISE 2026 will host the brand-new CyberSecurity Summit, a gathering for AV professionals and business leaders determined to strengthen their organisation’s defences.

Recognising cyber security as a business-critical priority, the Summit will examine its pivotal role in securing public tenders, ensuring regulatory compliance, and maintaining client trust.

Expert speakers will address urgent real-world challenges, guide delegates in pinpointing the most pressing risks, and outline practical, actionable strategies.

During the Summit, AV professionals will learn how to safeguard critical systems, navigate evolving regulations and standards such as NIS2 and ISO 27001, and transform cyber security from a vulnerability into a strategic advantage.

Attendees should leave equipped with a clear, sector-relevant roadmap to enhance their organisations' digital resilience in an increasingly connected world.

Summit Chair Pere Ferrer i Sastre, former Director General of the Catalan Police (Mossos d’Esquadra), has extensive experience in public security, digital transformation, regulatory frameworks, and critical infrastructure management. Drawing on his years in the field, he will facilitate discussions addressing emerging digital threats to the AV and systems integration sectors.

Cybersecurity megatrends

This feeds into one of ISE’s defining megatrends for 2026: cyber security. The focus is on environments where safeguarding critical infrastructure and public services against cyber threats has become paramount.

At ISE 2026, you’ll discover how the cyber security ecosystem is pushing beyond boundaries to deliver intelligent, resilient, and secure systems that are equipped to protect public sector operations and ensure ongoing wellbeing amidst evolving digital threats.

Other megatrends include: AI, robotics, smart spaces, sustainability, and tradescape.

Strategies, innovation, and collaboration at ISE Hackathon

Putting cyber security prevention into action, the ISE Hackathon brings together a dynamic community of highly skilled participants, representing top international universities.

For 48 hours, the student participants will engage in rapid networking, collaboration, brainstorming, and innovation engineering to solve a business challenge, before pitching their ideas to the judging panel.

This year, the event will once again offer three separate tracks: cyber security, sustainability, and innovation.

The Hackathon is designed to serve as a catalyst for innovation, challenging participants to address critical security challenges through collaborative problem-solving.

Connect, collaborate, and revolutionise

Sol Rashidi, Chief AI Officer for enterprises, will headline ISE on Wednesday, 4 February 2026. Her keynote, ‘The AI Reality Check: What It Takes to Scale and the Future of Leadership’, will aim to expose the realities of AI beyond the hype, offering practical frameworks and highlighting the importance of AI governance and cyber security for successful scaling.

The organisers say ISE 2026 is "more than just an exhibition; it’s a platform for networking, learning, and discovering new ways to drive value in your organisation."

With opportunities to meet leading brands, share knowledge with peers, and explore emerging trends in cyber security and AI, those running the event hope every attendee will leave better equipped for the challenges ahead.

Why attend ISE 2026?

Whether you’re focused on enhancing communication within your organisation or delivering unforgettable live experiences, ISE 2026 is the event that brings it all together.

Don’t miss your chance to be at the forefront of industry transformation. Click here to head to the website and register for free with the code ‘dcnnews’ to secure your place.

Joe Peck - 8 January 2026

Cyber Security Insights for Resilient Digital Defence

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Security Risk Management for Data Centre Infrastructure

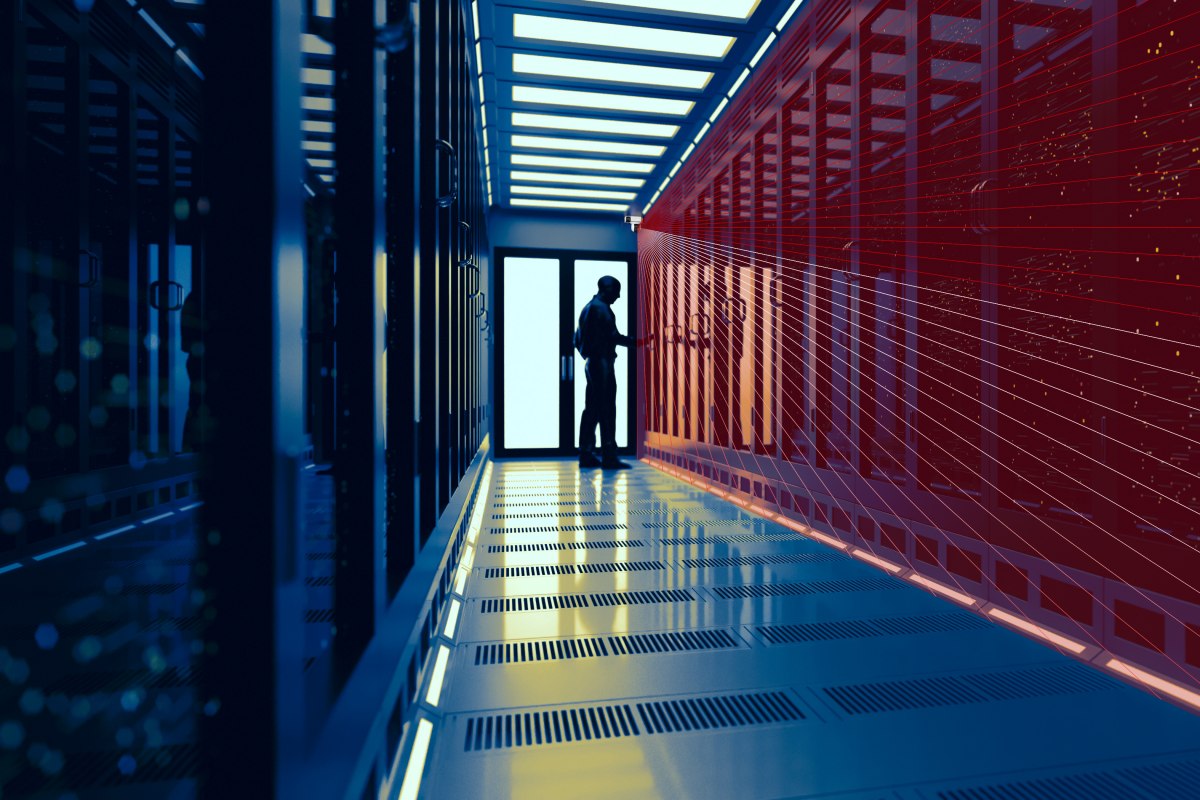

SIA launches data centre advisory board

The Security Industry Association (SIA), a trade association for global security solution providers, has launched a new Data Center Advisory Board to provide guidance on data centre security matters to its Board of Directors and to support SIA members with relevant resources.

The group will be chaired by Jim Black, Senior Director and Security Architect at Microsoft, who has been involved in the company’s cloud and data centre operations since 2011.

The establishment of the advisory board comes as global demand for data centre capacity continues to rise, driven by artificial intelligence, cloud services, and other digital technologies.

As facilities that host large volumes of sensitive information, data centres face increasing pressure to maintain robust and resilient security practices.

A focus on collaboration and guidance

According to SIA, the Data Center Advisory Board will contribute to the development of guidance and information related to security deployments, encourage collaboration between security providers and data centre security professionals, and engage with SIA’s government relations team on legislative and regulatory matters where relevant.

In his role at Microsoft, Jim is responsible for defining security technology strategy to protect assets and personnel across a global portfolio of more than 400 data centres.

He holds several professional certifications, including Certified Protection Professional and Physical Security Professional from ASIS International, as well as Certified Information Systems Security Professional from ISC².

Commenting on his appointment, he notes, “The data centre industry is experiencing unprecedented growth and heightened risks driven by emerging technologies and global operational challenges.

"I am honoured to serve as SIA’s inaugural Data Center Advisory Board Chair and look forward to working with this accomplished group of industry experts to advance and publish modern security standards that will strengthen cloud critical infrastructure protection worldwide.”

Don Erickson, CEO of SIA, says Jim’s experience makes him well suited to the role, commenting, “The Data Center Advisory Board is an important venture for SIA, and we are very pleased that it will be able to benefit from Jim’s experience and expertise in data centre security from its inception.

“Jim has for many years been an enthusiastic and generous supporter of SIA, contributing to multiple groups and projects that have advanced the industry’s professionalism and knowledge base. We are excited about what the advisory board will accomplish under his leadership.”

Joe Peck - 5 January 2026

Cyber Security Insights for Resilient Digital Defence

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Features

News in Cloud Computing & Data Storage

OpenNebula, Canonical partner on cloud security

OpenNebula Systems, a global open-source technology provider, has formed a new partnership with UK developer Canonical to offer Ubuntu Pro as a built-in, security-maintained operating system for hypervisor nodes running OpenNebula.

The collaboration is intended to streamline installation, improve long-term maintenance, and reinforce security and compliance for enterprise cloud environments.

OpenNebula is used for virtualisation, cloud deployment, and multi-cluster Kubernetes management. It integrates with a range of technology partners, including NetApp and Veeam, and is supported by relationships with NVIDIA, Dell Technologies, and Ampere.

These partnerships support its use in high-performance and AI-focused environments.

Beginning with the OpenNebula 7.0 release, Ubuntu Pro becomes an optional operating system for hypervisor nodes.

Canonical’s long-term security maintenance, rapid patch delivery, and established update process are designed to help teams manage production systems where new vulnerabilities emerge frequently.

Integrated security maintenance for hypervisor nodes

With Ubuntu Pro embedded into OpenNebula workflows, users will gain access to extended security support, expedited patching, and coordinated lifecycle updates.

The approach aims to reduce operational risk and maintain compliance across large-scale, distributed environments.

Constantino Vázquez, VP of Engineering Services at OpenNebula Systems, explains, “Our mission is to provide a truly sovereign and secure multi-tenant cloud and edge platform for enterprises and public institutions.

"Partnering with Canonical to integrate Ubuntu Pro into OpenNebula strengthens our customers’ confidence by combining open innovation with long-term stability, security, and compliance.”

Mark Lewis, VP of Application Services at Canonical, adds, “Ubuntu Pro provides the secure foundation that modern cloud and AI infrastructures demand.

"By embedding Ubuntu Pro into OpenNebula, we are providing enterprises [with] a robust and compliance-ready environment from the bare metal to the AI workload - making open source innovation ready for enterprise-grade operations.”

Joe Peck - 2 December 2025

Data Centre Infrastructure News & Trends

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Innovations in Data Center Power and Cooling Solutions

Products

Security Risk Management for Data Centre Infrastructure

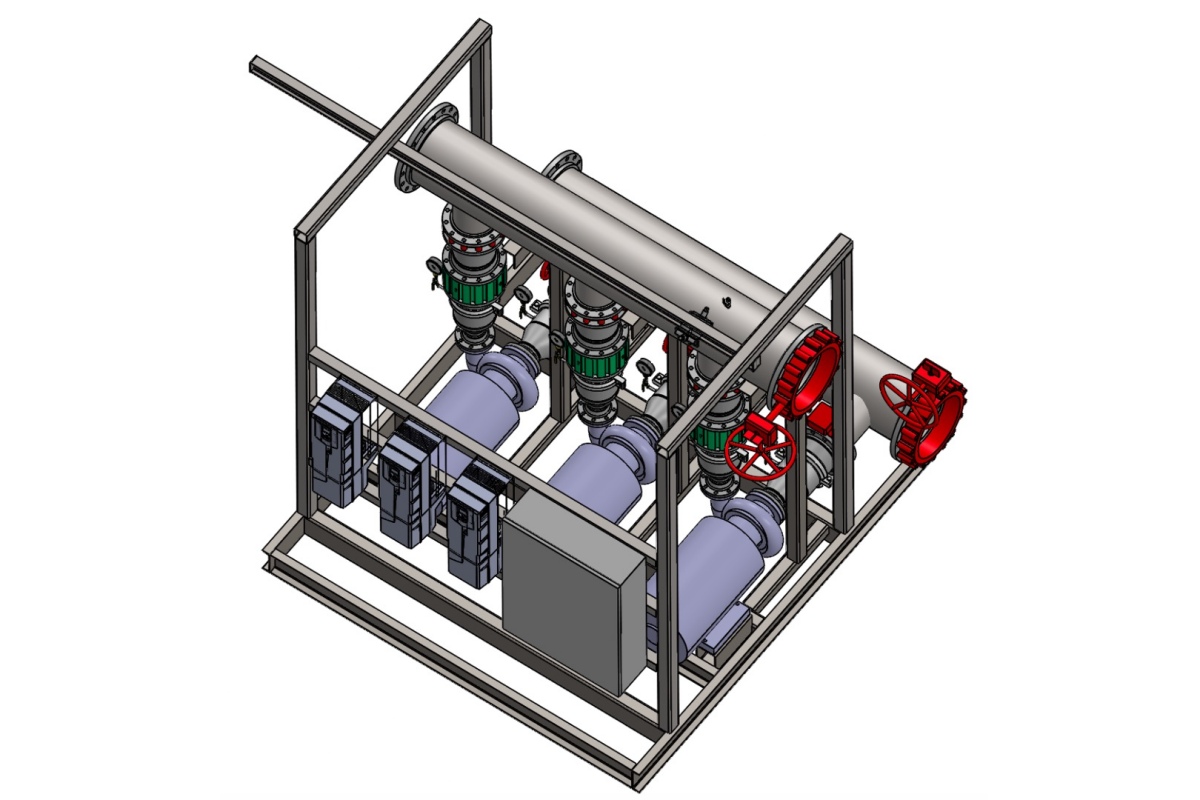

SPP Pumps brings fire and cooling experience to DCs

SPP Pumps, a manufacturer of centrifugal pumps and systems, and its subsidiary, SyncroFlo, have combined their fire protection and cooling capabilities to support the expanding data centre sector.

The companies aim to offer an integrated approach to pumping, fire suppression, and liquid cooling as operators and contractors face rising demand for large-scale, high-density facilities.

The combined portfolio draws on SPP’s nearly 150 years of engineering experience and SyncroFlo’s long history in pre-packaged pump system manufacturing.

With modern co-location and hyperscale facilities requiring hundreds of pumps on a single site, the companies state that the joint approach is intended to streamline procurement and project coordination for contractors, consultants, developers, and OEMs.

SPP’s offering spans pump equipment for liquid-cooled systems, cooling towers, chillers, CRAC and CRAH systems, water treatment, transformer cooling, heat recovery, and fire suppression. Its fire pump equipment is currently deployed across regulated markets, with SPP and SyncroFlo packages available to meet NFPA 20 requirements.

Integrated pump systems for construction efficiency

The company says its portfolio also includes pre-packaged pump systems that are modular and tailored to each project. These factory-tested units are designed to reduce installation time and simplify on-site coordination, helping to address construction schedules and cost pressures.

Tom Salmon, Group Business Development Manager for Data Centres at SPP and SyncroFlo, comments, “Both organisations have established strong credentials independently, with over 75 data centre projects delivered for the world’s largest operators.

"We’re now combining our group’s extensive fire suppression, HVAC, and cooling capabilities. By bringing together our complementary capabilities from SPP, SyncroFlo, and other companies in our group, we can now offer comprehensive solutions that cover an entire data centre's pumping requirements.”

John Santi, Vice President of Commercial Sales at SyncroFlo, adds, “Design consultants and contractors tell us lead time is critical. They cannot afford schedule delays. Our pre-packaged systems are factory-tested and ready for immediate commissioning.

"With our project delivery experience and expertise across fire suppression, cooling, and heat transfer combined under one roof, we eliminate the coordination headaches of managing multiple suppliers across different disciplines.”

Tom continues, “In many growth markets, data centres are now classified as critical national infrastructure, and rightly so. These facilities cannot afford downtime, and our experience with critical infrastructure positions us to best serve this market."

Joe Peck - 20 November 2025

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Essential Disaster Recovery News for Data Centres

Modular Data Centres in the UK: Scalable, Smart Infrastructure

Secure I.T. constructs modular DC for NHS Trust

Secure I.T. Environments (SITE), a UK design and build company for modular, containerised, and micro data centres, has today announced the handover of its latest external modular data centre project with Somerset NHS Foundation Trust.

The new 125m² data centre has now been completed and provides an energy-efficient disaster recovery facility for the Trust, ensuring it can continue to deliver resilient services across Somerset and for the 1.7 million patient contacts that take place.

A data centre for all challenges

Whilst the Trust initially considered cloud solutions for its data needs, these could meet neither the requirements of its existing clinical software nor the cost constraints in place.

In response, SITE proposed its external modular data centre, which is intended to provide a cost-effective and secure way to build new data centres or extend existing infrastructure to meet the growing demands of on-site IT needs.

SITE says its modular system can be built rapidly and that this particular project was designed, built, and live within eight months.

The company also says its modular rooms are a pre-engineered solution, offering a clean and fast construction process, making it appropriate for locations where an existing room is not available or where a new building is impractical.

The modular system reportedly offers a high level of protection, including against physical security threats, meeting the BS 476 and EN 1047 industry standards and LPS 1175 security ratings.

Design and delivery

The design was divided into three areas: the main IT racks, an electrical plant area, and a build area.

Developed with the Trust, SITE’s design incorporated 20 19-inch 48U cabinets, configured in two rows of 10 with cold aisle containment; energy-efficient UPS systems in N+1 format; and GEA Multi-DENCO Energy Efficient DX Freecool air conditioning units, also in N+1 configuration.

SITE managed the delivery of all groundworks and mechanical and electrical infrastructure.

The delivery of the new facility included a new concrete pad, drainage, power distribution, FK-5-1-12 fire suppression, VESDA detection systems, environmental monitoring, a backup generator, and a fuel tank.

Furthermore, the design and specification hardened the data centre against burglary (LPS 1175 SR2 specifications), fire, fire-fighting water, heat, humidity, gases, dust, debris, and unauthorised access.

The facility's external perimeter is protected with CCTV, prison mesh anti-climb fencing, security gates, and Armco barriers.

Chris Wellfair, Projects Director at Secure I.T. Environments, comments, “At a time when many organisations are trying to balance the needs of their IT infrastructure with challenging budgets, our modular data centres are making it easier for them to achieve their goals without compromising on performance.

"Having previously built a data centre for the Trust at another hospital location, we were pleased to work with Somerset NHS Foundation Trust to deliver this new data centre to meet their specific requirements.”

Adam Morgan, Deputy Chief Technology Officer at Somerset NHS Foundation Trust, adds, “Secure I.T. have delivered a significant upgrade to the Trust’s data centre infrastructure.

"We were very specific about the design brief and requirements, and it has been a positive project delivering this facility with Secure I.T. Environments.

"The Trust now has additional capacity for growth for years to come, which will bring benefits to clinical care by enabling resilient delivery of clinical systems across the county of Somerset.”

Joe Peck - 18 November 2025

Data Centre Infrastructure News & Trends

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Enclosures, Cabinets & Racks for Data Centre Efficiency

Products

Security Risk Management for Data Centre Infrastructure

R&M introduces radio-based access control for racks

R&M, a Swiss developer and provider of infrastructure for data and communications networks, is introducing radio-based access control for data centres. The core product is an electromechanical door handle for the racks of the BladeShelter and Freenet families from R&M.

Technicians can only open the door handles with authorised transponder cards, while administrators can control them remotely via encrypted radio connections and data networks.

R&M says it is thus integrating high-security digital protection into its "holistic infrastructure solutions" for data centres.

Package details

One installation comprises up to 1,200 door handles for server and network racks, as well as radio and control modules for computer rooms.

The door handles do not require any wiring in the racks. Their electronics are powered by batteries with enough capacity for three years of operation or 30,000 locking cycles.
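To put the two battery figures in context, the short calculation below works out how many locking cycles per day would exhaust the 30,000-cycle rating in exactly three years. It assumes, purely for illustration, that the two figures act as independent limits, that one door opening equals one locking cycle, and that a year has 365 days; R&M does not state how the ratings interact, and the function names are hypothetical.

```python
RATED_CYCLES = 30_000   # from the article
RATED_YEARS = 3         # from the article
DAYS_PER_YEAR = 365     # simplifying assumption


def break_even_cycles_per_day(cycles=RATED_CYCLES, years=RATED_YEARS):
    """Daily usage at which both ratings would be reached at the same time."""
    return cycles / (years * DAYS_PER_YEAR)


def estimated_battery_life_years(openings_per_day):
    """Estimated life, assuming whichever limit is reached first applies."""
    if openings_per_day <= 0:
        return float(RATED_YEARS)
    cycle_limited_years = RATED_CYCLES / (openings_per_day * DAYS_PER_YEAR)
    return min(float(RATED_YEARS), cycle_limited_years)


print(round(break_even_cycles_per_day(), 1))          # about 27.4 cycles per day
print(round(estimated_battery_life_years(50), 2))     # cycle-limited case
print(estimated_battery_life_years(10))               # time-limited case
```

Under these assumptions, a rack opened fewer than roughly 27 times a day would reach the three-year mark before the cycle limit.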

The personalised transponder cards communicate with the door handles via RFID antennas.

In addition, there is software to manage users, access rights, the transponder cards, and racks. The software creates logs, visualises alarm states, and supports other functions. It can be operated remotely and integrated into higher-level systems such as data centre infrastructure management (DCIM).

The new offering is the result of a collaboration with German manufacturer EMKA and is based on that company's intelligent locking system, 'Agent E'.

The R&M offering aims to integrate complementary systems from selected manufacturers into infrastructure for data centres.

In Europe, R&M notes it is already working with several independent partner companies that pursue comparable medium-sized business models and sustainability goals.

Joe Peck - 18 November 2025

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Products

Security Risk Management for Data Centre Infrastructure

OPTEX introduces indoor LiDAR sensor for DC security

OPTEX, a manufacturer of intrusion detection sensors and security systems, has launched the REDSCAN Lite, a short-range indoor LiDAR sensor designed to provide precise and rapid detection for protecting critical infrastructure within data centres.

Founded in Japan in 1979, OPTEX has developed a series of sensor innovations, including the use of LiDAR technology for security detection.

The company says its REDSCAN range is recognised for improving the accuracy and reliability of intrusion detection in sensitive environments.

Addressing physical security risks in critical environments

As data centres across the UK and EU are now classified as critical infrastructure, operators face increasing pressure to meet strict security standards and mitigate both internal and external risks.

Industry data indicates that nearly two thirds of data centres experienced a physical security breach in the past year.

The REDSCAN Lite uses 2D LiDAR technology to detect intrusions within a 10 m x 10 m range, reportedly responding in as little as 100 milliseconds.

The sensor can be positioned vertically to create invisible ‘laser walls’ around assets such as server racks, ventilation conduits, and access points, or horizontally to protect ceilings, skylights, and raised floors.
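The idea of a rectangular detection area can be sketched in a few lines. A 2D LiDAR typically reports each return as an angle and a distance; converting that to Cartesian coordinates makes it straightforward to test whether an object lies inside a defined zone. The sketch below is an assumption-laden illustration, not OPTEX's firmware or API: it places the sensor at one corner of a 10 m x 10 m zone, and the function names are hypothetical.

```python
import math

ZONE_WIDTH_M = 10.0    # detection range stated in the article
ZONE_HEIGHT_M = 10.0   # detection range stated in the article


def to_cartesian(angle_deg, distance_m):
    """Convert a polar LiDAR return to (x, y) in metres, sensor at the origin."""
    angle_rad = math.radians(angle_deg)
    return distance_m * math.cos(angle_rad), distance_m * math.sin(angle_rad)


def in_zone(angle_deg, distance_m):
    """True if the return falls inside the rectangular zone.

    Assumes the sensor sits at one corner of the zone, scanning the
    quadrant between 0 and 90 degrees.
    """
    x, y = to_cartesian(angle_deg, distance_m)
    return 0.0 <= x <= ZONE_WIDTH_M and 0.0 <= y <= ZONE_HEIGHT_M


print(in_zone(45, 5))    # well inside the zone
print(in_zone(45, 15))   # beyond the far corner
```

Mounted vertically, the same check describes a ‘laser wall’; mounted horizontally, it describes a plane across a ceiling or raised floor.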

Engineered for high-density environments, the REDSCAN Lite is capable of detecting small-scale activities such as the insertion of USB drives or LAN cables through server racks.

It is designed to operate effectively despite temperature fluctuations, low light, or complete darkness, helping reduce false alarms common in traditional systems.

Purpose-built for confined data centre spaces

Mac Kokobo, Head of Global Security Business at OPTEX, says the product was developed in response to growing demand from data centre operators, noting, “In today’s modern environments, such as data centres, spaces are becoming tighter and tighter filling with racks and processors.

"This latest REDSCAN Lite has been developed to meet the specific need for rapid detection in tight indoor spaces where high security is crucial.

“Feedback from customers highlighted a clear need for enhanced protection in small, narrow areas and spaces, so the REDSCAN Lite sensor has been designed to fit into the narrow gaps and is engineered to provide highly accurate and fast detection in indoor spaces that other technologies simply cannot reach.”

The REDSCAN Lite RS-1010L is now available for deployment.

Joe Peck - 12 November 2025

Head office & Accounts:

Suite 14, 6-8 Revenge Road, Lordswood

Kent ME5 8UD

T: +44 (0)1634 673163

F: +44 (0)1634 673173