Features

Artificial Intelligence in Data Centre Operations

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

News in Cloud Computing & Data Storage

Europe races to build its own AI backbone

Recent outages across global cloud infrastructure have once again shown how deeply Europe depends on foreign hyperscalers. When AWS-hosted platforms or Cloudflare-protected services fail, European factories, logistics hubs, retailers, and public services can stall instantly.

US-based cloud providers currently dominate Europe’s infrastructure landscape. According to market data, Amazon, Microsoft, and Google together control roughly 70% of Europe’s public cloud market. In contrast, all European providers combined account for only about 15%. This share has declined sharply over the past decade. For European enterprises, this means limited leverage over resilience, performance, data governance, and long-term sovereignty.

This same structural dependency is now extending from cloud infrastructure directly into artificial intelligence and its underlying investments. Between 2018 and 2023, US companies attracted more than €120 billion (£104 billion) in private AI investment, while the European Union drew about €32.5 billion (£28 billion) over the same period.

In 2024 alone, US-based AI firms raised roughly $109 billion (£81 billion), more than six times the total private AI investment in Europe that year. Europe is therefore trying to close the innovation gap while simultaneously tightening regulation, creating a paradox in which calls for digital sovereignty grow louder even as reliance on non-European infrastructure deepens.

The European Union’s Apply AI Strategy is designed to move AI out of research environments and into real industrial use, backed by more than €1 billion in funding. However, most of the computing power, cloud platforms, and model infrastructure required to deploy these systems at scale still comes from outside Europe. This creates a structural risk: even as AI adoption accelerates inside European industry, much of the strategic control over its operation may remain in foreign hands.

Why industrial AI is Europe’s real testing ground

For any large-scale technology strategy to succeed, it must be tested and refined through real-world deployment, not only shaped at the policy level. The effectiveness of Europe’s AI push will ultimately depend on how quickly new rules, funding mechanisms, and technical standards translate into working systems, and how fast feedback from practice can inform the next iteration.

This is where industrial environments become especially important. They produce large amounts of real-time data, and the results of AI use are quickly visible in productivity and cost. As a result, industrial AI is becoming one of the main testing grounds for Europe’s AI ambitions. The companies applying AI in practice will be the first to see what works, what does not, and what needs to be adjusted.

According to Giedrė Rajuncė, CEO and co-founder of GREÏ, an AI-powered operational intelligence platform for industrial sites, this shift is already visible on the factory floor, where AI is changing how operations are monitored and optimised in real time.

She notes, “AI can now monitor operations in real time, giving companies a new level of visibility into how their processes actually function. I call it a real-time revolution, and it is available at a cost no other technology can match. Instead of relying on expensive automation as the only path to higher effectiveness, companies can now plug AI-based software into existing cameras and instantly unlock 10–30% efficiency gains.”

She adds that Apply AI reshapes competition beyond technology alone, stating, “Apply AI is reshaping competition for both talent and capital. European startups are now competing directly with US giants for engineers, researchers, and investors who are increasingly focused on industrial AI. From our experience, progress rarely starts with a sweeping transformation. It starts with solving one clear operational problem where real-time detection delivers visible impact, builds confidence, and proves return on investment.”

The data confirms both movement and caution. According to Eurostat, 41% of large EU enterprises had adopted at least one AI-based technology in 2024. At the same time, a global survey by McKinsey & Company shows that 88% of organisations worldwide are already using AI in at least one business function.

“Yes, the numbers show that Europe is still moving more slowly,” Rajuncė concludes. “But they also show something even more important. The global market will leave us no choice but to accelerate. That means using the opportunities created by the EU’s push for AI adoption before the gap becomes structural.”

Joe Peck - 8 December 2025

OpenNebula, Canonical partner on cloud security

OpenNebula Systems, a global open-source technology provider, has formed a new partnership with UK developer Canonical to offer Ubuntu Pro as a built-in, security-maintained operating system for hypervisor nodes running OpenNebula.

The collaboration is intended to streamline installation, improve long-term maintenance, and reinforce security and compliance for enterprise cloud environments.

OpenNebula is used for virtualisation, cloud deployment, and multi-cluster Kubernetes management. It integrates with a range of technology partners, including NetApp and Veeam, and is supported by relationships with NVIDIA, Dell Technologies, and Ampere.

These partnerships support its use in high-performance and AI-focused environments.

Beginning with the OpenNebula 7.0 release, Ubuntu Pro becomes an optional operating system for hypervisor nodes.

Canonical’s long-term security maintenance, rapid patch delivery, and established update process are designed to help teams manage production systems where new vulnerabilities emerge frequently.

Integrated security maintenance for hypervisor nodes

With Ubuntu Pro embedded into OpenNebula workflows, users will gain access to extended security support, expedited patching, and coordinated lifecycle updates.

The approach aims to reduce operational risk and maintain compliance across large-scale, distributed environments.

Constantino Vázquez, VP of Engineering Services at OpenNebula Systems, explains, “Our mission is to provide a truly sovereign and secure multi-tenant cloud and edge platform for enterprises and public institutions.

“Partnering with Canonical to integrate Ubuntu Pro into OpenNebula strengthens our customers’ confidence by combining open innovation with long-term stability, security, and compliance.”

Mark Lewis, VP of Application Services at Canonical, adds, “Ubuntu Pro provides the secure foundation that modern cloud and AI infrastructures demand.

“By embedding Ubuntu Pro into OpenNebula, we are providing enterprises [with] a robust and compliance-ready environment from the bare metal to the AI workload - making open source innovation ready for enterprise-grade operations.”

Joe Peck - 2 December 2025

365, Robot Network unveil AI-enabled private cloud platform

365 Data Centers (365), a provider of network-centric colocation, network, cloud, and other managed services, has announced a partnership with Robot Network, a US provider of edge AI platforms, to deliver a new AI-enabled private cloud platform for enterprise customers.

Hosted within 365’s cloud infrastructure, the platform supports small language models, analytics, business intelligence, and cost optimisation, marking a shift in how colocation facilities can function as active layers in AI optimisation.

Integrating AI capabilities into colocation environments

Building on 365’s experience in colocation, network, and cloud services, the collaboration seeks to enable data processing and intelligent operations closer to the edge.

The model allows more than 90% of workloads to be handled within the data centre, using high-density AI only where necessary.

This creates a hybrid AI architecture that turns colocation from passive hosting into an active optimisation environment, lowering operational costs while allowing AI to run securely within compact, high-density footprints.

Derek Gillespie, CEO of 365 Data Centers, says, “Our objective is to meet AI where colocation, connectivity, and cloud converge.

“This platform will provide seamless integration and economies of scale for our customers and partners, giving them access to AI that is purpose-built for their business initiatives.”

Initial enterprise use cases will be supported by a proprietary AI platform that integrates both small and large language models.

Supporting AI adoption across enterprise operations

Jacob Guedalia, CEO of Robot Network, comments, “We’re pleased to partner with 365 Data Centers to bring this unique offering to market. 365 is a forward-thinking partner with strong colocation capabilities and operational experience.

“By combining our proprietary stack - optimised for AMD EPYC processors and NVIDIA GPUs - with their infrastructure, we’re providing a trusted platform that makes advanced AI accessible and affordable for enterprises.

“Our system leverages small AI models from organisations such as Meta, OpenAI, and Grok to extend AI capabilities to a broader business audience.”

365 says the new platform underlines its strategy to evolve as an infrastructure-as-a-service provider, helping enterprises adopt AI-driven tools and improve efficiency through secure, flexible, and data-informed operations.

The company notes it continues to focus on enabling digital transformation across colocation and cloud environments while maintaining reliability and scalability.

Joe Peck - 13 November 2025

ZainTECH launches Microsoft Azure ExpressRoute in Kuwait

ZainTECH, the digital solutions and cloud services arm of Kuwait-based Zain Group, in collaboration with Zain Kuwait and Zain Omantel International (ZOI), has announced the availability of Microsoft Azure ExpressRoute on the Azure Marketplace.

The move enables government and enterprise customers in Kuwait to access secure and private connectivity to Microsoft Azure directly through the Azure Marketplace.

The listing allows organisations with existing Azure agreements to purchase ExpressRoute using their Microsoft Azure consumption credits, an arrangement intended to simplify procurement and integration with cloud infrastructure.

Dedicated, compliant cloud connectivity

ExpressRoute provides a dedicated, low-latency connection to Microsoft data centres in the UAE and Europe.

According to ZainTECH, the service is aimed at supporting mission-critical workloads that require high performance, enhanced security, and compliance with Kuwait’s national data residency and regulatory standards.

Andrew Hanna, CEO of ZainTECH, comments, “Helping governments and enterprises become more digital, intelligent, and resilient is what all Zain entities aim for.

“The availability of ExpressRoute on the Azure Marketplace is a game-changer for customers in Kuwait. It removes friction from the procurement process and makes it easier than ever for entities to leverage Microsoft’s cloud, using their existing agreements and credits.

“This is how we accelerate real transformation: by combining secure, high-speed connectivity with operational simplicity.”

ZainTECH says that its collaboration with Zain Kuwait, ZOI, and Microsoft establishes a new framework for compliant and resilient cloud connectivity in Kuwait.

The initiative also aligns with Kuwait’s Vision 2035 strategy, supporting national digital transformation efforts and enabling government and enterprise modernisation.

Joe Peck - 13 October 2025

Object First launches 'ransomware-proof' storage

Object First, a US provider of storage appliances, has introduced Ootbi Mini, a compact immutable storage appliance designed for remote and branch offices, edge environments, and small businesses using Veeam for backup.

The device is intended to protect local backup data against ransomware attacks without requiring a traditional data centre setup.

Available in 8, 16, and 24 terabyte capacities, Ootbi Mini brings the company’s existing enterprise-level security to a smaller form factor.

Built on 'zero trust' principles, it provides what the company describes as “absolute immutability”, meaning that no one - including administrators - can alter its firmware, operating system, storage layer, or backup data.

The device offers the same data protection, user interface, and Veeam integration as Object First’s existing Ootbi systems, with minimal setup required.

Ootbi Mini will be available for purchase or through a subscription model, with deliveries due to begin in January 2026.

Additional features

Alongside Ootbi Mini, Object First has announced several additional updates:

The first, Ootbi Honeypot, introduces early warning protection against cyberthreats targeting Veeam Backup and Replication (VBR). The feature, available in version 1.7 at no additional cost to current customers, deploys a decoy VBR environment to detect suspicious activity.

If a threat is detected, Honeypot immediately sends an alert via the customer’s chosen communication channel.

The company has also launched a beta programme for Ootbi Fleet Manager, a cloud-based platform that allows customers to monitor and manage their entire Ootbi fleet from a single dashboard.

The system provides granular monitoring, reporting, and hardware health insights, enabling customers to maintain visibility across distributed environments.

Phil Goodwin, Research Vice President at IDC, comments, “Organisations running Veeam can benefit from storage technologies that combine immutability, simplicity, and resilience.

“With Ootbi Mini, Honeypot, and Fleet Manager, Object First is expanding its ransomware-proof portfolio to meet the needs of businesses of all sizes.”

David Bennett, CEO at Object First, adds, “Our mission is to make ransomware-proof backups simple, powerful, and accessible for every Veeam user. With Ootbi Mini, any organisation can achieve enterprise-grade immutability within a small footprint.

“Honeypot strengthens cyber resilience by providing early threat detection, while Fleet Manager simplifies how customers monitor their deployments. Together, these innovations reinforce our commitment to secure, straightforward, and reliable backup for Veeam users.”

Joe Peck - 10 October 2025

Crusoe expands partnership with atNorth at Iceland DC

Crusoe, a developer of AI cloud infrastructure, and Nordic data centre operator atNorth have announced a 24MW expansion of Crusoe Cloud capacity at atNorth’s ICE02 facility in Iceland.

The development builds on the two companies’ original agreement from December 2023, which included 33MW of capacity, and forms part of Crusoe’s wider European growth strategy.

The ICE02 site, located near Reykjavík, benefits from low-latency connectivity supported by multiple undersea fibre-optic cables linking international markets. The latest phase of development includes the deployment of NVIDIA GB200 NVL72 infrastructure, as well as NVIDIA Blackwell and Hopper GPUs.

Renewable-powered expansion

The data centre is powered by geothermal and hydroelectric energy and has been fitted with Direct Liquid to Chip (DLC) cooling systems. Both companies say this reflects their commitment to supporting high-performance computing while limiting carbon impact.

Chase Lochmiller, co-founder and CEO of Crusoe, says, “Our partnership with atNorth allows us to leverage the abundant geothermal and hydroelectric power in Iceland to build energy-first AI infrastructure so that our customers can run their most demanding AI workloads on Crusoe Cloud.”

Eyjólfur Magnús Kristinsson, CEO of atNorth, adds, “The expansion of our ICE02 site features cutting-edge infrastructure and highly energy-efficient Direct Liquid to Chip cooling technology. This aligns with both companies’ commitment to sustainability, and we are proud to support Crusoe on their path to decarbonise workloads while delivering AI-ready solutions in an environmentally responsible way.”

Growing AI data centre demand

atNorth says demand for AI-ready infrastructure continues to increase, with its modular campus design enabling flexible scaling. Recent partnerships, including projects with Nokia and 6G AI Sweden AB, reflect the company’s focus on balancing advanced computing with environmental responsibility.

Joe Peck - 29 August 2025

365 Data Centers collaborates with Liberty Center One

365 Data Centers (365), a provider of network-centric colocation, network, cloud, and other managed services, has announced a collaboration with Liberty Center One, an IT delivery solutions company focused on cloud services, data protection, and high-availability environments.

The collaboration aims to expand the companies' combined cloud capabilities.

Liberty Center One provides open-source-based public and private cloud services, disaster recovery resources, and colocation at the two data centres it operates.

365 currently operates 20 colocation data centres, and this relationship is set to enhance the company’s colocation, public cloud, multi-tenant, private cloud, and hybrid cloud offerings for enterprise clients, as well as its managed and dedicated services.

“This collaboration will have big implications for 365 as we continue to expand our offerings to the market,” believes Derek Gillespie, CRO of 365 Data Centers.

“When it comes to solutions for enterprise, working with Liberty Center One will enable us to enhance our current suite of cloud capabilities and hosted services to give our customers what they need today to meet the demands of their business.”

Tim Mullahy, Managing Director of Liberty Center One, adds, “We’re looking forward to working with 365 Data Centers to be able to truly bring the best out of one another’s services through this agreement.

“Customer service has always been our number one priority, and this association will be instrumental in helping 365 reach its business goals.”

Joe Peck - 13 August 2025

Data

Features

News

News in Cloud Computing & Data Storage

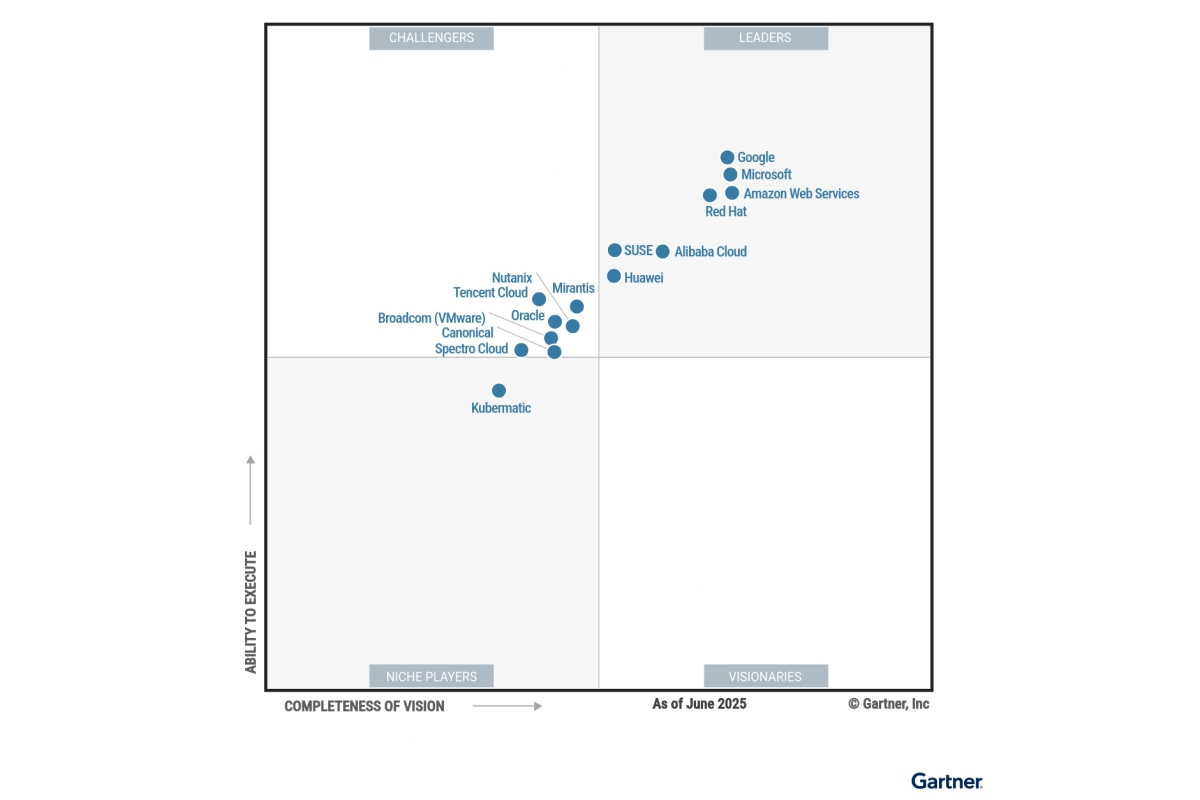

Huawei named a leader for container management

Huawei, the Chinese multinational technology company, has been positioned in the 'Leaders' quadrant of Gartner's Magic Quadrant for Container Management 2025. The placement by the US research and advisory firm recognises Huawei's capabilities in cloud-native infrastructure and container management.

The company’s Huawei Cloud portfolio includes products such as CCE Turbo, CCE Autopilot, Cloud Container Instance (CCI), and the distributed cloud-native service UCS. These are designed to support large-scale containerised workloads across public, distributed, hybrid, and edge cloud environments.

Huawei Cloud’s offerings cover a range of use cases, including new cloud-native applications, containerisation of existing applications, AI container deployments, edge computing, and hybrid cloud scenarios. Gartner’s assessment also highlighted Huawei Cloud’s position in the AI container domain.

Huawei is an active contributor to the Cloud Native Computing Foundation (CNCF), having participated in 82 CNCF projects and holding more than 20 maintainer roles. It is currently the only Chinese cloud provider with a vice-chair position on the CNCF Technical Oversight Committee.

The company says it has donated multiple projects to the CNCF, including KubeEdge, Karmada, Volcano, and Kuasar, and contributed other projects such as Kmesh, openGemini, and Sermant in 2024.

Use cases and deployments

Huawei Cloud container services are deployed globally in sectors such as finance, manufacturing, energy, transport, and e-commerce. Examples include:

• Starzplay, an OTT platform in the Middle East and Central Asia, used Huawei Cloud CCI to transition to a serverless architecture, handling millions of access requests during the 2024 Cricket World Cup whilst reducing resource costs by 20%.

• Ninja Van, a Singapore-based logistics provider, containerised its services using Huawei Cloud CCE, enabling uninterrupted operations during peak periods and improving order processing efficiency by 40%.

• Chilquinta Energía, a Chilean energy provider, migrated its big data platform to Huawei Cloud CCE Turbo, achieving a 90% performance improvement.

• Konga, a Nigerian e-commerce platform, adopted CCE Turbo to support millions of monthly active users.

• Meitu, a Chinese visual creation platform, uses CCE and Ascend cloud services to manage AI computing resources for model training and deployment.

Cloud Native 2.0 and AI integration

Huawei Cloud has incorporated AI into its cloud-native strategy through three main areas:

1. Cloud for AI – CCE AI clusters form the infrastructure for CloudMatrix384 supernodes, offering topology-aware scheduling, workload-aware scaling, and faster container startup for AI workloads.

2. AI for Cloud – The CCE Doer feature integrates AI into container lifecycle management, offering diagnostics, recommendations, and Q&A capabilities. Huawei reports over 200 diagnosable exception scenarios with a root cause accuracy rate above 80%.

3. Serverless containers – Products include CCE Autopilot and CCI, designed to reduce operational overhead and improve scalability. New serverless container options aim to improve computing cost-effectiveness by up to 40%.

Huawei Cloud states it will continue working with global operators to develop cloud-native technologies and broaden adoption across industries.

Joe Peck - 12 August 2025

Data Centres

News

News in Cloud Computing & Data Storage

Products

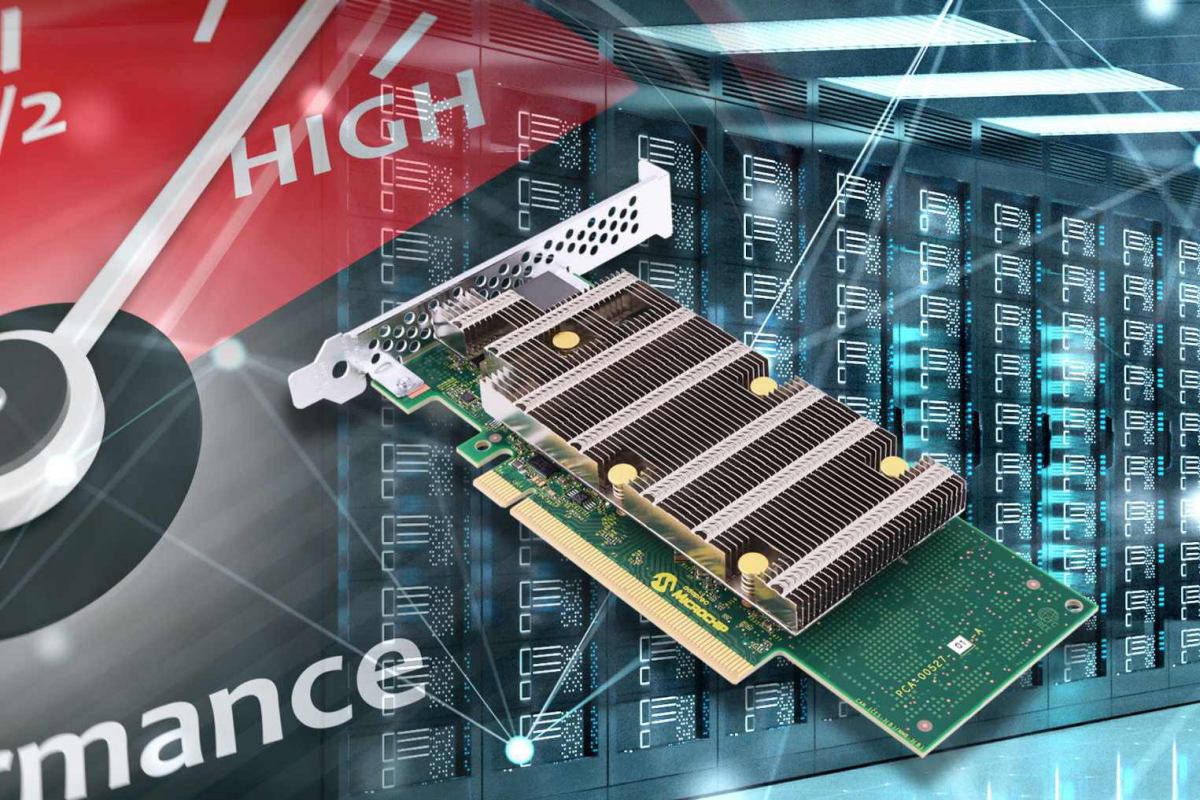

Microchip launches Adaptec SmartRAID 4300 accelerators

Semiconductor manufacturer Microchip Technology has introduced the Adaptec SmartRAID 4300 series, a new family of NVMe RAID storage accelerators designed for use in server OEM platforms, storage systems, data centres, and enterprise environments.

The series aims to support scalable, software-defined storage (SDS) solutions, particularly for high-performance workloads in AI-focused data centres.

The SmartRAID 4300 series uses a disaggregated architecture, separating software and hardware elements to improve efficiency.

The accelerators integrate with Microchip’s PCIe-based storage controllers to offload key RAID processes from the host CPU, while the main storage software stack runs directly on the host system.

This approach allows data to flow at native PCIe speeds, while offloading parity-based functions such as XOR to dedicated accelerator hardware.
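For readers unfamiliar with parity-based RAID, the XOR function mentioned above can be illustrated with a short Python sketch. It does not represent Microchip's implementation, which runs in dedicated hardware; the function names and the three-block stripe are assumptions chosen purely for illustration. The sketch computes a parity block as the byte-wise XOR of the data blocks in a stripe and then rebuilds one lost block from the survivors.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Return the byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    size = len(blocks[0])
    if any(len(block) != size for block in blocks):
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) walks the blocks column by column, one byte position at a time.
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single missing data block from the survivors and the parity."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    # Hypothetical stripe of three data blocks (illustrative values only).
    stripe = [b"AAAA", b"BBBB", b"CCCC"]
    parity = xor_blocks(stripe)

    # Simulate losing the second block and rebuilding it.
    recovered = rebuild_missing([stripe[0], stripe[2]], parity)
    print(recovered == stripe[1])  # prints True
```

Because every write to a parity-protected stripe requires this calculation, it is a natural candidate for offloading from the host CPU.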

According to internal testing by Microchip, the new architecture has delivered input/output (I/O) performance gains of up to seven times compared with the company’s previous generation products.

Architecture and capabilities

The SmartRAID 4300 accelerators are designed to work with Gen 4 and Gen 5 PCIe host CPUs and can support up to 32 CPU-attached x4 NVMe devices and 64 logical drives or RAID arrays.

This is intended to help address data bottlenecks common in conventional in-line storage solutions by taking advantage of expanded host PCIe infrastructure.

By removing the reliance on a single PCIe slot for all data traffic, Microchip aims to deliver greater performance and system scalability. Storage operations such as writes now occur directly between the host CPU and the NVMe endpoints, while the accelerator handles redundancy tasks.
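A rough calculation shows why a single slot can become the limiting factor. The Python sketch below compares the theoretical one-direction bandwidth of one PCIe slot with the aggregate bandwidth of 32 CPU-attached x4 NVMe devices. The x16 slot width is an assumption (the announcement does not state it), the per-lane rates are the nominal PCIe Gen 4 and Gen 5 signalling rates with 128b/130b encoding, and protocol overhead is ignored, so the figures are upper bounds rather than measured results.

```python
# Nominal per-lane signalling rates in GT/s for each PCIe generation.
GT_PER_S = {4: 16.0, 5: 32.0}

# PCIe Gen 3 to Gen 5 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def lane_gb_per_s(gen: int) -> float:
    """Approximate one-direction data rate of a single lane in GB/s."""
    return GT_PER_S[gen] * ENCODING_EFFICIENCY / 8


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction data rate of a link with the given lane count."""
    return lane_gb_per_s(gen) * lanes


if __name__ == "__main__":
    for gen in (4, 5):
        slot = link_gb_per_s(gen, 16)        # assumed x16 slot for an in-line controller
        drives = link_gb_per_s(gen, 4) * 32  # 32 CPU-attached x4 NVMe devices
        print(f"Gen {gen}: x16 slot {slot:.1f} GB/s, 32 x4 devices {drives:.1f} GB/s")
```

Under these assumptions the drives together expose eight times as many lanes as the slot (128 versus 16), which is the gap the disaggregated design is intended to exploit. In practice, the number of lanes a host CPU can dedicate to storage will cap the achievable figure.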

Brian McCarson, Corporate Vice President of Microchip’s Data Centre Solutions Business Unit, says, “Our innovative solution with separate software and hardware addresses the limitations of traditional architectures that rely on a PCIe host interface slot for all data flows.

“The SmartRAID 4300 series allows us to enhance performance, efficiency, and adaptability to better support modern enterprise infrastructure systems.”

Power efficiency and security

Power optimisation features include automatic idling of processor cores and autonomous power reduction mechanisms.

To help maintain data integrity and system security, the SmartRAID 4300 series incorporates features such as secure boot and update, hardware root of trust, attestation, and Self-Encrypting Drive (SED) support.

Management tools and compatibility

The series is supported by Microchip’s Adaptec maxView management software, which includes an HTML5-based web interface, the ARCCONF command line tool, and plug-ins for both local and remote management.

The tools are accessible through standard desktop and mobile browsers and are designed to remain compatible with existing Adaptec SmartRAID utilities.

For out-of-band management via Baseboard Management Controllers (BMCs), the series supports Distributed Management Task Force (DMTF) standards, including Platform-Level Data Model (PLDM) and Redfish Device Enablement (RDE), carried over the Management Component Transport Protocol (MCTP).

Joe Peck - 7 August 2025

Kioxia showcases flash storage at FMS 2025

Memory manufacturer Kioxia is showcasing its latest flash storage technologies at this year’s Flash Memory Summit (FMS 2025), highlighting how its memory and SSD developments are supporting the infrastructure demands of artificial intelligence (AI) applications in enterprise and data centre settings.

Among the products on display is the Kioxia LC9 Series, introduced as the industry’s first 245.76 terabyte (TB) NVMe SSD.

Other featured releases include the CM9 and CD9P Series SSDs, built using Kioxia’s eighth-generation BiCS FLASH 3D flash memory. These devices aim to deliver a balance of performance, power efficiency, and versatility.

The company is also presenting its ninth-generation BiCS FLASH memory, which is based on 1 terabit (Tb) 3bit/cell technology. It uses the CBA (CMOS directly Bonded to Array) architecture initially developed for the previous generation and offers gains in data read speed and energy consumption. Additional benefits include improvements in PI-LLT and SCA characteristics.

“Artificial intelligence is reforming data infrastructure, and Kioxia is advancing storage technology alongside it,” says Axel Störmann, Vice President and Chief Technology Officer for Memory and SSD products at Kioxia Europe.

“Our BiCS FLASH technology features a 32-die stack QLC architecture and innovative CBA technology. Delivering an industry-first 8TB per chip package, this breakthrough redefines the performance, scalability, and efficiency needed to power next-generation AI workloads.”

Conference participation

Kioxia is also contributing to a range of talks and sessions throughout FMS 2025:

Keynote presentation:

“Optimise AI Infrastructure Investments with Flash Memory Technology and Storage Solutions”

Tuesday, 5 August at 11am PDT

Presented by Katsuki Matsudera, General Manager, Memory Technical Marketing Department, Kioxia Corporation; and Neville Ichhaporia, Senior Vice President and General Manager, SSD Business Unit, Kioxia America.

Executive AI panel discussion:

“Memory and Storage Scaling for AI Inferencing”

Thursday, 7 August at 11am PDT

Rory Bolt, Senior Fellow and Principal Architect, SSD Business Unit, Kioxia America, will join a panel featuring experts from NVIDIA and other companies in the memory and storage sector. The discussion will explore how to avoid configuration challenges and optimise infrastructure for AI workloads.

Kioxia will also participate in additional panel discussions and technical sessions during the event.

Joe Peck - 5 August 2025
