Data Centre Infrastructure News & Trends

Socomec launches energy audit initiative for UKI data centres

Socomec, a manufacturer of low voltage power management systems, has launched an energy audit programme for data centres in the UK and Ireland, aimed at helping operators measure energy use and meet reporting requirements under the EU Energy Efficiency Directive (EED).

Under EU EED rules, owners and operators of facilities with a capacity above 500kW must disclose their power usage effectiveness (PUE) and other environmental performance indicators each year. The next reporting deadline is 15 May 2026.

The directive closely aligns with the UK’s Energy Savings Opportunity Scheme (ESOS) and the ISO 50001 standard, which requires organisations to monitor and report energy consumption and power utilisation accurately.

Improving PUE is also becoming an operational priority for data centres as electricity costs increase and workloads linked to artificial intelligence raise power demand.

Socomec estimates that improving PUE by 0.1 - from 1.6 to 1.5, for example - can reduce annual energy consumption by around 6–8%. For a 2MW data centre, this could equate to more than £100,000 in yearly energy savings while also extending the lifespan of existing infrastructure.
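The arithmetic behind that estimate can be sketched as follows. This is a minimal illustration rather than Socomec's own model: it assumes a constant load all year, reads the 2MW figure as total facility draw at a PUE of 1.6, and uses a hypothetical tariff of £0.10 per kWh. The function names are likewise illustrative.

```python
HOURS_PER_YEAR = 8760


def annual_energy_kwh(it_load_kw, pue):
    """Total facility energy per year for a constant IT load (PUE = total / IT)."""
    return it_load_kw * pue * HOURS_PER_YEAR


def pue_saving(it_load_kw, pue_before, pue_after, tariff_gbp_per_kwh):
    """Energy and cost saved per year when PUE improves at a fixed IT load."""
    before = annual_energy_kwh(it_load_kw, pue_before)
    after = annual_energy_kwh(it_load_kw, pue_after)
    saved_kwh = before - after
    return {
        "saved_kwh": saved_kwh,
        "saved_fraction": saved_kwh / before,
        "saved_gbp": saved_kwh * tariff_gbp_per_kwh,
    }


# Assumption: 2MW is the total facility draw at PUE 1.6, so IT load is 2000 / 1.6 kW.
# Assumption: flat tariff of 0.10 GBP per kWh (hypothetical, not from the article).
result = pue_saving(
    it_load_kw=2000 / 1.6,
    pue_before=1.6,
    pue_after=1.5,
    tariff_gbp_per_kwh=0.10,
)
print(f"Energy saved: {result['saved_kwh']:,.0f} kWh per year")
print(f"Share of total consumption: {result['saved_fraction']:.2%}")
print(f"Cost saved: GBP {result['saved_gbp']:,.0f} per year")
```

Under these assumptions the saving comes to about 6% of annual consumption and a little over £100,000 a year, in line with the figures quoted. A different tariff or load profile would shift the result.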

Energy infrastructure assessments for operators

Data centre operators in the UK and Ireland can apply for an assessment of their energy infrastructure through the programme.

Socomec’s engineers will carry out site inspections covering IT and non-IT loads, including UPS systems, server racks, cooling equipment, lighting, and switchgear. The aim is to determine PUE and identify gaps in existing metering capabilities.

Participating facilities receive a report outlining energy efficiency measures, estimated cost savings, and potential return on investment. The findings are intended to support decision-making across sustainability, finance, and engineering teams.

The audits are particularly relevant for older colocation data centres seeking to measure PUE at rack level using Measuring Instrument Directive-compliant metering. More detailed measurement can also allow operators to allocate energy costs more accurately between tenants.

Colin Dean, Managing Director of Socomec, says, “The EU EED represents a gold standard for sustainable energy management and it’s only a matter of time before other countries follow Germany’s example and start penalising non-compliance.

“In addition, there is a fear - particularly among legacy data centre operators - that a rip-and-replace approach is needed to achieve modern energy efficiency. At Socomec, our aim is to plug this gap with proactive and practical guidance, showing that metering can be retrofitted to improve efficiency without infrastructure overhaul or operational downtime.

“Our energy audit is designed to help operators of mission-critical data centres take informed action towards sustainability while maximising their investments. With clear, accurate insights into PUE, data centres can turn energy data into action, optimise operational costs, and drive long-term resilience.”

Joe Peck - 6 March 2026

Crestchic unveils 600kW liquid-cooled loadbank

Crestchic, a UK manufacturer of loadbanks and transformers for testing power systems and data centres, has launched its new 600kW Liquid Cooled Loadbank at Data Centre World London 2026, aimed at supporting commissioning in the growing liquid-cooled data centre market.

As rack power densities increase, operators are increasingly adopting liquid cooling to manage higher thermal loads. Crestchic says the new system has been designed to provide accurate thermal validation and precision electrical testing for liquid-cooled infrastructure.

The 600kW loadbank delivers up to 648kW at 415V and features stable ΔT thermal control to ±0.5°C, enabling repeatable testing during commissioning.

Temperature accuracy is maintained regardless of flow variation, while built-in protections cover flow, pressure, overload, underload, and thermal shock.
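To give a sense of scale, the sketch below estimates the line current and coolant flow implied by the 648kW rating. It is illustrative only: it assumes a balanced three-phase resistive load at unity power factor with 415V as the line-to-line voltage, water as the coolant, and a hypothetical 10K temperature rise across the unit, none of which is stated by Crestchic.

```python
import math


def three_phase_line_current_a(power_kw, line_voltage_v, power_factor=1.0):
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V * pf)."""
    return power_kw * 1000 / (math.sqrt(3) * line_voltage_v * power_factor)


def coolant_flow_l_per_min(heat_kw, delta_t_k, cp_kj_per_kg_k=4.18, density_kg_per_l=1.0):
    """Flow needed to carry away heat_kw at a given temperature rise: Q = m * cp * dT."""
    mass_flow_kg_per_s = heat_kw / (cp_kj_per_kg_k * delta_t_k)
    return mass_flow_kg_per_s / density_kg_per_l * 60


# 648kW at 415V is from the article; unity power factor is an assumption.
current_a = three_phase_line_current_a(power_kw=648, line_voltage_v=415)

# Assumption: water coolant and a 10K rise across the loadbank (hypothetical values).
flow_l_min = coolant_flow_l_per_min(heat_kw=648, delta_t_k=10)

print(f"Line current: {current_a:.0f} A per phase")
print(f"Coolant flow: {flow_l_min:.0f} L/min")
```

With these inputs the unit would draw roughly 900A per phase and need on the order of 900 litres of water per minute. A smaller temperature rise would require proportionally more flow.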

Designed for liquid-cooled data centre commissioning

The unit uses a single-vessel architecture, reducing footprint compared with multi-vessel systems at similar power levels. This compact design makes it easier to position in plant rooms and simplifies transport and handling.

The platform includes a stackable structure, flush-mounted connections, heavy-duty castors, and dual-side forklift pockets, allowing two units to be transported within a standard-height ISO shipping container.

The system integrates with Crestchic’s VCS software, providing live monitoring of supply and hydraulic data, real-time load profiling, and the ability to cluster up to 240 loadbanks for hybrid air- and liquid-cooled testing.

Paul Brickman, Commercial Director at Crestchic, says, “The move towards liquid cooling is accelerating as rack densities increase, particularly with AI and high-performance computing workloads.

“Our new 600kW Liquid Cooled Loadbank has been designed from the ground up to serve this market, giving commissioning engineers the precision, reliability, and control they need to bring critical infrastructure online with confidence.”

The 600kW Liquid Cooled Loadbank is available for sale or rental through Crestchic’s global network.

Joe Peck - 6 March 2026

'Rising power costs top data centre concern'

New research from UK colocation data centre provider Asanti shows that AI adoption, resilience pressures, and rising power costs are reshaping data centre strategies for UK organisations, with material implications for managed service providers (MSPs), cloud providers, and infrastructure partners.

In a survey of 100 senior IT decision makers, nearly half (48%) said AI adoption will have a large influence on their IT infrastructure strategy over the next three years, ahead of regulatory change and hybrid or multi-cloud capabilities. IT leaders report average rack densities of 8kW per rack today, rising to 11kW within 12 months, as AI-heavy workloads and high-density compute drive up power and cooling requirements.

Rising power costs are already the top concern regarding current data centre environments, cited by 52% of respondents, ahead of maintaining uptime (48%). Over the next three years, rising energy costs (34%) and sustainability commitments (33%) sit alongside AI, resilience, and regulatory change as core inputs to infrastructure strategy.

Stewart Laing, CEO of Asanti, notes, “AI has moved from pilot projects to production workloads, and with it comes a step-change in rack density, power demand, and cooling requirements. Organisations are realising they need the right mix of facilities, partners, and architectures to deliver compute and storage requirements without compromising on resilience, sovereignty, or cost control.”

Resilience and sovereignty drive hosting decisions

Over the next 12 months, cybersecurity and resilience are the most common focus for infrastructure investment, cited by 51% of IT leaders. In response to cyberattacks and service disruptions in 2025, organisations are strengthening security controls (60%), creating backup strategies across multiple data centre providers/locations (50%), and reviewing business continuity planning (42%). A third (33%) plan to move more workloads into on-premise or colocation environments to strengthen their IT resilience.

Location decisions are becoming more polarised, with 30% of organisations already using data centres outside the UK and a further 24% planning to do so, while 32% say they use only UK-based data centres. The research suggests a push‑pull between cost and sovereignty: high UK power costs draw some workloads overseas, but data protection obligations, regulatory exposure, and latency considerations keep others anchored in UK facilities.

Stewart continues, “For MSPs and infrastructure partners, the opportunity is to help customers design architectures that balance the needs of today, sovereignty, compliance, and resilience with AI ambition. That increasingly means hybrid strategies that combine UK-based colocation for critical workloads with selective use of overseas capacity and public cloud where it makes sense.”

Opportunity for MSPs and infrastructure partners

The study shows strong and sustained demand for external expertise. More than half of organisations (54%) already use third parties for cybersecurity services, while around a third bring in external partners for infrastructure audits (35%), disaster recovery and business continuity planning (33%), and end-to-end solution deployment (35%). Looking ahead over the next 12 months, organisations expect to increase their use of external support for public cloud repatriation (32%) and technical scoping for new projects (31%), signalling a shift towards more intentional workload placement and right‑sizing.

Stewart concludes, “As power, AI, and sovereignty concerns collide, few organisations can carry all the skills they need in‑house. MSPs, systems integrators, and specialist data centre providers have a critical role in helping enterprises architect for higher densities, navigate cross-border data complexity, and build resilient, multi‑site infrastructure that can withstand disruption.”

The full findings are published in Asanti’s whitepaper, From Misconception to Momentum: 2026 Trends for the UK’s Data Centre Sector.

Joe Peck - 3 March 2026

Sponsored

Huawei launches enhanced AI-centric network solutions

Chinese multinational technology company Huawei released a series of all-scenario U6 GHz products at MWC Barcelona 2026 to help carriers unlock the full potential of 5G-A and set the stage for a seamless transition to 6G.

The company also launched enhanced AI-centric network solutions that will help carriers prepare for the agentic era by enabling intelligent services, networks, and network elements (NEs).

In addition, Huawei is showcasing its SuperPoD cluster, created to offer "a new option for the intelligent world", for the first time outside China.

The theme of Huawei's booth for this year's conference is "Advancing All Intelligence", reflecting the company's plans to build more AI-centric networks and computing backbones that will help carriers and industry customers seize opportunities from the AI era.

U6 GHz: Unlocking 5G-A potential for a smooth transition to 6G

According to Huawei, the next five years will provide a window of opportunity to unleash the full potential of 5G-A. The company plans to work with global carriers on large-scale 5G-A deployment, use high uplink to address surging consumer and industry demand for mobile AI applications, and use the U6 GHz band to unlock the full value of spectrum and pave the way for smooth evolution to 6G.

There are already 70 million 5G-A users globally and 5G-A is increasingly being adopted by carriers at scale. In China, Huawei has helped carriers deliver contiguous 5G-A coverage across 270 cities and launch 5G-A packages that monetise experience in over 30 provinces.

The all-scenario U6 GHz products and solutions Huawei has released use innovative technologies to create a high-capacity, low-latency, optimal-experience backbone designed for mobile AI applications.

Three-layer intelligence with AI-centric network: Seizing opportunities in the agentic era

Following the trend to integrate AI directly into networks, Huawei is using AI to create AI-centric network solutions that will act as target networks for the agentic era. These solutions embed intelligence across three layers:

• At the service layer — Huawei is helping carriers build multi-agent collaboration platforms, with specialised agents for calling, experience monetisation, and home broadband. These platforms will enable AI-driven transformation of carriers' core services like voice, internet access, and home broadband.

• At the network layer — Phase one of Huawei's L4 Autonomous Driving Network (ADN L4) solution primarily focuses on single-scenario automation, helping carriers drastically improve O&M efficiency, network quality, and monetisation capabilities. By the end of 2025, the company's single-scenario ADN solutions had been commercially deployed on more than 130 telecom networks worldwide. Moving forward, Huawei will continue to help carriers reshape operations with AI, going beyond single-scenario automation to support end-to-end single-domain network autonomy.

• At the NE layer — Huawei works with carriers to accelerate innovation in areas like algorithm optimisation for RANs, intelligent and accurate service identification for WANs, and unified service intent for core networks that helps integrate B2C and B2H services. Innovations in these domains are already driving marked improvements in network energy and spectral efficiency, intelligent service awareness, and network resilience assurance.

Computing backbone with SuperPoDs and clusters: A new option for the intelligent world

In the computing space, Huawei is showcasing its computing cluster and SuperPoD products featuring new innovations in system-level architecture, including its UnifiedBus technology for SuperPoD interconnect, for the first time outside China.

Key products on display will include the Atlas 950 SuperPoD for AI computing, the TaiShan 950 SuperPoD for general-purpose computing, the Atlas 850E SuperPoD, and the TaiShan 500 and TaiShan 200 servers. These offerings are Huawei's answer to demand for stronger compute and lower latency – two elements that are especially critical as trillion-parameter AI models become more commonplace and agentic AI is introduced into core production systems.

These offerings also reflect Huawei's ongoing commitment to going fully open source and open access. The company is actively working with partners to build an open computing ecosystem and provide the world with another option for solid computing power.

In the enterprise space, Huawei's focus at MWC is on helping different industries accelerate their intelligent transformation. Together with customers, partners, and representatives from different industries, Huawei will unveil a series of innovative practices that are helping different industries go intelligent on all fronts.

The company will also share its new offerings in digital and intelligent infrastructure, and give updates on its latest efforts in partner ecosystem development.

In total, Huawei will feature 115 industrial intelligence showcases for enterprise customers in different domains, its SHAPE 2.0 Partner Framework, and 22 new industrial intelligence solutions jointly developed with partners.

In the consumer space, Huawei's theme for this year's MWC is "Now is Yours". The company is working to deliver an unparalleled intelligent experience for consumers in all scenarios and will showcase a range of new smartphones, wearables, tablets, PCs, and earphones that feature its latest breakthroughs in areas like foldable screens, health and fitness, mobile photography, productivity, and creativity.

In 2026, Huawei will keep innovating to deliver competitive products with a superior experience, giving consumers greater freedom to discover and create in their own unique way.

Huawei also announced that it had successfully surpassed the commitment it had made to help drive digital inclusion and combat the rapidly widening digital divide. By the end of 2025, Huawei had worked with customers to provide connectivity to 170 million people in remote areas across more than 80 countries, giving more people access to inclusive digital services.

MWC Barcelona 2026 is being held from 2 March to 5 March in Barcelona, Spain. During the event, Huawei is showcasing its latest products and solutions at Stand 1H50 in Fira Gran Via Hall 1.

The era of agentic networks is now approaching fast and the commercial adoption of 5G-A at scale is gaining speed. Huawei is actively working with carriers and partners around the world to unleash the full potential of 5G-A and pave the way for the evolution to 6G. It is also creating AI-centric network solutions to enable intelligent services, networks, and network elements (NEs), speeding up the large-scale deployment of level-4 autonomous networks (AN L4) and using AI to upgrade its core business. Together with other industry players, it says it will create leading value-driven networks and AI computing backbones for a fully intelligent future.

Joe Peck - 3 March 2026

Caterpillar collaboration targets low-carbon DC power

Construction equipment manufacturer Caterpillar, energy infrastructure provider OnePWR, and carbon management company Vero3 have announced a strategic collaboration to develop lower carbon power generation and carbon storage projects for mission-critical facilities, including data centres.

The parties plan to design an integrated system combining natural gas-based prime power generation, carbon capture, battery energy storage, and permanent geological sequestration of carbon dioxide.

Under the agreement, Caterpillar will provide generation equipment, including natural gas and diesel generators, gas turbines, and control systems. The company will also lead front-end engineering and design activities for the carbon capture element.

500MW prime power project planned for 2026

OnePWR will build, own, and operate the power generation assets and associated infrastructure, supplying continuous power under long-term commercial agreements.

Vero3 will develop and operate the carbon capture and permanent storage infrastructure, as well as oversee tax credit monetisation linked to sequestration projects.

The first project is expected to begin in 2026 with the development of a 500MW prime power site. The companies state that this initial deployment is intended to form the basis for wider international rollout.

The collaboration focuses on delivering dispatchable power capacity to meet growing energy demand, while incorporating carbon capture and storage to reduce overall emissions associated with on-site generation.

Joe Peck - 3 March 2026

Nokia, Telefónica to expand edge networking in Spain

Finnish telecommunications company Nokia has been selected by Telefónica, a Spanish multinational telecommunications company, to deploy networking technology across 17 new edge data centre nodes in Spain.

The rollout forms part of Telefónica’s expansion of distributed edge infrastructure, supporting AI, B2B, and telco cloud services for residential, enterprise, and public sector users.

Nokia will provide connectivity for compute and storage within each edge facility, as well as links between the sites and external networks. The infrastructure is designed to support AI training and inferencing closer to end users, alongside digital services in healthcare, education, industry, and government.

Under the multi-year agreement, Nokia has exclusive responsibility for networking across the 17 nodes. Twelve have already been deployed, including one at Telefónica’s Tecno-Alcalá site.

Multi-year agreement covers 17 edge nodes

The latest phase follows a pilot deployment of three edge data centres in 2024. Nokia now acts as sole networking technology partner for the programme, with the companies stating that a single-vendor approach is intended to simplify operations and standardise architecture.

Sergio Sánchez, CTIO at Telefónica España, comments, “This initiative fully aligns with our strategy to make edge cloud and artificial intelligence main cornerstones of Telefónica’s growth.

“Nokia has proven to be a trusted connectivity partner in this mission, and they are playing a critical role in building secure, reliable data centre networks for our ambitious edge node project.

“Through this effort, we are not only enhancing our digital infrastructure but also reinforcing Spain’s technological sovereignty and enabling a more dynamic, user-centric digital ecosystem.”

David Heard, President, Network Infrastructure at Nokia, adds, “We are proud to collaborate with Telefónica for this landmark project that supports our customer’s shift to a nationwide distributed edge architecture.

“This win underscores our long-term strategic relationship and Nokia’s leadership in building AI-ready, high-performance data centre networking solutions. Together, we’re creating the foundation for Spain’s digital future, bringing intelligence and services closer to where people and businesses need them most.”

Joe Peck - 2 March 2026

Sponsored

Schneider to demonstrate power and cooling at DCW 2026

In this article for DCNN, Matthew Baynes, Vice President, Secure Power & Data Centres, UK & Ireland at Schneider Electric, details how the company will demonstrate its integrated power, cooling, and digital capabilities at Data Centre World 2026:

Building for AI at scale – are you ready?

As the global competition for AI leadership intensifies, the UK is stepping up in its mission to become an ‘AI Maker’. As demand increases, so too does the need for the secure, scalable, and sustainable infrastructure to accommodate it. The UK ranks among the world’s top three data centre markets, and the industry sits at the core of the country’s AI ambitions, with the Government’s AI Opportunities Action Plan now designating data centres as critical national infrastructure (CNI).

Data Centre World in London is the industry’s largest gathering of professionals and end-users. During the event, as the UK’s energy technology provider, Schneider Electric will explore how we can scale AI infrastructure.

The impact of investment and AI Growth Zones

With the Government’s AI Opportunities Action Plan backed by investment from big tech, data centres are now considered critical national infrastructure. This has opened the gates for large-scale innovation, investment, and opportunities. From Stargate UK to Google’s £5 billion commitment to AI infrastructure, announcements by major global technology companies have strengthened the UK’s leadership position.

Exploring the UK’s position in the data centre market, on 4 March at 11:05am, I will discuss the importance of scaling AI responsibly in the UK, prioritising energy efficiency and innovation in data centres.

Liquid cooling: Meeting the challenge of density

As rack densities soar to support AI workloads, the challenge is no longer whether to adopt liquid cooling, but how to deploy it effectively at scale. On 4 March, 12:05–1:15pm, Andrew Whitmore, Vice President of Sales at Motivair by Schneider Electric, will chair a panel discussion on tackling liquid cooling challenges in data centres, and will unpack the innovations, risks, and realities behind the technology.

During the session, Andrew will be joined by Karl Harvard, Chief Commercial Officer at Nscale; Ian Francis, Global Design and Engineering SME at Digital Realty; and Petrina Steele, Business Development Senior Director at Equinix.

How agentic AI transforms data centre services

While AI is driving demand for data centre capacity, it is also transforming how these facilities are operated and maintained. On 5 March, 11:15–11:45am, Natasha Nelson, Chief Technology Officer at Schneider Electric’s Services business, will deliver a keynote exploring how agentic AI can transform data centre services at scale.

During the session, Natasha will explore the transformative role of agentic AI and Augmented Operations in delivering highly skilled technical services – both remotely and on site – for electro-sensitive environments such as large-scale data centres. She will unpack how AI-powered decision-making and human expertise can create a new era of service excellence, where every intervention is smarter, faster, and more sustainable.

Building resilient, end-to-end, AI-ready data centres

At Stand D140, Schneider Electric will showcase its complete, end-to-end, AI-ready data centre portfolio, enabling scalable, resilient, and sustainable AI infrastructure. Our solutions cover:

• Integrated power train — including Ringmaster AirSeT switchgear, Galaxy UPS, iLine busbar, and 800VDC sidecar

• Hybrid cooling solutions — including Motivair by Schneider Electric’s liquid cooling and coolant distribution units (CDUs)

• All-in-one modular infrastructure — AI POD (EcoStruxure Pod Data Centres) and Modular Data Centres

• Lifecycle Services — to support compliant and optimised operations

Our integrated power chain begins with the Ringmaster AirSeT compact switchgear, directing high-voltage power and preventing overloads. The Galaxy UPS systems provide resilient backup, keeping AI servers running continuously. Inside facilities, the iLine busbar replaces cable complexity with overhead power bars, while the 800VDC sidecar delivers direct current to racks, avoiding conversion losses.

Lifecycle services orchestrate this seamless system – from the Galaxy UPS enabling rapid repair to essential cabling controlling power safely. This de-risks expansion, ensures UK regulatory compliance, and delivers efficient, long-term AI infrastructure.

Together, these solutions demonstrate a fully integrated, AI-ready architecture, showcased digitally and in physical format at the stand. Experts from Secure Power, Digital Energy, and Power Products divisions will also be present to explore how these technologies enable UK organisations to lead the AI race.

Software and digital services

Our DCIM software solutions and services safeguard AI operations through monitoring, optimisation, and digital modelling. These include:

• EcoStruxure Data Centre Expert

• AVEVA and ETAP Digital Twins

• EcoStruxure Building Operation

• Power Monitoring Expert

The software pods demonstrate comprehensive digital solutions for monitoring, controlling, and optimising infrastructure. EcoStruxure Data Centre Expert provides real-time power and cooling visibility, while AVEVA and ETAP Digital Twins enable simulation, design, and automation of critical systems.

EcoStruxure Building Operation facilitates secure data exchange from third-party energy, HVAC, fire safety, and security systems. Power Monitoring Expert (PME) delivers electrical system insights for improved performance and sustainability, connecting smart devices across electrical systems and integrating with process controls for real-time monitoring.

Join us at Stand D140 during Data Centre World in London to be part of the conversation on scaling sustainable, efficient, and resilient data centres together.

Joe Peck - 26 February 2026

Data Centre Build News & Insights

Data Centre Infrastructure News & Trends

Data Centre Projects: Infrastructure Builds, Innovations & Updates

Enterprise Network Infrastructure: Design, Performance & Security

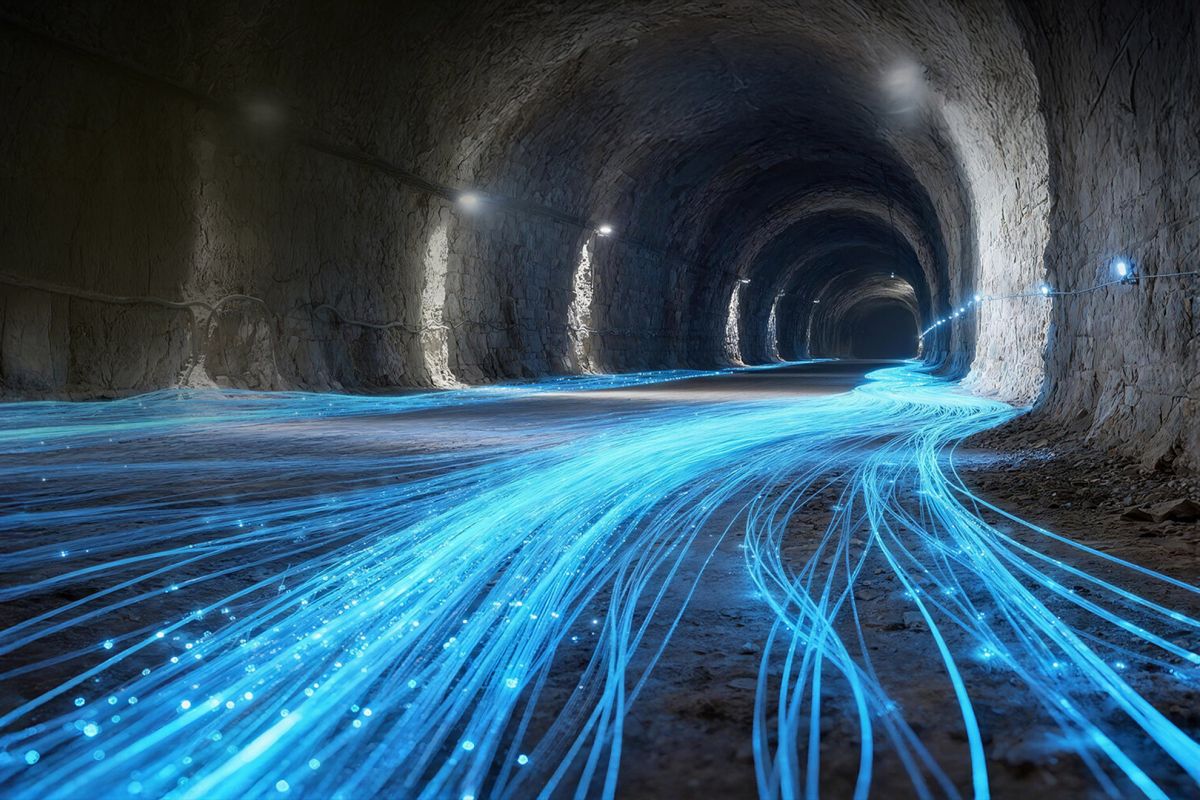

STL, Mynet deliver fibre in mountainous Italy

STL, an optical and digital systems company, has worked with Mynet to deliver optical fibre infrastructure for the Intacture data centre in Trentino, Italy, located in a mountainous area described as the ‘heart of the mountain’.

The project was led by the University of Trento as implementing body and scientific lead. It involves total funding of €50.2 million (£43.7 million), including €18.4 million (£16 million) from Italy’s National Recovery and Resilience Plan (PNRR).

Mynet, a telecommunications company focused on fibre optic networks across Northern Italy, is the first provider to activate fibre connectivity at the facility.

The deployment required high-capacity fibre to be installed within a tight timeframe and in a geographically complex environment.

https://www.youtube.com/watch?v=9KpsqOIWu2E

Fibre deployment completed within two months

STL supplied high fibre-count cable with a compact diameter, designed for installation in 10/12mm duct systems. The cable is engineered to support long-distance blowing, faster end preparation, and simplified on-site handling, while meeting performance, durability, and scalability requirements.

The connectivity infrastructure assigned to Mynet was completed in under two months. According to the companies, this resulted in around a 50% reduction in deployment time, an expected network lifetime of more than 15 years, and improved stability during peak load.

Giovanni Zorzoni, General Manager of Mynet, says, “We accepted a challenge to bring high-performance connectivity to this extraordinary infrastructure in less than sixty days.

“With STL’s advanced optical fibre solutions, we were able to focus on the design and execution without compromising on reliability or performance. The quality, robustness, and ease of deployment of STL’s optical fibre solutions enabled us to complete the project at record speed, even in a uniquely demanding environment.”

Rahul Puri, CEO, ONB, STL, adds, “This collaboration underscores STL's expertise in delivering mission-critical digital infrastructure for data centres.

“By providing scalable, future-ready solutions like multi-core and low-latency fibre, we are helping our customers build resilient networks structurally prepared for an AI-driven future.”

Joe Peck - 26 February 2026

Mayflex to highlight Elevate at Data Centre World 2026

Mayflex, a UK-based distributor of converged IP infrastructure, networking, and electronic security products, will present updates to its Elevate infrastructure portfolio at Data Centre World London 2026, taking place on 4–5 March at ExCeL London. The company will exhibit on Stand B180.

Launched at the 2025 event, Elevate brings together fibre connectivity, racks, aisle containment, power distribution, and rack-level security within a single infrastructure platform.

Mayflex says the portfolio has evolved over the past 12 months in response to increasing density and performance requirements in data centre environments.

Andrew Percival, Managing Director at Mayflex, says, “From concept, our ambition with Elevate was to continually move the offer forwards.

“We aim to build an integrated set of solutions that responds to the real pressures facing data centre operators: densification, thermal performance, deployment speed, and operational clarity. The progress made over the last 12 months reflects that focus.”

New high-density additions

At the exhibition, Mayflex will introduce new very small form factor (VSFF) pre-connectorised fibre systems supporting up to 3,456 fibres in 1U, alongside high-density optical distribution frames with pre-connectorised trays and cables.

Additional launches include high-density power distribution strips and intelligent rack locking systems. Updates to the DCR Rack Series and cold aisle containment systems will also be demonstrated.

Visitors to Stand B180 can view the portfolio and speak with the team during the event.

Joe Peck - 25 February 2026

RETN launches Tallinn–Cēsis backbone route

RETN, an independent global network services provider, has launched a new backbone route between Tallinn and Cēsis, designed to strengthen connectivity between Northern and Central Europe.

The route was tested shortly before entering service when a fibre break affected the primary backbone path in late 2025. During pre-service testing, engineers redirected live traffic onto the new Tallinn–Cēsis link.

More than 40 DWDM (Dense Wavelength Division Multiplexing) backbone channels across multiple European segments were rerouted within 60 minutes. According to the company, latency and jitter remained within normal operating parameters during the transfer.

Additional capacity and route diversity

The new line forms part of RETN’s wider network expansion strategy, aimed at increasing route and supplier diversity. It provides an additional terrestrial path between Finland, the Nordics, and Central Europe.

The deployment includes a new core point of presence at Greenergy Data Centre in Tallinn and adds capacity of up to 40Tbps, with additional DWDM spectrum available for future services and traffic resilience.

Tony O’Sullivan, CEO of RETN, says, “Modern backbone networks have to be engineered on the assumption that outages are inevitable. Therefore, the network design should be resilient from the start.

“The Tallinn–Cēsis route was built as part of a deliberate resilience strategy, adding diversity at both the route and supplier level so that when a failure occurs, traffic can be shifted quickly without compromising performance.”

Joe Peck - 23 February 2026
