Liquid Cooling Technologies Driving Data Centre Efficiency

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Ireland’s first liquid-cooled AI supercomputer

CloudCIX, an Irish provider of open-source cloud computing platforms and data centre services, and AlloComp, an Irish provider of AI infrastructure and sustainable computing solutions, have announced the deployment of Ireland’s first liquid-cooled NVIDIA HGX-based supercomputer at CloudCIX’s facility in Cork, marking a notable step for the country’s AI and high performance computing (HPC) infrastructure.

Delivered recently and scheduled to go live in the coming weeks, the system is based on NVIDIA’s Blackwell architecture and supplied through Dell Technologies. It represents an upgrade to the Boole Supercomputer and is among the first liquid-cooled installations of this class in Europe.

The upgraded system is intended to support industry users, startups, applied research teams, and academic spin-outs that require high performance, sovereign compute capacity within Ireland.

Installation and infrastructure requirements

The system stands more than 2.5 metres tall and weighs close to one tonne, requiring structural modifications during installation. Costellos Engineering carried out the building works and precision placement, including the creation of a new access point to accommodate the liquid-cooled rack.

Jerry Sweeney, Managing Director of CloudCIX, says, “More and more Irish companies are working with AI models that demand extreme performance and tight control over data. This upgrade gives industry, startups, and applied researchers a world-class compute platform here in Ireland, close to their teams, their systems, and their customers.”

The project was led by AlloComp, CloudCIX’s AI infrastructure partner, which supported system selection, supply coordination, and technical deployment.

AlloComp co-founder Niall Smith comments, “[The] Boole Supercomputer upgrade represents a major step forward in what’s possible with AI, but it also marks a fundamental shift in the infrastructure required to power it.

"Traditional data centres average roughly 8 kW per rack; today’s advanced AI systems are already at [an] unprecedented 120 kW per rack, and the next generation is forecast to reach 600 kW. Liquid cooling is no longer optional; it is the only way to deliver the density, efficiency, and performance for demanding AI workloads.”

Kasia Zabinska, co-founder of AlloComp, adds, “Supporting CloudCIX in delivering Ireland’s first liquid-cooled system of this type is an important milestone. The result is a high-density platform designed to give Irish teams the performance, control, and sustainability they need to develop and deploy AI.”

The system is expected to support larger model training, advanced simulation, and other compute-intensive workloads across sectors including medtech, pharmaceuticals, manufacturing, robotics, and computer vision.

CloudCIX says it will begin onboarding customers as part of its sovereign AI infrastructure offering in the near term.

To mark the deployment, CloudCIX and AlloComp plan to host a national event in January 2026, focused on supercomputing and next-generation AI infrastructure. The event will bring together industry, research, and policy stakeholders to discuss the role of sovereign and energy-efficient compute in Ireland’s AI development.

Joe Peck - 16 December 2025

Motivair by Schneider Electric introduces new CDUs

Motivair, a US provider of liquid cooling solutions for data centres and AI computing, owned by Schneider Electric, has introduced a new range of coolant distribution units (CDUs) designed to address the increasing thermal requirements of high performance computing and AI workloads.

The new units are designed for installation in utility corridors rather than within the white space, reflecting changes in how liquid cooling infrastructure is being deployed in modern data centres.

According to the company, this approach is intended to provide operators with greater flexibility when integrating cooling systems into different facility layouts.

The CDUs will be available globally, with manufacturing scheduled to increase from early 2026.

Motivair states that the range supports a broader set of operating conditions, allowing data centre operators to use a wider range of chilled water temperatures when planning and operating liquid cooled environments.

The additions expand the company’s existing liquid cooling portfolio, which includes floor-mounted and in-rack units for use across hyperscale, colocation, edge, and retrofit sites.

Cooling design flexibility for AI infrastructure

Motivair says the new CDUs reflect changes in infrastructure design as compute densities increase and AI workloads become more prevalent.

The company notes that operators are increasingly placing CDUs outside traditional IT spaces to improve layout flexibility and maintenance access. Having multiple CDU deployment options, it adds, allows cooling approaches to be aligned more closely with specific data centre designs and workload requirements.

The company highlights space efficiency, broader operating ranges, easier access for maintenance, and closer integration with chiller plant infrastructure as key considerations for operators planning liquid cooling systems.

Andrew Bradner, Senior Vice President, Cooling Business at Schneider Electric, says, “When it comes to data centre liquid cooling, flexibility is the key with customers demanding a more diverse and larger portfolio of end-to-end solutions.

"Our new CDUs allow customers to match deployment strategies to a wider range of accelerated computing applications while leveraging decades of specialised cooling experience to ensure optimal performance, reliability, and future-readiness.”

The launch marks the first new product range from Motivair since Schneider Electric acquired the company in February 2025.

Rich Whitmore, CEO of Motivair, comments, “Motivair is a trusted partner for advanced liquid cooling solutions and our new range of technologies enables data centre operators to navigate the AI era with confidence.

"Together with Schneider Electric, our goal is to deliver next-generation cooling solutions that adapt to any HPC, AI, or advanced data centre deployment to deliver seamless scalability, performance, and reliability when it matters most.”

Joe Peck - 15 December 2025

Supermicro launches liquid-cooled NVIDIA HGX B300 systems

Supermicro, a provider of application-optimised IT systems, has announced the expansion of its NVIDIA Blackwell architecture portfolio with new 4U and 2-OU liquid-cooled NVIDIA HGX B300 systems, now available for high-volume shipment.

The systems form part of Supermicro's Data Centre Building Block approach, delivering high GPU density and power efficiency for hyperscale data centres and AI factory deployments.

Charles Liang, President and CEO of Supermicro, says, "With AI infrastructure demand accelerating globally, our new liquid-cooled NVIDIA HGX B300 systems deliver the performance density and energy efficiency that hyperscalers and AI factories need today.

"We're now offering the industry's most compact NVIDIA HGX B300 options - achieving up to 144 GPUs in a single rack - whilst reducing power consumption and cooling costs through our proven direct liquid-cooling technology."

System specifications and architecture

The 2-OU liquid-cooled NVIDIA HGX B300 system, built to the 21-inch OCP Open Rack V3 specification, enables up to 144 GPUs per rack. The rack-scale design features blind-mate manifold connections, modular GPU and CPU tray architecture, and component liquid cooling.

The system supports eight NVIDIA Blackwell Ultra GPUs at up to 1,100 watts thermal design power each. A single ORV3 rack supports up to 18 nodes with 144 GPUs total, scaling with NVIDIA Quantum-X800 InfiniBand switches and Supermicro's 1.8-megawatt in-row coolant distribution units.
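The rack figures above can be checked with simple arithmetic. The sketch below multiplies out nodes and GPUs per rack and estimates how many such racks one 1.8 MW CDU could serve. It assumes that only GPU thermal design power is counted. Real rack loads also include CPUs, networking and power-conversion losses, so the result is an upper bound and not a Supermicro sizing figure.

```python
# Back-of-envelope rack arithmetic for the 2-OU HGX B300 ORV3 rack.
# Article figures: 18 nodes per rack, 8 GPUs per node, up to 1,100 W TDP
# per GPU, 1.8 MW in-row CDU.
# Assumption: only GPU TDP is counted; CPUs, NICs, switches and
# power-conversion losses are ignored, so real rack loads are higher.

NODES_PER_RACK = 18
GPUS_PER_NODE = 8
GPU_TDP_W = 1_100
CDU_CAPACITY_W = 1_800_000


def gpus_per_rack(nodes: int = NODES_PER_RACK, gpus_per_node: int = GPUS_PER_NODE) -> int:
    return nodes * gpus_per_node


def gpu_heat_per_rack_kw(gpus: int, tdp_w: float = GPU_TDP_W) -> float:
    return gpus * tdp_w / 1_000


def racks_per_cdu(rack_kw: float, cdu_w: float = CDU_CAPACITY_W) -> int:
    # Whole racks only; an upper bound because non-GPU heat is ignored.
    return int(cdu_w / 1_000 // rack_kw)


gpus = gpus_per_rack()
rack_kw = gpu_heat_per_rack_kw(gpus)
print(gpus, rack_kw, racks_per_cdu(rack_kw))  # 144 158.4 11
```

Counting the non-GPU components would raise the per-rack load and lower the number of racks each CDU can serve.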

The 4U Front I/O HGX B300 Liquid-Cooled System offers the same compute performance in a traditional 19-inch EIA rack form factor for large-scale AI factory deployments. The 4U system uses Supermicro's DLC-2 technology to capture up to 98% of heat generated by the system through liquid cooling.
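The following minimal sketch shows what a 98% capture rate means for the residual load that room air must still handle. The 100 kW rack load is a hypothetical figure for illustration, not a Supermicro specification.

```python
# Split of heat between the liquid loop and room air when direct liquid
# cooling captures a given fraction ("up to 98%" per the article).
# The 100 kW load is hypothetical.

def heat_split_kw(load_kw: float, liquid_fraction: float = 0.98) -> tuple[float, float]:
    """Return (heat to liquid, residual heat to air) in kW."""
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    to_liquid = load_kw * liquid_fraction
    return to_liquid, load_kw - to_liquid


liquid_kw, air_kw = heat_split_kw(100.0)
print(f"{liquid_kw:.2f} kW to liquid, {air_kw:.2f} kW to air")
```

Even at 98% capture, a small air-side load remains, and it scales directly with rack power.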

Supermicro NVIDIA HGX B300 systems feature 2.1 terabytes of HBM3e GPU memory per system. Both the 2-OU and 4U platforms deliver performance gains at cluster level by doubling compute fabric network throughput to up to 800 gigabits per second via integrated NVIDIA ConnectX-8 SuperNICs when used with NVIDIA Quantum-X800 InfiniBand or NVIDIA Spectrum-4 Ethernet.

With the DLC-2 technology stack, data centres can reportedly achieve up to 40% power savings, reduce water consumption through 45°C warm water operation, and eliminate the need for chilled water and compressors.

Supermicro says it delivers the new systems as fully validated, tested racks before shipment.

The systems expand Supermicro's portfolio of NVIDIA Blackwell platforms, including the NVIDIA GB300 NVL72, NVIDIA HGX B200, and NVIDIA RTX PRO 6000 Blackwell Server Edition. Each system is also NVIDIA-certified.

Joe Peck - 10 December 2025

Siemens, nVent develop reference design for AI DCs

German multinational technology company Siemens and nVent, a US manufacturer of electrical connection and protection systems, are collaborating on a liquid cooling and power reference architecture intended for hyperscale AI environments.

The design aims to support operators facing rising power densities, more demanding compute loads, and the need for modular infrastructure that maintains uptime and operational resilience.

The joint reference architecture is being developed for 100MW-scale AI data centres using liquid-cooled infrastructure such as the NVIDIA DGX SuperPOD with GB200 systems.

It combines Siemens’ electrical and automation technology with NVIDIA’s reference design framework and nVent’s liquid cooling capabilities. The companies state that the architecture is structured to be compatible with Tier III design requirements.

Reference model for power and cooling integration

“We have decades of expertise supporting customers’ next-generation computing infrastructure needs,” says Sara Zawoyski, President of Systems Protection at nVent. “This collaboration with Siemens underscores that commitment.

"The joint reference architecture will help data centre managers deploy our cutting-edge cooling infrastructure to support the AI buildout.”

Ciaran Flanagan, Global Head of Data Center Solutions at Siemens, adds, “This reference architecture accelerates time-to-compute and maximises tokens-per-watt, which is the measure of AI output per unit of energy.

“It’s a blueprint for scale: modular, fault-tolerant, and energy-efficient. Together with nVent and our broader ecosystem of partners, we’re connecting the dots across the value chain to drive innovation, interoperability, and sustainability, helping operators build future-ready data centres that unlock AI’s full potential.”
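Tokens-per-watt, as described above, is a ratio of throughput to power. One watt is one joule per second, so tokens per second divided by watts reduces to tokens per joule. That is why the metric can be read as AI output per unit of energy. The throughput and power figures in this sketch are hypothetical.

```python
# Tokens-per-watt as throughput divided by average power.
# Since 1 W = 1 J/s, (tokens/s) / W = tokens per joule.
# All input figures below are hypothetical.

J_PER_KWH = 3.6e6  # 1 kWh = 3.6 million joules


def tokens_per_watt(tokens_per_second: float, avg_power_w: float) -> float:
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    return tokens_per_second / avg_power_w


def tokens_per_kwh(tokens_per_second: float, avg_power_w: float) -> float:
    # Convert tokens per joule into tokens per kilowatt-hour.
    return tokens_per_watt(tokens_per_second, avg_power_w) * J_PER_KWH


# Hypothetical cluster: 50,000 tokens/s at an average draw of 125 kW.
tpw = tokens_per_watt(50_000, 125_000)
print(tpw, tokens_per_kwh(50_000, 125_000))
```

Expressing the same ratio per kWh can make it easier to compare with electricity tariffs or facility energy reports.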

Reference architectures are increasingly used by data centre operators to support rapid deployment and consistent interface standards. They are particularly relevant as facilities adapt to higher rack-level densities and more intensive computing requirements.

Siemens says it contributes its experience in industrial electrical systems and automation, ranging from medium- and low-voltage distribution to energy management software.

nVent adds that it brings expertise in liquid cooling, working with chip manufacturers, original equipment makers, and hyperscale operators.

Joe Peck - 9 December 2025

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Liquid Cooling Technologies Driving Data Centre Efficiency

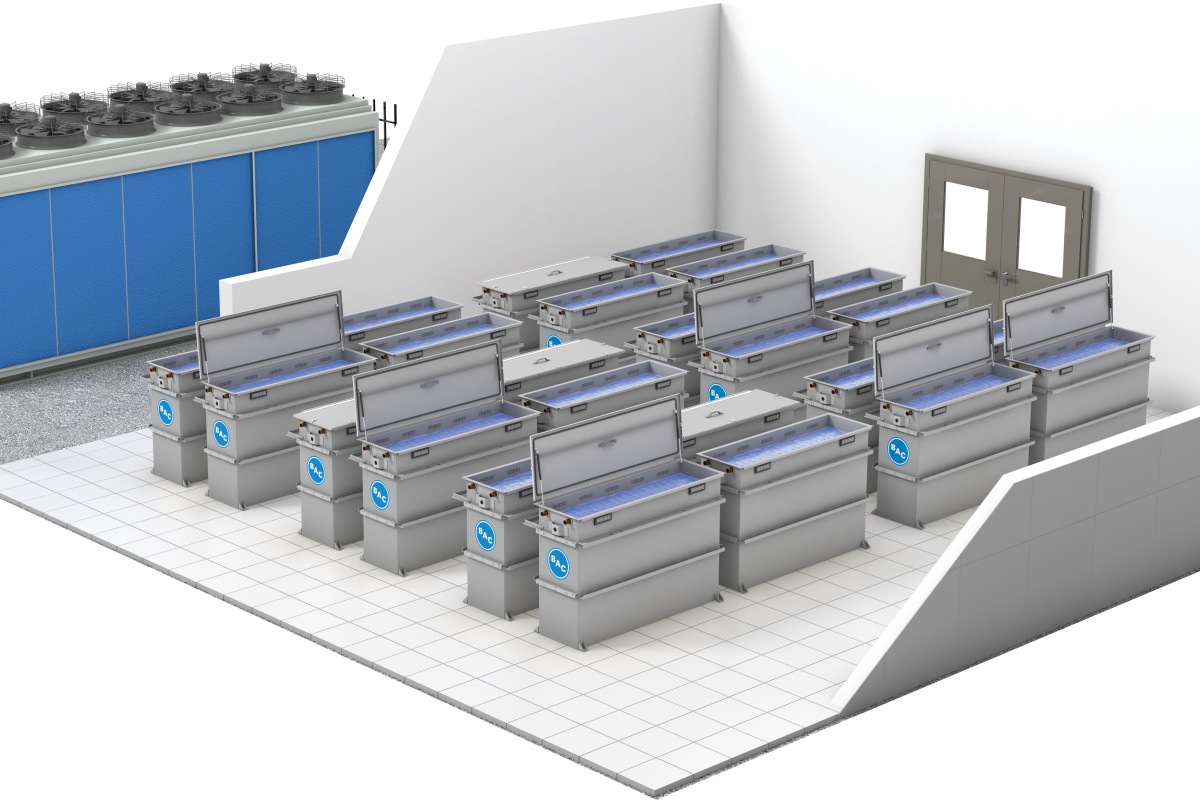

BAC immersion cooling tank gains Intel certification

BAC (Baltimore Aircoil Company), a provider of data centre cooling equipment, has received certification for its immersion cooling tank as part of the Intel Data Center Certified Solution for Immersion Cooling, covering fourth- and fifth-generation Xeon processors.

The programme aims to validate immersion technologies that meet the efficiency and sustainability requirements of modern data centres.

The Intel certification process involved extensive testing of immersion tanks, cooling fluids, and IT hardware. It was developed collaboratively by BAC, Intel, ExxonMobil, Hypertec, and Micron.

The programme also enables Intel to offer a warranty rider for single-phase immersion-cooled Xeon processors, providing assurance on durability and hardware compatibility.

Testing was carried out at Intel’s Advanced Data Center Development Lab in Hillsboro, Oregon. BAC’s immersion cooling tanks, including its CorTex technology, were used to validate performance, reliability, and integration between cooling fluid and IT components.

“Immersion cooling represents a critical advancement in data centre thermal management, and this certification is a powerful validation of that progress,” says Jan Tysebeart, BAC’s General Manager of Data Centers. “Our immersion cooling tanks are engineered for the highest levels of efficiency and reliability.

"By participating in this collaborative certification effort, we’re helping to ensure a trusted, seamless, and superior experience for our customers worldwide.”

Joint testing to support industry adoption

The certification builds on BAC’s work in high-efficiency cooling design.

Its Cobalt immersion system, which combines an indoor immersion tank with an outdoor heat-rejection unit, is designed to support low Power Usage Effectiveness values while improving uptime and sustainability.

Jan continues, “Through rigorous joint testing and validation by Intel, we’ve proven that immersion cooling can bridge IT hardware and facility infrastructure more efficiently than ever before.

“Certification programmes like this one are key to accelerating industry adoption by ensuring every element - tank, fluid, processor, and memory - meets the same high standards of reliability and performance.”

Joe Peck - 5 December 2025

Aggreko expands liquid-cooled load banks for AI DCs

Aggreko, a British multinational temporary power generation and temperature control company, has expanded its liquid-cooled load bank fleet by 120MW to meet rising global demand for commissioning equipment used in high-density data centres.

The company plans to double this capacity in 2026, supporting deployments across North America, Europe, and Asia, as operators transition to liquid cooling to manage the growth of AI and high-performance computing.

Increasing rack densities, now reaching between 300kW and 500kW in some environments, have pushed conventional air-cooling systems to their limits. Liquid cooling is becoming the standard approach, offering far greater heat removal efficiency and significantly lower power consumption.

As these systems mature, accurate simulation of thermal and electrical loads has become essential during commissioning to minimise downtime and protect equipment.

The expanded fleet enables Aggreko to provide contractors and commissioning teams with equipment capable of testing both primary and secondary cooling loops, including chiller lines and coolant distribution units. The load banks also simulate electrical demand during integrated systems testing.
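Sizing the coolant side of a load-bank test typically comes down to the sensible-heat balance Q = ṁ·c_p·ΔT. The sketch below estimates the flow a cooling loop must deliver to absorb a given load. It assumes plain water and a hypothetical 1 MW load with a 10 K temperature rise. It is an illustration, not Aggreko's method.

```python
# Sensible-heat balance Q = m_dot * c_p * dT, rearranged for flow.
# Assumptions: plain water, c_p ~ 4.186 kJ/(kg*K), density ~ 1 kg/L.
# Glycol mixtures have a lower c_p and need proportionally more flow.

WATER_CP_KJ_PER_KG_K = 4.186
WATER_DENSITY_KG_PER_L = 1.0


def required_flow_l_per_s(load_kw: float, delta_t_k: float,
                          cp: float = WATER_CP_KJ_PER_KG_K,
                          density: float = WATER_DENSITY_KG_PER_L) -> float:
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = load_kw / (cp * delta_t_k)  # kW / (kJ/(kg*K) * K) = kg/s
    return mass_flow_kg_s / density


# Hypothetical 1 MW load bank with a 10 K supply/return difference.
flow = required_flow_l_per_s(1_000, 10)
print(f"{flow:.1f} L/s ({flow * 60:.0f} L/min)")  # 23.9 L/s (1433 L/min)
```

Halving the allowed temperature rise doubles the required flow, which is one reason commissioning teams test the loops at realistic ΔT values.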

Billy Durie, Global Sector Head – Data Centres at Aggreko, says, “The data centre market is growing fast, and with that speed comes the need to adopt energy efficient cooling systems. With this comes challenges that demand innovative testing solutions.

“Our multi-million-pound investment in liquid-cooled load banks enables our partners - including those investing in hyperscale data centre delivery - to commission their facilities faster, reduce risks, and achieve ambitious energy efficiency goals.”

Supporting commissioning and sustainability targets

Liquid-cooled load banks replicate the heat output of IT hardware, enabling operators to validate cooling performance before systems go live. This approach can improve Power Usage Effectiveness and Water Usage Effectiveness while reducing the likelihood of early operational issues.
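For reference, The Green Grid defines PUE as total facility energy divided by IT equipment energy. It defines WUE as annual site water use in litres divided by IT equipment energy in kWh. Lower values are better for both. Here is a minimal sketch with hypothetical annual figures.

```python
# The Green Grid metrics:
#   PUE = total facility energy / IT equipment energy   (dimensionless, >= 1)
#   WUE = annual site water use (L) / IT equipment energy (kWh)   (L/kWh)
# The annual figures below are hypothetical.

def pue(total_facility_kwh: float, it_kwh: float) -> float:
    return total_facility_kwh / it_kwh


def wue(site_water_litres: float, it_kwh: float) -> float:
    return site_water_litres / it_kwh


it_kwh = 8_760_000          # 1 MW of IT load running all year
facility_kwh = 10_512_000   # IT plus cooling, power conversion, lighting
water_l = 4_380_000         # annual site water use

print(f"PUE {pue(facility_kwh, it_kwh):.2f}, WUE {wue(water_l, it_kwh):.2f} L/kWh")
```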

Manufactured with corrosion-resistant materials and advanced control features, the equipment is designed for use in environments where reliability is critical.

Remote operation capabilities and simplified installation procedures are also intended to reduce commissioning timelines.

With global data centre power demand projected to rise significantly by 2030, driven by AI and high-performance computing, the ability to validate cooling systems efficiently is increasingly important.

Aggreko says it also provides commissioning support as part of project delivery, working with data centre teams to develop testing programmes suited to each site.

Billy continues, “Our teams work closely with our customers to understand their infrastructure, challenges, and goals, developing tailored testing solutions that scale with each project’s complexity.

"We’re always learning from projects, refining our design and delivery to respond to emerging market needs such as system cleanliness, water quality management, and bespoke, end-to-end project support.”

Aggreko states that the latest investment strengthens its ability to support high-density data centre construction and aligns with wider moves towards more efficient and sustainable operations.

Billy adds, “The volume of data centre delivery required is unprecedented. By expanding our liquid-cooled load bank fleet, we’re scaling to meet immediate market demand and to help our customers deliver their data centres on time.

"This is about providing the right tools to enable innovation and growth in an era defined by AI.”

Joe Peck - 5 December 2025

Why cooling design is critical to the cloud

In this article for DCNN, Ross Waite, Export Sales Manager at Balmoral Tanks, examines how design decisions today will shape sustainable and resilient cooling infrastructure for decades to come:

Running hot and running dry?

Driven by the surge in AI and cloud computing, new data centres are appearing at pace across Europe, North America, and beyond. Much of the debate has focused on how we power sites, yet there is another side to the story, one that determines whether those billions invested in servers actually deliver: cooling.

Servers run hot, 24/7, and without reliable water systems to manage that heat, even the best-connected facilities cannot operate as intended. In fact, cooling is fast becoming the next frontier in data centre design and the decisions made today will echo for decades.

A growing thirst

Data centres are rapidly emerging as one of the most significant commercial water consumers worldwide. Current global estimates suggest that facilities already use over 560 billion litres of water annually, with that figure set to more than double to 1,200 billion litres by 2030 as AI workloads intensify.

The numbers at an individual site are equally stark. A single 100 MW hyperscale centre can use up to 2.5 billion litres per year - enough to supply a city of 80,000 people. Google has reported daily use of more than 2.1 million litres at some sites, while Microsoft’s 2023 global consumption rose 34% year-on-year to reach 6.4 million cubic metres. Meta reported 95% of its 2023 water use - some 3.1 billion litres - came from data centres.

The majority of this is consumed in evaporative cooling systems, where 80% of drawn water is lost to evaporation and just 20% returns for treatment. While some operators are trialling reclaimed or non-potable sources, these currently make up less than 5% of total supply.
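The water figures in this section can be sanity-checked with a few lines of arithmetic. The sketch uses the upper 2.5 billion litre figure for a 100 MW site. For the growth-rate line, it assumes that the "current" global figure refers to 2025; the article does not state a base year.

```python
# Sanity checks on the quoted water figures.
# Assumption: "current" global use is treated as a 2025 baseline.

SITE_LITRES_PER_YEAR = 2.5e9     # 100 MW hyperscale site, upper figure
PEOPLE_SUPPLIED = 80_000
EVAPORATED_FRACTION = 0.80       # share lost in evaporative cooling

per_person_per_day = SITE_LITRES_PER_YEAR / PEOPLE_SUPPLIED / 365
evaporated = SITE_LITRES_PER_YEAR * EVAPORATED_FRACTION
returned = SITE_LITRES_PER_YEAR - evaporated

# Implied compound annual growth from 560 to 1,200 billion litres by 2030.
growth = (1_200 / 560) ** (1 / (2030 - 2025)) - 1

print(f"{per_person_per_day:.1f} L/person/day")  # 85.6 L/person/day
print(f"{evaporated / 1e9:.1f} bn L evaporated, {returned / 1e9:.1f} bn L returned")
print(f"{growth:.1%} per year")
```

Under that base-year assumption, the global projection implies growth of roughly 16 to 17% a year.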

The headline numbers can sound bleak, but water use is not inherently unsustainable. Increasingly, facilities are moving towards closed-loop cooling systems that recycle water for six to eight months at a time, reducing continuous draw from mains supply. These systems require bulk storage capacity, both for the initial fill and for holding treated water ready for reuse.

Designing resilience into water systems

This is where design choices made early in a project pay dividends. Consultants working on new builds are specifying not only the volume of water storage and the type of system to be used, but also the standards to which these systems are built. Tanks that support fire suppression, potable water, and process cooling need to meet stringent criteria, often set by insurers as well as regulators.

Selecting materials and coatings that deliver 30-50 years of service life can prevent expensive retrofits and reassure both clients and communities that these systems are designed to last. Smart water management, in other words, begins not onsite, but on the drawing board.

For consultants who are designing the build specifications for data centres, water is more than a technical input; it is a reputational risk. Once a specification is signed off and issued to tender, it is rarely altered. Getting it right first time is essential. That means selecting partners who can provide not just tanks, but expertise: helping ensure that water systems meet performance, safety, and sustainability criteria across decades of operation.

The payback is twofold. First, consultants safeguard their client’s investment by embedding resilience from the start. Second, they position themselves as trusted advisors in one of the most scrutinised aspects of data centre development. In a sector where projects often run to tens or hundreds of millions of pounds, this credibility matters.

Power may dominate the headlines, but cooling - and by extension water - is the silent foundation of the digital economy. Without it, AI models do not train, cloud services do not scale, and data stops flowing. The future of data centres will be judged not only on how much power they consume, but on how intelligently they use water - and that judgement begins with design.

If data centres are the beating heart of the modern economy, then water is the life force that keeps them alive. Cooling the cloud is not an afterthought; it is the future.

Joe Peck - 17 November 2025

BAC releases upgraded immersion cooling tanks

Baltimore Aircoil Company (BAC), a provider of data centre cooling equipment, has introduced an updated immersion cooling tank for high-performance data centres, incorporating its CorTex technology to improve reliability, efficiency, and support for high-density computing environments.

The company says the latest tank has been engineered to provide consistent performance with minimal maintenance, noting that its sealed design has no penetrations below the fluid level, which helps maintain fluid integrity and reduce leakage risks.

Dual pumps are included for redundancy and the filter-free configuration removes the need for routine filter replacement.

Design improvements for reliability and ease of operation

The tanks are available in four sizes - 16RU, 32RU, 38RU, and 48RU - allowing operators to accommodate a range of immersion-ready servers. Air-cooled servers can also be adapted for immersion use.

Each unit supports server widths of 19 and 21 inches (~48 cm and ~53 cm) and depths up to 1,200 mm, enabling higher rack densities within a smaller footprint than traditional air-cooled systems.

BAC states that the design can support power usage effectiveness (PUE) values as low as 1.05, depending on the wider installation.

The system uses dielectric fluid to transfer heat from servers to the internal heat exchanger, while external circuits can run on water or water-glycol mixtures.

Cable entry points, the lid, and heat-exchanger connections are fluid-tight to help prevent contamination.

The immersion tank forms the indoor component of BAC’s Cobalt system, which combines indoor and outdoor cooling technologies for high-density computing.

The system can be paired with BAC’s evaporative, hybrid, adiabatic, or dry outdoor equipment to create a complete cooling configuration for data centres managing higher-powered servers and AI-driven workloads.

Joe Peck - 17 November 2025

ZutaCore unveils waterless end-of-row CDUs

ZutaCore, a developer of liquid cooling technology, has introduced a new family of waterless end-of-row (EOR) coolant distribution units (CDUs) designed for high-density artificial intelligence (AI) and high-performance computing (HPC) environments.

The units are available in 1.2 MW and 2 MW configurations and form part of the company’s direct-to-chip, two-phase liquid cooling portfolio.

According to ZutaCore, the EOR CDU range is intended to support multiple server racks from a single unit while maintaining rack-level monitoring and control.

The company states that this centralised design reduces duplicated infrastructure and enables waterless operation inside the white space, addressing energy-efficiency and sustainability requirements in modern data centres.

The cooling approach uses ZutaCore’s two-phase, direct-to-chip technology and a dielectric fluid with low global warming potential (GWP). Heat is rejected into the facility without water inside the server hall, aiming to reduce condensation and leak risk while improving thermal efficiency.

My Truong, Chief Technology Officer at ZutaCore, says, “AI data centres demand reliable, scalable thermal management that provides rapid insights to operate at full potential. Our new end-of-row CDU family gives operators the control, intelligence, and reliability required to scale sustainably.

"By integrating advanced cooling physics with modern RESTful APIs for remote monitoring and management, we’re enabling data centres to unlock new performance levels without compromising uptime or efficiency.”

Centralised cooling and deployment models

ZutaCore states that the systems are designed to support varying availability requirements, with hot-swappable components for continuous operation.

Deployment options include a single-unit configuration for cost-effective scaling or an active-standby arrangement for enterprise environments that require higher redundancy levels.

The company adds that the units offer encrypted connectivity and real-time monitoring through RESTful APIs, aimed at supporting operational visibility across multiple cooling units.
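As an illustration of what REST-based monitoring might look like on the operator side, the sketch below fetches and checks a CDU status document. The endpoint, the JSON field names and the temperature threshold are all hypothetical assumptions, not ZutaCore's documented API. Only the checking logic is exercised in the example.

```python
# Hypothetical sketch of consuming CDU telemetry from a REST endpoint.
# The URL, JSON field names and threshold are illustrative assumptions,
# not ZutaCore's documented API.
import json
import urllib.request

SUPPLY_TEMP_LIMIT_C = 40.0  # hypothetical alarm threshold


def fetch_status(url: str, timeout: float = 5.0) -> dict:
    """GET a JSON status document (endpoint is hypothetical; use HTTPS)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def check_status(status: dict, temp_limit_c: float = SUPPLY_TEMP_LIMIT_C) -> list[str]:
    """Return human-readable alarms for one CDU status document."""
    alarms = []
    unit = status.get("unit_id", "unknown")
    supply = status.get("supply_temp_c")
    if supply is not None and supply > temp_limit_c:
        alarms.append(f"{unit}: supply temperature {supply} C above {temp_limit_c} C")
    for pump in status.get("pumps", []):
        if not pump.get("healthy", False):
            alarms.append(f"{unit}: pump {pump.get('id')} unhealthy")
    return alarms


# Offline example using a hypothetical payload instead of a live request.
sample = json.loads('{"unit_id": "cdu-01", "supply_temp_c": 42.5, '
                    '"pumps": [{"id": "A", "healthy": true}, {"id": "B", "healthy": false}]}')
for alarm in check_status(sample):
    print(alarm)
```

Keeping the fetch and the checks separate makes it easy to run the same checks across several units or to test them without network access.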

The EOR CDU platform is set to be used in EGIL Wings’ 15 MW AI Vault facility, as part of a combined approach to sustainable, high-density compute infrastructure.

Leland Sparks, President of EGIL Wings, says, “ZutaCore’s end-of-row CDUs are exactly the kind of innovation needed to meet the energy and thermal challenges of AI-scale compute.

"By pairing ZutaCore’s waterless cooling with our sustainable power systems, we can deliver data centres that are faster to deploy, more energy-efficient, and ready for the global scale of AI.”

ZutaCore notes that its cooling technology has been deployed across more than forty global sites over the past four years, with users including Equinix, SoftBank, and the University of Münster.

The company says it continues to expand through partnerships with organisations such as Mitsubishi Heavy Industries, Carrier, and ASRock Rack, including work on systems designed for next-generation AI servers.

Joe Peck - 14 November 2025

Vertiv expands immersion liquid cooling portfolio

Vertiv, a global provider of critical digital infrastructure, has introduced the Vertiv CoolCenter Immersion cooling system, expanding its liquid cooling portfolio to support AI and high-performance computing (HPC) environments. The system is available now in Europe, the Middle East, and Africa (EMEA).

Immersion cooling submerges entire servers in a dielectric liquid, providing efficient and uniform heat removal across all components. This is particularly effective for systems where power densities and thermal loads exceed the limits of traditional air-cooling methods.

Vertiv has designed its CoolCenter Immersion product as a "complete liquid-cooling architecture", aiming to enable reliable heat removal for dense compute ranging from 25 kW to 240 kW per system.

Sam Bainborough, EMEA Vice President of Thermal Business at Vertiv, explains, “Immersion cooling is playing an increasingly important role as AI and HPC deployments push thermal limits far beyond what conventional systems can handle.

“With the Vertiv CoolCenter Immersion, we’re applying decades of liquid-cooling expertise to deliver fully engineered systems that handle extreme heat densities safely and efficiently, giving operators a practical path to scale AI infrastructure without compromising reliability or serviceability.”

Product features

The Vertiv CoolCenter Immersion is available in multiple configurations, including self-contained and multi-tank options, covering the same 25 kW to 240 kW capacity range.

Each system includes an internal or external liquid tank, coolant distribution unit (CDU), temperature sensors, variable-speed pumps, and fluid piping, all intended to deliver precise temperature control and consistent thermal performance.

Vertiv says that dual power supplies and redundant pumps provide high cooling availability, while integrated monitoring sensors, a nine-inch touchscreen, and building management system (BMS) connectivity simplify operation and system visibility.

The system’s design also enables heat reuse opportunities, supporting more efficient thermal management strategies across facilities and aligning with broader energy-efficiency objectives.

Joe Peck - 7 November 2025
