Features

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Edge Computing in Modern Data Centre Operations

Enterprise Network Infrastructure: Design, Performance & Security

News

News in Cloud Computing & Data Storage

CMC Cloud to take Africa to the edge

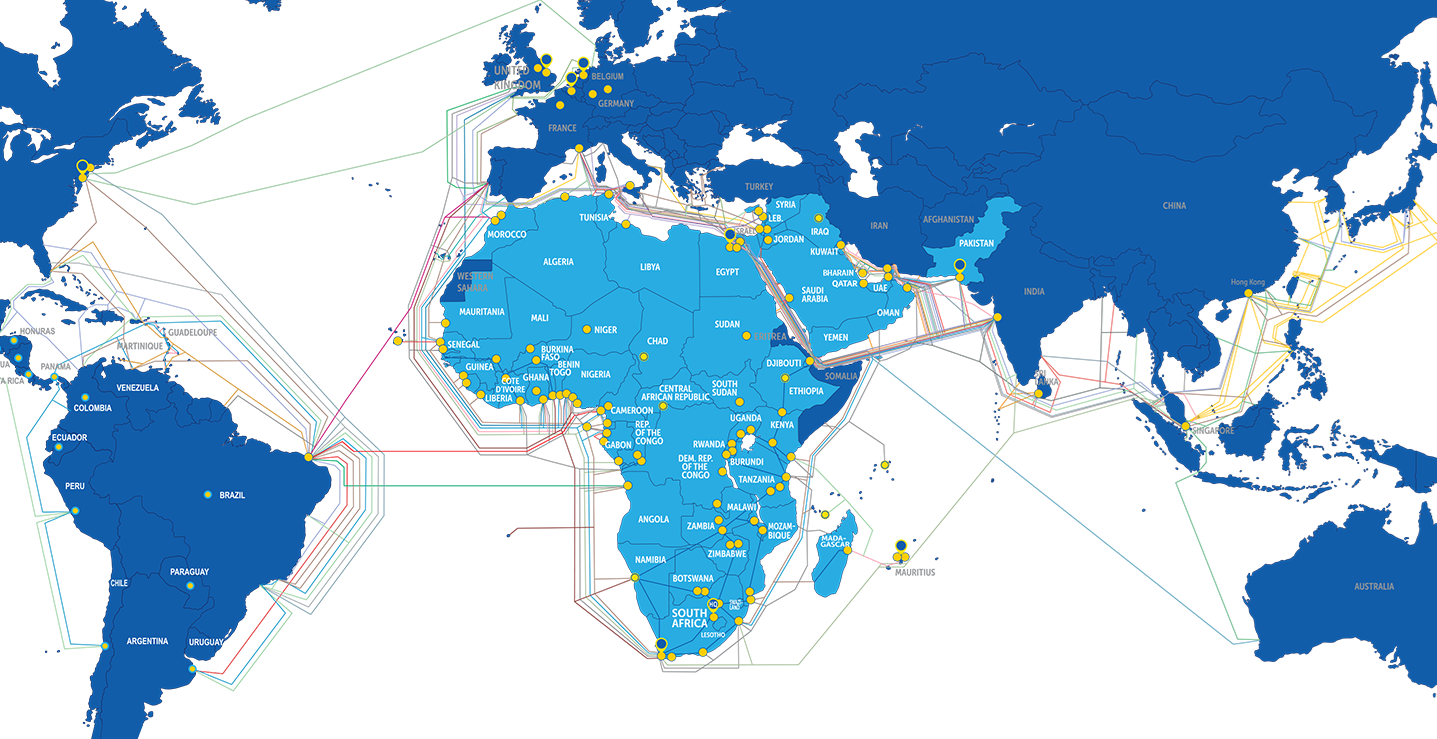

CMC Networks, a global Tier 1 service provider, has launched CMC Cloud to deliver high-performance edge computing across Africa.

CMC Cloud is an Infrastructure as a Service (IaaS) solution designed to bring workloads closer to the end user and improve the performance of applications and services, without the need to invest in physical hardware.

CMC Cloud provides a decentralised edge infrastructure to reduce latency and bandwidth use, helping to enhance the performance and reliability of digital services across Africa. This is essential for real-time applications using transactional data, video streaming, and the Internet of Things (IoT). The IaaS model provides businesses with access to virtual servers, storage, and networking infrastructure on a flexible, scalable and agile basis.

“CMC Cloud has been purpose-built for the African continent. We understand local needs and challenges across markets, and our new IaaS offering is providing tailored solutions to address the specific requirements of African businesses,” says Marisa Trisolino, CEO at CMC Networks. “We want to remove the barriers to cloud adoption, simplifying and accelerating the growth of Africa’s digital economy. CMC Cloud is making this a reality with a flexible, scalable and cost-efficient model.”

CMC Networks makes it simple for both local and international businesses to enter and expand across African markets by addressing the data sovereignty laws that can otherwise limit cloud adoption. It offers cloud services that store and process data within country borders, helping organisations to comply with country-specific legal and regulatory requirements regarding data storage and privacy. These privacy and security measures are crucial for sectors such as finance, healthcare, and public services, which follow strict protocols for handling sensitive data.

“CMC Cloud, on top of our vast network footprint and ‘application-first’ AI core routing capabilities, delivers a holistic approach to digital transformation,” notes Geoff Dornan, CTO at CMC Networks. “Customers can easily adjust computing resources to meet changing business demands while optimising performance with smooth and responsive access to applications and data. The flexibility of CMC Cloud helps in scaling resources in accordance with demand, making it a cost-effective solution for start-ups, SMEs, and large enterprises alike. With CMC Cloud, we’re making it easier than ever for businesses to thrive in today’s digital economy.”

CMC Cloud provides various compute, storage and networking solutions including Virtual Machines, memory, block storage, object storage, IP addresses, Firewall as a Service (FWaaS), IP Premier Dedicated Internet Access (DIA), Distributed Denial of Service Protection as a Service (DDoSPaaS), Carrier Ethernet, MPLS and Multi Cloud Connect. CMC Networks’ Flex-Edge packages software-defined wide-area networking (SD-WAN), SDN, network functions virtualisation (NFV) and security into one solution, without physical presence required in-country. CMC Networks has the largest pan-African network, servicing 51 out of 54 countries in Africa and 12 countries in the Middle East.


Simon Rowley - 9 May 2024


Distributed cloud saves CloudReso 30% on storage costs

Cubbit, the innovator behind Europe’s first distributed cloud storage enabler, has announced that CloudReso, a France-based distributor of MSP security solutions, has reduced its cloud object storage costs by 30% thanks to Cubbit’s fully-managed cloud object storage, DS3.

The MSP can now offer its customers cloud storage with unequalled data sovereignty specs, geographical resilience, and double ransomware protection (server and client-side). Through this deployment, CloudReso has successfully avoided hidden costs traditionally linked to S3, including egress, deletion, and bucket replication fees.

Operating across all French-speaking countries, CloudReso manages over 790 TB of data and backs up 1,590 endpoints. CloudReso's expertise covers a wide range of vertical markets, including the public sector, providing extensive technical knowledge of S3 solutions and support.

With cyber-threats such as ransomware attacks growing in sophistication, targeting both client-side and server-side vulnerabilities with unprecedented precision, Cubbit technology has helped CloudReso protect data both client-side (Object Lock, versioning, IAM policy) and server-side (geo-distribution, encryption).

The MSP considered and assessed various public services over the years, including centralised S3 cloud storage offerings. These options incurred high fees for deleting data, and expenses were multiplied according to the number of sites needed for bucket replication (in CloudReso’s case, the configuration comprised three data centres across the Paris region). With Cubbit DS3, fixed storage costs include all the main S3 APIs, together with the geo-distribution capacity, enabling CloudReso to save 30% on storage costs while providing a cloud storage solution with up to 15 nines of durability. Cubbit’s flat rate also helped CloudReso quickly estimate its monthly data volume and easily predict costs and ROI, enabling it to make higher margins.

Other on-prem object storage options, with their infrastructure and maintenance costs, did not offer the secure and available solution the MSP needed. A major prerequisite for CloudReso's choice of partner was the inclusion of all the key S3 APIs, including Lifecycle Configuration (which sets the lifecycle of data and objects) and Object Lock, which adds an extra layer of protection against ransomware by preventing unauthorised deletion or modification of data. Other providers did not deliver these capabilities as comprehensively or as cost-effectively, whereas Cubbit offers an extensive range of S3 object store features at one of today's most competitive prices. With Cubbit's GDPR compliance and geo-fence capabilities, CloudReso can now comply with regional regulations and stringent laws impacting the industries in which its customers operate, enabling the MSP to create new revenue streams.
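As a rough illustration of the S3 features named above, the sketch below applies versioning, a default Object Lock retention and a lifecycle rule to one bucket. It assumes a boto3-style S3 client pointed at an S3-compatible endpoint. The bucket name, prefix and retention periods are hypothetical, and whether a given provider accepts each call should be checked against its own documentation.

```python
# Hypothetical settings for illustration only; not taken from the article.
LIFECYCLE = {
    "Rules": [
        {
            "ID": "expire-old-backups",
            "Status": "Enabled",
            "Filter": {"Prefix": "backups/"},
            # Expire current objects after a year ...
            "Expiration": {"Days": 365},
            # ... and clean up superseded versions after 30 days.
            "NoncurrentVersionExpiration": {"NoncurrentDays": 30},
        }
    ]
}

OBJECT_LOCK = {
    "ObjectLockEnabled": "Enabled",
    # Every new object version is retained for 30 days by default.
    "Rule": {"DefaultRetention": {"Mode": "COMPLIANCE", "Days": 30}},
}


def protect_bucket(s3_client, bucket: str) -> None:
    """Apply versioning, Object Lock and lifecycle rules to one bucket.

    `s3_client` is assumed to expose the boto3 S3 client methods used below.
    Some services only allow Object Lock on buckets created with it enabled.
    """
    # Object Lock depends on versioning, so enable that first.
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3_client.put_object_lock_configuration(
        Bucket=bucket,
        ObjectLockConfiguration=OBJECT_LOCK,
    )
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=LIFECYCLE,
    )
```

With boto3 installed, a client could be created with `boto3.client("s3", endpoint_url=...)` and passed to `protect_bucket`; the endpoint URL depends on the provider and is not given in the article.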

Gilles Gozlan, CEO at CloudReso, says, “To store our data, we've used US-based and French-based cloud storage providers for a long time, but they did not come with the geographical resilience, data sovereignty and simple pricing that Cubbit offers. This has enabled us to estimate our ROI more easily, and to generate a 30% saving on our previous costs for equivalent configuration. Cubbit will enable us to enter new data intensive, highly-regulated markets. Moreover, Cubbit has a quality and response time of support that we haven't found with any other provider. For these reasons we haven't used any other S3 provider since we started working with Cubbit.”

Richard Czech, Chief Revenue Officer at Cubbit, adds, “Together with the explosive growth of unstructured data, European organisations are now facing an increasing number of challenges: cyber-threats, data sovereignty, and unpredictable costs, to name a few. CloudReso has our DS3 as a true obstacle remover, and we are working together to bring it to organisations all over Europe.”

Moving forward, CloudReso expects to develop the adoption of Cubbit throughout all Francophone territories. Soon, CloudReso will also adopt Cubbit’s new DS3 Composer to provide its customers with on-prem, country-clustered innovations with complete sovereignty and compliance, including NIS2, GDPR, and ISO 27001.


Simon Rowley - 8 May 2024


Wasabi delivers flexible hybrid cloud storage solutions

Wasabi Technologies, the hot cloud storage company, has announced a collaboration with Dell Technologies, via the Extended Technologies Complete program, to bring affordable and innovative hybrid cloud solutions for backup, data protection, and long-term retention to customers.

In a time marked by the exponential growth of data, organisations worldwide face the need for efficient and cost-effective storage solutions. The Wasabi-Dell collaboration addresses this challenge by providing flexible, efficient hybrid cloud solutions enabling users to optimise their data management processes while simultaneously reducing overall costs.

"Dell is a clear industry leader with a broad and deep portfolio of transformative technology,” says David Friend, co-founder and chief executive officer at Wasabi Technologies. "This collaboration will extend the reach of Wasabi's cloud storage to a broader audience, catering to users in search of dependable, economical solutions for safeguarding their data archives over the long haul."

The cloud has become the preferred location for long-term data backup retention and disaster recovery. Dell’s PowerProtect Data Domain appliances natively tier data to Wasabi, enabling customers to benefit from a complete data protection solution for on-premises storage with long-term cloud retention. In addition, Wasabi integrates with Dell NetWorker CloudBoost to bring long-term retention in the cloud to existing NetWorker customers.

"The collaboration between Wasabi Technologies and Dell Technologies presents a powerful solution for organisations grappling with data growth," says Dave McCarthy, research vice president at IDC. "Organisations need a hybrid cloud infrastructure that is efficient and cost-effective, and that has the ability to scale with them during their data management journey. This collaboration meets these challenges head on."

Carly Weller - 7 May 2024


How to choose a network performance monitoring solution

by Paul Gray, Chief Product Officer at LiveAction.

Enterprise networks are undergoing huge changes. It’s not uncommon for an enterprise to find that it has quickly outgrown its network performance monitoring (NPM) solution, which simply cannot cover the true scope of the enterprise's new geography of activity.

LiveAction’s 2024 Network performance and monitoring trends report investigated the current state of NPM solutions, surveying hundreds of enterprises on their experiences. Nearly three-quarters (74%) of respondents manage on-premises networks, 70% said they manage cloud environments, 61% said they’re running hybrid architectures and 43% are managing WAN and SD-WAN networks. This kind of environment diversity signifies a huge change from previous generations' traditionally homogeneous corporate networks. However, their NPM solutions often cannot keep up with their growing networks, much less their future ambitions.

Over half (57%) reported that they lack visibility into exactly the places they now manage, such as cloud, SD-WAN and WLAN, as well as growing areas like voice and video. Nearly half (49%) say that their current NPMs cannot generate actionable insights that would allow them to solve problems quickly. Crucially, 43% of respondents also report that their NPMs struggle to scale as data volumes and network complexity increase. This isn't just a matter of simple network management, but of the long-term health of the larger organisation. To innovate, enterprises have to be able to understand their environments and make sure that they run efficiently and securely so that they can accommodate new transformations.

Searching questions

So what then? Enterprises will need to consider their options. Choosing a new NPM is more complex than picking one off the shelf. An organisation on the hunt for a new NPM will first need to ask itself several searching questions about what will fit with its goals and needs.

It will first have to understand its current needs and how, if applicable, its current NPM is failing it. For example, are there new traffic types and loads that the current infrastructure cannot handle? Is it dealing with new security threats that its NPM is repeatedly missing? Are its attempts to decrypt traffic introducing compliance risks?

The true scope of the current environment and its network activity must also be addressed. Whatever NPM solution a business chooses, it must accommodate its various environments, from data centres to multi-clouds.

Future intentions matter as well. If a business wants to hybridise its environment or roll out large IoT deployments, its choice of NPM provider may change significantly. Depending on future transformation goals, a business will need to consider how well a solution can scale to meet practical realities and future ambitions.

NPMs for today’s landscape

Businesses must choose the solution that works for them; however, some characteristics are critical in today's landscape.

Firstly, comprehensive end-to-end network visibility and performance management should be considered fundamental. That includes visibility across SD-WAN, WAN, LAN, public, hybrid and multi-cloud environments. This is especially important because problems might spring up in one part of the network, but may emanate from another. The ability to pinpoint the origin of such problems is an absolute necessity, especially across the diverse range of environments of today’s modern networks.

Network traffic analysis is also crucial because businesses need to be able to use deep packet capture, NetFlow, and analytics to monitor network traffic in real time and granularly inspect the contents of individual packets without decrypting them.

Application performance and visibility monitoring are critical to correlate data across network devices, applications, cloud environments, and other places to maximise application performance.

The ability to visualise network activity will also be important to unlock a strategic view of network activity. This will allow issues to be quickly tracked to their root cause and relevant specialists to gain a holistic view of network activity.

Justifying new purchases to the board

There’s also a crucial question here about how to justify the purchase of new NPM tools to management. C-suite executives and managers with control over budget are often not tech-savvy, so they can view large purchases or infrastructural changes with scepticism. As such, the technical team must justify its budget at a higher level.

The first step on this path involves understanding the perceived risks of adopting a new NPM solution. Implementation may be complex, involve potential disruptions, and be costly. These concerns must be addressed head-on so that they can be rationally weighed against the benefits of a new solution.

Equally, those benefits have to be stated clearly. Executives and managers need to know how a particular solution can proactively identify issues, optimise network performance, and contribute to further innovation, ROI, and competitive advantage.

Enterprises need not go into a new solution full-bore. Many vendors offer trial periods for their solutions so that customers can see how they run in their environment and otherwise meet their needs. Changes like these can be expensive so no choice should be made lightly. However, by proactively addressing and demystifying the perceived risks, a new NPM solution can help adapt an organisation to growing network demands and lay a foundation for further innovation.


Simon Rowley - 2 May 2024


Arista launches next generation multi-domain segmentation

Arista Networks, a provider of cloud networking solutions, has announced a significant update to its Arista MSS (Multi-Domain Segmentation Service) offerings that address the challenge of creating a truly enterprise-wide zero trust network.

Without the need for endpoint software agents and proprietary network protocols, Arista MSS enables effective microperimeters that restrict lateral movement in campus and data centre networks and thus reduces the blast radius of security breaches such as ransomware.

Today’s distributed IT infrastructure with work-from-anywhere, the explosion of IoT devices and multi-cloud applications has upended the traditional security perimeter and led to a dynamic and unpredictable attack surface. To improve their defensive posture, organisations have embarked on zero trust efforts that require granular control of both north-south and east-west communication paths. Firewalls are simply not optimised to protect against all lateral movement, which would require a proliferation of security appliances, soaring costs, and an explosion of complex rule sets that still fail to protect against lateral movement.

To address this challenge, the Cybersecurity and Infrastructure Security Agency (CISA) “Zero Trust Maturity Model” recommends the adoption of microsegmentation for highly distributed, fine-grained enforcement through microperimeters. While many microsegmentation solutions are available on the market, both network and endpoint-based, they struggle with operational complexity, interoperability and portability challenges, and cost, which has limited their widespread adoption across the enterprise. As a result, zero trust efforts often stall.

Arista MSS offers standards-based microsegmentation using existing network infrastructure while overcoming the challenges of existing solutions. MSS is network-agnostic and endpoint-independent. It avoids proprietary protocols and can thus seamlessly integrate into a multi-vendor network environment. The solution also does not require endpoint software, avoiding the portability limitations and operational complexity typical of agent-based microsegmentation solutions.

"We are very impressed with the potential of Arista's MSS microperimeter segmentation technology,” says Evan Gillette, Security Engineering, Paychex. “We view this technology as highly promising and believe it has the potential to transform our approach to security and segmentation from a traditional perimeter approach to a more distributed network-centric architecture. We are excited to be working with Arista to explore the possibilities of this innovative technology and its applications in our infrastructure.”

Arista MSS combines three capabilities that enable organisations to build microperimeters around each digital asset they seek to protect, whether in the campus or the data centre. Arista MSS enables:

▪ Stateless Wire-speed Enforcement in the Network: Arista EOS-based switches deliver a simple model for fine-grained, identity-aware microperimeter enforcement. This enforcement model is independent of endpoint type and identical across campus and data centre environments, simplifying day two operations. Importantly, Arista MSS thus enables lateral segmentation that is often missing today and offloads the capability from firewalls that would have to be explicitly deployed for this purpose.

▪ Redirection to Stateful Firewalls: Arista MSS can seamlessly integrate with firewalls and cloud proxies from partners such as Palo Alto Networks and Zscaler for stateful network enforcement, especially for north-south and inter-zone traffic. MSS thus ensures the right traffic is sent to these critical security controls, allowing them to focus on L4-L7 stateful enforcement while avoiding unnecessary hairpinning of all other traffic.

▪ CloudVision for Microperimeter Management: Arista CloudVision powered by NetDL provides deep real-time visibility into packets, flows, and endpoint identity. This, in turn, enables effective east-west lateral segmentation. In addition, MSS dashboards within CloudVision ease operator effort to manage the microperimeters. MSS extends Arista’s Ask AVA (Autonomous Virtual Assist) service to provide a chat-like interface for operators to navigate the dashboard data and query and filter policy violations.
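The enforcement model described in these three points can be pictured with a small sketch. It is not Arista's implementation; it is a minimal illustration of a stateless, identity-aware policy lookup with a default-deny rule and optional redirection to a stateful firewall. The group names, addresses and policy entries are hypothetical.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str        # source endpoint address
    dst: str        # destination endpoint address
    dst_port: int   # destination port


# Hypothetical identity mapping: endpoint address -> group label.
GROUP_OF = {
    "10.0.1.10": "pos-terminal",
    "10.0.2.20": "payment-api",
    "10.0.3.30": "printer",
    "203.0.113.5": "card-network",
}

# Hypothetical policy: (source group, destination group) -> (action, allowed ports).
# "forward" is enforced in the network; "redirect" hands the traffic
# to a stateful firewall for deeper inspection.
POLICY = {
    ("pos-terminal", "payment-api"): ("forward", {443}),
    ("payment-api", "card-network"): ("redirect", {443}),
}


def decide(flow: Flow, group_of: dict, policy: dict) -> str:
    """Return "forward", "redirect" or "drop" for a single flow.

    The decision uses only fields of the flow itself (no connection state),
    and anything without an explicit rule is dropped.
    """
    src_group = group_of.get(flow.src, "unclassified")
    dst_group = group_of.get(flow.dst, "unclassified")
    rule = policy.get((src_group, dst_group))
    if rule is None:
        return "drop"
    action, ports = rule
    return action if flow.dst_port in ports else "drop"


if __name__ == "__main__":
    for f in (
        Flow("10.0.1.10", "10.0.2.20", 443),    # terminal to payment API
        Flow("10.0.3.30", "10.0.1.10", 445),    # printer to terminal (lateral)
        Flow("10.0.2.20", "203.0.113.5", 443),  # payment API to card network
    ):
        print(f, decide(f, GROUP_OF, POLICY))
```

Because no rule connects the printer group to the terminal group, that lateral flow is dropped without any firewall being involved, which is the behaviour the first bullet describes.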

“As a bank, we are committed to delivering comprehensive financial products and solutions, while putting customers' data and security as our top priority,” says Komang Artha Yasa, Technology Division Head, OCBC. “Security is also embedded in one of our core architectural principles when designing our data centre networks.

“Arista MSS completes our zero trust posture by working efficiently with our firewalls to microsegment our critical payment systems. Arista's approach is easy for us to adopt since it avoids software-based agents and still gives us interoperability across our entire data centre environment.”

Arista MSS seamlessly integrates with the broader Arista Zero Trust Networking solution, including Arista CloudVision, CV AGNI™ and Arista NDR. It also integrates with industry-leading firewalls such as Palo Alto Networks, IT service management (ITSM) such as ServiceNow, and virtualisation platforms such as VMware.

"Arista MSS has been a welcome addition to our zero trust strategy,” notes Dougal Mair, Associate Director, Networks and Security at The University of Waikato. "The ability to provide an open but secure network for many users (e.g., students, faculty, guests), IT (e.g., laptops, printers), and IoT devices (including sensors and smart lighting) in a large environment was a huge challenge at the university. Arista MSS prevents any unauthorised peer-to-peer and lateral movement on our dynamic network."

Arista MSS is in trials now, with general availability in Q3 2024.


Simon Rowley - 1 May 2024


How data centres can prepare for 2024 CSRD reporting

by Jad Jebara, CEO of Hyperview.

The CEO of Britain's National Grid, John Pettigrew, recently highlighted the grim reality that data centre power consumption is on track to grow 500% over the next decade. The time to take collective action around implementing innovative and sustainable data centre initiatives is now, and new initiatives such as the Corporate Sustainability Reporting Directive (CSRD) are the perfect North Star to guide the future of data centre reporting.

This new EU regulation will impact around 50,000 organisations, including over 10,000 non-EU entities with a significant presence in the region. The Corporate Sustainability Reporting Directive (CSRD) requires businesses to report their sustainability efforts in more detail, starting this year. If your organisation is affected, you’ll need reliable, innovative data collection and analysis systems to meet the strict reporting requirements.

CSRD replaces older EU directives and provides more detailed and consistent data on corporate sustainability efforts. It will require thousands of companies that do business in the EU to file detailed reports on the environmental impact and climate-related risks of their operations. Many of the metrics being assessed are also analysed within other EU-wide initiatives. For instance, the Energy Efficiency Directive (EED) requires reporting on two Information & Communication Technology (ICT) metrics that also fall within CSRD, ITEEsv and ITEUsv, allowing for enhanced measuring and insight into server utilisation, efficiency, and CO2 impact.

Given the anticipated explosion in energy consumption by data centres over the next decade, CSRD will shine a spotlight on the sustainability of these facilities. For example, the law will require organisations to provide accurate data for both greenhouse gases and Scope 1, 2 and 3 emissions.

The essential metrics that data centres will need to report on include:

Power usage effectiveness (PUE) – measures the efficiency of a data centre’s energy consumption

Renewable energy factor (REF) – quantifies the proportion of renewable energy sources used to power data centres

IT equipment energy efficiency for servers (ITEEsv) – evaluates server efficiency, focusing on reducing energy consumption per unit of computing power

IT equipment utilisation for servers (ITEUsv) – measures the utilisation rate of IT equipment

Energy reuse factor (ERF) – measures how much waste energy from data centre operations is reused or recycled

Cooling efficiency ratio (CER) – evaluates the efficiency of data centre cooling systems

Carbon usage effectiveness (CUE) – quantifies the carbon emissions generated per unit of IT energy consumed

Water usage effectiveness (WUE) – measures the efficiency of water consumption in data centre cooling

While power capacity effectiveness (PCE) isn’t a mandatory requirement yet, it is a measure that data centres should track and report on as it reveals the total power capacity consumed over the total power capacity built.
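Several of these metrics are simple ratios, so a short sketch can show how they might be derived from annual meter totals. The formulas below follow commonly cited definitions (for example, those in the ISO/IEC 30134 series), with PCE taken as described above. The field names and sample figures are hypothetical and are not drawn from any real facility or from the CSRD text itself.

```python
from dataclasses import dataclass


@dataclass
class AnnualReadings:
    """Hypothetical annual totals for one data centre (illustrative only)."""
    total_facility_kwh: float   # all energy entering the facility
    it_equipment_kwh: float     # energy delivered to IT equipment
    renewable_kwh: float        # part of the total that came from renewables
    reused_kwh: float           # energy reused elsewhere, e.g. exported heat
    co2_kg: float               # CO2-equivalent emissions from total energy use
    water_litres: float         # water consumed by the facility
    capacity_used_kw: float     # power capacity actually consumed
    capacity_built_kw: float    # power capacity built out


def sustainability_kpis(r: AnnualReadings) -> dict:
    """Compute ratio metrics; raises ValueError on non-positive denominators."""
    if min(r.total_facility_kwh, r.it_equipment_kwh, r.capacity_built_kw) <= 0:
        raise ValueError("energy and capacity denominators must be positive")
    return {
        "PUE": r.total_facility_kwh / r.it_equipment_kwh,  # ideal value is 1.0
        "REF": r.renewable_kwh / r.total_facility_kwh,     # share of renewables
        "ERF": r.reused_kwh / r.total_facility_kwh,        # share of energy reused
        "CUE": r.co2_kg / r.it_equipment_kwh,              # kg CO2e per IT kWh
        "WUE": r.water_litres / r.it_equipment_kwh,        # litres per IT kWh
        "PCE": r.capacity_used_kw / r.capacity_built_kw,   # consumed over built
    }


if __name__ == "__main__":
    sample = AnnualReadings(
        total_facility_kwh=1_500_000,
        it_equipment_kwh=1_000_000,
        renewable_kwh=600_000,
        reused_kwh=150_000,
        co2_kg=450_000,
        water_litres=1_800_000,
        capacity_used_kw=800,
        capacity_built_kw=1_000,
    )
    for name, value in sustainability_kpis(sample).items():
        print(f"{name}: {value:.2f}")
```

CER, ITEEsv and ITEUsv are left out because they depend on cooling-load and server-level measurements that do not reduce to a single pair of meter totals.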

If not already, now is the time to ensure you have processes and systems in place to capture, verify, and extract this information from your data centres. We also recommend conducting a comprehensive data gap analysis to ensure that all relevant data will be collected.

It’s important to understand where your value chain will fall within the scope of CSRD reporting and how that data can be utilised in reporting that’s compliant with ESRS requirements. For example, reports should be machine-readable, digitally tagged and separated into four sections – General, Environmental, Social and Governance.

While the immediate impact of CSRD will be in reporting practices, the hope is that, over time, the new legislation will drive change in how businesses operate. The goal is that CSRD will incentivise organisations such as data centre operators to adopt sustainable practices and technologies, such as renewable energy sources and circular economy models.

Improving sustainability of data centres

Correctly selecting and leveraging Data Centre Infrastructure Management (DCIM) that offers precise and comprehensive reports on energy usage is a paramount step in understanding and driving better sustainability in data centre operations. From modelling and predictive analytics to benchmarking energy performance - data centres that utilise innovative, comprehensive DCIM toolkits are perfectly primed to maintain a competitive operational advantage while prioritising a greener data centre future.

DCIM modelling and predictive analytics tools can empower data centre managers to forecast future energy needs more accurately, in turn helping data centres to optimise operations for maximum efficiency. Modelling and predictive analytics also enable proactive planning, ensuring that energy consumption aligns with actual requirements - preventing unnecessary resource allocation and further supporting sustainability objectives.

Real-time visibility of energy usage gives data centre operators insight into usage patterns and instances of energy waste, allowing changes to be made immediately. Ultimately, eliminating inefficiencies faster means fewer emissions and less energy waste. In addition to enhancing operational efficiency, leveraging these real-time insights aligns seamlessly with emission reduction goals – supporting a more sustainable and conscious data centre ecosystem.

Utilising the right DCIM tools can also reduce energy consumption by driving higher efficiency in crucial areas such as cooling, power provisioning and asset utilisation. They can ensure critical components operate at optimal temperatures, reducing the risk of overheating and preventing energy wastage. In addition to mitigating overheating and subsequent critical failures, utilising optimal temperature tools can also improve the lifespan and performance of the equipment.

The right DCIM toolkit enables businesses to benchmark energy performance across multiple data centres and prioritise energy efficiency – while also verifying the compliance of data centres with key environmental standards and regulations like CSRD. Cutting-edge DCIM platforms also enable data centres to correctly assess their environmental impact by tracking metrics such as power usage effectiveness (PUE), carbon usage effectiveness (CUE) or water usage effectiveness (WUE). These tools facilitate the integration of renewable energy sources - such as solar panels or wind turbines - into the power supply and distribution of green data centres.

As sustainability continues to move up the corporate agenda, expect to see greater integration of DCIM with AI and ML to collect and analyse vast quantities of data from sources such as sensors, devices, applications and users. In addition to enhancing the ease of data collection, this streamlined approach aligns seamlessly with CSRD emission reduction goals - making compliance with CSRD and similar regulations much easier for data centres.

Taking a proactive approach to the data gathering requirements of CSRD and implementing technologies to support better sustainability practice isn’t just about compliance or reporting; it also moves data centre operators towards adopting sustainable practices and technologies. Ultimately, data centres that are prepared for CSRD will also be delivering greater value for their organisation while paving the way for a more sustainable future.

Simon Rowley - 29 April 2024


Lenovo introduces purpose-built AI-centric infrastructure systems

Lenovo Group has announced a comprehensive new suite of purpose-built AI-centric infrastructure systems and innovations to advance Hybrid AI innovation from edge to cloud.

The company is delivering GPU-rich and thermally efficient solutions intended for compute-intensive workloads across multiple environments and industries. In industries such as financial services and healthcare, customers are managing massive data sets that require extreme I/O bandwidth, and Lenovo is providing the IT infrastructure vital to management of critical data. Across all these solutions is Lenovo TruScale, which provides the ultimate flexibility, scale and support for customers to on-ramp demanding AI workloads completely as-a-service. Regardless of where customers are in their AI journey, Lenovo Professional Services says that it can simplify the AI experience as customers look to meet the new demands and opportunities of AI that businesses are facing today.

“Lenovo is working to accelerate insights from data by delivering new AI solutions for use across industries delivering a significant positive impact on the everyday operations of our customers,” says Kirk Skaugen, President of Lenovo ISG. “From advancing financial service capabilities, upgrading the retail experience, or improving the efficiency of our cities, our hybrid approach enables businesses with AI-ready and AI-optimised infrastructure, taking AI from concept to reality and empowering businesses to efficiently deploy powerful, scalable and right-sized AI solutions that drive innovation, digitalisation and productivity.”

In collaboration with AMD, Lenovo is also delivering the ThinkSystem SR685a V3 8GPU server, bringing customers extreme performance for the most compute-demanding AI workloads, inclusive of GenAI and Large Language Models (LLM). The powerful innovation provides fast acceleration, large memory, and I/O bandwidth to handle huge data sets, needed for advances in the financial services, healthcare, energy, climate science, and transportation industries. The new ThinkSystem SR685a V3 is designed for both enterprise private on-prem AI as well as for public AI cloud service providers.

Additionally, Lenovo is bringing AI inferencing and real-time data analysis to the edge with the new Lenovo ThinkAgile MX455 V3 Edge Premier Solution with AMD EPYC™ 8004 processors. The versatile AI-optimised platform delivers new levels of AI, compute, and storage performance at the edge with the best power efficiency of any Azure Stack HCI solution. Offering turnkey integration with on-prem and Azure cloud, Lenovo’s ThinkAgile MX455 V3 Edge Premier Solution allows customers to reduce TCO with unique lifecycle management, gain an enhanced customer experience and adopt software innovations faster.

Lenovo and AMD have also unveiled a multi-node, high-performance, thermally efficient server designed to maximise performance per rack for intensive transaction processing. The Lenovo ThinkSystem SD535 V3 is a 1S/1U half-width server node powered by a single fourth-gen AMD EPYC processor. It is engineered to maximise processing power and thermal efficiency for workloads including cloud computing and virtualisation at scale, big data analytics, high-performance computing and real-time e-commerce transactions for businesses of all sizes.

Finally, to help businesses accelerate AI adoption, Lenovo has introduced Lenovo AI Advisory and Professional Services, available immediately. The offering spans a breadth of services, solutions and platforms designed to help businesses of all sizes navigate the AI landscape and find the right innovations to put AI to work for their organisations quickly, cost-effectively and at scale.

Simon Rowley - 25 April 2024

Axis introduces new open hybrid cloud platform

Axis Communications, a network video specialist, has introduced Axis Cloud Connect, an open hybrid cloud platform designed to provide customers with more secure, flexible, and scalable security solutions. Together with Axis devices, the platform enables a range of managed services to support system and device management, video and data delivery and meet high demands in cybersecurity, the company says.

The video surveillance market is increasingly using cloud connectivity, driven by the need for remote access, data-driven insights, and scalability. While the number of cloud-connected cameras is growing at a rate of over 80% per year, the trend in cloud adoption has shifted towards hybrid security solutions: a mix of cloud and on-premises infrastructure that uses smart edge devices as powerful generators of valuable data.

Axis Cloud Connect enables smooth integration of Axis devices and partner applications by offering a selection of managed services. To keep systems up to date and to ensure consistent system performance and cybersecurity, Axis takes added responsibility for hosting, delivering, and running digital services to ensure availability and reliability. The managed services enable secure remote access to live video operations, and improved device management with automated updates throughout the lifecycle. The platform also offers user and access management for easy and secure control of user access rights and permissions.

According to Johan Paulsson, CTO, Axis Communications, “Axis Cloud Connect is a continuation of our commitment to deliver secure-by-design solutions that meet changing customer needs. This offering combines the benefits of cloud technology and hybrid architectures with our deep knowledge of analytics, image usability, cybersecurity, and long-term experience with cloud-based solutions, all managed by our team of experts to reduce friction for our customers.”

With a focus on adding security, flexibility and scalability to its offerings, Axis has also announced that it has built the next generation of its video management system (VMS) - AXIS Camera Station - on Axis Cloud Connect. Accordingly, Axis is extending its VMS into a suite of solutions that includes AXIS Camera Station Pro, Edge and Center. The AXIS Camera Station suite is engineered to more precisely match the needs of users based on flexible options for video surveillance and device management, architecture and storage, analytics and data management, and cloud-based services.

AXIS Camera Station Edge: An easily accessible cam-to-cloud innovation combining the power of Axis edge devices with Axis cloud services. It provides a cost-effective, easy-to-use, secure, and scalable video surveillance offering. It is reportedly easy to install and maintain, with minimal equipment on-site: only a camera with an SD card is needed, or an AXIS S30 Recorder Series device can be used, depending on requirements. This flexible and reliable recording solution offers straightforward control from anywhere, Axis claims.

AXIS Camera Station Pro: A powerful and flexible video surveillance and access management software for customers who want full control of their system. It gives them the flexibility to build the right solution on their private network, while also benefiting from optional cloud connectivity. It supports the complete range of Axis products and comes with all the powerful features needed for active video management and access control. New features include a web client, data insight dashboards and improved search functionality.

AXIS Camera Station Center: A way to manage and operate hundreds or even thousands of cloud-connected AXIS Camera Station Pro or AXIS Camera Station Edge sites from one centralised location. Accessible from anywhere, it enables aggregated multi-site device management, user management, and video operation. In addition, it offers corporate IT functionality such as Active Directory, user group management, and 24/7 support.

Axis Cloud Connect, the AXIS Camera Station offering, and Axis devices are all built with robust cybersecurity measures to ensure compliance with industry standards and regulations. In addition, with powerful edge devices and the AXIS OS working in tandem with Axis cloud capabilities, users can expect high-level performance with seamless software and firmware updates.

The new cloud-based platform and the solutions built upon it by both Axis and selected partners aim to create opportunity and value throughout the channel, allowing companies to utilise the cloud at a pace that makes the most sense for their evolving business needs.

Simon Rowley - 23 April 2024

How DCs can develop science-based goals – and succeed at them

by Anthea van Scherpenzeel, Senior Sustainability Manager at Colt DCS.

Sustainability has swiftly evolved over the last 10 years from a nice-to-have to a top business concern. The data centre industry is frequently criticised for its excessive energy usage and environmental effects. For example, data centres account for about a fifth of Ireland's total power use, while global data centre and network electricity consumption in 2022 was expected to be up to 1.3% of global final electricity demand.

It is clear that responsible roadmaps and measurable targets are needed to lessen the impact of data centres' energy use. But some in the industry really don't know where to begin. The IEA asserts that the data centre industry urgently needs to improve, citing a variety of reasons such as a lack of environmental, social, and governance (ESG) data, a lack of internal expertise, or a culture that values performance and speed over environmental credentials.

In order to effectively address environmental challenges at the rate required to realise a global net-zero economy, it is imperative that science-based targets and roadmaps are established. It is the industry's duty to move forward with the most sustainable practices possible so that ESG impacts will be as small as possible when demand rises as a result of new technology and developing markets. It is also expected that by 2027, the AI sector alone will use as much energy as the Netherlands, making it more important than ever to take steps that are consistent with the science underlying the Paris Agreement.

Being accountable under science-based targets

Science-based targets underline the short and long-term commitment of businesses to take action on climate change. Targets are considered science-based if they align with the goals of the Paris Agreement to limit global warming to 1.5°C above pre-industrial levels. More recently, the Intergovernmental Panel on Climate Change (IPCC) published its Sixth Assessment Report, reaffirming the near-linear relationship between the increase in CO2 emissions due to human activities and future global warming. Colt DCS resubmitted its science-based targets in 2023 in line with the latest Net-Zero Standard from the Science Based Targets initiative. The targets cover a range of environmental topics, including fuel, electricity, waste and water. These goals are vital not only for the data centres themselves but also to support customers in reaching their own net-zero goals.

Science-based targets and roadmaps tell businesses how much and how quickly they need to reduce their greenhouse gas (GHG) emissions if we are to achieve a global net-zero economy and prevent the worst effects of climate change. This extends to Scope 3 emissions – often the most challenging to track and manage – where data centre and business leaders must ensure that partners are on the same path to sustainable practices. Data centre leaders must commit to, develop, submit, communicate, and disclose their science-based targets to remain accountable.

Although science-based targets are vital to improving environmental impact, data centre operators must not forget to cover all three pillars of ESG in their sustainability strategies. Many organisations focus on the ‘E’ because its data is more granular and easier to assess; however, social and governance prove just as important. Whether that’s connecting with local communities, safeguarding, or ensuring that governance and reporting are up to scratch, a further focus on the ‘S’ and the ‘G’ can prove a key differentiator.

Turning targets into actions

With these science-based targets in place, it’s time for data centres to turn targets into actions. Smart switches to new, more sustainable materials, technologies, and energy sources reduce the impact of data centres on the planet. For instance, switching to greener fuel options, procuring renewable energy, or choosing refrigerants with a lower global warming potential goes a long way towards reducing a data centre’s carbon footprint.

A cultural change is also needed to ensure that sustainability becomes a vital part of business strategy. As well as shifting internal mindsets, collaboration with customers and suppliers will be crucial to meeting targets. Being on the same page, and not just thinking of sustainability as a tick-box exercise, must be at the industry’s core.

Going one step further, measuring progress is an essential part of the sustainability journey. Close relationships with partners and suppliers are a key part of effective reporting to track Scope 3 emissions – not just for data centres, but also for the businesses that use them. With the incoming EU Corporate Sustainability Reporting Directive (CSRD), this data sharing will prove more important than ever to gain a holistic overview of sustainability impacts along the entire value chain.

The future for the digital infrastructure market

The carbon footprint of the digital infrastructure market could be significantly reduced if the data centre industry adopted science-based targets and actions as best practice. The ultimate objective should be to embed actions that begin as soon as land is obtained, rather than just reviewing daily operations. Data centres must make the site lifecycle as sustainable as possible, encompassing construction, materials, equipment, and operations. Measuring embedded carbon is essential to tracking a project's overall impact.

Simon Rowley - 22 April 2024

Cloud is key in scaling systems to your business needs

by Brian Sibley, Solutions Architect at Espria

Meeting the demands of the modern-day SMB is one of the challenges facing many business leaders and IT operators today. Traditional, office-based infrastructure was fine up until the point where greater capacity was needed than those servers could deliver, vendor support became an issue, or the needs of a hybrid workforce weren’t being met. In the highly competitive SMB space, maintaining and investing in a robust and efficient IT infrastructure can be one of the ways to stay ahead of competitors.

Thankfully, with the advent of cloud offerings, a new scalable model has entered the landscape; whether it be 20 or 20,000 users, the cloud will fit all, and with it comes a much simpler, per-user cost model. This ability to integrate modern computing environments into the day-to-day workplace means businesses can now stop rushing to catch up, and with this comes the invaluable peace of mind that these operations will scale up or down as required. In addition, the potential cost savings and added value will better serve each business and help to future-proof the organisation, even when on a tight budget. Unlike traditional on-premises options, cloud service solutions are almost infinitely flexible and won’t require in-house maintenance.

When it comes to environmental impact and carbon footprint, data centres are often thought to be a threat, contributing to climate change; but in reality, cloud is a great option. The scalability of cloud infrastructure and the economies of scale it leverages facilitate not just cost savings but carbon savings too. In a traditional model, a server runs in-house at 20% capacity, using power 24/7/365 and pumping out heat. Cloud data centres, by contrast, are specifically designed to cater for multiple users more efficiently, utilising white space cooling, for example, to optimise energy consumption.

The bigger players, like Microsoft and Amazon, are investing heavily in sustainable, on-site energy generation to power their data centres, and are even planning to feed excess power back into the National Grid. Simply put, it’s more energy efficient for individual businesses to use a cloud offering than to run their own servers – the carbon footprint for each business using a cloud solution becomes much smaller.

With many security solutions now cloud-based too, security doesn’t need to be compromised. It can be managed remotely by SOC teams, either in-house or at the security provider (where resources are greater and specialist expertise runs deeper).

Ultimately, a cloud services solution, encompassing servers, storage, security and more, will best serve SMBs. It’s scalable, provides economies of scale and relieves in-house IT teams of many mundane yet critical tasks, allowing them to focus on more profitable activities.

Simon Rowley - 15 April 2024
