News in Cloud Computing & Data Storage

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Enterprise Network Infrastructure: Design, Performance & Security

News

Nasuni strengthens European footprint with French expansion

Nasuni, an enterprise data platform for hybrid cloud environments, has announced further investment and expansion into the French market, strengthening its European presence.

The company's data platform built for hybrid cloud supports enterprises facing growing unstructured data volumes with scalability, built-in security, fast edge performance, and AI-ready data.

Organisations across France are embracing AI, with the market set to grow by over 28% from 2024 to 2030. This is creating a critical need for enterprises to unlock unstructured data repositories and curate AI-ready data. In parallel, the evolving cybersecurity threat landscape in France (the fifth most attacked country by ransomware) is putting enterprise data at risk.

Operating in France since 2017, Nasuni is experiencing strong demand from organisations across France and Europe for its data platform. The company says that organisations rely on Nasuni to turn unstructured data into rich repositories in order to drive AI implementations across their businesses, while strengthening cyber resilience and cutting infrastructure costs.

Nasuni’s client roster already includes nine of France’s top 50 market cap companies, with customers including the likes of Pernod Ricard, TBWA, Colas, Safran, and France Habitation.

“We’re seeing a rapid rise of forward-thinking French businesses undergoing cloud transformations to accelerate AI implementations and secure data in the face of evolving ransomware threats,” says Chris Addis, Vice President of Sales, EMEA at Nasuni. “Our expansion in the French market across sales, technical sales, and partnerships reflects the growing demands we’re seeing for the Nasuni File Data Platform in supporting enterprises with these challenges and driving growth. We are excited to have now achieved critical mass in France, and this rapid growth alongside the growth of our partner network marks an exciting time for Nasuni as we continue to expand operations in Europe.”

Nasuni’s key partnerships in France include arcITek, an innovative French IT services provider. Together, Nasuni and arcITek support French businesses by accelerating cloud transformations, delivering robust cyber resiliency, and enabling successful AI implementations. Nasuni also works closely with hyperscalers Microsoft, Amazon Web Services (AWS), and Google Cloud, and supports enterprise customers across a host of different sectors in France, with a focus on automotive, manufacturing, consumer goods, engineering, and energy.

“It’s an exciting time as enterprises are rapidly adopting AI to drive efficiencies and innovation, and accelerating cloud transformations to enable this,” notes Taniel Doniguian, President at arcITek. “It’s great to see French businesses adopting modern data platforms to drive their business growth, and we’re delighted to work with Nasuni to provide this critical solution. We support enterprises in implementing these technologies and Nasuni has been a key partner of ours for a number of years, helping us to provide firms with the efficient and secure ability to access and manage their unstructured file data while readying for the adoption of GenAI.”

Nasuni’s expansion in the French market follows another year of strong momentum for the company, as it added more than 120 new large enterprise customers across all verticals in 2023.

Simon Rowley - 27 June 2024

Spirent announces addition of cloud-native function testing

Spirent Communications, a provider of test and assurance solutions for next-generation devices and networks, has announced the addition of cloud-native function (CNF) resiliency testing to its Spirent Landslide solution to continuously test the impact of CNF performance on the delivery of 5G standalone services.

The expansion of Landslide’s capabilities to include CNF testing within a single test solution platform will help further address the evolving needs of mobile network operators (MNOs) and Network Equipment Manufacturers (NEMs) as they transition to cloud-native environments.

The 5G standalone core, with its service-based architecture and embrace of the public and private clouds to support rapid time-to-market and scalable network operations, has the potential to unlock business opportunities with new ways to deploy, operate, and manage networks and services. While the new disaggregated and distributed architecture provides for a dynamic multi-cloud, multivendor ecosystem, it also presents a raft of daunting new challenges for service providers.

“The need for integration and interoperability across the 5G network means not only dealing with the complexity of how to incorporate new cloud infrastructures and virtual network functions with legacy systems and processes, but also how to test and optimise the performance of these new distributed infrastructures to ensure that they will all work together as they should,” says Anil Kollipara, VP of Automated Test & Assurance Product Management at Spirent.

“Cloud-native demands completely new processes to realise the efficiencies and operational benefits, while each CNF has unique performance expectations within the cloud environment. Adding cloud resiliency testing to Landslide’s already considerable testing and validation capabilities will help network service providers more easily tackle the challenges of embracing new cloud environments to deliver the high-quality 5G services that end users are demanding.”

Patrick Kelly, Founder and Principal Analyst at Appledore Research, adds, “Spirent Landslide’s CNF resiliency testing introduces a vital advancement in core 5G testing for mobile operators tackling the intricacies of 5G standalone and advanced networks. This enhancement streamlines complex pre-test and post-deployment procedures, optimising 5G services to ensure high performance and reliability in the ever-evolving CNF environment.”

Spirent Landslide is the only single-pane solution that can generate real-world 5G traffic and simultaneously impair the 5G cloud core to assess and correlate its impact on 5G services. This is especially important with cloud deployments, where failures are the norm and CNFs must be designed to be resilient to avert serious consequences for 5G service quality. MNOs and NEMs have traditionally relied on manual testing processes and self-developed automation scripts, leading to inefficiencies and increased testing complexity.

Ambiguity surrounding ownership of 5G services in CNF environments is also presenting additional concerns for service providers, requiring a fundamental shift in the testing mindset from reliability-focused legacy networks to resilient and scalable CNF networks.

Simon Rowley - 20 June 2024

Macquarie launches Dell and Azure hybrid cloud offering

In an Australian first, Macquarie Cloud Services, part of Macquarie Technology Group, has leveraged strategic relationships with Microsoft and Dell Technologies to launch Macquarie Flex.

Macquarie Flex is a unique hybrid solution powered by Microsoft Azure Stack HCI (hyperconverged infrastructure) and Dell Technologies APEX Cloud Platform for Microsoft Azure, providing workload flexibility, a single management plane, a consistent experience, 24/7 mission-critical support and evergreen compliance across public, private and hybrid cloud environments.

As the first Dell Technologies partner offering Azure Stack HCI in Australia, the launch seeks to assist organisations that have traditionally been challenged by the integration costs and complexity of hybrid cloud. Now, Macquarie Flex provides these organisations with a new solution to simplify hybrid cloud management, manage cloud spend and meet compliance and sovereign requirements.

“Macquarie Flex is the true definition of hybrid and represents a new era of hybrid cloud solutions,” says Macquarie Cloud Services Head of Azure, Naran McClung. “Azure and private cloud, two disparate environments promising different things for different purposes, are bound together to provide Australian businesses the choice, flexibility, and agility needed to succeed.”

“Macquarie Flex allows us to meet our customers wherever they are on their cloud journey,” adds Jonathan Staff, Head of Private Cloud at Macquarie Cloud Services. “Through our strong relationship with Dell Technologies and Microsoft, we can now arm Australian businesses with another lever to extract more value from their IT investment.”

Steven Worrall, Managing Director, Microsoft Australia and New Zealand, adds, “Macquarie Flex represents a significant advancement in hybrid cloud solutions. By leveraging Microsoft Azure Stack HCI, Macquarie Cloud Services is providing an unparalleled level of flexibility, security, and performance. This collaboration exemplifies our commitment to empowering Australian organisations with the tools and technologies needed to drive innovation and achieve their strategic goals.”

With Macquarie Flex, organisations can now deploy and run sensitive or compliance-driven workloads in a private cloud whilst leveraging the benefits of Azure; diversify deployment locations to improve commercial viability; treat private cloud workloads as first-class citizens of Azure; and monitor, alert, report, back up, secure, manage and govern virtual workloads through one integrated toolset.

This announcement follows the company’s recent launch of Macquarie Guard, a full turnkey Software-as-a-Service (SaaS) solution that automates practical guardrails into Azure services.

“Our purpose is to help customers who have been traditionally underserved and overcharged,” Naran states. “We’re thrilled to be leveraging our long-standing partnerships with Microsoft and Dell Technologies to deliver much-needed innovation to Australian businesses.”

Simon Rowley - 17 June 2024

Verkada launches in Germany, Austria, and Switzerland

Verkada, a provider of cloud-based physical security products, is expanding its operations to Germany, Austria, and Switzerland (DACH) under the leadership of Benjamin Krebs. This expansion comes as Verkada is seeing strong sales and partner growth across EMEA.

“Today, more than 24,000 customers across 85 countries trust Verkada as their safety solution,” says Eric Salava, Chief Revenue Officer at Verkada. “Establishing operations in the DACH region, which represents the largest economy in Europe, is a natural next step as we continue to grow internationally.”

As a result, Verkada’s Command platform and website are now available in German, providing German-speaking customers with full access to Verkada’s services in their native language so that they have the necessary resources to make informed security decisions.

“The DACH region is renowned for its unwavering commitment to both cyber and physical security,” says Benjamin Krebs, Managing Director, DACH at Verkada. “Customers across the region are looking for fully integrated security solutions that provide intelligent insights to keep their organisation safe in a privacy-sensitive way.

“As cloud-based physical security becomes the industry standard, I am looking forward to working with our growing team, customers, and partner network to help more organisations across the DACH region keep the communities they live and work in safe.”

Simon Rowley - 13 June 2024

Artificial Intelligence in Data Centre Operations

Arista unveils Etherlink AI networking platforms

Arista Networks, a provider of cloud and AI networking solutions, has announced the Arista Etherlink AI platforms, designed to deliver optimal network performance for the most demanding AI workloads, including training and inferencing.

Powered by new AI-optimised Arista EOS features, the Arista Etherlink AI portfolio supports AI cluster sizes ranging from thousands to hundreds of thousands of XPUs. Its highly efficient one- and two-tier network topologies deliver superior application performance compared to more complex multi-tier networks, while offering advanced monitoring capabilities including flow-level visibility.

“The network is core to successful job completion outcomes in AI clusters,” says Alan Weckel, Founder and Technology Analyst for 650 Group. “The Arista Etherlink AI platforms offer customers the ability to have a single 800G end-to-end technology platform across front-end, training, inference and storage networks. Customers benefit from leveraging the same well-proven Ethernet tooling, security, and expertise they have relied on for decades while easily scaling up for any AI application.”

Arista’s Etherlink AI platforms

The 7060X6 AI Leaf switch family employs Broadcom Tomahawk 5 silicon, with a capacity of 51.2Tbps and support for 64 800G or 128 400G Ethernet ports.

The 7800R4 AI Spine is the fourth generation of Arista’s flagship 7800 modular systems. It implements the latest Broadcom Jericho3-AI processors with an AI-optimised packet pipeline and offers non-blocking throughput with the proven virtual output queuing architecture. The 7800R4-AI supports up to 460Tbps in a single chassis, which corresponds to 576 800G or 1152 400G Ethernet ports.

The 7700R4 AI Distributed Etherlink Switch (DES) supports the largest AI clusters, offering customers massively parallel distributed scheduling and congestion-free traffic spraying based on the Jericho3-AI architecture. The 7700 represents the first in a new series of ultra-scalable, intelligent distributed systems that can deliver the highest consistent throughput for very large AI clusters.
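The capacity figures quoted above for the 7060X6 and 7800R4 follow directly from the port counts. As a quick sanity check, the sketch below multiplies ports by per-port speed; the helper name `aggregate_tbps` is illustrative only, and the calculation assumes the quoted capacity is simply port count times line rate in one direction (1 Tbps = 1,000 Gbps).

```python
def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Return total port bandwidth in Tbps, assuming 1 Tbps = 1000 Gbps."""
    return ports * gbps_per_port / 1000


# Port configurations quoted in the article: (number of ports, Gbps per port).
configurations = {
    "7060X6, 64 x 800G": (64, 800),
    "7060X6, 128 x 400G": (128, 400),
    "7800R4, 576 x 800G": (576, 800),
    "7800R4, 1152 x 400G": (1152, 400),
}

for name, (ports, speed) in configurations.items():
    print(f"{name}: {aggregate_tbps(ports, speed):.1f} Tbps")
```

Both 7060X6 configurations come to 51.2Tbps, and both 7800R4 configurations come to 460.8Tbps, in line with the "up to 460Tbps" figure.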

A single-tier network topology with Etherlink platforms can support over 10,000 XPUs. With a two-tier network, Etherlink can support more than 100,000 XPUs. Minimising the number of network tiers is essential for optimising AI application performance, reducing the number of optical transceivers, lowering cost and improving reliability.

All Etherlink switches support the emerging Ultra Ethernet Consortium (UEC) standards, which are expected to provide additional performance benefits when UEC NICs become available in the near future.

“Broadcom is a firm believer in the versatility, performance, and robustness of Ethernet, which makes it the technology of choice for AI workloads,” says Ram Velaga, Senior Vice President and General Manager, Core Switching Group, Broadcom. “By leveraging industry-leading Ethernet chips such as Tomahawk 5 and Jericho3-AI, Arista provides the ideal accelerator-agnostic solution for AI clusters of any shape or size, outperforming proprietary technologies and providing flexible options for fixed, modular, and distributed switching platforms.”

Arista EOS Smart AI Suite

The rich features of Arista EOS and CloudVision complement these new networking-for-AI platforms. The innovative software suite for AI-for-networking, security, segmentation, visibility, and telemetry features brings AI-grade robustness and protection to high-value AI clusters and workloads. For example, Arista EOS’s Smart AI suite of innovative enhancements now integrates with SmartNIC providers to deliver advanced RDMA-aware load balancing and QoS. Arista AI Analyzer powered by Arista AVA automates configuration and improves visibility and intelligent performance analysis of AI workloads.

“Arista’s competitive advantage consistently comes down to our rich operating system and broad product portfolio to address AI networks of all sizes,” says Hugh Holbrook, Chief Development Officer, Arista Networks. “Innovative AI-optimised EOS features enable faster deployment, reduce configuration issues and deliver flow-level performance analysis, and improve AI job completion times for any size AI cluster.”

The 7060X6 is available now. The 7800R4-AI and 7700R4 DES are in customer testing and will be available in 2H 2024.

Carly Weller - 6 June 2024

PagerDuty innovations set to improve operational efficiency

PagerDuty, a global provider of digital operations management, has introduced new capabilities and upgrades for the PagerDuty Operations Cloud.

The new capabilities are critical to enterprises that are modernising their operations centres, standardising automation practices, transforming incident management, and automating their remote-location operations. Now teams can take advantage of AI and automation and more powerful end-to-end incident management capabilities to anticipate, identify and resolve operational issues more quickly than ever.

The PagerDuty Operations Cloud combines Incident Management, AIOps, Automation, Customer Service Operations and PagerDuty Copilot (early access) into a flexible, easy-to-use platform designed for mission-critical, time-sensitive, high-impact work across IT, DevOps, security and business teams. The platform is enhanced by APIs that allow organisations to integrate with multiple technology stacks, delivering reliable availability for operational transformation.

“To remain competitive, companies must innovate rapidly and deliver an always-on, immediate digital experience that consumers expect. With the massive amount of noise coming in across teams and tools, it’s challenging to act quickly when your teams are mired in antiquated systems and manual processes, especially at scale,” says Jeffrey Hausman, Chief Product Development Officer at PagerDuty.

“The PagerDuty Operations Cloud makes it easy for business and IT leaders to cross the operational chasm by giving them advanced AI and automation capabilities to address some of the most complex, cross-functional processes of enterprise operations, which frees up time and resources to focus on the most mission-critical work to drive their business.”

PagerDuty Copilot (early access) - the generative AI assistant embedded in the PagerDuty Operations Cloud - augments and scales operations teams with AI and automation to manage mission-critical work faster and more effectively. By interpreting the results of automated diagnostics, providing responders with helpful incident context, drafting status updates and generating drafts of post-incident reviews with the click of a button, PagerDuty Copilot allows teams to eliminate time-consuming and repetitive tasks so they can focus on high-priority needs. If the user asks PagerDuty Copilot to generate a post-incident review, it can generate a draft in seconds - reduced from the hours it typically takes.

In addition, PagerDuty Copilot can provide a quick synopsis of the incident through simple prompts, which creates a summary view of the incident. Responders coming into incidents can leverage PagerDuty generative AI to rapidly summarise incident details, Slack notes and customer impact in a moment. PagerDuty Copilot saves responders precious time because they no longer need to spend time collecting dispersed data points and important details.

PagerDuty Operations Console (early access), the latest AIOps offering, serves as a single source of truth on newly created incidents, providing a live, shared view of operational health. Flexible filters ensure issues are immediately discoverable so that network operations centre (NOC) and ITOps teams can triage and take action on issues quickly to minimise business impact and protect customer experience. Teams can accelerate triage and resolution using valuable context surfaced directly in a single view, including the impact of an issue, key insights, the ability to run automated diagnostics, recommended actions, and the ability to predict the next likely incident.

PagerDuty Automation helps organisations standardise operations, improve efficiency, and enhance customer experiences by connecting and automating critical work across teams, systems and environments. PagerDuty Workflow Automation allows both developers and non-developers to fully automate complex and manual operations processes - including human steps such as gathering approvals, making decisions or providing updates - and can leverage runbooks in PagerDuty Runbook Automation as part of the process. As a result, teams reduce risks associated with human error and see dramatic improvements in operational efficiencies.

New capabilities for PagerDuty Runbook Automation enable organisations to build, deploy, run and manage automation jobs at scale to standardise automation across the business. Project-based runner management (early access) helps organisations increase the adoption of automation while allowing each team to operate efficiently within their particular technical requirements and dependencies.

PagerDuty Incident Management is an enterprise-grade solution that combines PagerDuty’s incident management product with Jeli’s post-incident review capabilities in a single end-to-end offering. PagerDuty empowers organisations to standardise processes with guided remediation and automated workflows directly from Slack, turning every incident into an opportunity to learn and improve. With the dynamic narrative builder, users can drag content from Slack directly into a post-incident review. Incident analysis is critical for identifying patterns in what happened and why, so that teams can adjust processes and avoid repeat issues. This sets organisations up with a more proactive approach to managing incidents that can deliver more resilient operations over time.

“Delivering speed and resiliency are key mechanisms to successfully driving growth and customer loyalty,” says Stephen Elliot, Group Vice President, I&O, Cloud Operations, and DevOps at IDC. “As organisations scale, they need to implement automation into their operations practices to continue to deliver uninterrupted, consumer-grade digital experiences.”

The PagerDuty Operations Console is currently in early access and will be generally available in Q3 of 2024.

Simon Rowley - 24 May 2024

Huawei Cloud announces Cairo region

Huawei Cloud today announced its Cairo Region, making Huawei the first company to launch a public cloud in Egypt.

The Cairo Region covers 28 African countries and contributes to Huawei Cloud’s support of digital transformation of vertical industries. Announced at the Huawei Cloud Summit in Cairo, the Cairo Region brings Huawei Cloud’s total number of regions to 33 worldwide.

Huawei says that the Cairo Region will provide innovative, reliable, secure, and flexible cloud services to individual, corporate and government users and serve as important digital infrastructure. Huawei Cloud has been offering innovative services on its platform, including the DataArts data governance pipeline, the GaussDB database, and the ModelArts AI development pipeline.

“With the Cairo Region, we are bringing our most innovative technologies to the country to further support Egypt to unlock the potential of digital transformation,” says Jacqueline Shi, President of Global Marketing and Sales Services at Huawei Cloud. “Furthermore, the launch of the Cairo Region is an important step in Huawei Cloud being able to enhance our services to customers across 28 African countries such as Egypt, Ethiopia and Algeria.”

Huawei Cloud also released a new Arabic large language model (LLM), the first 100-billion-parameter Arabic LLM in the industry. The new model is an important step in supporting companies in the region with the digital transformation of vertical industries. The automatic speech recognition (ASR) service supports functions covering over 20 Arabic-speaking countries, with the accuracy rate reaching 96%.

The model has been trained with native Arabic data, ensuring an accurate understanding of the local culture, history, knowledge, customs and more of the Arab world, rather than relying on a body of English work and translating. As an important part of Huawei Cloud’s Pangu model’s endeavour to support vertical industries with AI capabilities, the training of the model is based on industry datasets covering digital power, oil and gas, finance and more.

Jacqueline continues, “We believe that every country should have AI capabilities to preserve its local culture and that AI models should be developed and trained with local languages, enabling vertical industries to become more efficient.”

Huawei previously announced that it will invest $300 million (£235m) to establish the first public cloud region in Egypt, offering over 200 cloud services including AI platforms, data platforms, and development platforms. To nurture a thriving ecosystem, Huawei will invest $200 million (£157m) to support 200 local software partners, empower 1,300 channel partners and eventually build a prosperous local software and application ecosystem. In the region, Huawei will invest $30 million (£23m) to train 10,000 local developers and educate 100,000 digital professionals, to drive intelligent transformation.

To further accelerate ecosystem development, Huawei Cloud announced upgrades to its startup program in Egypt, including an advanced cloud platform, training programmes and business resources. The Huawei Cloud Startup Program assigns dedicated teams to advise on startups’ cloud adoption and subsidises their cloud consumption. A single startup can apply for cloud credits worth up to $150,000 (£117,000).

Huawei Cloud is the second largest cloud services provider in China, according to research firm Canalys. It has been steadily expanding its global footprint, opening new data centres in Turkey and Saudi Arabia last year and operating a total of 93 availability zones over 33 regions globally.

Huawei Egypt was founded in 2000. Huawei says that it is committed to collaborating with over 350 local partners and enriching the local ecosystem. To support ICT talent, the company has set up 90 ICT academies, a flagship ‘Seeds for the Future’ programme, and an ICT competition. These talent programmes have benefited over 35,000 Egyptian ICT professionals.

Simon Rowley - 21 May 2024

Edge Computing in Modern Data Centre Operations

Enterprise Network Infrastructure: Design, Performance & Security

News

News in Cloud Computing & Data Storage

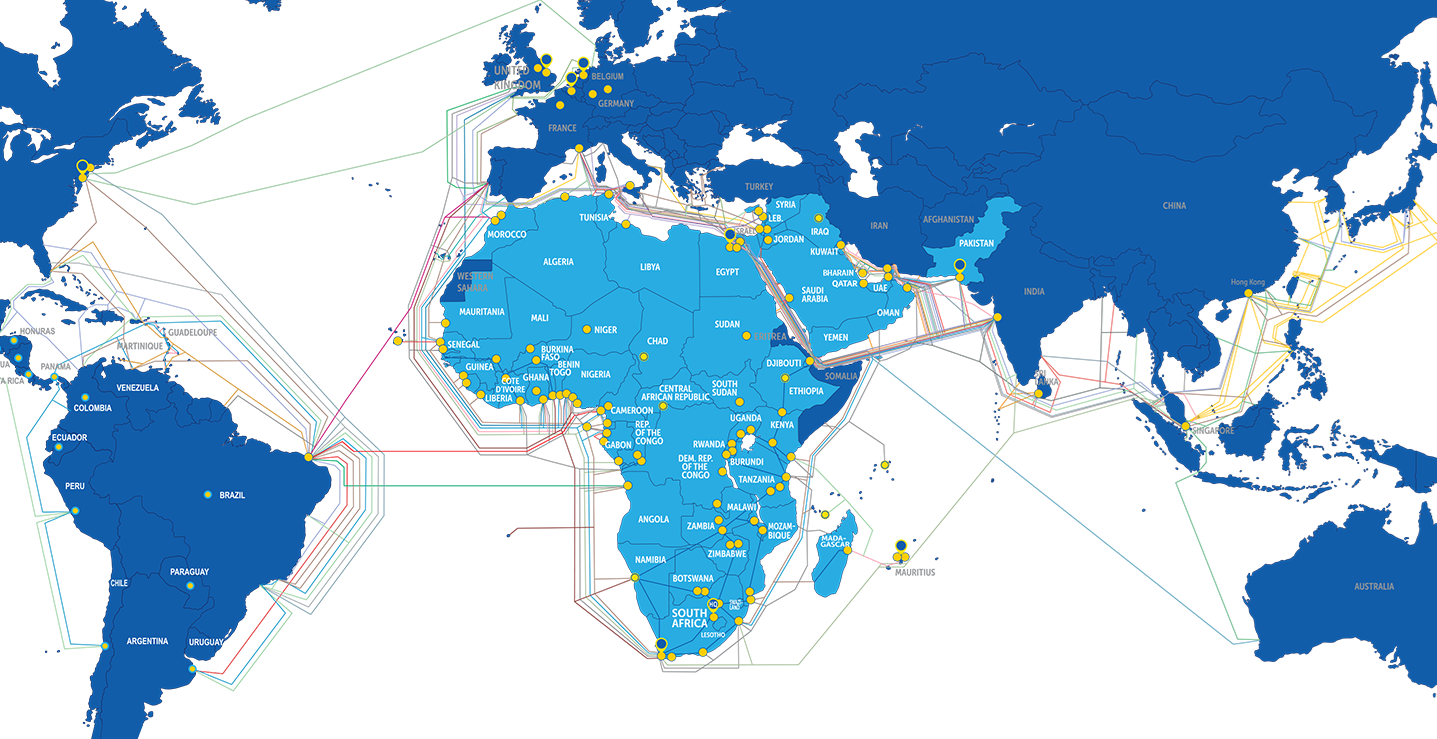

CMC Cloud to take Africa to the edge

CMC Networks, a global Tier 1 service provider, has launched CMC Cloud to deliver high-performance edge computing across Africa.

CMC Cloud is an Infrastructure as a Service (IaaS) solution designed to bring workloads closer to the end user and improve the performance of applications and services, without the need to invest in physical hardware.

CMC Cloud provides a decentralised edge infrastructure to reduce latency and bandwidth use, helping to enhance the performance and reliability of digital services across Africa. This is essential for real-time applications using transactional data, video streaming, and the Internet of Things (IoT). The IaaS model provides businesses with access to virtual servers, storage, and networking infrastructure on a flexible, scalable and agile basis.

“CMC Cloud has been purpose-built for the African continent. We understand local needs and challenges across markets, and our new IaaS offering is providing tailored solutions to address the specific requirements of African businesses,” says Marisa Trisolino, CEO at CMC Networks. “We want to remove the barriers to cloud adoption, simplifying and accelerating the growth of Africa’s digital economy. CMC Cloud is making this a reality with a flexible, scalable and cost-efficient model.”

CMC Networks makes it simple for both local and international businesses to enter and expand across African markets by addressing the data sovereignty laws that can otherwise limit cloud adoption. It offers cloud services that store and process data within country borders, helping organisations to comply with country-specific legal and regulatory requirements regarding data storage and privacy. These privacy and security measures are crucial for sectors such as finance, healthcare, and public services with sensitive data handling protocols.

“CMC Cloud, on top of our vast network footprint and ‘application-first’ AI core routing capabilities, delivers a holistic approach to digital transformation,” notes Geoff Dornan, CTO at CMC Networks. “Customers can easily adjust computing resources to meet changing business demands while optimising performance with smooth and responsive access to applications and data. The flexibility of CMC Cloud helps in scaling resources in accordance with demand, making it a cost-effective solution for start-ups, SMEs, and large enterprises alike. With CMC Cloud, we’re making it easier than ever for businesses to thrive in today’s digital economy.”

CMC Cloud provides various compute, storage and networking solutions including Virtual Machines, memory, block storage, object storage, IP addresses, Firewall as a Service (FWaaS), IP Premier Dedicated Internet Access (DIA), Distributed Denial of Service Protection as a Service (DDoSPaaS), Carrier Ethernet, MPLS and Multi Cloud Connect. CMC Networks’ Flex-Edge packages software-defined wide-area networking (SD-WAN), SDN, network functions virtualisation (NFV) and security into one solution, without physical presence required in-country. CMC Networks has the largest pan-African network, servicing 51 out of 54 countries in Africa and 12 countries in the Middle East.

Simon Rowley - 9 May 2024

Distributed cloud saves CloudReso 30% on storage costs

Cubbit, the innovator behind Europe’s first distributed cloud storage enabler, has announced that CloudReso, a France-based distributor of MSP security solutions, has reduced its cloud object storage costs by 30% thanks to Cubbit’s fully-managed cloud object storage, DS3.

The MSP can now offer its customers cloud storage with unequalled data sovereignty specs, geographical resilience, and double ransomware protection (server and client-side). Through this deployment, CloudReso has successfully avoided hidden costs traditionally linked to S3, including egress, deletion, and bucket replication fees.

Operating across all French-speaking countries, CloudReso manages over 790 TB of data and backs up 1,590 endpoints. CloudReso's expertise covers a wide range of vertical markets, including the public sector, providing extensive technical knowledge of S3 solutions and support.

With cyber-threats such as ransomware attacks growing in sophistication, targeting both client-side and server-side vulnerabilities with unprecedented precision, Cubbit technology has helped CloudReso protect data both client-side (Object Lock, versioning, IAM policy) and server-side (geo-distribution, encryption).

The MSP considered and assessed various public services over the years, including centralised S3 cloud storage offerings. These options incurred high fees for deleting data, and expenses were multiplied according to the number of sites needed for bucket replication (in CloudReso’s case, the configuration comprised three data centres across the Paris region). With Cubbit DS3, fixed storage costs include all the main S3 APIs, together with geo-distribution capacity, enabling CloudReso to save 30% on storage costs while providing a cloud storage solution with up to 15 nines (99.9999999999999%) of durability. Cubbit’s flat rate also helped CloudReso quickly estimate its monthly data volume and easily predict costs and ROI, enabling the MSP to make higher margins.
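To put the durability figure in context, the short Python sketch below converts a number of nines into an annual loss probability. It assumes the common convention that durability is quoted per object, per year; the article does not say how Cubbit measures it, and the object count of one billion is purely illustrative rather than a CloudReso figure.

```python
from fractions import Fraction


def annual_loss_probability(nines: int) -> Fraction:
    """Chance of losing one object in a year at the given number of nines.

    Assumes durability is quoted per object, per year (an assumption,
    not something stated in the article).
    """
    if nines < 1:
        raise ValueError("nines must be at least 1")
    return Fraction(1, 10 ** nines)


def expected_annual_losses(object_count: int, nines: int) -> Fraction:
    """Expected number of objects lost per year across a whole store."""
    return object_count * annual_loss_probability(nines)


def durability_percent(nines: int) -> str:
    """Render the durability as a percentage string, e.g. 3 -> '99.9%'."""
    if nines < 3:
        raise ValueError("this helper only formats three or more nines")
    return "99." + "9" * (nines - 2) + "%"


if __name__ == "__main__":
    nines = 15                      # "up to 15 nines", as quoted above
    objects = 1_000_000_000         # hypothetical store size
    print("Durability:", durability_percent(nines))
    print("Annual loss probability per object:", annual_loss_probability(nines))
    print("Expected objects lost per year:", expected_annual_losses(objects, nines))
```

Exact fractions are used instead of floating point because subtracting a value this close to 1 from 1 loses precision in ordinary floats.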

Other on-prem object storage options, with their infrastructure and maintenance costs, did not offer a perfectly secure and available solution for the MSP’s needs. A major prerequisite for CloudReso's choice of partner was the inclusion of all the key S3 APIs, including Lifecycle Configuration (which sets the lifecycle of data and objects) and Object Lock, which adds an extra layer of protection against ransomware and prevents unauthorised data access or deletion. Other providers did not deliver these capabilities as comprehensively or as cost-effectively, whereas Cubbit offers an extensive range of S3 object store features at one of today's most competitive prices. With Cubbit's GDPR compliance and geo-fence capabilities, CloudReso can now comply with the regional regulations and stringent laws affecting the industries in which its customers operate, enabling the MSP to create new revenue streams.
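For readers unfamiliar with the two S3 features named above, the following Python sketch builds request bodies in the shape used by the standard S3 Lifecycle Configuration and Object Lock APIs. It is illustrative only: the prefix, retention periods and bucket name are hypothetical, and the article does not describe how CloudReso actually configures its buckets or which fields Cubbit DS3 accepts.

```python
import json


def lifecycle_configuration(prefix: str, expire_days: int, noncurrent_days: int) -> dict:
    """Build an S3-style lifecycle rule for objects under `prefix`.

    Current versions expire after `expire_days`; older versions are
    removed `noncurrent_days` after they are superseded.
    """
    return {
        "Rules": [
            {
                "ID": f"expire-{prefix.strip('/') or 'all'}",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Expiration": {"Days": expire_days},
                "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_days},
            }
        ]
    }


def object_lock_configuration(mode: str, days: int) -> dict:
    """Build an S3-style default Object Lock retention rule."""
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError("mode must be GOVERNANCE or COMPLIANCE")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


if __name__ == "__main__":
    # Hypothetical values, chosen only for illustration.
    lifecycle = lifecycle_configuration("backups/", expire_days=365, noncurrent_days=30)
    lock = object_lock_configuration("COMPLIANCE", days=30)
    print(json.dumps(lifecycle, indent=2))
    print(json.dumps(lock, indent=2))
    # With boto3 installed and an S3-compatible endpoint (assumed here),
    # these bodies would be sent roughly as:
    #   s3 = boto3.client("s3", endpoint_url=ENDPOINT)
    #   s3.put_bucket_lifecycle_configuration(
    #       Bucket="example-bucket", LifecycleConfiguration=lifecycle)
    #   s3.put_object_lock_configuration(
    #       Bucket="example-bucket", ObjectLockConfiguration=lock)
```

In the standard S3 model, a default retention rule in COMPLIANCE mode prevents a locked object version from being overwritten or deleted until the retention period ends, which is why Object Lock is often used as a ransomware safeguard.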

Gilles Gozlan, CEO at CloudReso, says, “To store our data, we've used US-based and French-based cloud storage providers for a long time, but they did not come with the geographical resilience, data sovereignty and simple pricing that Cubbit offers. This has enabled us to estimate our ROI more easily, and to generate a 30% saving on our previous costs for equivalent configuration. Cubbit will enable us to enter new data intensive, highly-regulated markets. Moreover, Cubbit has a quality and response time of support that we haven't found with any other provider. For these reasons we haven't used any other S3 provider since we started working with Cubbit.”

Richard Czech, Chief Revenue Officer at Cubbit, adds, “Together with the explosive growth of unstructured data, European organisations are now facing an increasing number of challenges: cyber-threats, data sovereignty, and unpredictable costs, to name a few. CloudReso has our DS3 as a true obstacle remover, and we are working together to bring it to organisations all over Europe.”

Moving forward, CloudReso expects to develop the adoption of Cubbit throughout all Francophone territories. Soon, CloudReso will also adopt Cubbit’s new DS3 Composer to provide its customers with on-prem, country-clustered innovations with complete sovereignty and compliance, including NIS2, GDPR, and ISO 27001.

Simon Rowley - 8 May 2024

Wasabi delivers flexible hybrid cloud storage solutions

Wasabi Technologies, the hot cloud storage company, has announced a collaboration with Dell Technologies, via the Extended Technologies Complete program, to bring affordable and innovative hybrid cloud solutions for backup, data protection, and long-term retention to customers.

In a time marked by the exponential growth of data, organisations worldwide face the need for efficient and cost-effective storage solutions. The Wasabi-Dell collaboration addresses this challenge by providing flexible, efficient hybrid cloud solutions enabling users to optimise their data management processes while simultaneously reducing overall costs.

“Dell is a clear industry leader with a broad and deep portfolio of transformative technology,” says David Friend, co-founder and chief executive officer at Wasabi Technologies. “This collaboration will extend the reach of Wasabi's cloud storage to a broader audience, catering to users in search of dependable, economical solutions for safeguarding their data archives over the long haul.”

The cloud has become the preferred location for long-term data backup retention and disaster recovery. Dell’s PowerProtect Data Domain appliances natively tier data to Wasabi, enabling customers to benefit from a complete data protection solution for on-premises storage with long-term cloud retention. In addition, Wasabi integrates with Dell NetWorker CloudBoost to bring long-term retention in the cloud to existing NetWorker customers.

“The collaboration between Wasabi Technologies and Dell Technologies presents a powerful solution for organisations grappling with data growth,” says Dave McCarthy, research vice president at IDC. “Organisations need a hybrid cloud infrastructure that is efficient and cost-effective, and that has the ability to scale with them during their data management journey. This collaboration meets these challenges head on.”

Carly Weller - 7 May 2024
