Cooling

Data Centres

Carrier launches new chiller range for data centres

Carrier has launched a new range of high performance chillers for data centres, designed to minimise energy use and carbon emissions while cutting running costs for operators. Available in capacities from 400kW to 2100kW, the Eurovent and AHRI certified units are based on proven Carrier screw compressors, ensuring efficient, reliable operation and long working life. Carrier is a part of Carrier Global Corporation, global leader in intelligent climate and energy solutions.

The new AquaForce 30XF air-cooled screw chillers are equipped with an integrated hydronic free-cooling system and variable-speed inverter drives, which combine to deliver energy savings of up to 50% during total free-cooling operation.

The chillers, available with the ultra-low global warming potential refrigerant HFO R-1234ze(E), are claimed to offer excellent resilience, with an ultra-fast recovery system that, in the event of a power cut, can resume 100% of cooling output within two minutes of power being restored. This ensures cooling is maintained for critical servers and data is protected.

The chiller can operate in a wide range of ambient conditions, from -20 to 55°C, making it suitable for use in cold, temperate and hot climates, while Carrier's smart monitoring system ensures optimum efficiency and performance. Variable-speed fans further increase energy efficiency and support quiet operation at part-load.

To further enhance chiller performance, the units are equipped with a dual power supply (400/230V or 400/400V) with electronic harmonic filter. The filter automatically monitors and maintains the quality of the power supply, preventing damage to the chiller's electrical components and improving overall system efficiency.

The hydronic free-cooling system is available in a glycol-free option for applications where glycol cannot be used. This operates with glycol in the outdoor unit only, and enables the size of the glycol-free indoor units to be reduced by up to 15%.

"The new AquaForce 30XF has been designed specifically to meet the strict environmental, efficiency and reliability requirements of data centre applications, and ensure servers keep running cool around the clock," says Raffaele D'Alvise, Carrier HVAC Marketing and Communication Director. "The chiller helps data centre operators achieve their budget and sustainability goals by reducing energy consumption and carbon emissions, while providing excellent resilience and extended working life."

The AquaForce 30XF is part of Carrier's comprehensive range of cooling solutions for data centres, which includes AquaSnap 30RBP air-cooled scroll chillers, AquaEdge 19DV water-cooled centrifugal chillers and AquaForce 61XWH-ZE water-cooled heat pumps, plus computer room air conditioners, air handlers and fan-walls, all supported by Carrier BluEdge lifecycle service and support to maintain optimum performance.

Isha Jain - 22 March 2024

Cooling

Data Centres

News

LiquidStack opens new facility to scale liquid cooling production

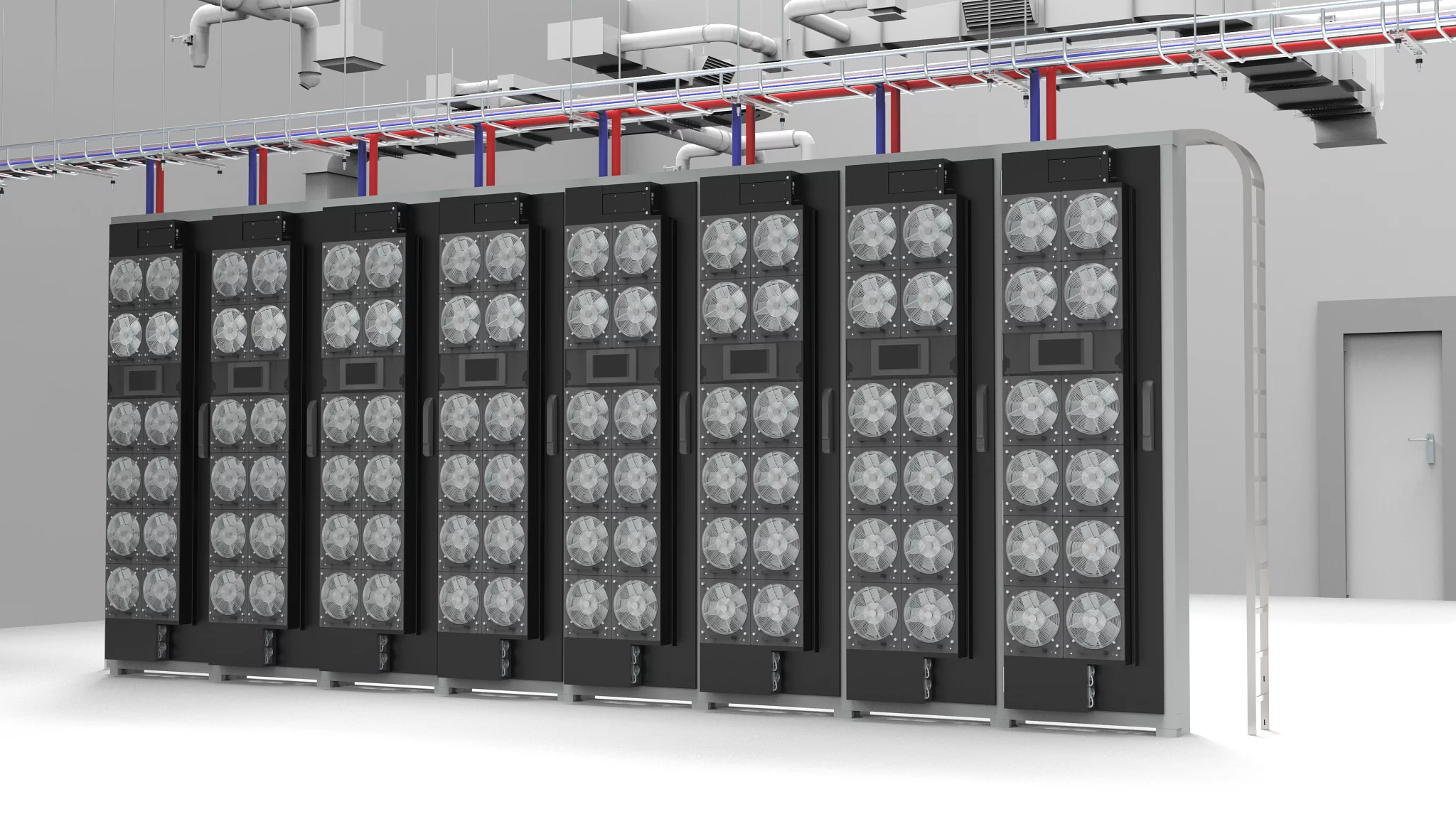

LiquidStack, provider of liquid cooling solutions for data centres, has announced its new US manufacturing site and headquarters located in Carrollton, Texas. The new facility is a major milestone in its mission to deliver high performance, cost-effective and reliable liquid cooling solutions for high performance data centre and edge computing applications. With a significant uptick in liquid cooling demand associated with scaling generative AI, the new facility enables it to respond to customers' needs in an agile fashion, while maintaining the standards and services the company is known for.

LiquidStack’s full range of liquid cooling solutions is being manufactured on site, including direct-to-chip Coolant Distribution Units (CDUs), single-phase and two-phase immersion cooling solutions and the company’s MacroModular and MicroModular prefabricated data centres. The site will also host a service training and demonstration centre for customers and its global network of service engineers and partners.

“We are seeing incredibly high demand for liquid cooling globally as a result of the introduction of ultra-high TDP chips that are driving the scale and buildout of generative AI. Our investment in this new facility allows us to serve the rapidly growing market while creating new, high-skilled jobs right here in Carrollton,” says Joe Capes, CEO, LiquidStack.

The new manufacturing facility and headquarters occupies over 20,000sqft. It has been in operation since December 2023, and a formal ribbon cutting ceremony will be held on March 22, 2024. Expected attendees include members of the city council and the Metrocrest chamber of commerce, as well as LiquidStack customers and partners.

Isha Jain - 5 March 2024

Cooling

Data Centres

News

Daikin boosts data centre cooling solutions

Daikin has enhanced its product and services offer for data centre cooling in response to soaring demand from the sector and to keep pace with rapid predicted growth.

The consultancy McKinsey & Company forecast that the global data centre market would grow by at least 10% a year throughout the rest of this decade, with a total of $49bn likely to be spent on construction and fitting out of new facilities.

This rapid expansion is being driven by the growth in AI applications and cloud computing, which in turn means healthy demand for cooling solutions tailored to the specific needs of this energy intensive area.

Minimising energy and water use

As well as keeping data centres cool to protect their sensitive equipment, operators are under increasing pressure to minimise energy and water use – with sustainability a growing preoccupation.

This has been reflected in increased demand for the company’s free cooling solutions, which take advantage of low ambient air temperatures to reduce the run time of chillers, in turn, cutting energy use and extending the operating life of equipment – a key consideration for reducing ‘whole life carbon’.

Daikin offers different types of free cooling, including glycol-free systems, to meet the needs of critical applications like data centres. It also uses sophisticated plant management systems supported by AI to keep chillers operating close to their optimal performance level and to balance total capacity with actual load. This improves energy savings by as much as 20%, compared with traditional chiller sequencing control by BMS.

It has also extended other aspects of its products and services to meet evolving end user requirements and now offers two ranges of air-cooled chillers specifically for data centres.

Precise modulation of chiller loads

Its chiller range includes screw compressor units equipped with variable frequency drives (VFD), which are designed and manufactured in-house and mounted onto the compressor for better reliability and efficiency.

This allows precise modulation of cooling loads, with capacities up to 2150kW. The range also includes scroll compressor chillers with capacities up to 1344kW, which are ideally suited to a range of applications from refurbishment projects to major new developments.

Daikin chillers deliver up to +30°C supply water temperature, and can operate in a wide band of outside temperature conditions between –35°C and +55°C. This flexibility is particularly valued by large data centre operators juggling with ever denser concentrations of servers and ancillary equipment.

It also offers the Pro-W, a range of high-efficiency computer room air handling (CRAH) units manufactured in both the UK and Italy. The range offers cooling capacities from 200kW to 700kW, making the units suitable for even the very largest data centres.

These capacities are achieved using just four core unit sizes, which means the company can use a dedicated range of standardised components, including built-in EC fans and cooling coils, to guarantee manufactured quality and short production lead times.

Despite their large cooling capacities, the Daikin products are compact, which allows them to be fitted in restricted areas. The company also offers a range of control options specifically tailored for data centres to ensure the chiller plant delivers 24/7 cooling operating in the right mode by adjusting continually to real time conditions. The chillers also come with a choice of refrigerant to further meet environmental concerns.

Comprehensive after sales support

Daikin provides comprehensive after-sales support including access to its remote monitoring ‘Daikin On Site’ (DOS) service so any performance or safety risks can be flagged up to keep the system running as intended.

DOS captures live operational data from the data centre and combines it with statistical predictions using trend analysis. This allows the service team to develop a preventative maintenance schedule to ensure the efficiency and reliability of the critical equipment, avoiding costly downtime and major repairs.

Visit Daikin on Stand D530 at Data Centre World, Excel, London on March 6 and 7 to see all the specialised cooling solutions on offer.

Isha Jain - 4 March 2024

Cooling

Data Centres

Playing it cool: A story of not giving up

This case study looks at the success that Danfoss Power Solutions had in helping iXora in its development of a liquid cooling system, specific to the requirements of typical data centre operations.

The Caribbean island of Curaçao enjoys a tropical climate, which may be pleasant for many people, but can be disastrous for electronics. Heat and long hours of direct sunlight, combined with tropical rains and salty, moist air, can very quickly corrode electrical equipment and ruin components.

The founders of iXora were well aware of these challenges when they set about developing their high-performance amplifier system. The solution needed to not only withstand the challenging Curaçao climate, but also offer users easy transport from event to event. Air cooling requires fans and other moving parts that are subject to wear and tear, so iXora looked at alternative solutions.

The company developed a closed-circuit immersion cooling system, which provided the necessary cooling at an extremely high efficiency. Because the system was closed, it was also easy to set up, take down, and transport, and it effectively shielded electronic components from ambient conditions.

Everything was going well for iXora, until COVID-19 struck in 2020; it wiped out the live event scene almost overnight. Company founders, Vincent Beek and Vincent Houwert, decided to use the opportunity to relocate to the Netherlands to seek further investment and finesse the design of the amplifier system.

Immersion cooling: An introduction

In recent years, digital technologies such as cryptocurrency and artificial intelligence have emerged from relative obscurity into the mainstream, producing vast amounts of data. As a result, data centres have sought to increase capacity while simultaneously improving efficiency. Air cooling had long been the norm for data centre cooling, but many data centre facilities have transitioned to, or are exploring, liquid cooling as a more efficient and cost-effective alternative.

There are two main approaches to liquid cooling: direct liquid cooling and immersion cooling. Direct liquid cooling involves circulating coolants to specific components. While more efficient than air cooling, it is often not suitable for high-intensity processing operations. Immersion cooling typically involves submerging electronics in a bath of non-conductive liquid. It provides far greater efficiency and cooling density compared to air cooling and requires no fans or other active cooling components. However, designs are often complex, custom, and expensive, requiring large baths in which to submerge server racks entirely.

Pivoting to a new model

iXora realised that its closed system could provide the perfect solution for data centres. It could substantially improve the efficiency and capacity of server hardware without the need for significant infrastructure redesign, and at a much lower cost compared to the immersion cooling systems available at the time.

The company, now joined by data centre expert, Job Witteman, began designing a prototype closed immersion cooling system for the data centre market, based on its existing amplifier cooling solution. Rather than the traditional horizontal rack system, iXora instead sought to develop a chassis containing vertical cassettes, in which dielectric oil would cool the electronic components. This required a vastly more sophisticated design compared to iXora’s previous solutions for audio equipment, and a deep understanding of the specific requirements of typical data centre operations. Furthermore, because the prototype would be the first of its kind, it also required the design and development of new systems and components unique to the application.

Prototype challenges

One example of this was the new system’s heat exchanger, required to transfer heat away from each cassette. The design required two couplings per cassette to connect them both to the chassis, and to the facility’s wider cooling equipment. Achieving a low pressure drop in these couplings was vital, as each small pressure drop could cause disruptions in the cooling performance. Cassettes also required easy connection and disconnection for maintenance, with zero leakage, as any liquid coming into contact with data centre equipment could pose a serious risk to operations.

iXora approached Danfoss for help in the development of custom couplings for its system. As well as zero leakage, these also needed to provide precision alignment, a low connect force, and a compact size. Drawing on its extensive experience in coupling technology, Danfoss product engineers were able to calculate exactly what was required based on the flow rate, maximum coupling size, pressure requirements, and heat exchange rate, alongside a range of other factors specific to data centre cooling applications. Based on these calculations, Danfoss concluded that aluminum dry break quick-disconnect couplings would be the most suitable solution. These were then manufactured to specification by Danfoss.

Success in partnership

The prototype was a success, with the Danfoss couplings outperforming all other couplings tested. iXora’s Head of Operations and Sales, Vincent Beek, explains, “The Danfoss couplings achieved full alignment, easy connection and disconnection and, crucially, zero leakage. This is vital for maintenance. With a conventional full immersion system, it can be tricky getting servers out of the liquid bath. Ours is effectively plug and play, so you can just disconnect it and carry it straight to the workshop.

“We’re now onto the field-testing stage, with a global pilot to follow this year, and then next year we’ll be scaling up to mass production. None of this would have been possible without Danfoss. As a start-up, it can be difficult to get the attention of larger companies, particularly when it comes to help with R&D. They saw our solution, immediately bought into it, and we’ve benefited greatly from their expertise.”

Jeroen Veraart, Senior Sales Development Manager, Danfoss Power Solutions, says, “This was a true partnership. iXora benefited from us for sure, but we’ve learned a lot from them as well. As a result of working with them we’ve identified new ways in which we can improve and refine our products further.

“iXora were a dream to work with,” Jeroen continues. “With a start-up there’s always an element of risk, but they were enthusiastic, willing to learn, and always coming up with creative ideas and solutions. At the end of it they’ve got a really impressive system. Immersion cooling is clearly the future for data centres, and in just a few years, I expect that market will grow considerably. This has been a really rewarding partnership for both parties, and I look forward to seeing it continue to flourish.”

Isha Jain - 12 February 2024

Cooling

Data Centres

Iceotope achieves chip cooling industry milestone at 1000W

Iceotope has achieved chip-level cooling up to 1000W and beyond. The results, published in 'Achieving chip cooling at 1000W and beyond with single phase precision liquid cooling', validate how single-phase liquid cooling can achieve 1000W cooling and demonstrate the thermal performance of precision liquid cooling.

The data centre industry is looking to liquid cooling as the solution for solving challenges such as the compute densities required for AI, the overall rising thermal design power of IT equipment, and the need for sustainable cooling solutions. Data centre operators must know they are future-proofing their infrastructure investment for 1000W to 1500W to 2000W CPUs and GPUs in the coming years. The testing conducted by Iceotope Labs has demonstrated how precision liquid cooling technology is expected to meet these challenges.

Key findings from the testing include:

At a flow rate of 7l/min, Iceotope's copper-pinned KUL SINK achieved a thermal resistance of 0.039K/W when a 1000W heat load was applied to Intel’s Airport Cove thermal test vehicle (TTV), a thermal emulator for the 4th Gen Intel Xeon Scalable processors. This translates to an 11.4% improvement in thermal resistance, compared to a like-for-like test of a tank immersion product containing a forced-flow heatsink.

Thermal resistance remained almost constant at a given flow rate as the power was increased from 250W to 1000W.

Based on the consistency of the thermal resistance from 250W to 1000W, the results give high confidence that testing at 1500W will yield the same consistency.
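As a rough illustration of what these figures mean, the sketch below assumes the usual definition of thermal resistance as temperature rise per watt of heat load, so that the temperature rise is simply resistance multiplied by power. The function names are hypothetical, and the baseline resistance for the comparison product is back-calculated from the quoted 11.4% figure on the assumption that the improvement is expressed relative to that product; it is not a value taken from the published results.

```python
def temperature_rise_k(thermal_resistance_k_per_w: float, power_w: float) -> float:
    """Temperature rise in kelvin for a given heat load.

    Assumes the standard definition: delta_T = R_th * P.
    """
    return thermal_resistance_k_per_w * power_w


def implied_baseline_resistance(resistance_k_per_w: float, improvement: float) -> float:
    """Back-calculate the comparison product's thermal resistance.

    Assumption (not stated in the article): the improvement is measured
    relative to the baseline, i.e. R_new = R_baseline * (1 - improvement).
    """
    return resistance_k_per_w / (1.0 - improvement)


R_TH = 0.039  # K/W, quoted at a flow rate of 7 l/min

# If thermal resistance stays constant, temperature rise scales linearly with power.
for power_w in (250, 500, 1000):
    rise = temperature_rise_k(R_TH, power_w)
    print(f"{power_w:>5} W -> temperature rise of {rise:.1f} K")

baseline = implied_baseline_resistance(R_TH, 0.114)
print(f"Implied comparison resistance: {baseline:.4f} K/W")
```

Under these assumptions, a near-constant thermal resistance is what allows the temperature rise at higher power levels to be estimated by simple scaling, although the article itself only reports testing up to 1000W.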

“Iceotope precision liquid cooling technology has achieved an important industry milestone by demonstrating enhanced thermal performance capability compared to other competing liquid cooling technologies,” says Neil Edmunds, Vice President of Product Management at Iceotope.

“We are confident that future testing of our standard solution at elevated power levels will demonstrate further inherent cooling capability. Iceotope is also continuing to develop new solutions which enable even higher roadmap power levels to be attained in a safe, sustainable and scalable way.”

“The ability to cool 1000W silicon is a key milestone in building the runway for silicon with higher thermal design power and enabling efficient data centre and Edge cluster solutions of the future,” says Mohan J Kumar, Intel Fellow.

Isha Jain - 8 February 2024

Cooling

Data Centres

Concentric AB wins business nomination in liquid cooling market

Concentric AB has announced that it has received its first new multi-year business nomination from a leading global OEM customer in the data centre liquid cooling market. The value of this new business is 63MSEK per year, and the start of production is planned in the first quarter of 2025. This strategic customer selected Concentric’s seal-less e-pump based on its innovative design, proven endurance and dependability for its new data centre liquid cooling application.

The global data centre market is expected to grow at a CAGR of 10-13% over the next six years. There is a clear trend towards liquid cooling in these applications, and it is anticipated that liquid cooling in data centres will grow at a faster rate of 24.4% during the same period, according to a report by MarketsAndMarkets Research.
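To put these growth rates side by side, the sketch below compounds each rate over the six-year period mentioned above. It assumes that the 24.4% figure is also a compound annual rate and that all rates hold steady for the full period; the function name is hypothetical and the output is an illustration of compounding, not a forecast.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return the size multiple after compounding an annual rate for `years` years."""
    return (1.0 + cagr) ** years


YEARS = 6  # "over the next six years"

# Rates quoted in the text; 24.4% is assumed here to be a CAGR as well.
scenarios = {
    "Data centre market (low, 10%)": 0.10,
    "Data centre market (high, 13%)": 0.13,
    "Data centre liquid cooling (24.4%)": 0.244,
}

for label, rate in scenarios.items():
    multiple = growth_multiple(rate, YEARS)
    print(f"{label}: about {multiple:.2f}x after {YEARS} years")
```

On these assumptions, the liquid cooling segment would roughly double its share of the overall market over the period, which is the trend the paragraph describes.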

AI has redefined the way chips are designed and utilised in the semiconductor industry, leading to optimised energy efficiency and performance for larger datasets. As performance requirements increase, so does the need for cooling. Liquid cooling is more effective than air cooling in handling a data centre's growing densities, as these systems dissipate heat directly from the heat-generating components through the coolant, allowing customers to achieve precise temperature control, unaffected by external conditions.

“This first business nomination from a global market leading OEM for data centre cooling systems is another testimonial of the successful execution of our growth plans into new markets. As with our previous wins in energy storage applications, data centres are another new market where our existing products, which are already proven to manage similar liquid cooling challenges, can fulfil the customer’s needs. This new business serves as a significant gateway for Concentric into this highly attractive and fast-growing market and I am extremely proud of our global sales and engineering team, who has developed this new solution with the customer, based on an existing Concentric product,” says Martin Kunz, President and CEO Concentric AB.

Isha Jain - 29 January 2024

Cooling

Data Centres

Time to prepare data centre chillers for cool weather corrosion!

The approach of fall and winter means cooler temperatures are on their way. For data centres in northern climates, that also means some chiller systems used to cool excess server heat can be turned off to save energy. Cortec has encouraged maintenance personnel to follow seasonal layup best practices in order not to let corrosion costs swallow up those energy savings.

Corrosion problems during chiller shutdown

Seasonal shutdown of data centre chillers makes them vulnerable to internal corrosion for two key reasons. Firstly, the normal water treatment chemicals are no longer running through the system. Secondly, the empty system may have residual moisture that incites corrosion throughout the pipes and bundles. Resulting corrosion problems may not show up until later, such as when the chiller system is turned back on and corrosion products plug the system or leaks appear. Even if higher iron content in the water is the only sign that corrosion has occurred, the underlying metal loss can eventually lead to shortened service life for the chiller.

Seasonal layup made easy

The Cooling Tower Frog is an excellent low-labour method of slowing down or even eliminating offline corrosion. All that is needed is to place these water-soluble pouches in the chiller’s drained water box, slit open the water-soluble packaging, and shut all chiller openings. During the layup period, vapor phase corrosion inhibitors will diffuse out of the Cooling Tower Frog and form a protective molecular layer on metal surfaces inside the chiller. At start-up, the chiller can simply be filled and started as normal without removing the Cooling Tower Frog, which will dissolve in the makeup water.

Offset corrosion and energy costs

Cooler winter temperatures offer a great break to data centres facing high energy costs from chillers. To make the most of those energy savings, data centre managers should also do their best to offset potential corrosion problems and costs simply by taking advantage of the Cooling Tower Frog. Contact Cortec to learn more about this easy seasonal chiller layup solution.

Isha Jain - 11 December 2023

Cooling

Data Centres

News

nVent's new rear door coolers offer scalable solution for high-density racks

nVent has announced the launch of its RDHX PRO rear door cooling unit, a new high-performance solution offering the capability to upgrade data centres with up to 78kW high-density racks, meeting the requirements for the growing use of AI-enhanced applications, demands for higher energy efficiency and sustainability, and the need for greater data centre space utilisation.

Rear door cooling solutions are increasingly popular as a pay-as-you-go method for retrofitting increased data centre cooling performance, in many cases without the need for additional re-engineering of the existing facility mechanical design. When installed as a primary method of heat removal, rear door coolers eliminate much of the need for mechanical equipment including fans, blowers and CRAHs, to provide a more optimised cooling solution aligned with the exact requirements for individual racks, at the same time reducing noise and energy waste.

“The RDHX PRO RDC is an innovative rear door cooling solution which responds to trends currently converging to make life more complex for those managing data centres,” says Marc Caiola, nVent's Vice President of Data Cooling and Networking. “Our new RDC combines the ability to upgrade cooling capacity without significant capital investment and re-equipping of existing data centre space; the opportunity to reduce the amount of power used for cooling IT, as well as the expense and associated emissions; and the advantage of an easily maintainable cooling solution designed and built for high availability.”

RDHX PRO from nVent, bringing flexibility to rack cooling

One of the features of RDHX PRO RDC is the ability to operate comfortably with 57°F (14°C) warm water cooling, making it environmentally friendly. By utilising free cooling, the device significantly reduces the amount of energy required to cool the data centre, not only reducing overall data centre power consumption, but also the carbon footprint of operations.

The future-proofed solution can also be combined with direct-to-chip liquid cooling deployments and nVent’s liquid cooling CDU 800, for customers who are moving towards a hybrid approach to cooling, and especially those looking to maximise heat reuse opportunities.

RDHX PRO RDC is lab tested with full results available upon request.

Rear door cooling for maximum compatibility and availability

The new rear door cooling units from nVent are offered in a range of standard sizes for use with 42U, 47U, 48U and 52U data centre racks, in both 600mm and 800mm widths. The coolers themselves are 250mm deep, with a dry weight of 200kg. Because rear door coolers are compact and do not require any additional floor space or ceiling headroom, they can free up white space, as CRAH units and other room cooling equipment may be eliminated from the data centre.

Each unit has a maximum power draw of 1500W and features 12 axial brushless DC fans. The large number of fans means that each one does less work than it would in a design with fewer, larger fans, reducing stress on the electromechanical devices and increasing their lifecycle. In the event failures do occur, nVent has developed an innovative, tool-less and hot-swappable method for replacing fans, as well as other critical components such as PSUs. This feature allows uptime to be maximised while reducing service callouts and associated costs.
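The figures above lend themselves to a quick back-of-envelope check. The sketch below assumes, purely for illustration, that the whole 1500W maximum draw is shared equally by the 12 fans (in practice the controller and other electronics take a share), and it compares the unit's draw with the 78kW rack capacity quoted earlier in the article. The function names are hypothetical.

```python
def per_fan_power_w(unit_max_power_w: float, fan_count: int) -> float:
    """Upper bound on per-fan power, assuming the fans share the full unit draw equally."""
    return unit_max_power_w / fan_count


def cooling_overhead_fraction(unit_max_power_w: float, rack_load_w: float) -> float:
    """Cooler power draw as a fraction of the rack heat load it serves."""
    return unit_max_power_w / rack_load_w


UNIT_MAX_POWER_W = 1500.0  # maximum power draw per unit
FAN_COUNT = 12             # axial brushless DC fans per unit
RACK_LOAD_W = 78_000.0     # "up to 78kW" rack capacity quoted for RDHX PRO

fan_w = per_fan_power_w(UNIT_MAX_POWER_W, FAN_COUNT)
overhead = cooling_overhead_fraction(UNIT_MAX_POWER_W, RACK_LOAD_W)

print(f"At most {fan_w:.0f} W per fan")
print(f"Cooler draw is about {overhead:.1%} of a fully loaded rack")
```

This only covers the electrical draw of the door itself; the energy used to supply the cooling water is outside the scope of the sketch.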

For ease of integration with data centre management applications as well as BMS software, the new rear door cooling units also feature a newly designed controller which is compatible with most popular network and control protocols including web interface, Ethernet, SNMP, Modbus TCP, RTU, and Redfish.

Data centre white space racks, cooling and accessories

The new RDHX PRO RDC is now available. For further details, email datacentre.eu@nvent.com.

Isha Jain - 11 December 2023

Cooling

Data

Data Centres

Building the telco edge

By Nathan Blom, Chief Commercial Officer, Iceotope

With the growing migration of data to the edge, telco providers are facing new challenges in the race to net zero. Applications like IoT and 5G require ultra-low latency and high scalability to process large volumes of data close to where the data is generated, often in remote locations. Mitigating power constraints, simplifying serviceability and significantly driving down maintenance costs are rapidly becoming top priorities. Operators are tasked with navigating these changes in a sustainable and cost-effective manner, while working towards their net zero objectives. Liquid cooling is one solution able to help them do just that.

Challenges facing telco operators

The major challenges confronting telco operators can be distilled into three fundamental aspects: power constraints, increased density, and rising costs.

The limitations of available power in the grid pose a significant challenge. Both urban areas and the extreme edge have concerns about diverting power from other essential activities. As telcos demand more data processing, increased computational power, and GPUs, power consumption becomes a critical bottleneck. This constraint pushes operators to find innovative solutions to reduce power consumption.

Telco operators also face the dual challenge of increasing the number of towers while also enhancing the capacity of each tower. This requirement to boost compute power at each node and increase the number of nodes strains both power budgets and computational capabilities. The pursuit of maximising the value of each location becomes critical.

Finally, the combination of increased density, heightened service costs per site, and a surge in operational expenses (OPEX) due to the need for service and maintenance leads to rising costs, particularly at the extreme edge. The logistics and expenses of servicing remote sites drive up OPEX, making it a pressing concern for telco operators.

Liquid cooling as a solution

One promising avenue to address these challenges is liquid cooling. Cooling is a vital aspect of data centre operations, consuming approximately 40% of the total electricity used. Liquid cooling is rapidly becoming the solution of choice to efficiently and cost-effectively accommodate today’s compute requirements. However, not all liquid cooling solutions are the same.

Direct-to-chip appears to offer the highest cooling performance at chip levels, but because it still requires air cooling, it adds inefficiencies at the system level. It is a nice interim solution to cool the hottest chips, but it does not address the longer-term goals of sustainability, serviceability, and scalability. Meanwhile, tank immersion offers a more sustainable option at the system level, but requires a complete rethink of data centre design. This works counter to the goals of density, scalability, and most importantly, serviceability. Facility and structural requirements mean brownfield data centre space is essentially eliminated as an option for both of those solutions, not to mention special training is required to service the equipment.

Precision liquid cooling combines the best of both technologies, by removing nearly 100% of the heat generated by the electronic components of a server and reducing energy use by up to 40% and water consumption by up to 100%. It does this by using a small amount of dielectric coolant to precisely target and remove heat from the hottest components of the server, ensuring maximum efficiency and reliability. This eliminates the need for traditional air-cooling systems and allows for greater flexibility in designing IT solutions. There are no hotspots to slow down performance, no wasted physical space on unnecessary cooling infrastructure, and minimal need for water consumption.

Precision liquid cooling also reduces stress on chassis components, reducing component failures by 30% and extending server lifecycles. Servers can be hot swapped both at the data centre and at remote locations. Service calls are simplified and exposure to environmental elements on-site is eliminated, de-risking service operations.

Operating within standard rack-based chassis, precision liquid cooling is also highly scalable. Telco operators can effortlessly expand their compute capacity from a single node to a full rack, adapting to evolving needs.

The telco industry is on the cusp of a transformative era. Telco operators are grappling with the challenges of power constraints, increased density, and rising costs, particularly at the extreme edge. Precision liquid cooling offers a sustainable solution to these challenges. As the telecommunications landscape continues to evolve, embracing innovative cooling solutions becomes a strategic imperative for slashing energy and maintenance costs while driving toward sustainability goals. It's going to be an exciting time for the future of compute.

Isha Jain - 7 December 2023

Cooling

News

Vertiv's acquisition of CoolTera boosts liquid cooling portfolio

Vertiv has announced that subsidiaries of the company have entered into a definitive agreement to acquire all of the shares of CoolTera, a provider of coolant distribution infrastructure for data centre liquid cooling technology. The agreement also covers certain assets, including certain contracts, patents, trademarks, and intellectual property, from an affiliate of CoolTera.

Founded in 2016 and based in the UK, CoolTera provides liquid cooling infrastructure solutions, and designs and manufactures coolant distribution units (CDU), secondary fluid networks (SFN), and manifolds for data centre liquid cooling solutions. CoolTera and Vertiv have been technology partners for three years with multiple global deployments to data centres and super compute systems. The acquisition of CoolTera brings advanced cooling technology, deep domain expertise, controls and systems, and manufacturing and testing for high density compute cooling requirements to Vertiv’s already robust thermal management portfolio, as well as key industry partnerships already in place across the ecosystem for such applications. CoolTera has a proven track record of engineering excellence and strong customer service supported by a team of highly qualified, proven liquid cooling engineers.

“This bolt-on technology acquisition is consistent with our long-term strategic vision for value creation, and further strengthens our expertise in high-density cooling solutions,” says Giordano Albertazzi, Chief Executive Officer, Vertiv. “And while the purchase price is not material to Vertiv, the acquisition is essential to further reinforce our liquid cooling portfolio, enhancing our ability to serve the needs of our global data centre customers and strengthening our position and capabilities to support the needs of AI at scale.”

“It was a logical decision to join the Vertiv family,” says Mark Luxford, CoolTera’s Managing Director. “We are excited to join the leader in data centre thermal management. Vertiv has demonstrated the ability to scale technologies at a pace that is needed for AI deployment. We look forward to working as a team to deliver next generation liquid cooling technologies at the scale the industry requires. Vertiv is well-positioned to support the industry growth.”

The acquisition is expected to close in the fourth quarter of 2023, subject to customary closing conditions.

Isha Jain - 5 December 2023
