How to prioritise efficiency without compromising on performance

Author: Beatrice

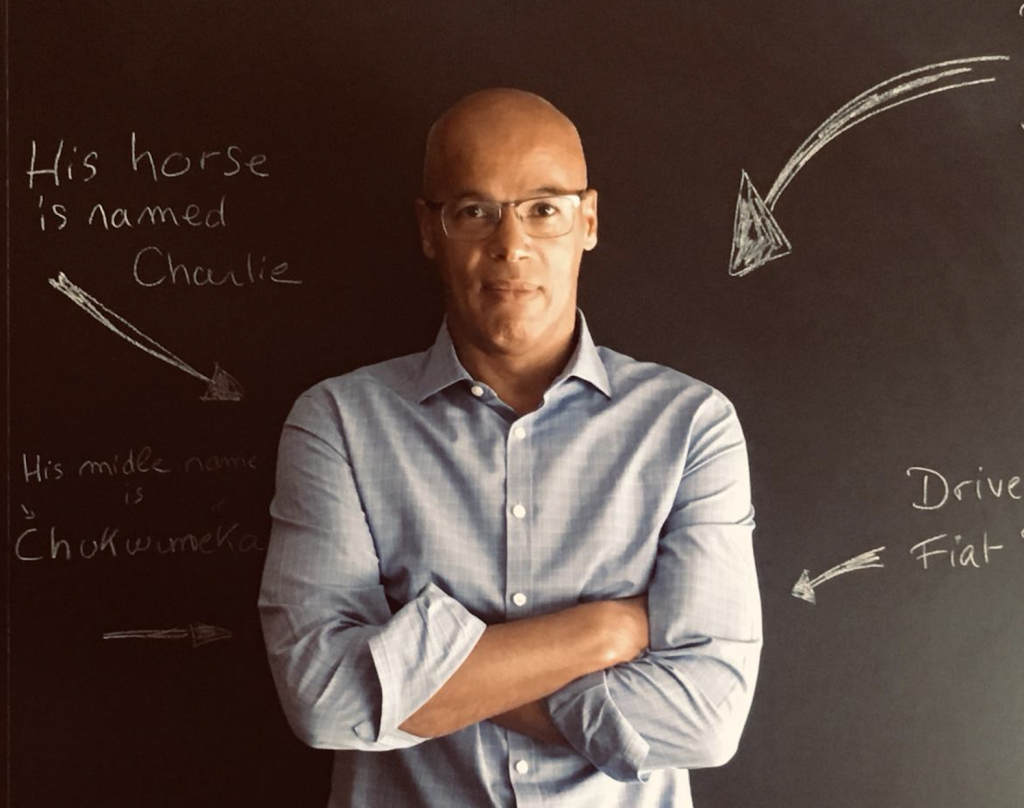

By Martin James, VP EMEA at Aerospike

With the energy sector in turmoil and the energy price cap now set to be in place only until April 2023, financial uncertainty is a thread that runs through all businesses, not least those that are running data centres filled with power-hungry servers.

Data centre operators have, however, been addressing the issue of energy for years now. Their priority is no longer to pack in as many active servers as possible, but instead to design facilities that deliver efficient, resilient services that can minimise not only their carbon footprint, but their customers’ too.

At one point, the idea of reducing server count would have been seen as a bad proposition for cloud providers and data centre operators, because lower consumption would have meant lower profits. But with increasing concerns about climate change, attitudes have changed.

Many operators today have ESG strategies and goals, and minimising the impact of escalating energy prices is just one part of a broader drive towards net zero carbon. Among the hyperscalers, for example, Amazon announced earlier this year that it had increased the capacity of its renewable energy portfolio by nearly 30%, bringing its total to 310 projects across 19 countries. These projects help to power its data centres and have made Amazon the world’s largest corporate buyer of renewable energy.

As internet usage grows globally, Google has also set its sights on running on carbon-free energy by 2030. In a blog post last autumn, Google’s CEO Sundar Pichai described the company’s newest building at its California headquarters: the lumber is all responsibly sourced, and the exterior is covered in solar panels that are expected to generate about 40% of the energy the building uses.

Using solutions to drive down PUE

While opting for greener energy sources, data centres of all sizes can also lower their carbon footprint by reducing their ‘power usage effectiveness’ (PUE). PUE measures how efficiently a facility delivers power to its computing equipment: TechTarget describes it as the total amount of power entering a data centre divided by the power used to run the IT equipment within it. A PUE of 1.0 would mean every watt reaches the IT equipment, and anything above that is overhead such as cooling and power distribution. PUE ratings have become increasingly important, not just to data centre operators who want to be seen to be managing their facilities efficiently, but also to their customers, who benefit from this improved efficiency.
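As a minimal sketch of the ratio TechTarget describes, the Python below computes PUE from two power readings. The function names and the 1,500 kW / 1,000 kW figures are hypothetical, chosen only to illustrate the arithmetic.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("facility power cannot be lower than IT equipment power")
    return total_facility_kw / it_equipment_kw


def overhead_kw(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power drawn by everything other than IT equipment (cooling, power distribution, lighting)."""
    return total_facility_kw - it_equipment_kw


# Hypothetical readings: 1,500 kW entering the facility, 1,000 kW reaching IT equipment.
print(pue(1500, 1000))          # 1.5
print(overhead_kw(1500, 1000))  # 500
```

A PUE of 1.5 means that for every kilowatt used by IT equipment, another half kilowatt goes to overhead; pushing the ratio towards 1.0 is what driving down PUE means in practice.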

With the drive towards carbon neutrality filtering through the entire ecosystem of data centres and the companies that work in partnership with them, a range of both hardware and software solutions are being used to drive down PUE.

A recent IEEE paper focused on CO2 emissions efficiency as a non-functional requirement for IT systems. It compared the emissions efficiency and costs of two databases, one of which was ours, and concluded that a database’s ability to reduce emissions would not only have a positive impact on the environment but would also reduce expenditure for IT decision-makers.

This makes sense as efficiency starts to take priority over scaling resources to deliver performance. Adding extra servers is no longer environmentally sustainable, which is why data centres are now focused on how they can use fewer resources and achieve a lower carbon footprint without compromising on either scalability or performance.

Cut server counts without a performance trade-off

A proven way of doing this in data centres is to deploy real-time data platforms. These allow organisations to handle billions of real-time transactions using massive parallelism and a hybrid memory model. The result is a much smaller server footprint (our own database requires up to 80% less infrastructure), allowing data centres to drastically cut their server count, lower cloud costs for customers, improve energy efficiency and free up resources for additional projects.
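To show how a smaller server footprint feeds through to facility energy, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 100-server deployment drawing 500 W per server around the clock in a facility with a PUE of 1.5; none of these figures come from the article. It then applies the “up to 80% less infrastructure” upper bound.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_facility_mwh(servers: int, watts_per_server: float, pue: float) -> float:
    """Estimate yearly facility energy in MWh: IT load scaled up by PUE."""
    it_kw = servers * watts_per_server / 1000
    facility_kw = it_kw * pue
    return facility_kw * HOURS_PER_YEAR / 1000


# Hypothetical inputs, for illustration only.
baseline = annual_facility_mwh(servers=100, watts_per_server=500, pue=1.5)
reduced = annual_facility_mwh(servers=20, watts_per_server=500, pue=1.5)  # 80% fewer servers

print(f"baseline: {baseline:.1f} MWh/year")
print(f"reduced:  {reduced:.1f} MWh/year")
print(f"saving:   {1 - reduced / baseline:.0%}")
```

The model is deliberately simple: it holds per-server power and PUE constant, whereas in practice consolidated servers usually run at higher utilisation and a facility’s PUE can shift as its IT load falls.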

Modern real-time data platforms are designed to scale out, ingesting and acting on streaming data at the edge, and they can combine this with data from systems of record, third-party sources, data warehouses and data lakes.

Our own database delivers predictable high performance at any scale, from gigabytes to petabytes of data, with low latency. Earlier this month, we announced that our latest database running on AWS Graviton2, the Arm-based processors AWS designed for large cloud workloads in its data centres, has been shown to deliver up to 18% higher throughput at up to 27% lower cost, along with improved energy efficiency.
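The two headline figures can be combined into a single price-performance ratio. This sketch assumes, hypothetically, that the “up to 18%” throughput gain and the “up to 27%” cost reduction apply to the same workload at the same time, which the announcement does not state, so the result is an upper bound rather than a measured figure.

```python
def throughput_per_cost(throughput_gain: float, cost_reduction: float) -> float:
    """Throughput per unit cost relative to a baseline of 1.0.

    throughput_gain=0.18 means 18% more throughput;
    cost_reduction=0.27 means 27% lower cost.
    Assumes both gains hold simultaneously (a best-case assumption).
    """
    if not 0 <= cost_reduction < 1:
        raise ValueError("cost_reduction must be in [0, 1)")
    return (1 + throughput_gain) / (1 - cost_reduction)


ratio = throughput_per_cost(0.18, 0.27)
print(f"{ratio:.2f}x throughput per unit cost")  # 1.62x throughput per unit cost
```

Because the gains compound, 18% more work at 73% of the cost works out to roughly 62% more throughput per unit of spend, which is why the two percentages should not simply be added together.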

The fiercely competitive global economy demands computing capacity, speed and power, but this can no longer come at the price of our planet. The challenge for data centres is not just to combat escalating energy prices, but to turn the tide on energy usage. Meeting ESG goals means becoming more energy efficient, reducing server footprints and taking advantage of real-time data platforms to ensure performance is unaffected. Every step that data centres take towards net zero signals to customers that operators have not just their customers’ best interests at heart, but those of the environment too.