Hunting Anomalies: Combining CyberSec with Machine Learning

Author: Beatrice

In psychology, Capgras delusion is the (unfounded) belief that a close person has been replaced by an identical impostor. Under the spell of this delusion, people feel that something “isn’t right” about the person they know. Everything looks as it is supposed to, but it just doesn’t feel right.

Whenever I receive what turns out to be a “good” phishing email, I get much the same feeling. It looks legitimate, but something often feels “off”. I have seen so many attempts over the years that small irregularities or suspicious timing trigger a warning signal. It’s an anomaly in a familiar place.

However, we can’t expect everyone to have the same experience in IT. That’s why cybersecurity exists. We implement systems to protect users from malicious actors who will try anything to gain access to, or extract information from, their victims. Manually noticing phishing or other malicious attempts takes experience. Often, emails, websites, and apps are crafted in such a way that they look almost identical to the legitimate ones. Yet, they are a little different.

There is a field that deals well with detecting such small differences – machine learning.

A bright future for CyberSec

I don’t even mean “the future” in the exact sense of the word. Machine learning (ML) has been showing off its muscles in cybersecurity for quite some time now. Back in 2018, Microsoft stopped a potential outbreak of Emotet through clever use of ML in both local and cloud systems.

Conceptually, cybersecurity is a perfect candidate for machine learning because ML models are predictive. Their predictions are derived from massive amounts of data (a requirement that is a common criticism of current ML). Once trained, models make predictions on data points that are very similar, but not identical, to the training data.

Most malicious attacks depend on a similar approach. They have to fool a human user in order to execute some actions. Clearly, they must look as similar to something legitimate as possible. Otherwise, they will be ignored even by less tech-savvy users.

Additionally, many new renditions of malware are somewhat simple mutations of the same code. Since we’ve been dealing with malicious code for several decades now, there’s enough of it out there to create good training sets for machine learning. We also have plenty of innocuous code for anything else we might need.

A common threat: Domain Generation Algorithms

Domain Generation Algorithms (DGAs) have been a long-standing threat in cybersecurity. Nearly everyone who has worked in this field has had some experience with them. DGAs offer numerous benefits to the attacker, which makes them a popular attack vector.

One of the primary benefits of a DGA is that the perpetrator can flood DNS with thousands of pseudo-randomly generated domains. Of those thousands, only one would be the real command-and-control (C&C) server, which makes it very hard for any expert to find the source. Additionally, since most DGAs are seed-based, the attacker knows in advance which domain to register.

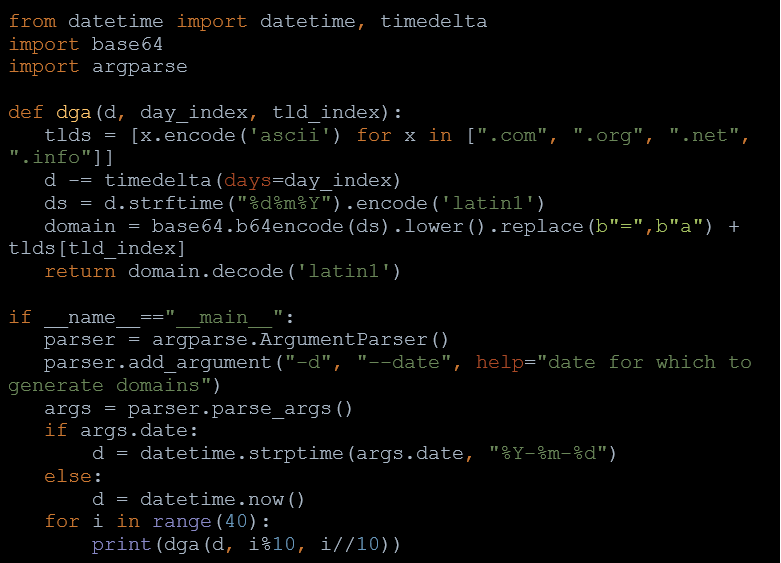

A common and very popular way to generate seeds is to use dates. Because the attacker knows both the algorithm and the date, it is trivial for them to work out which domains to register ahead of time.
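To make the date-seed idea concrete, here is a minimal sketch of a toy date-seeded generator. It is not the algorithm of any real malware family: the function name `generate_domains`, the SHA-256 mapping, the TLD pool, and the label length are all assumptions chosen for illustration.

```python
import hashlib
from datetime import date, timedelta

TLDS = (".com", ".net", ".org")  # hypothetical TLD pool, for illustration only


def generate_domains(seed_date, count=5, length=12):
    """Derive `count` pseudo-random domain names from a date seed (toy example)."""
    domains = []
    for i in range(count):
        # The seed combines the date with a counter, so each day yields a new batch
        seed = f"{seed_date.isoformat()}-{i}".encode("ascii")
        digest = hashlib.sha256(seed).hexdigest()
        # Map each hex digit (0-15) to a letter a-p so the label is purely alphabetic
        label = "".join(chr(ord("a") + int(ch, 16)) for ch in digest[:length])
        domains.append(label + TLDS[i % len(TLDS)])
    return domains


day = date(2021, 4, 28)
print(generate_domains(day))                      # batch for one day
print(generate_domains(day + timedelta(days=1)))  # a different batch the next day
```

Since the output depends only on the date, anyone who knows the algorithm (the attacker, or an analyst who has reverse-engineered the sample) can compute the same list for any future day.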

However, DGAs produce domain names that look nothing like those of a regular website (with some exceptions, as randomness can lead to something that may seem like a pattern). For example, Sisron’s DGA produced these samples:

- mjgwndiwmjea.org

- mdcwntiwmjea.net

- mdywntiwmjea.net

This has two important drawbacks for the attacker. First, it makes it easier to notice that something is amiss, even for someone outside of cybersec. Second, after seeing just a few domains, it’s clear (to humans) that they are being generated.

However, here’s the fun part: automating fool-proof (or even close to fool-proof) DGA detection is unbelievably difficult. Rule-based approaches tend to fail outright, produce false positives, or run too slowly.
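As a rough illustration of why simple rules struggle, consider a sketch of one naive rule: flag a domain when the character entropy of its first label exceeds a threshold. The function names and the threshold of 3.0 bits are assumptions picked for this example, not a recommended setting.

```python
import math
from collections import Counter


def shannon_entropy(text):
    """Shannon entropy of the character distribution, in bits per character."""
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_generated(domain, threshold=3.0):
    """Naive rule: high entropy in the first label means 'probably generated'."""
    label = domain.split(".")[0]
    return shannon_entropy(label) > threshold


for name in ("mjgwndiwmjea.org", "google.com", "stackoverflow.com"):
    label = name.split(".")[0]
    print(name, round(shannon_entropy(label), 2), looks_generated(name))
```

With this threshold the rule flags the Sisron sample and passes google.com, but it also flags stackoverflow.com, because a long label made of mostly distinct letters has high entropy too. Raising the threshold removes that false positive and lets the generated domain through instead, which is the kind of trade-off that pushes detection towards learned models.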

DGAs were (and in large part still are) a real pain for any cybersecurity professional. Luckily, machine learning has already allowed us to make great strides in improving detection methods. Akamai, for example, has developed a model that is reportedly very sophisticated and successful. For smaller players in the market, there are plenty of libraries and frameworks for the same purpose.

Machine learning for other avenues

Cybersecurity is in a fortunate position: there are millions of data points, and more are produced every day. Unlike in other fields, domain-specific data that can be easily labelled will continue to be created for the foreseeable future.

If DGAs can be “solved” (I use this word with some caution) through machine learning, other attack methods almost definitely can be as well. Phishing is a great application for machine learning. Besides being the most popular attack vector, it’s also the one that relies most prominently on impersonation and fabrication.

Every (good) phishing website (and email) looks a lot like the real thing. However, there will always be some discrepancy: an unusual link here, a grammatical error there. There’s always something waiting to be uncovered.

After extensive training, a logistic regression model should be able to output a phishing probability for a given website and assign it to a class. While acquiring data for these models might be a little challenging, there are some public sets available (e.g. PhishTank, used by the authors of the study) to ease the process.
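A minimal sketch of that idea follows, using a hand-rolled logistic regression so it runs without extra libraries. It is not the pipeline of any particular study: the URL features, the helper names, and the six training URLs are all made up for illustration, and a real model would be trained on a labelled set such as PhishTank.

```python
import math


def extract_features(url):
    """Hypothetical hand-picked URL features, each scaled to the range 0..1."""
    host = url.split("//")[-1].split("/")[0]
    return [
        min(len(url) / 100.0, 1.0),                # unusually long URL
        min(host.count(".") / 5.0, 1.0),           # many nested subdomains
        1.0 if "@" in url else 0.0,                # '@' somewhere in the URL
        1.0 if "-" in host else 0.0,               # hyphenated host name
        0.0 if url.startswith("https://") else 1.0,  # no HTTPS
    ]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, features):
    """Estimated probability that the sample belongs to the phishing class."""
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


def train(samples, labels, epochs=1000, lr=0.5):
    """Fit weights with plain stochastic gradient descent on the log loss."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = predict_proba(weights, bias, x) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Made-up toy data on reserved example domains: 0 = legitimate, 1 = phishing
TOY_DATA = [
    ("https://example.com/login", 0),
    ("https://docs.example.org/guide/start", 0),
    ("https://shop.example.net/cart", 0),
    ("http://secure-login.example.com.account-verify.test/update@session", 1),
    ("http://example-support.billing.confirm.test/signin", 1),
    ("http://account-check.example.verify.test/@confirm", 1),
]

weights, bias = train(
    [extract_features(url) for url, _ in TOY_DATA],
    [label for _, label in TOY_DATA],
)

for url, _ in TOY_DATA:
    p = predict_proba(weights, bias, extract_features(url))
    print(f"{p:.3f}", "phishing" if p >= 0.5 else "legitimate", url)
```

The output of `predict_proba` is the phishing probability, and comparing it against a cut-off (0.5 here, an arbitrary choice) assigns the class. Six toy samples prove nothing about real-world accuracy; the point is only the shape of the approach.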

Conclusion

The applications I have mentioned only skim the surface. Machine learning models can be applied to almost every area of cybersecurity. Malware detection, OSINT, email protection, and others can be tackled effectively through proper use of ML.

Thus, cybersecurity is in a unique position. The broad nature of cyberattacks generates large amounts of data, which are the foundation for ML-based solutions. ML will not solve everything, especially highly tailored attacks, but it will substantially raise the bar that attackers need to clear. Therefore, cybersecurity should be thought of as the avant-garde of machine learning applications.

Author: Juras Juršėnas, COO at Oxylabs.io.