Liquid Cooling Technologies Driving Data Centre Efficiency

Yondr Group holds ground-breaking for Toronto data centre

Yondr Group, a global developer, owner and operator of hyperscale data centres, has held a ground-breaking ceremony to mark the start of work on site for its 27MW Toronto data centre.

The project, which Yondr is building on a 4.5-acre site, is the company’s first development in Canada. It forms part of Yondr’s global expansion, as the business continues to deliver reliable and resilient data centre capacity at speed and at scale, with projects currently completed or in progress in North America, Europe and Asia.

The ground-breaking in Toronto follows the completion of the company’s 48MW data centre project in Northern Virginia, and the first ready for service (RFS) milestone for the company’s 40MW Frankfurt data centre last November.

The ceremony was attended by Councillor Shelley Carroll of Don Valley North, who gave a speech highlighting the city’s thriving digital economy and emphasised Toronto’s vision of becoming a global hub for innovation and talent. The event also brought together key stakeholders all united in their vision of building a more connected and future-proofed Toronto.

Situated in a strategic location within Canada’s emerging data centre hub, the project comprises a three-storey, 27MW data centre, which is scheduled to achieve RFS by mid-2026. The project has been designed by Yondr to follow the Toronto Green Standard, the city’s sustainable design and performance requirements for new developments, and this aligns with the company’s environmental goals and target for achieving net zero for scope 1 and 2 carbon emissions by 2030.

The building will feature a closed loop cooling design, which means once the chilled water loop is filled, the facility will not need to consume water for cooling. Once completed, the project will have bike parking and electric vehicle charging points, and will open up pedestrian walkways. The environmentally friendly landscaping plan will have native and pollinator plants, and the building’s glass will be bird-friendly, helping birds to see the building as a barrier and avoid collisions.

As part of its social impact initiatives, Yondr has partnered with the University of Toronto to fund a scholarship programme. ‘The Yondr Group Scholarship’ will be available to undergraduate students at the university entering courses in Computer Science, the Rotman Commerce business programme, Life Sciences, or Mathematical & Physical Sciences.

Successful applicants will receive $5,000 per year for five years, with the first awards being made to students starting their studies at the beginning of the 2025/26 academic year this coming autumn.

Kent Andersson, Program Controls Director for the Americas at Yondr Group, says, “Our Toronto data centre forms a key part of our strategy for North America, where there is an urgent need to increase capacity to support the digital economy. This project will play a key role in providing the infrastructure needed to support cutting-edge cloud computing and connectivity, and enable the development of AI and future technologies in Canada and beyond.

“We would like to thank the Canadian authorities, including the City of Toronto and our strategic partners, for supporting a positive approach to bringing this project from concept to site, and I look forward to seeing the data centre take shape on site over the coming months.”

Simon Rowley - 31 January 2025

DeepCoolAI and Sanmina partner to scale AI infrastructure

DeepCoolAI, an expert in AI infrastructure (including liquid cooling and high density solutions), and Sanmina Corporation, a specialist in advanced manufacturing, have announced a strategic partnership that seeks to revolutionise AI-driven data centres.

Together, the companies are striving to set new standards in efficiency, flexibility and sustainability, catering to the ever-growing demands of AI-driven, high-performance computing environments.

“Our partnership with Sanmina amplifies DeepCoolAI’s mission to pioneer cooling innovations for AI-driven data centres,” says Kris Holla, Founder and CEO of DeepCoolAI. “By integrating our technology, innovation and AI-customised solutions with Sanmina’s global footprint and manufacturing expertise, we empower customers to achieve greater efficiency, unparalleled performance and high availability at scale. Together, we are building a future filled with unparalleled possibilities.”

Hari Pillai, President, Technology Components Group at Sanmina, comments, “Leveraging Sanmina’s state-of-the-art manufacturing facilities around the world, as well as the depth and experience of our design and manufacturing teams that have successfully brought multiple Open Compute Project (OCP) rack and power solutions to market, this partnership ensures rapid deployment of reliable, high-quality AI solutions tailored to each customer's unique needs.

“From liquid-to-liquid and liquid-to-air Coolant Distribution Units (CDUs) to prefabricated modular high density solutions, DeepCoolAI and Sanmina are equipping data centres with the tools to exceed operational goals. The portfolio also emphasises seamless rack level integration with liquid cooling and high density power, enabling customers to deploy cooling systems with high availability and flexibility at scale.”

“Our strategic alliance with DeepCoolAI brings an unprecedented combination of innovation and scalability to the data centre market. Together, we’re delivering future-proof AI infrastructure solutions that optimise efficiency and sustainability for the next generation of AI driven workloads. We are committed to the fast-growing data centre market with unprecedented scalability and manufacturing capacity to help our customers to turn on data centres faster.”

Innovation to set a new benchmark

AI-powered precision: Innovative liquid cooling and high density technology for sustainability and rapid scalability.

High availability and sustainability at the core: Solutions are designed to meet high-availability requirements and stringent environmental standards, aligning with global carbon neutrality goals.

Global reach, local support: Sanmina’s robust supply chain ensures consistent delivery of solutions worldwide, backed by regional expertise and customer support.

Simon Rowley - 21 January 2025

Telehouse launches new liquid cooling lab

Addressing the thermal challenges of today’s high-performance computing and AI workloads, Telehouse International Corporation of Europe, a global data centre service provider, has announced the launch of its liquid cooling lab following the development of strategic partnerships with four of the world’s most advanced liquid cooling technology providers.

From early 2025 onwards, the four companies – Accelsius, EkkoSense, JetCool, and Legrand – will showcase their advanced cooling technologies at the new, state-of-the-art liquid cooling lab at Telehouse South, the latest addition to Telehouse’s London Docklands campus. This project will enable Telehouse customers to explore cutting-edge liquid cooling innovations and find an option that works best for their needs.

Accelsius is bringing its NeuCool platform to Telehouse’s London data centre, introducing a two-phase, direct-to-chip cooling technology that uses a waterless, non-conductive refrigerant for heat removal. Removing an average heat flux of 250W/cm² and hot spot heat fluxes above 500W/cm², NeuCool offers substantial performance headroom for AI and high-performance computing.

The deployment will include the Accelsius Thermal Simulation Rack, a patent-pending system with Load Simulation Sleds that replicate high-power servers, enabling users to control, monitor, and measure the impressive cooling and computational performance delivered by NeuCool technology. Telehouse clients will also have access to a live performance portal for real-time data and seamless demonstrations of the system’s capabilities.

JetCool, a Flex company, will provide its SmartPlate System in the Liquid Cooling Lab - a self-contained liquid cooling product in compact 1U and 2U form factors. Requiring no piping, plumbing, or facility modifications, the SmartPlate System provides a hassle-free approach to efficient cooling. JetCool’s patented microconvective liquid cooling technology handles the highest-power CPUs, GPUs, and AI accelerators, cooling Superchips over 3,500W and outperforming microchannel-based designs by up to 30%, as validated by third-party testing.

JetCool’s product suite extends beyond self-contained systems to integrated rack-level liquid cooling products. These incorporate JetCool’s in-rack SmartSense Coolant Distribution Unit (CDU), capable of cooling up to 300kW per rack or neighbouring racks, with scalability to row-based configurations exceeding 2MW. JetCool’s flexible technology enables Telehouse tenants to choose products ranging from liquid-assisted air cooling to full-scale liquid cooling, meeting the demands of today’s high-performance computing and AI workloads.

Legrand will install its USystems ColdLogik CL20 Rear Door Heat Exchanger (RDHx), supporting over 90kW capacity per cabinet. Its ColdLogik RDHx negates heat at source and removes the need for air-mixing or containment. Ambient air is drawn into the rack via the IT equipment fans, and the hot exhaust air is expelled from the equipment and pulled over the heat exchanger assisted by EC fans mounted in the RDHx chassis. The exhaust air transfers heat into the coolant within the heat exchanger, and the newly chilled air is expelled into the room at, or just below, the predetermined room ambient temperature designed around sensible cooling.

Both processes are managed by the ColdLogik adaptive intelligence, using air-assisted liquid-cooling to control the whole room temperature automatically at its most efficient point.

EkkoSense, a provider of AI-powered data centre optimisation software, will deploy to Telehouse's lab its innovative EkkoSim ‘what-if?’ scenario simulations; low-cost air-side and liquid-side monitoring sensors; web-based EkkoSoft Critical 3D visualisations with analytics and AI-powered advisory; and anomaly detection tools. Because the latest AI compute hardware and hybrid cooling infrastructure introduce new levels of engineering complexity, EkkoSense deploys the power of AI to capture, visualise, and analyse data centre performance.

Telehouse has already deployed EkkoSense’s EkkoSoft Critical platform across its London Docklands campus, optimising data centre cooling performance. A key benefit for the Telehouse operations team has been how the EkkoSense AI-powered platform has not only improved visibility into cooling and capacity performance, but also helped to reduce the administrative burden for already busy Telehouse team members.

Simon Rowley - 15 January 2025

The data centre liquid cooling market outlook

According to analysis by Persistence Market Research, the data centre liquid cooling market is projected to grow from $4.1 billion in 2024 to $19.4 billion by 2031, at a robust CAGR of 24.6%.
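As a rough cross-check of the quoted projection, the sketch below computes the compound annual growth rate implied by the two endpoint figures, and the 2031 value that a 24.6% rate would give. It assumes seven compounding years (2024 to 2031); the function names are illustrative and not taken from the research firm.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25% a year)."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` once a year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the article, in billions of US dollars.
start_2024, end_2031 = 4.1, 19.4
periods = 2031 - 2024  # assumption: seven compounding years

implied_rate = cagr(start_2024, end_2031, periods)
projected_2031 = project(start_2024, 0.246, periods)

print(f"Implied CAGR: {implied_rate:.1%}")
print(f"2031 value at 24.6% a year: ${projected_2031:.1f}bn")
```

Under these assumptions the implied rate comes out a little under 25%, close to the quoted 24.6%. The small gap may reflect rounding of the endpoints or a different base year.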

This growth, the research firm says, is fuelled by increasing data centre density, the need for energy-efficient cooling systems, and rising adoption of High-Performance Computing (HPC). Liquid cooling systems offer superior energy efficiency, cutting energy use by up to 40% compared to air cooling. Major cloud providers and hyperscale data centres are driving demand for innovative solutions like cold plate cooling and immersion cooling, which address the challenges of high thermal loads and sustainability. North America leads the market with a significant share, supported by booming cloud computing and favourable regulatory policies.

The growing need for efficient cooling solutions

As data centres continue to expand in scale and capacity, the demand for efficient cooling mechanisms has grown exponentially. Traditional air-cooling systems, though widely used, are struggling to meet the energy efficiency and thermal management needs of modern high-performance computing (HPC) systems. Liquid cooling is emerging as a revolutionary solution to address these challenges.

Liquid cooling systems utilise water or specialised cooling fluids to absorb and dissipate heat generated by servers and IT equipment. Unlike air cooling, liquid cooling has a significantly higher thermal transfer efficiency, making it ideal for densely packed data centres. Key components of these systems include cold plates, heat exchangers, and pumps that work in synergy to maintain optimal operating temperatures.

Growth projections and industry trends

The global data centre liquid cooling market is witnessing robust growth, with projections estimating a CAGR of over 20% between 2024 and 2032. This surge is driven by the increasing deployment of advanced IT infrastructure and rising energy costs. Furthermore, environmental concerns are pushing data centre operators to adopt greener, more energy-efficient cooling solutions, further boosting the adoption of liquid cooling.

Liquid cooling offers several advantages over conventional air-cooling methods:

- Enhanced energy efficiency: With the ability to directly cool components, liquid cooling reduces overall energy consumption.

- Higher cooling capacity: It supports high-density server configurations, enabling better utilisation of physical space.

- Reduced noise and maintenance: Liquid cooling systems operate quietly and require less frequent maintenance compared to air-cooling setups.

Applications across industries

Liquid cooling is not limited to a single sector; it is being rapidly adopted across industries such as cloud computing, artificial intelligence, and blockchain technology. These sectors require immense computational power, making efficient thermal management critical to their operations.

Key challenges in liquid cooling implementation

Despite its advantages, implementing liquid cooling comes with its own set of challenges:

- Initial investment costs: The upfront cost of installing liquid cooling systems can be prohibitive for smaller enterprises.

- Complexity in design and maintenance: Designing an efficient liquid cooling system requires expertise, and regular maintenance can be complex.

- Risk of leakage: While rare, leakage of coolant fluids can pose a risk to critical IT equipment.

Innovations driving adoption

Innovations in liquid cooling technology are making these systems more accessible and reliable. For instance, immersion cooling - where servers are submerged in non-conductive cooling fluids - is gaining traction for its simplicity and effectiveness. Similarly, modular cooling systems are enabling scalability and easier integration into existing data centre architectures.

Regional insights: where growth is happening

The data centre liquid cooling market is experiencing significant growth across various regions:

- North America: Leading the market due to its extensive data centre infrastructure and focus on green technologies.

- Europe: Accelerating adoption driven by stringent energy efficiency regulations.

- Asia-Pacific: Witnessing rapid growth due to the booming IT sector and increasing investments in data centre facilities.

Future outlook: sustainability and beyond

The future of data centre cooling lies in sustainable technologies. Liquid cooling systems are poised to play a pivotal role in achieving carbon-neutral data centres. Innovations like water-free cooling systems and closed-loop solutions are expected to further enhance the eco-friendliness of these systems.

The path ahead for liquid cooling

Data centre liquid cooling represents the next frontier in thermal management solutions. As technological advancements continue to reshape the IT landscape, liquid cooling systems will be essential in meeting the performance and sustainability demands of future data centres. Their adoption not only ensures energy efficiency but also aligns with global efforts toward environmental conservation.

Simon Rowley - 6 January 2025

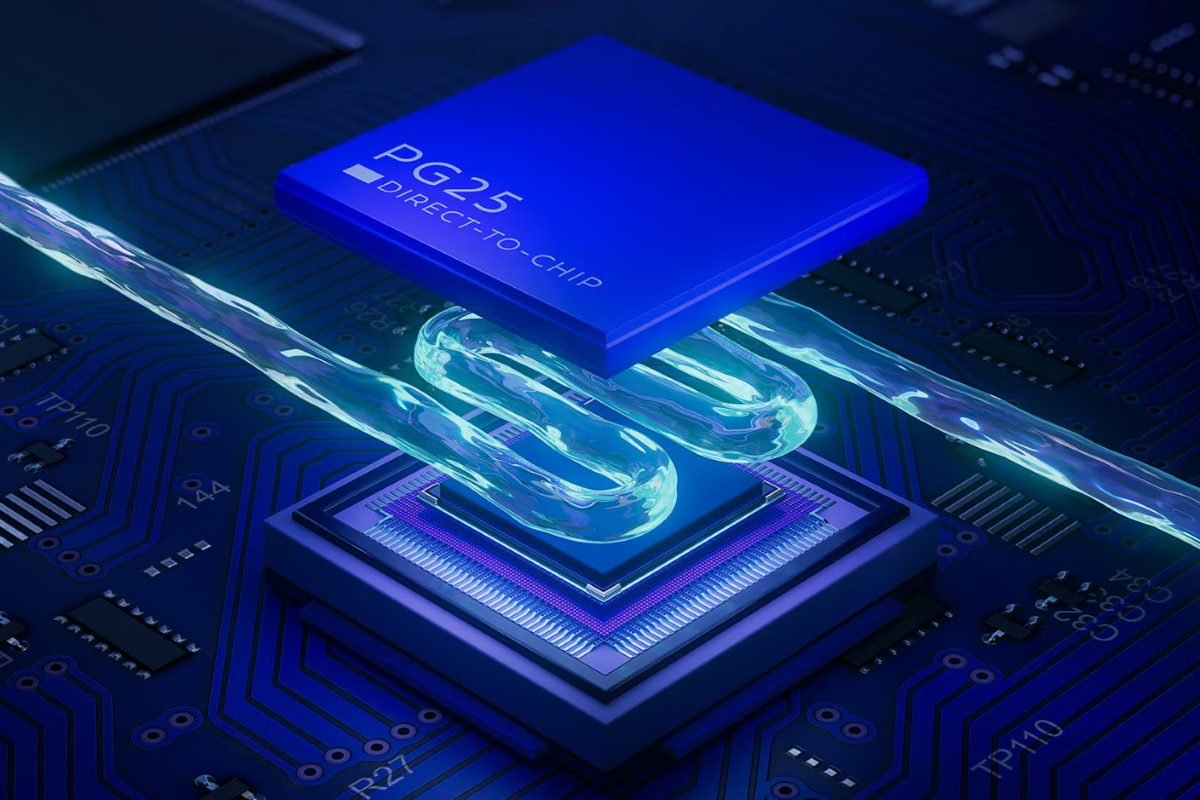

Castrol launches new direct-to-chip cooling fluid

Castrol has announced the launch of Castrol ON Direct Liquid Cooling PG 25, a new propylene glycol-based cooling fluid specifically designed for direct-to-chip cooling applications in high-performance data centres.

The ready-to-use solution helps meet the complex thermal management needs of today's high-performance computing, while offering protection against corrosion and bacterial growth.

Castrol ON PG 25 has been developed to meet the rapidly growing adoption of direct-to-chip cooling technology in data centres, driven by the demand for higher computing densities, particularly for applications like AI, machine learning, and high-performance computing. The global data centre cooling market is expected to reach $16.8 billion by 2028, with direct-to-chip cooling becoming widely adopted.

Traditional air cooling, which currently dominates data centre cooling, often cannot efficiently manage the heat generated by densely packed, high-power servers.

To address these escalating cooling demands, the industry is turning to more efficient solutions like direct-to-chip cooling which delivers liquid coolant directly to heat-generating components, enabling faster heat transfer and reducing the reliance on energy-intensive air conditioning systems.

This approach helps maintain the optimal operating temperatures crucial for system reliability and performance.

"As data centres continue to push the boundaries of computing power, direct-to-chip cooling offers an opportunity for data centres to manage the increasing thermal demands of next-generation processors," says Peter Huang, Global Vice President of Thermal Management, Castrol. "Through the launch of PG 25, we are combining Castrol's expertise in thermal management with our global capabilities to help customers meet their cooling challenges with efficiency."

Castrol’s new PG 25 fluid offers several key advantages for data centre operators:

• Non-toxic propylene glycol formulation for enhanced safety in sensitive computing environments

• Extended protection against metal corrosion and bacterial growth

• Comprehensive compatibility with common cooling system materials

• Ready-to-use 25% concentration requiring no dilution

• Global availability backed by Castrol's technical support network

Castrol ON Direct Liquid Cooling PG 25 is supported by the company's comprehensive operational support and monitoring solutions, enabling data centre operators to optimise the performance of their cooling systems throughout their lifecycle.

"Through research and collaboration with our customers and technology partners, we've developed a truly end-to-end solution that supports data centres to rapidly implement and benefit from direct-to-chip cooling technologies while anticipating the future needs of the industry," adds Sung A Kim, Global Head of Technology for Data Centre Liquid Solutions, Castrol. "Further, our global network will enable us to provide comprehensive support all the way from initial testing to deployment, supporting our customers to confidently implement direct-to-chip cooling solutions across their facilities."

With the addition of Castrol ON Direct Liquid Cooling PG 25 to Castrol’s existing range of immersion cooling fluids and associated services, Castrol can be a one-stop partner for meeting the liquid cooling needs of today and tomorrow.

The product is now available through Castrol's distribution network in selected markets.

Simon Rowley - 16 December 2024

Vertiv predicts data centre trends for 2025

AI continues to reshape the data centre industry, a reality reflected in the projected 2025 data centre trends from Vertiv, a global provider of critical digital infrastructure and continuity solutions. Vertiv experts anticipate increased industry innovation and integration to support high-density computing, regulatory scrutiny around AI, as well as increasing focus on sustainability and cybersecurity efforts.

“Our experts correctly identified the proliferation of AI and the need to transition to more complex liquid- and air-cooling strategies as a trend for 2024, and activity on that front is expected to further accelerate and evolve in 2025,” says Vertiv CEO, Giordano (Gio) Albertazzi. “With AI driving rack densities into three- and four-digit kWs, the need for advanced and scalable solutions to power and cool those racks, minimise their environmental footprint, and empower these emerging AI factories has never been higher. We anticipate significant progress on that front in 2025, and our customers demand it.”

According to Vertiv's experts, these are the 2025 trends most likely to emerge across the data centre industry:

1. Power and cooling infrastructure innovates to keep pace with computing densification: In 2025, the impact of compute-intense workloads will intensify, with the industry managing the sudden change in a variety of ways. Advanced computing will continue to shift from CPU to GPU to leverage the latter’s parallel computing power and the higher thermal design point of modern chips. This will further stress existing power and cooling systems and push data centre operators toward cold-plate and immersion cooling solutions that remove heat at the rack level. Enterprise data centres will be impacted by this trend, as AI use expands beyond early cloud and colocation providers.

• AI racks will require UPS systems, batteries, power distribution equipment and switchgear with higher power densities to handle AI loads that can fluctuate from a 10% idle to a 150% overload in a flash.

• Hybrid cooling systems, with liquid-to-liquid, liquid-to-air and liquid-to-refrigerant configurations, will evolve in rackmount, perimeter and row-based cabinet models that can be deployed in brown/greenfield applications.

• Liquid cooling systems will increasingly be paired with their own dedicated, high-density UPS systems to provide continuous operation.

• Servers will increasingly be integrated with the infrastructure needed to support them, including factory-integrated liquid cooling, ultimately making manufacturing and assembly more efficient, deployment faster, equipment footprint smaller, and system energy efficiency higher.

2. Data centres prioritise energy availability challenges: Overextended grids and skyrocketing power demands are changing how data centres consume power. Globally, data centres use an average of 1-2% of the world’s power, but AI is driving increases in consumption that are likely to push that to 3-4% by 2030. Expected increases may place demands on the grid that many utilities can’t handle, attracting regulatory attention from governments around the globe – including potential restrictions on data centre builds and energy use – and spiking costs and carbon emissions that data centre organisations are racing to control. These pressures are forcing organisations to prioritise energy efficiency and sustainability even more than they have in the past.

In 2024, Vertiv predicted a trend toward energy alternatives and microgrid deployments, and in 2025 the company is expecting an acceleration of this trend, with real movement toward prioritising and seeking out energy-efficient solutions and energy alternatives that are new to this arena. Fuel cells and alternative battery chemistries are increasingly available for microgrid energy options.

Longer-term, multiple companies are developing small modular reactors for data centres and other large power consumers, with availability expected around the end of the decade. Progress on this front bears watching in 2025.

3. Industry players collaborate to drive AI factory development: Average rack densities have been increasing steadily over the past few years, but for an industry that supported an average density of 8.2kW in 2020, the predictions of AI factory racks of 500 to 1000kW or higher soon represent an unprecedented disruption.

As a result of the rapid changes, chip developers, customers, power and cooling infrastructure manufacturers, utilities and other industry stakeholders will increasingly partner to develop and support transparent roadmaps to enable AI adoption. This collaboration extends to development tools powered by AI to speed engineering and manufacturing for standardised and customised designs.

In the coming year, chip makers, infrastructure designers and customers will increasingly collaborate and move toward manufacturing partnerships that enable true integration of IT and infrastructure.

4. AI makes cybersecurity harder – and easier: The increasing frequency and severity of ransomware attacks is driving a new, broader look at cybersecurity processes and the role the data centre community plays in preventing such attacks.

One-third of all attacks last year involved some form of ransomware or extortion, and today’s bad actors are leveraging AI tools to ramp up their assaults, cast a wider net, and deploy more sophisticated approaches.

Attacks increasingly start with an AI-supported hack of control systems, embedded devices or connected hardware and infrastructure systems that are not always built to meet the same security requirements as other network components. Without proper diligence, even the most sophisticated data centre can be rendered useless.

As cybercriminals continue to leverage AI to increase the frequency of attacks, cybersecurity experts, network administrators and data centre operators will need to keep pace by developing their own sophisticated AI security technologies. While the fundamentals and best practices of defence in depth and extreme diligence remain the same, the shifting nature, source and frequency of attacks add nuance to modern cybersecurity efforts.

5. Government and industry regulators tackle AI applications and energy use: While Vertiv's 2023 predictions focused on government regulations for energy usage, in 2025, Vertiv expects the potential for regulations to increasingly address the use of AI itself. Governments and regulatory bodies around the world are racing to assess the implications of AI and develop governance for its use. The trend toward sovereign AI – a nation’s control or influence over the development, deployment and regulation of AI and regulatory frameworks aimed at governing AI – is a focus of the European Union’s Artificial Intelligence Act and China’s Cybersecurity Law (CSL) and AI Safety Governance Framework.

Denmark recently inaugurated its own sovereign AI supercomputer, and many other countries have undertaken their own sovereign AI projects and legislative processes to further regulatory frameworks, an indication of the trajectory of the trend. Some form of guidance is inevitable, and restrictions are possible, if not likely.

Initial steps will be focused on applications of the technology, but as the focus on energy and water consumption and greenhouse gas emissions intensifies, regulations could extend to types of AI application and data centre resource consumption. In 2025, governance will continue to be local or regional rather than global, and the consistency and stringency of enforcement will widely vary.

Simon Rowley - 21 November 2024

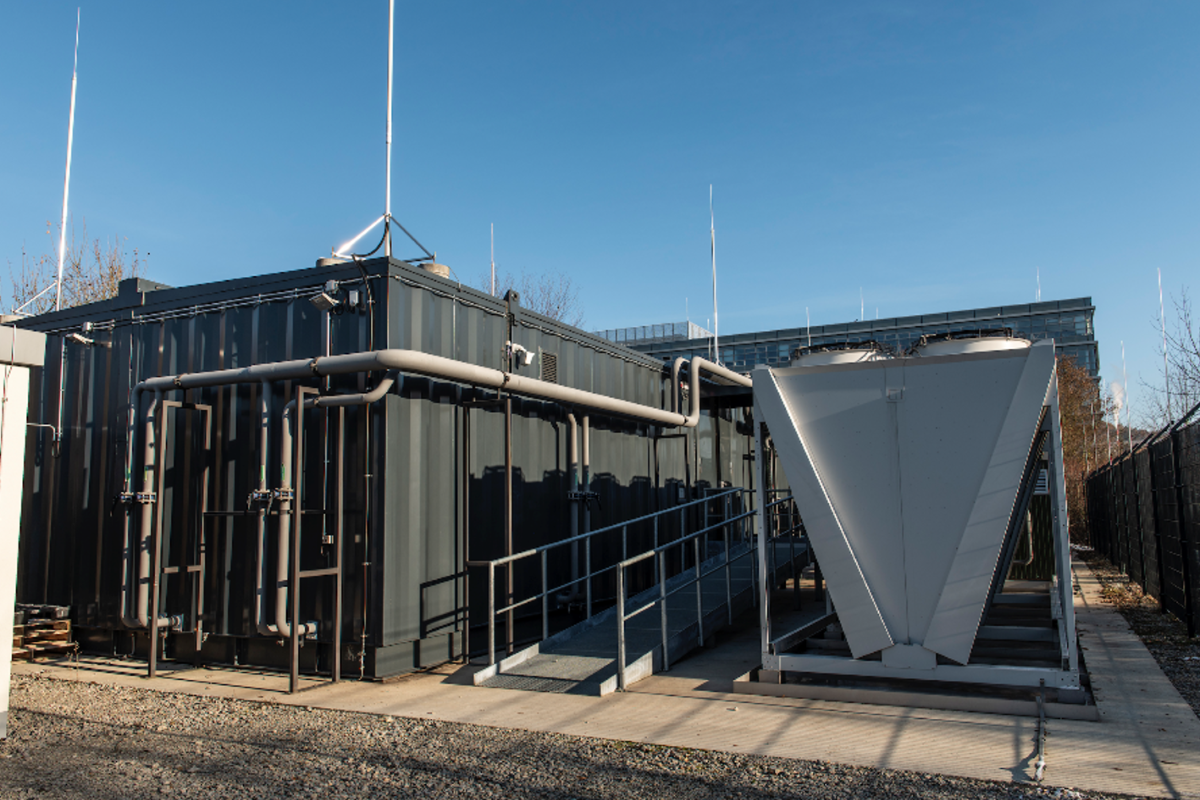

STULZ Modular configures data centre at University of Göttingen

STULZ Modular, a provider of modular data centre solutions and a wholly owned subsidiary of STULZ, has announced the completion of an installation at the University of Göttingen in Germany for the Emmy supercomputer, which employs an innovative combination of direct to chip liquid and air cooling.

One of the top 100 most powerful supercomputers in the world, Emmy is named after renowned German mathematician, Emmy Noether, who was described by Albert Einstein as one of the most important women in the field of mathematics.

The University of Göttingen needed a new data centre to house Emmy, as the existing facilities could not provide the required space and cooling infrastructure. It needed to be a modular construction with a 1.5MW total capacity that could accommodate further expansion, with the deployment of a cooling system that could remove heat density of up to 100kW per rack. Emmy’s power consumption was also a factor, so the implemented solution needed to address this by being as energy efficient and sustainable as possible.

"We were given less than two months to design and install a two room modular data centre with a cooling infrastructure, which would be installed on a ground slab and connected to the on-site transformer station," explains Dushy Goonawardhane, Managing Director at STULZ Modular. "Our solution comprises four prefabricated modules – two larger modules cover an area of 85m² and are joined along the spine to accommodate the direct to chip liquid cooled supercomputer. Two smaller modules are also joined along the spine to accommodate air cooled IT equipment in 70m² of space."

The entire data centre comprises high performance computers, 1,120kW direct to chip liquid cooled systems with approximately 20% residual heat, high-density racks and STULZ CyberAir and STULZ CyberRow precision air conditioning units with free cooling. With 96kW per full rack and 11 racks currently in situ, there is available capacity for up to 14 racks in total.

STULZ Modular worked with CoolIT Systems, which specialises in scalable liquid cooling solutions for the world’s most demanding computing environments, to incorporate direct to chip liquid cooling for Emmy’s microprocessors. The system comprises two liquid loops. The secondary loop provides a flow of cooling fluid from the cooling distribution unit (CDU) to the distribution manifolds and into the servers, where heat is transferred through cold plates into the coolant. The secondary fluid then flows into the heat exchanger in the CDU, where it transfers heat into the primary loop, and the absorbed heat energy is carried to a dry cooler and rejected.

The direct to chip liquid cooled system removes 78% (74.9kW) of the server heat load. A water-cooled STULZ CyberRow (with free cooling option) air cooling unit removes the remaining 22% (21.1kW) of the heat load produced by components within the server. The CyberRow’s return air temperature is specified at approximately 48°C, supply air temperature at 27-35°C and water temperature at 32-36°C.
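The split quoted above can be reproduced with simple arithmetic. The sketch below assumes the percentages apply to the 96kW full-rack figure given earlier in the article, which is a reading of the numbers rather than something the article states outright; the function name is hypothetical.

```python
def split_heat_load(rack_kw: float, liquid_fraction: float) -> tuple[float, float]:
    """Return (liquid_kw, air_kw) for one rack's heat load."""
    if not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("liquid_fraction must be between 0 and 1")
    liquid_kw = rack_kw * liquid_fraction
    return liquid_kw, rack_kw - liquid_kw


# Assumption: 96kW per full rack, with 78% removed by direct to chip cooling.
liquid_kw, air_kw = split_heat_load(rack_kw=96.0, liquid_fraction=0.78)
print(f"Liquid: {liquid_kw:.1f}kW, air: {air_kw:.1f}kW")
```

With those inputs the two shares round to the 74.9kW and 21.1kW quoted in the article.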

The University of Göttingen is dedicated to reducing its carbon footprint and overall energy consumption across its campus. The STULZ modular data centre provides 27% electricity savings at an average 75% load, equating to 3.96GWh per year. Furthermore, compared to a standard air-cooled data centre with a Power Usage Effectiveness (PUE) of 1.56 – the current industry average according to the Uptime Institute – the hybrid direct to chip liquid and air-cooling system provides an overall annual facility PUE of 1.13, with a 1.07 PUE for the liquid cooled supercomputer room alone.
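PUE is the ratio of total facility energy to IT equipment energy, so two PUE values can be compared directly when the IT load is the same. The sketch below makes that comparison for the figures quoted above. Equal IT energy in both cases is an assumption, and the article does not say how its own 27% figure was derived.

```python
def saving_fraction(pue_baseline: float, pue_new: float) -> float:
    """Fractional drop in total facility energy at equal IT energy.

    PUE = total facility energy / IT energy, so for a fixed IT energy
    the facility totals scale in proportion to the PUE values.
    """
    if pue_baseline < 1.0 or pue_new < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1 - pue_new / pue_baseline


# Figures quoted in the article: industry average versus the hybrid system.
saving = saving_fraction(pue_baseline=1.56, pue_new=1.13)
print(f"Facility energy saving at equal IT load: {saving:.1%}")
```

Under that assumption the saving comes out between 27% and 28%, in line with the 27% electricity saving the article reports.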

STULZ Modular’s Dushy Goonawardhane concludes, "This installation demonstrates our commitment to pushing the boundaries of data centre cooling technology. By combining direct-to-chip liquid cooling with our advanced air-cooling systems, we've created a solution that not only meets the extreme demands of supercomputing but also aligns with the University of Göttingen's sustainability goals. We are excited to share the complexity and learnings from this project in a white paper we have produced in cooperation with the University of Göttingen."

Simon Rowley - 20 November 2024

Sabey Data Centers forms partnership with Seguente

Sabey Data Centers, a designer, builder and operator of multi-tenant data centres, has announced a new strategic partnership with Seguente, a global technology company that provides innovative liquid-cooled IT hardware and AI software platforms for high-performance computing. This partnership enhances both companies' ability to deliver sustainable, optimised and scalable data centre solutions to customers across Sabey’s portfolio.

Seguente specialises in deploying IT hardware with a passive direct-to-chip liquid cooling technology through its Coldware product line, offering an energy-efficient and environmentally friendly solution for data centres housing high-density IT equipment. The system uses a passive two-phase liquid cooling method with ultra-low global warming potential dielectric fluids. It is waterless and pumpless, allowing for seamless integration into both new and existing data centre infrastructures. This advanced, low-maintenance cooling method significantly reduces power usage and provides greater flexibility and reliability in high-demand computing environments, including artificial intelligence (AI), high-performance computing (HPC) and 5G.

“This partnership with Sabey Data Centers represents a significant milestone in our vision to deliver fully configured IT and heat rejection products that are scalable and sustainable to support the global digital infrastructure evolution,” says Dr. Raffaele Luca Amalfi, CEO and co-founder of Seguente.

“By combining our innovative Coldware products with Sabey’s vast expertise in data centre operations, we are equipped to offer flexible, high-performance solutions that also reduce carbon footprint, power consumption and water usage of data centre operations.”

Rob Rockwood, President at Sabey Data Centers, adds, “Seguente’s advanced liquid cooling technology aligns perfectly with our mission to deliver state-of-the-art, energy-efficient data centres. This partnership will enable us to meet the growing demand for sustainable solutions in high-performance computing and AI environments.”

The partnership emphasises the shared commitment of both companies to innovation, sustainability and operational excellence, ensuring that their clients can continue to expect the most efficient and forward-looking solutions available in the industry.

Simon Rowley - 15 November 2024

Iceotope launches new precision liquid-cooled server

Iceotope, a Precision Liquid Cooling (PLC) specialist, has announced the launch of KUL AI, a new solution designed to deliver the promise of AI everywhere, offering significant operational advantages where enhanced thermal management and maximum server performance are critical.

KUL AI features an 8-GPU Gigabyte G293 data centre server-based solution integrated with Iceotope’s Precision Liquid Cooling and powered by Intel Xeon Scalable processors – the most powerful server integrated by Iceotope to date. Designed to support dense GPU compute, the 8-GPU G293 carries NVIDIA Certified-Solutions accreditation and is optimised by design for liquid cooling with dielectric fluids. KUL AI ensures uninterrupted, reliable compute performance by maintaining optimal temperatures, protecting critical IT components, and minimising failure rates, even during sustained GPU operations.

The surge in power consumption and sheer volume of data produced by new technologies including Artificial Intelligence (AI), high-performance computing (HPC), and machine learning poses significant challenges for data centres. To achieve maximum server performance without throttling, Iceotope's KUL AI uses an advanced precision cooling solution for faster processing, more accurate results, and sustained GPU execution, even for demanding workloads. KUL AI is highly scalable and proven to achieve up to four times compaction, handling growing data and model complexity without sacrificing performance.

Its innovative specifications make KUL AI ideal for a range of industries where AI is becoming increasingly essential: from AI research and development centres, HPC labs and cloud service providers (CSPs), to media production and visual effects (VFX) studios, and financial services and quantitative trading firms.

Fitting seamlessly into the KUL family of Iceotope technologies, KUL AI uses Iceotope’s Precision Liquid Cooling technology which offers several advantages – from providing uniform cooling across all heat-generating server components to reducing hotspots and improving overall efficiency. Additionally, PLC eliminates the need for supplementary air cooling, leading to simpler deployments and lower overall energy consumption.

Improving cost-effectiveness and operational efficiency are constant targets for Iceotope. KUL AI’s advanced thermal management maximises server utilisation, boosting compute density, cutting energy costs, and extending hardware lifespan for a lower total cost of ownership (TCO). Furthermore, KUL AI cuts energy use by up to 40% and water consumption by 96%, and minimises operational costs, while maintaining high thermal efficiency and meeting sustainability targets.

Built with scalability and adaptability in mind, KUL AI is deployable both in data centres and across all edge IT installations. Precision Liquid Cooling removes noisy server fans from the cooling process, resulting in near-silent operation and making KUL AI ideal for busy, populous non-IT workspaces that nonetheless demand sustained GPU performance.

Ideal for latency-sensitive edge deployments and environments with extreme conditions, KUL AI is sealed and protected at the server level, not only ensuring uniform cooling of all components on the GPU motherboard, but also rendering it impervious to airborne contaminants and humidity for greater reliability. Crucially, PLC minimises the risk of leaks and system damage, making it a safe choice for any critical environments.

Nathan Blom, Co-CEO of Iceotope, says, “The unprecedented volume of data being generated by new technologies demands a state-of-the-art solution which not only guarantees server performance, but delivers on all vectors of efficiency and sustainability. KUL AI is a pioneering product delivering more computational power and rack space. It offers a scalable system for data centres and is adaptable in non-IT environments, enabling AI everywhere.”

The launch will be showcased for the first time at Super Computing 2024, taking place in Atlanta from 17-22 November 2024. The Iceotope team will be welcoming interested parties at nVent Booth 1738. To schedule an introductory meeting, contact sales@iceotope.com.

Simon Rowley - 12 November 2024

atNorth announces data centre expansion in Iceland

atNorth, the Nordic colocation, high-performance computing, and AI service provider, has announced the substantial expansion of two of its data centres in Iceland.

The ICE02 campus near Keflavík will gain an additional capacity of 35MW, while the ICE03 site in Akureyri (which opened last year) will gain additional capacity of 16MW. Both sites have surplus space for further expansion in line with future demand.

Both data centre sites are highly energy efficient, operating at a maximum PUE of 1.2, and will also be able to accommodate the latest in air and liquid cooling technologies, depending on customer preference. The initial phase of ICE02’s expansion became operational in Q3 2024 and all further phases for both sites are expected to be completed in the first half of 2025.

The innovative design of the data centres caters to data-intensive businesses that require high-density infrastructure for high-performance computing. The sites currently accommodate companies such as Crusoe, Advania, RVX, DNV, Opera, BNP Paribas, and Tomorrow.io.

As part of atNorth’s ongoing commitment to sustainability and collaboration, the business has also entered into a partnership with AgTech startup Hringvarmi to recycle excess heat for use in food production. Under this agreement, Hringvarmi will place its Generation 1 prototype module within ICE03 to test the concept of transforming 'data into dinner' by utilising waste heat to grow microgreens in collaboration with the food producer Rækta Microfarm.

“We are delighted to be part of atNorth’s innovative data centre ecosystem”, says Justine Vanhalst, Co-Founder of Hringvarmi. “Our partnership aims to boost Iceland’s agriculture industry to lessen the need for imported produce and contribute to Iceland’s circular economy”.

The expansion plans reflect the huge demand, both domestically and internationally, for atNorth’s sustainable data centre solutions. Data-intensive businesses, including hyperscalers and companies that run AI and high-performance computing workloads, recognise the quality of the digital infrastructure available and are attracted by Iceland’s advantageous location. The country benefits from a consistently cool climate and an abundance of renewable energy, in addition to fully redundant connectivity and a highly skilled workforce.

“We are experiencing a considerable increase in interest in our highly energy efficient, sustainable data centres”, says Eyjólfur Magnús Kristinsson, CEO at atNorth. “We have power agreements and building permits in place and will meet this demand as part of our ongoing sustainable expansion strategy”.

atNorth operates seven data centres in four of the five Nordic countries and currently has four new data centre sites in development: two in Finland (FIN02 in Helsinki and FIN04 in Kouvola), and two in Denmark (DEN01 in the Ballerup region and DEN02 in Ølgod in Varde).

Simon Rowley - 7 November 2024
