Liquid Cooling Technologies Driving Data Centre Efficiency

Schneider Electric acquires liquid cooling company

Schneider Electric has announced that it has signed an agreement to acquire a controlling interest in Motivair Corporation, a company that specialises in liquid cooling and advanced thermal management solutions for high performance computing systems.

The advent of generative AI and the introduction of large language models (LLMs) have been additional catalysts driving higher power needs to support increasing digitisation across end markets. This shift to accelerated computing is resulting in new data centre architectures that require more efficient cooling, particularly liquid cooling, because air cooling alone cannot remove the additional heat these systems generate.

As compute within data centres becomes denser, the need for effective cooling will grow, with multiple market and analyst forecasts predicting growth in liquid cooling solutions in excess of 30% CAGR in the coming years. This transaction strengthens Schneider Electric’s portfolio of direct-to-chip liquid cooling and high-capacity thermal solutions, enhancing existing offerings and furthering innovation in cooling technology.
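Growth of "in excess of 30% CAGR" compounds quickly. The short Python sketch below shows the growth multiple a constant 30% annual rate implies; the three-, five- and seven-year horizons are illustrative choices, not periods taken from the forecasts cited above. Five years at 30% works out to roughly a 3.7x increase.

```python
def compound_growth(cagr: float, years: int) -> float:
    """Return the growth multiple implied by a constant compound annual growth rate."""
    return (1.0 + cagr) ** years


# Illustrative horizons only; the forecasts mentioned above do not state a period.
for years in (3, 5, 7):
    print(f"{years} years at 30% CAGR -> {compound_growth(0.30, years):.2f}x")
```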

Headquartered in Buffalo, New York, Motivair was founded in 1988 and currently has over 150 employees. Leveraging its strong engineering competency and deep domain expertise, Motivair has a range of offerings including coolant distribution units (CDUs), rear door heat exchangers (RDHx), cold plates and heat dissipation units (HDUs), alongside chillers for thermal management, giving customers a portfolio designed to meet the thermal challenges of modern computing technology.

While liquid cooling is not a new technology, its application to data centre and AI environments is a nascent market set for strong growth in the coming years. Motivair has years of experience cooling the world’s fastest supercomputers with liquid cooling solutions. In recent quarters, the company has been tracking a strong double-digit growth trajectory, which is expected to continue as it pivots to providing end-to-end liquid cooling solutions for several of the largest data centre and AI customers.

Peter Herweck, CEO of Schneider Electric, comments, “The acquisition of Motivair represents an important step, furthering our world leading position across the data centre value chain. The unique liquid cooling portfolio of Motivair complements our value proposition in data centre cooling and further strengthens our prominent position in data centre build out, from grid to chip and from chip to chiller.”

Rich Whitmore, President & CEO of Motivair Corporation - who will continue to run the Motivair business out of Buffalo after the closing of the transaction - adds, “Schneider Electric shares our core values and commitment to innovation, sustainability and excellence. Joining forces with Schneider will enable us to further scale our operations and invest in new technologies that will drive our mission forward and solidify our position as an industry leader. We are thrilled to embark on this exciting journey together.”

Under the terms of the transaction, Schneider Electric will acquire an initial 75% controlling interest in the equity of Motivair for an all-cash consideration of $850 million (£652m), which includes the value of a tax step-up, and values Motivair at a mid-single-digit multiple of projected FY2025 revenue.
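For a rough sense of scale, the price for the initial 75% stake can be scaled pro rata to a notional 100% value. This Python sketch does only that simple division; it is an illustrative approximation that ignores the tax step-up included in the consideration, any control premium, and whatever terms apply to the later purchase of the remaining 25%. On that basis the notional figure is roughly $1.13 billion.

```python
def implied_full_value(price_paid: float, stake: float) -> float:
    """Scale the price paid for a partial stake up to a notional 100% value, pro rata."""
    if not 0.0 < stake <= 1.0:
        raise ValueError("stake must be a fraction in (0, 1]")
    return price_paid / stake


# Simple pro-rata scaling only: no adjustment for the tax step-up or control premium.
usd_full = implied_full_value(850.0, 0.75)  # $m, consideration for the initial 75%
gbp_full = implied_full_value(652.0, 0.75)  # £m, same consideration as quoted in sterling
print(f"Notional 100% value: ${usd_full:,.0f}m (about £{gbp_full:,.0f}m)")
```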

The transaction is subject to customary closing conditions, including the receipt of required regulatory approvals, and is expected to close in the coming quarters. On completion, Motivair would be reported within the Energy Management business of Schneider Electric. The Group expects to acquire the remaining 25% of non-controlling interests in 2028.

Simon Rowley - 18 October 2024

Lenovo expands Neptune liquid cooling ecosystem

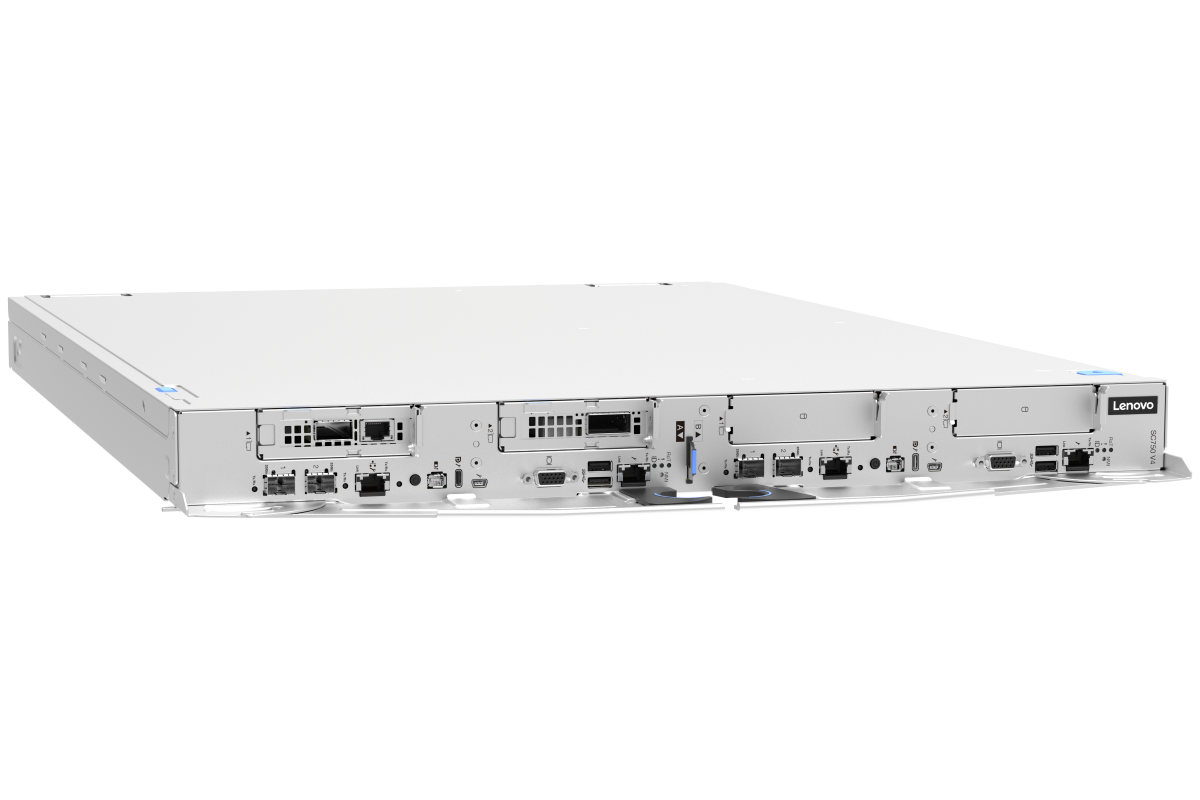

Lenovo has expanded its Neptune liquid-cooling technology to more servers with new ThinkSystem V4 designs that help businesses boost intelligence, consolidate IT and lower power consumption in the new era of AI.

Powered by Intel Xeon 6 processors with P-cores, the new Lenovo ThinkSystem SC750 V4 supercomputing infrastructure (pictured above) combines peak performance with advanced efficiency to deliver faster insights in a space-optimised design for intensive HPC workloads.

The full portfolio includes new Intel-based solutions optimised for rack density and massive transactional data, maximising processing performance in the data centre space for HPC and AI workloads.

“Lenovo is helping enterprises of every size and across every industry bring new AI use cases to life based on improvements in real-time computing, power efficiency and ease of deployment,” says Scott Tease, Vice President and General Manager of High-Performance Computing and AI at Lenovo. “The new Lenovo ThinkSystem V4 solutions powered by Intel will transform business intelligence and analytics by delivering AI-level compute in a smaller footprint that consumes less energy.”

As part of its ongoing investment in accelerating AI, Lenovo is pushing the envelope with the sixth generation of its Lenovo Neptune liquid-cooling technology, bringing it into mainstream use throughout its ThinkSystem V3 and V4 portfolios through compact design innovations that maximise computing performance while consuming less energy. Lenovo’s proprietary direct water-cooling recycles loops of warm water to cool data centre systems, enabling up to a 40% reduction in power consumption.

The Lenovo ThinkSystem SC750 V4 helps support customers’ sustainability goals for data centre operations with highly efficient direct water-cooling built directly into the solution, and with accelerators that deliver even greater workload efficiency and exceptional performance per watt. Engineered for space-optimised computing, the infrastructure fits within less than a square metre of data centre space in industry-standard 19-inch racks, pushing the boundaries of compact general-purpose supercomputing.

Leveraging the new infrastructure, organisations can achieve faster time-to-value by quickly and securely unlocking new insights from their data. The ThinkSystem SC750 V4 uses a next-generation MRDIMM memory solution to increase critical memory bandwidth by up to 40%. It is also designed for handling sensitive workloads with enhanced security features for greater protection.

Building on Lenovo’s leadership in AI innovation, the new solutions achieve advanced performance, increased reliability and higher density to propel intelligence.

Simon Rowley - 26 September 2024

Park Place Technologies introduces liquid cooling solutions

Park Place Technologies, a global data centre and networking optimisation firm, has announced the expansion of its portfolio of IT infrastructure services with the introduction of two liquid cooling solutions for data centres: immersion liquid cooling and direct-to-chip cooling.

This announcement comes at a critical time for businesses that are seeing a dramatic increase in the compute power they require, driven by the adoption of technologies like AI and IoT. This, in turn, is driving the need for more on-prem hardware, more space for that hardware, and more energy to run it all, presenting a significant financial and environmental challenge. Park Place Technologies says that its new liquid cooling solutions can help businesses address these challenges, as the technology has the potential to deliver strong financial and environmental results.

Direct-to-chip is an advanced cooling method that applies coolant directly to the server components that generate the most heat, including CPUs and GPUs. Immersion cooling, in which servers are submerged in a dielectric fluid, empowers data centre operators to do more with less: less space and less energy. According to Park Place Technologies, using these methods businesses can improve their Power Usage Effectiveness (PUE) by up to 18 times and rack density by up to 10 times. Ultimately, this can help deliver power savings of up to 50%, which in turn leads to lower operating costs.

From an environmental perspective, liquid cooling is significantly more efficient than traditional air cooling. The company says that air cooling technology currently captures only 30% of the heat generated by servers, compared with 100% for immersion cooling, resulting in lower carbon emissions for businesses that opt for immersion cooling.

Park Place Technologies can deliver a complete turnkey solution for organisations looking to implement liquid cooling technology, removing the complexity of adoption, which is a common barrier for businesses. It provides a single-vendor solution for the whole process: procuring the hardware, converting the servers for liquid cooling, installation, maintenance, and monitoring and management of both the hardware and the cooling technology.

“Our new liquid cooling offerings have the potential to have a significant impact on our customers’ costs and carbon emissions, two of the key issues they face today,” says Chris Carreiro, Chief Technology Officer at Park Place Technologies. “Park Place Technologies is ideally positioned to help organisations cut their data centre operations costs, giving them the opportunity to re-invest in driving innovation across their businesses.

“The decision to invest in immersion cooling and direct-to-chip cooling depends on various factors, including the specific requirements of the data centre, budget constraints, the desired level of cooling efficiency, and infrastructure complexity. Park Place Technologies can work closely with customers to find the best solution for their business, and can guide them towards the best long-term strategy, while offering short-term results. This takes much of the complexity out of the process, which will enable more businesses to capitalise on this exciting new technology.”

Simon Rowley - 24 September 2024

New 1MW Coolant Distribution Unit launched

Airedale by Modine, a critical cooling specialist, has announced the launch of a coolant distribution unit (CDU), in response to increasing demand for high performance, high efficiency liquid and hybrid (air and liquid) cooling solutions in the global data centre industry.

The Airedale by Modine CDU will be manufactured in the US and Europe and is suitable for both colocation and hyperscale data centre providers seeking to manage higher-density IT heat loads. The increasing data processing power of next-generation central processing units (CPUs) and graphics processing units (GPUs), developed to support complex IT applications like AI, results in higher heat loads that are most efficiently served by liquid cooling solutions.

The CDU is the key component of any liquid cooling system, isolating facility water systems from the IT equipment and precisely distributing coolant fluid to where it is needed in the server / rack. Delivering up to 1MW of cooling capacity based on ASHRAE W2 or W3 facility water temperatures, Airedale’s CDU offers the same quality and high energy efficiency associated with other Airedale by Modine cooling solutions.
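How much coolant a CDU has to move for a given heat load follows from the sensible heat balance Q = ṁ · c_p · ΔT. The Python sketch below estimates the flow for a 1MW load, assuming plain water on the secondary loop (glycol mixtures have a lower specific heat and need more flow) and using illustrative temperature rises of 5, 10 and 15 K; none of these values are Airedale specifications. At a 10 K rise the result is roughly 1,440 litres per minute, and halving ΔT doubles the required flow.

```python
WATER_CP = 4186.0       # J/(kg·K), specific heat of water near room temperature
WATER_DENSITY = 997.0   # kg/m^3, water at about 25 °C


def required_flow_lpm(heat_load_w: float, delta_t_k: float,
                      cp: float = WATER_CP, density: float = WATER_DENSITY) -> float:
    """Coolant flow in litres/minute needed to carry heat_load_w with a
    temperature rise of delta_t_k, from Q = m_dot * cp * dT."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    mass_flow_kg_s = heat_load_w / (cp * delta_t_k)
    volume_flow_m3_s = mass_flow_kg_s / density
    return volume_flow_m3_s * 1000.0 * 60.0  # m^3/s -> L/min


# Illustrative temperature rises across the IT load (assumed, not CDU ratings).
for dt in (5.0, 10.0, 15.0):
    print(f"1 MW at dT = {dt:.0f} K -> {required_flow_lpm(1e6, dt):,.0f} L/min")
```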

Developed with complete, intelligent cooling systems in mind, the CDU’s integrated controls communicate with the site building management system (BMS) and system controls for optimal performance and reliability. The ability to network up to eight CDUs makes it a flexible and scalable solution, responsive to a wide range of high-density loads. Manufactured with the highest quality materials and components, and with N+1 pump redundancy, the Airedale CDU is engineered to perform in the uptime-dependent world of the global hyperscale and colocation data centre markets.

Richard Burcher, Liquid Cooling Product Manager at Airedale by Modine, says, “Our investment in the liquid cooling market strengthens Airedale by Modine’s position in the data centre industry. We are seeing an increasing amount of enquiries for liquid cooling solutions, as providers move to a hybrid cooling approach to manage low to mid-density and high-density heat loads in the same space.

“Airedale by Modine is a complete system provider, encompassing air and liquid cooling, as well as control throughout the thermal chain, supported with in-territory aftersales. This expertise in all areas of data centre cooling affords our clients complete life-cycle assurance.”

Simon Rowley - 19 September 2024

Portus Data Centers announces new Hamburg site

Portus Data Centers has announced the planned availability of its new data centre (IPHH4) located on the IPHH Internet Port Hamburg Wendenstrasse campus.

Construction work will begin in the first quarter of 2025 and is due to be completed in the fourth quarter of 2026 (Phase 1). The Tier III+ data centre is designed for a PUE of 1.2 or under and will have a total IT load of 12.8MW (two infrastructures each 6.4MW).

The data centre area (white space) will be 6,380m² (3,190m² per building), and the grid connection capacity is 20.3MVA. The facility is designed to be fully compliant with the requirements of Germany’s new Energy Efficiency Act (EnEfG), and liquid cooling will be available for High Performance Computing (HPC).
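PUE is the ratio of total facility power to IT equipment power, so the IPHH4 design figures can be combined directly. This Python sketch multiplies the 12.8MW IT load by the 1.2 design PUE and compares the result with the 20.3MVA grid connection. It assumes a unity power factor so that MVA and MW can be compared one-to-one, which is a simplification rather than a figure from Portus. On those assumptions the facility would draw about 15.36MW at full IT load, leaving roughly 4.9MW of grid headroom.

```python
def facility_power_mw(it_load_mw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE.

    PUE = total facility power / IT equipment power, so total = IT * PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_load_mw * pue


it_load = 12.8       # MW, total IT load quoted for IPHH4
design_pue = 1.2     # design PUE quoted for IPHH4
grid_mva = 20.3      # MVA, grid connection capacity quoted for IPHH4
power_factor = 1.0   # ASSUMPTION: unity power factor, so 1 MVA is treated as 1 MW

total = facility_power_mw(it_load, design_pue)
overhead = total - it_load                  # cooling, power losses and other non-IT load
headroom = grid_mva * power_factor - total  # capacity left on the grid connection
print(f"At PUE {design_pue}: {total:.2f} MW total, "
      f"{overhead:.2f} MW non-IT overhead, {headroom:.2f} MW grid headroom")
```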

The IPHH data centre business was acquired by Arcus European Infrastructure Fund 3 SCSp on behalf of Portus last year. In addition to IPHH4, which will sit adjacent to the existing IPHH3 data centre at the main Wendenstrasse location, IPHH operates two other facilities in Hamburg.

Sascha E. Pollok, CEO of IPHH, comments, “With the benefit of significant investment by Arcus under the Portus Data Centers umbrella, I am excited to see the accelerated transformation and expansion of the IPHH operation. IPHH4 will be fully integrated into the virtual campus of IPHH2 and IPHH3, and will therefore be the ideal interconnect location in Hamburg. With around 50 carriers and network operators, just a standard cross connect away, IPHH4 will be a major and highly accessible interconnection hub.”

Adriaan Oosthoek, Chairman of Portus Data Centers, adds, “The rapid expansion of IPHH is testament to our mission to establish Portus Data Centers as a major regional force in Germany and adjacent markets. Our buy-and-build regional data centre aggregation strategy is focused on ensuring our current and future locations are equipped with the capacity and connectivity required to meet customer demand.”

IPHH’s growing customer base includes telecom carriers, global technology and social media companies and content distribution networks that rely on IPHH’s strong interconnection services. In line with Arcus’ commitment to ensuring that its investments have a positive ESG impact, the energy consumed by IPHH’s data centres is certified as 100% renewably sourced. IPHH’s highly efficient facilities currently achieve a power usage effectiveness (PUE) of circa 1.3, which is subject to continuous improvement as part of Portus’ ongoing commitment to optimising its ESG policies and practices.

Simon Rowley - 13 September 2024

Aruba activates liquid cooling in Ponte San Pietro data centre

Aruba, an Italian provider of cloud and data centre services, has announced the implementation of liquid cooling at its data centre campus in Ponte San Pietro (Bergamo), near Milan.

Liquid cooling enables data centres to support increasingly dense racks and meets the needs of new generations of processors. The technology is intended to support the specialised hardware of public and private customers with specific needs in AI or high-performance computing (HPC), applications that require high processing intensity.

“By establishing one of the first spaces equipped to accommodate liquid-cooled cabinets, Aruba is one of few industry players ready to provide the next generation of machines designed for artificial intelligence and high-performance computing," comments Giancarlo Giacomello, Head of Data Centre Offering.

“This type of solution responds to the growing needs of the market that require an increase in computing density and power, offering full compatibility with next-generation systems. Integrating liquid cooling solutions into our infrastructures is part of Aruba's innovation strategy, based on the desire to explore and support all initiatives that allow us to offer customers the highest quality, performance and environmentally sustainable solutions. To do so, we design and maintain our data centres at the forefront of technology, ready to face future challenges.”

Strengths of liquid cooling include:
• Higher thermal efficiency: liquids have a higher heat transfer capacity than air, allowing adequate thermal dissipation for each rack even above certain density thresholds.
• Energy efficiency: in high-density infrastructure, this type of cooling proves more efficient than traditional air systems.
• Higher computing density: liquid cooling supports processors and GPUs with high thermal requirements and can handle higher power densities.
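One way to quantify the "higher heat transfer capacity" point is volumetric heat capacity: the heat a cubic metre of fluid absorbs per kelvin of temperature rise. This Python sketch compares water and air using approximate textbook properties at about 25 °C and atmospheric pressure. It is a simplification that ignores convective heat transfer coefficients and pumping versus fan power, but it shows why a small liquid flow can replace a very large airflow: per unit volume, water carries on the order of 3,500 times more heat than air.

```python
# Approximate properties near 25 °C and 1 atm (rounded textbook values).
FLUIDS = {
    "air":   {"density": 1.184, "cp": 1005.0},  # kg/m^3, J/(kg·K)
    "water": {"density": 997.0, "cp": 4186.0},  # kg/m^3, J/(kg·K)
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Heat absorbed per cubic metre of fluid per kelvin of temperature rise, J/(m^3·K)."""
    props = FLUIDS[fluid]
    return props["density"] * props["cp"]


ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
print(f"Per unit volume, water absorbs about {ratio:,.0f}x more heat than air per kelvin")
```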

Thanks to the design of Aruba's data centres, the liquid cooling solution was integrated into an environment already in production without needing to dedicate a separate space or portion of the facilities to liquid cooling. This greatly reduced implementation time since all data rooms had already been prepared for applications involving liquid-based heat exchange.

Simon Rowley - 1 August 2024

Iceotope announces the retirement of CEO David Craig

Iceotope, the precision liquid cooling specialist, has announced the retirement of David Craig as CEO, effective 30 September 2024.

The company will be led jointly by Chief Commercial Officer (CCO) Nathan Blom and Chief Financial Officer (CFO) Simon Jesenko until David’s successor is appointed. David will continue to advise the company and provide assistance during the transition period.

Nathan has a leadership background driving revenue and strategy in Fortune 500 companies, including Lenovo and HP. Simon is a deep tech finance executive with experience supporting private equity and venture capital-backed companies as they achieve hypergrowth.

David joined Iceotope in June 2015 and during his nine years at the helm, he has successfully guided the company through a transformative period as it seeks to become recognised as the leader in precision liquid cooling. His achievements include building a strong team with a clear vision to engineer practical liquid cooling solutions to meet emerging challenges, such as AI, distributed telco edge, high power dense computing, and sustainable data centre operations.

Iceotope says its cooling technology is critical to meeting today’s global data centre sustainability challenges: it removes nearly 100% of the heat generated and reduces energy use by up to 40% and water consumption by up to 100%. The strength of its technology has attracted an international consortium of investors that includes ABC Impact, British Patient Capital, Northern Gritstone, nVent and SDCL.

David comments, “The past nine years have been an amazing ride – we have built a fantastic team, developed a great IP portfolio and created the only liquid cooling solution that addresses the thermal and sustainability challenges facing the data centre industry today and tomorrow.

“I have enjoyed every moment and have nothing but pride in the team, company and product. However, it feels like now is an appropriate time for me to step aside, enjoy retirement, and focus on other passions in my life; particularly my charitable work in the UK and Africa. I look forward to seeing the future success of Iceotope and can’t wait to see what comes next.”

Iceotope Chairman, George Shaw, states, “On behalf of everyone at Iceotope, we thank David for his dedication and endless enthusiasm for the company, the technology and the people who make it all possible. We know he will be a tremendous brand ambassador for precision liquid cooling in the years to come. We wish him all the best in his well-deserved retirement.”

Simon Rowley - 29 July 2024

Iceotope launches state-of-the-art liquid cooling lab

Iceotope Technologies, a global provider of precision liquid cooling technology, has announced the launch of Iceotope Labs, the first of its state-of-the-art liquid cooling lab facilities in Sheffield.

Iceotope Labs is designed to revolutionise high-density data centre research and testing capabilities for customers seeking to deploy liquid cooling solutions, and Iceotope believes it will set new standards as the industry's most advanced liquid-cooled data centre test environment available today.

Amid the exponential growth of AI and machine learning, liquid cooling is rapidly becoming an enabling technology for AI workloads. As operators evolve their data centre facilities to meet this market demand, validating liquid cooling technology is key to future-proofing infrastructure decisions.

By leveraging advanced monitoring capabilities, data analysis tools, and a specialist team of test engineers, Iceotope Labs will provide quantitative data and a state-of-the-art research and development (R&D) environment to demonstrate the benefits of liquid cooling to customers and partners seeking to utilise the latest advancements in high-density infrastructure and GPU-powered computing. Examples of recent research conducted by Iceotope Labs include groundbreaking testing of next-generation chip-level cooling at both 1,000W and 1,500W. These tests demonstrated precision liquid cooling’s ability to meet the thermal demands of the future computing architectures needed for AI compute.

Working in partnership with Efficiency IT, a UK specialist in data centres, IT and critical communications environments, the first of Iceotope’s bespoke labs showcases the adaptability and flexibility of leveraging liquid cooling in a host of data centre settings including HPC, supercomputing and edge environments. The fully functional, small-scale liquid cooled data centre includes two temperature-controlled test rooms and dedicated space for thermal, mechanical and electronic testing for everything from next generation CPUs and GPUs to racks and manifolds.

Iceotope Labs also features a facility water system (FWS) loop, a technology cooling system (TCS) loop with heat exchangers, and an outdoor dry cooler, demonstrating the key technologies of a complete liquid-cooled facility. The two flexible secondary loops are independent of each other and have a wide temperature band to stress-test the efficiency and resiliency of a customer's IT equipment when required. Additionally, the flexible test space follows all ASHRAE guidelines and best practices, providing optimal conditions, enhanced control and monitoring for a range of test setups while maximising efficiency and safety.

"We are investing in our research and innovation capabilities to offer customers an unparalleled opportunity," says David Craig, CEO of Iceotope. “Iceotope Labs not only serves as a blueprint for what a liquid cooled data centre should be, but is also a collaborative hub for clients to explore liquid cooling solutions without the need for their own lab space. It's a transformative offering within the data centre industry."

David continues, “We’d like to thank Efficiency IT for its role in bringing Iceotope Labs to fruition. Its design expertise has empowered us with the flexibility needed to create a cutting-edge facility that exceeds industry standards."

“With new advancements in GPU, CPU and AI workloads having a transformative impact on both data centre design and cooling architectures, it’s clear to see that liquid cooling will play a significant role in improving the resiliency, energy and environmental impact of data centres,” adds Nick Ewing, MD, EfficiencyIT. “We’re delighted to have supported Iceotope throughout the design, development and installation of its industry-first Iceotope Lab, and look forward to building on our collaboration as together, we develop a new customer roadmap for high-density, liquid-cooled data centre solutions.”

Located at Iceotope's global headquarters in Sheffield, UK, Iceotope Labs further expands the location as a hub for technology innovation and enables Iceotope to continue to deliver the highest level of customer experience.

Simon Rowley - 9 July 2024

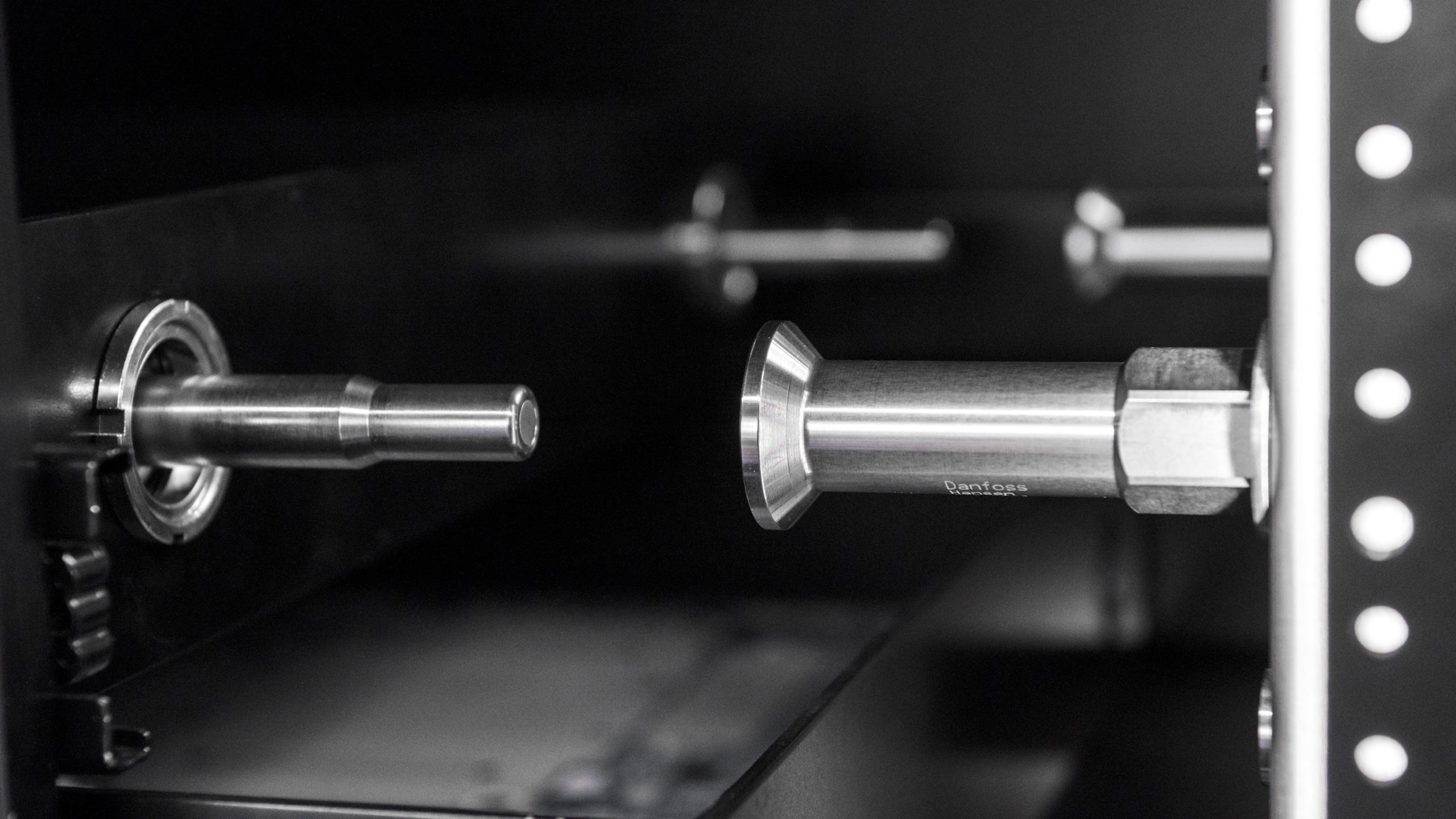

New Danfoss connector for liquid cooling applications

Danfoss Power Solutions has launched a Blind Mate Quick Connector for data centre liquid cooling applications.

Compliant with soon-to-be-released Open Compute Project Open Rack V3 specifications, the Danfoss Hansen BMQC simplifies installation and maintenance of inner rack servers while increasing reliability and efficiency.

The BMQC enables blind connection of the server chassis to the manifold at the rear of the rack, providing faster and easier installation and maintenance in inaccessible or non-visible locations. With its patented self-alignment design, the BMQC compensates for radial and angular misalignment of up to 5mm and 2.7 degrees respectively, enabling simple and secure connections.

The Danfoss Hansen BMQC also offers a highly reliable design, the company states. The coupling is manufactured from corrosion-resistant 303 stainless steel and the seal material is EPDM rubber, providing broad fluid compatibility and a long lifetime with minimal maintenance requirements. In addition, Danfoss performs helium leak testing on every BMQC to ensure 100% leak-free operation.

Amanda Bryant, Product Manager at Danfoss Power Solutions, comments, “As a member of the Open Compute Project community, Danfoss is helping set the industry standard for data centre liquid cooling. Our rigorous product design and testing capabilities are raising the bar for component performance, quality, and reliability. Highly critical applications like data centre liquid cooling require 100% uptime and leak-free operation, and our complete liquid cooling portfolio is designed to meet this demand, making Danfoss a strong system solution partner for data centre owners.”

With its high flow rate and low pressure drop, the BMQC improves system efficiency. This reduces the power consumption of the data centre rack, thereby reducing operational costs. Furthermore, the BMQC can be connected and disconnected under pressure without the risk of air entering the system. This eliminates the need to depressurise the entire system, minimising downtime.

The Danfoss Hansen BMQC features a working pressure of 2.4 bar (35 psi), a rated flow of 6 litres per minute (1.6 gallons per minute), and maximum flow rate of 10 lpm (2.6 gpm). It has a pressure drop of 0.15 bar (2.3 psi) at 6 lpm (1.6 gpm). It is available in a 5mm size and is interchangeable with other OCP Open Rack V3 blind mate quick couplings.
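The link between low pressure drop and lower rack power can be sketched from the quoted rating of 0.15 bar at 6 lpm. This Python example assumes the pressure drop scales with the square of flow, a common approximation for turbulent flow through fittings but not a Danfoss figure (the article gives no pressure drop at the 10 lpm maximum). It then computes the hydraulic power dissipated across one coupling as pressure drop times volumetric flow; the pump's electrical draw would be higher once pump efficiency is included. At the rated 6 lpm the loss works out to about 1.5W per coupling.

```python
BAR_TO_PA = 1e5
LPM_TO_M3S = 1e-3 / 60.0

RATED_FLOW_LPM = 6.0   # rated flow quoted for the BMQC
RATED_DP_BAR = 0.15    # pressure drop quoted at the rated flow


def pressure_drop_bar(flow_lpm: float) -> float:
    """Estimate the coupling pressure drop at another flow rate.

    ASSUMPTION: dp scales with flow squared (turbulent square-law approximation).
    """
    return RATED_DP_BAR * (flow_lpm / RATED_FLOW_LPM) ** 2


def hydraulic_loss_w(flow_lpm: float) -> float:
    """Hydraulic power dissipated across the coupling, P = dp * Q, in watts."""
    return pressure_drop_bar(flow_lpm) * BAR_TO_PA * flow_lpm * LPM_TO_M3S


for flow in (6.0, 10.0):
    print(f"{flow:.0f} L/min: dp ~ {pressure_drop_bar(flow):.2f} bar, "
          f"hydraulic loss ~ {hydraulic_loss_w(flow):.1f} W per coupling")
```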

Simon Rowley - 27 June 2024

Schneider experts explore liquid cooling for AI data centres

Schneider Electric has released its latest white paper, Navigating Liquid Cooling Architectures for Data Centres with AI Workloads.

The paper provides a thorough examination of liquid cooling technologies and their applications in modern data centres, particularly those handling high-density AI workloads.

The demand for AI is growing at an exponential rate. As a result, the data centres required to enable AI technology are generating substantial heat, particularly those containing AI servers with accelerators used for training large language models and inference workloads. This heat output is increasing the necessity for the use of liquid cooling to maintain optimal performance, sustainability, and reliability.

Schneider Electric’s latest white paper guides data centre operators and IT managers through the complexities of liquid cooling, offering clear answers to critical questions about system design, implementation, and operation.

Across 12 pages, authors Paul Lin, Robert Bunger, and Victor Avelar identify two main categories of liquid cooling for AI servers: direct-to-chip and immersion cooling. They also describe the components and functions of a coolant distribution unit (CDU), which are essential for managing temperature, flow, pressure, and heat exchange within the cooling system.

“AI workloads present unique cooling challenges that air cooling alone cannot address,” says Robert Bunger, Innovation Product Owner, CTO Office, Data Centre Segment, Schneider Electric. “Our white paper aims to demystify liquid cooling architectures, providing data centre operators with the knowledge to make informed decisions when planning liquid cooling deployments. Our goal is to equip data centre professionals with practical insights to optimise their cooling systems. By understanding the trade-offs and benefits of each architecture, operators can enhance their data centres’ performance and efficiency.”

The white paper outlines three key elements of liquid cooling architectures:

Heat capture within the server: Utilising a liquid medium (e.g. dielectric oil, water) to absorb heat from IT components.

CDU type: Selecting the appropriate CDU based on heat exchange methods (liquid-to-air, liquid-to-liquid) and form factors (rack-mounted, floor-mounted).

Heat rejection method: Determining how to effectively transfer heat to the outdoors, either through existing facility systems or dedicated setups.

The paper details six common liquid cooling architectures, combining different CDU types and heat rejection methods, and provides guidance on selecting the best option based on factors such as existing infrastructure, deployment size, speed, and energy efficiency.

With the increasing demand for AI processing power and the corresponding rise in thermal loads, liquid cooling is becoming a critical component of data centre design. The white paper also addresses industry trends such as the need for greater energy efficiency, compliance with environmental regulations, and the shift towards sustainable operations.

“As AI continues to drive the need for advanced cooling solutions, our white paper provides a valuable resource for navigating these changes,” Robert adds. “We are committed to helping our customers achieve their high-performance goals while improving sustainability and reliability.”

This white paper is particularly timely and relevant in light of Schneider Electric's recent collaboration with NVIDIA to optimise data centre infrastructure for AI applications. This partnership introduced the first publicly available AI data centre reference designs, leveraging NVIDIA's advanced AI technologies and Schneider Electric's expertise in data centre infrastructure.

Schneider claims that the reference designs set new standards for AI deployment and operation, providing data centre operators with innovative solutions to manage high-density AI workloads efficiently.

Simon Rowley - 27 June 2024

Head office & Accounts:

Suite 14, 6-8 Revenge Road, Lordswood

Kent ME5 8UD

T: +44 (0)1634 673163

F: +44 (0)1634 673173