Liquid Cooling Technologies Driving Data Centre Efficiency

Data Centre Infrastructure News & Trends

Danfoss expands UQDB coupling range

Danfoss Power Solutions, a Danish manufacturer of mobile hydraulic systems and components, has completed its Universal Quick Disconnect Blind-Mate (UQDB) coupling portfolio with the launch of the -08 size Hansen connector.

The couplings are designed for direct connection between servers and manifolds in data centre liquid cooling systems and are fully compliant with Open Compute Project (OCP) standards.

Higher flow capacity

The new -08 size joins the existing -02, -04, and -06 sizes, covering body sizes from 1/8-inch to 1/2-inch. The company says it delivers a 29% higher flow rate than OCP requirements, supporting greater cooling efficiency for high-density racks.
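The size codes follow the common hydraulic-fitting convention in which the dash number gives the nominal size in sixteenths of an inch. That is how -02 to -08 span 1/8-inch to 1/2-inch. Below is a minimal Python sketch of that conversion. It assumes the standard dash-size convention applies to these body sizes, and the function names are illustrative only, not part of any Danfoss tool.

```python
from fractions import Fraction

MM_PER_INCH = 25.4

def dash_to_inches(dash_size: str) -> Fraction:
    """Nominal size in inches: the dash number counts sixteenths of an inch."""
    number = int(dash_size.lstrip("-"))
    return Fraction(number, 16)

def dash_to_mm(dash_size: str) -> float:
    """The same nominal size expressed in millimetres."""
    return float(dash_to_inches(dash_size)) * MM_PER_INCH

# The four UQDB sizes named in the article
for size in ("-02", "-04", "-06", "-08"):
    print(f"{size}: {dash_to_inches(size)} in = {dash_to_mm(size):.2f} mm")
```

These are nominal body sizes. Actual bore and flow area depend on the coupling design.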

Danfoss UQDB couplings feature a flat-face dry break design to prevent spillage and a push-to-connect system with self-alignment to simplify installation in tight spaces. The plug half can move radially to align with the socket half, compensating for misalignment of up to ±1 millimetre for easier in-rack connections.

Developed in collaboration with the OCP community, the couplings meet existing standards and are designed to comply with the forthcoming OCP V2 specification for liquid cooling, expected in October.

All UQDB units undergo helium-leak testing for reliability and include QR codes on both plug and socket halves for easier identification and tracking.

https://www.youtube.com/watch?v=yjt9_O0Wb1o

Chinmay Kulkarni, Data Centre Product Manager at Danfoss Power Solutions, says, “Our now-complete UQDB range expands our robust portfolio of thermal management products for data centres, enabling us to provide comprehensive systems and delivering on our 'one partner, every solution' promise.

"When paired with our flexible, kink-free hoses, we deliver a complete direct-to-chip cooling solution that sets the standard for efficiency and reliability.”

The couplings are manufactured from 303 stainless steel for corrosion resistance, with EPDM seals for fluid compatibility. They feature O-ring boss (ORB) terminal ends for secure, leak-free connections, an operating temperature range of 10°C to 65°C, and a minimum working pressure of 10 bar.

Joe Peck - 19 August 2025

Aligned collaborates with Divcon for its Advanced Cooling Lab

Divcon Controls, a US provider of building management systems and electrical power monitoring systems for data centres and mission-critical facilities, has announced its role in the development of Aligned Data Centers’ new Advanced Cooling Lab in Phoenix, Arizona, where it served as the controls vendor for the facility.

The project marks a step forward in the design and management of liquid-cooled infrastructure to support artificial intelligence (AI) and high-performance computing (HPC) workloads.

The lab, which opened recently, is dedicated to testing advanced cooling methods for GPUs and AI accelerators. It reflects a growing need for more efficient thermal management as data centre density increases and energy requirements rise.

“As the data centre landscape rapidly evolves to accommodate the immense power and cooling requirements of AI and HPC workloads, the complexities of managing mechanical systems in these environments are escalating,” says Kevin Timmons, Chief Executive Officer of Divcon Controls.

“Our involvement with Aligned Data Centers' Advanced Cooling Lab has provided us with invaluable experience at the forefront of liquid cooling technology.

"We are actively developing and deploying advanced control platforms that not only optimise the performance of these systems, but also contribute to long-term sustainability goals.”

Divcon Controls has focused its work on managing the added complexity that liquid cooling introduces, including:

• Precise thermal control — Managing coolant flow, temperature, and pressure to improve heat transfer efficiency and reduce energy consumption.

• Integration with mechanical infrastructure — Coordinating the performance of pumps, heat exchangers, cooling distribution units (CDUs), and leak detection systems within a unified control framework.

• Load-responsive adjustment — Adapting cooling output in real time to match fluctuating IT loads, helping maintain optimal operating conditions while limiting energy waste.

• Visibility and predictive maintenance — Providing operators with detailed analytics on system performance to support proactive maintenance and longer equipment life.

• Support for hybrid environments — Enabling the transition between air and liquid cooling within the same facility, as demonstrated at Aligned’s lab.
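The sketch below makes load-responsive adjustment concrete with a textbook proportional-integral (PI) loop. The loop raises pump speed when the coolant return temperature drifts above a setpoint and lets it fall back as the IT load eases. It is a hypothetical illustration only: the class name, gains, setpoint and speed limits are all assumptions, and it does not represent Divcon Controls' platform.

```python
from dataclasses import dataclass

@dataclass
class PumpSpeedPI:
    """Hypothetical PI loop: pump speed (%) driven by coolant return temperature (°C)."""
    setpoint_c: float        # target return temperature, assumed
    kp: float                # proportional gain, % speed per °C
    ki: float                # integral gain, % speed per °C·s
    bias_pct: float = 50.0   # speed when the loop sits exactly on setpoint, assumed
    min_pct: float = 20.0    # lowest allowed pump speed, assumed
    max_pct: float = 100.0   # highest allowed pump speed
    integral: float = 0.0    # accumulated error (°C·s)

    def update(self, return_temp_c: float, dt_s: float) -> float:
        error = return_temp_c - self.setpoint_c   # positive when coolant runs hot
        candidate = self.integral + error * dt_s
        output = self.bias_pct + self.kp * error + self.ki * candidate
        # Anti-windup: keep the new integral only while the output is within limits
        if self.min_pct <= output <= self.max_pct:
            self.integral = candidate
        return min(max(output, self.min_pct), self.max_pct)

loop = PumpSpeedPI(setpoint_c=40.0, kp=5.0, ki=0.1)
for temp in (40.0, 43.0, 45.0, 41.0, 39.0):   # return temperatures as IT load rises, then falls
    print(f"{temp:.1f} °C -> pump {loop.update(temp, dt_s=1.0):.1f} %")
```

A production controller would also coordinate valves, CDUs and leak detection, as the list above notes. The PI loop shows only the core feedback idea.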

As more facilities transition to hybrid and liquid-cooled architectures, Divcon Controls says it is focusing on delivering control systems that enhance energy efficiency, reduce operational risk, and ensure long-term asset reliability.

“Our collaboration with industry leaders like Aligned Data Centers underscores our commitment to innovation and to solving the most pressing challenges in data centre infrastructure,” continues Kevin.

“Divcon Controls is proud to be at the forefront of developing intelligent control platforms for the next generation of high-density, AI-powered data centres, with environmental performance front of mind.”

Joe Peck - 29 July 2025

GF introduces first-ever full-polymer Quick Connect Valve

The Quick Connect Valve 700 is a patented dual-ball valve engineered with the aim of enhancing safety, efficiency, and sustainability in Direct Liquid Cooling (DLC) systems.

The company claims that, "as the first all-polymer quick connect valve for data centre applications, it is 50% lighter and facilitates 25% better flow compared to conventional metal alternatives while offering easy, ergonomic handling."

As demand for high-density, high-performance computing grows, DLC is reportedly becoming a preferred method for thermal management in next-generation data centres.

By transporting coolant directly to the chip, DLC can improve thermal efficiency compared to air-based methods. A key component in this setup is the Technology Cooling System (TCS), which distributes coolant from the Cooling Distribution Unit (CDU) to individual server racks.

To support this shift, GF, a manufacturer of plastic piping systems, valves, and fittings, has developed the Quick Connect Valve 700, a fully plastic, dual-ball valve engineered for direct-to-chip liquid cooling environments.

Positioned at the interface between the main distribution system and server racks, the valve is intended to enable fast, safe, and durable coolant connections in mission-critical settings.

Built on GF’s Ball Valve 546 Pro platform, the Quick Connect Valve 700 features two identical PVDF valve halves and a patented dual-interlock lever.

This mechanism ensures the valve can only be decoupled when both sides are securely closed, aiming to minimise fluid loss and maximise operator safety during maintenance. Its two-handed operation further reduces the risk of accidental disconnection.

The valve is made of corrosion-free polymer, making it over 50% lighter than metal alternatives, and carries a UL 94 V-0 flammability rating.

Combined with the ergonomic design of its interlocking mechanism, the valve is, according to the company, easy to handle during installation and operation.

At the same time, its full-bore valve design seeks to ensure an optimal flow profile and a reduced pressure drop of up to 25% compared to similar metal products.
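Pressure drop matters because of pumping power. The hydraulic power a pump must supply to push coolant through a component is the volumetric flow multiplied by the pressure drop across it, divided by pump efficiency. Below is a minimal sketch. The flow, pressure drop and efficiency values are illustrative assumptions and do not come from GF.

```python
def pumping_power_w(flow_m3_s: float, pressure_drop_pa: float, efficiency: float = 1.0) -> float:
    """Pump power needed for one component: P = Q * Δp / η (watts)."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return flow_m3_s * pressure_drop_pa / efficiency

flow = 2.0e-3                         # 2 L/s of coolant, hypothetical
metal_dp = 20_000.0                   # 20 kPa across a metal coupling, hypothetical
polymer_dp = metal_dp * (1 - 0.25)    # "up to 25%" lower pressure drop, per GF's claim
eta = 0.7                             # pump efficiency, hypothetical

metal_w = pumping_power_w(flow, metal_dp, eta)
polymer_w = pumping_power_w(flow, polymer_dp, eta)
print(f"metal: {metal_w:.1f} W, polymer: {polymer_w:.1f} W, saving: {1 - polymer_w / metal_w:.0%}")
```

At a fixed flow, power scales linearly with pressure drop. The saving therefore applies only to the valve's share of the loop's total pressure drop, not to the whole cooling loop.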

The product has a minimum expected service life of 25 years.

“With the Quick Connect Valve 700, we’ve created a critical link in the DLC cooling loop that’s not only lighter and safer, but more efficient,” claims Charles Freda, Global Head of Data Centers at GF.

“This innovation builds on our long-standing thermoplastic expertise to help operators achieve the performance and uptime their mission-critical environments demand.”

The Quick Connect Valve 700 has been assessed with an Environmental Product Declaration (EPD) according to ISO 14025 and EN 15804.

An EPD is a standardised, third-party verified document that uses quantified data from Life Cycle Assessments to estimate environmental impacts and enable comparisons between similar products.

Joe Peck - 28 July 2025

STULZ invests in Hamburg production facility for liquid cooling

STULZ, a manufacturer of mission-critical air conditioning technology, has invested in a new production facility dedicated to liquid cooling systems at its headquarters in Hamburg.

The expansion reflects the company’s focus on meeting growing demand for advanced cooling systems across high-performance computing and AI-driven data centres.

The site extension enables closer collaboration between STULZ’s research and development, product management, and service teams, aiming to improve internal coordination and streamline workflows.

According to the company, this will accelerate the delivery of liquid cooling innovations, reduce time to market, and enhance customer support capabilities across global markets.

“Liquid cooling is a highly effective way to efficiently dissipate heat from the sensitive IT equipment found in modern data centres,” says Jörg Desler, Global Director Technology at STULZ.

“Liquid cooling solutions must therefore be manufactured to the highest standards, with rigorously tested materials, modern quality management, efficient production processes, and qualified and experienced personnel.

"We are proud to have these attributes in place in Hamburg and are already expanding upon them with our new production facility.”

STULZ offers a range of liquid cooling systems which it says are tailored to the needs of modern data centre environments. These include configurable complete systems, advanced chillers with free cooling functionality, and modular technologies for scalable, high-density deployments.

The company states that all offerings are designed for precise temperature control, reliability, and sustainability.

Among the products manufactured at the new facility is the CyberCool CMU cooling distribution unit, which enables control over both the facility water system and the technology cooling system.

It manages coolant flow, temperature, and pressure across both sides of the liquid cooling infrastructure, with the aim of improving efficiency. The unit is available in two sizes and provides a continuously variable output of up to 1,380kW. It can also be customised to meet specific project requirements.
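The heat a CDU can move is tied to coolant flow by the sensible-heat relation Q = ṁ·cp·ΔT. The sketch below estimates the flow that 1,380kW implies. It assumes a water-like coolant (cp ≈ 4,186 J/kg·K, density ≈ 1 kg/L) and a hypothetical 10 K temperature rise, neither of which is specified by STULZ.

```python
def required_mass_flow_kg_s(heat_w: float, delta_t_k: float, cp_j_per_kg_k: float = 4186.0) -> float:
    """Mass flow needed to carry heat_w with a coolant temperature rise of delta_t_k."""
    if heat_w < 0 or delta_t_k <= 0 or cp_j_per_kg_k <= 0:
        raise ValueError("heat must be non-negative; delta_t and cp must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)

def kg_s_to_l_min(mass_flow_kg_s: float, density_kg_per_l: float = 1.0) -> float:
    """Convert mass flow to volumetric flow in litres per minute."""
    return mass_flow_kg_s / density_kg_per_l * 60.0

flow = required_mass_flow_kg_s(1_380_000.0, delta_t_k=10.0)   # 1,380kW, hypothetical ΔT
print(f"{flow:.1f} kg/s, about {kg_s_to_l_min(flow):,.0f} L/min")
```

Halving ΔT doubles the required flow. Glycol mixtures have a lower specific heat than water, so they need more flow for the same load.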

The CyberCool CMU is produced in Hamburg and distributed to customers across the EMEA and US regions, while other STULZ production sites supply additional global markets. New variants and expanded product sizes are currently under development, supported by ongoing investment in the Hamburg facility.

“With this expansion of our production capabilities, we are demonstrating our commitment to Hamburg and thus creating a further basis for growth, innovation, and sustainable employment, even in the face of international competition,” adds Jörg.

“The development of liquid cooling for high performance computing and AI-driven data centres is a key component of our strategy to strengthen technological leadership and uphold our high standards of quality and service.”

Joe Peck - 24 July 2025

XDS to host 10MW of AI workloads in Saudi's 'Desert Dragons'

UK- and Dubai-based XDS Datacentres (XDS), a developer of liquid immersion digital infrastructure, has signed a major agreement with ICS Arabia for the construction and delivery of the first 10MW immersion-cooled data centres in Riyadh and Jeddah.

This collaboration, developed within ICS Arabia's Desert Dragon technology ecosystem, aims to bring advanced computing capacity, sustainability, and scalability to support Saudi Arabia's digital transformation.

Under the terms of the 15-year agreement, ICS Arabia will design, construct, and hand over two 10MW facilities to XDS by Q4 2026. The project will use Desert Dragon's Tier III-certified infrastructure and immersion cooling technology to support high-density workloads such as AI, machine learning, blockchain, and other GPU-intensive applications. The facilities aim to set new benchmarks for energy-efficient, high-performance computing in the region.

The signing ceremony was held on 8 July at Desert Dragon’s headquarters in Riyadh, with key executives from both organisations in attendance.

Ghufran Hamid, CEO of XDS, states, "We are pleased to partner with ICS Arabia on this landmark deployment. The Kingdom represents a key growth market for XDS, and the initial 10MW facilities will showcase the potential of immersion-cooled infrastructure to deliver both performance and sustainability. XDS would like to contribute to Vision 2030 by supplying sustainable infrastructure meeting global ESG standards.

"This isn't just another facility, it's the beginning of a new era. No other data centre company is providing the services XDS will provide, with the switch from air-cooled to liquid immersion. As demand for high-density AI workloads, sovereign compute, and climate-resilient digital infrastructure continues to rise, traditional air-cooled data centres are already struggling to cope. Immersion cooling isn't a niche but an inevitability."

Abdullah Ayed Al Mazny, General Manager at Desert Dragon (ICS Arabia), adds, "Our partnership with XDS reflects our shared vision to deliver cutting-edge data centre capabilities in the Kingdom. Together, we are enabling sovereign digital infrastructure aligned with the ambitions of Saudi Vision 2030."

Immersion cooling at scale

Both the Riyadh and Jeddah facilities will feature full immersion cooling with rack densities of up to 368kW, making them suitable for services such as AI, GPU-as-a-Service (GPUaaS), cloud-native compute, and hyperscale edge deployment. The design includes redundant N+N power and cooling systems, Tier III certification (TCCF and TCDD), and high-capacity network interconnectivity.

Service and SLAs

Clients of XDS in Saudi Arabia will, according to the company, "benefit from 99.982% uptime guarantees, fully managed colocation services and smart hands, flexible power allocations, GPU-as-a-Service, private cloud, server conversion, customer rack migration and engineering support, Infrastructure-as-a-Service & Software-as-a-Service."
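An availability percentage can be turned into an annual downtime budget. Below is a minimal sketch. It assumes a 365-day (8,760-hour) year and treats the 99.982% figure as a simple annual availability ratio. The source does not give the actual SLA terms, measurement windows or exclusions. On those assumptions, 99.982% allows roughly 95 minutes (about 1.6 hours) of downtime per year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years

def annual_downtime_minutes(availability_pct: float, hours_per_year: float = HOURS_PER_YEAR) -> float:
    """Minutes per year a service may be unavailable at a given availability percentage."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability must be a percentage between 0 and 100")
    return (1.0 - availability_pct / 100.0) * hours_per_year * 60.0

minutes = annual_downtime_minutes(99.982)
print(f"99.982% -> {minutes:.1f} min/year ({minutes / 60:.2f} h)")
```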

Supporting Saudi Arabia's digital future

The project represents a milestone for both XDS and ICS Arabia as they contribute to building the Kingdom's digital infrastructure and sovereign data capabilities.

The XDS data centre will support national cloud initiatives, artificial intelligence growth, and enterprise workloads that require scalable, low-latency compute infrastructure.

Following the announcement of XDS's successful immersion-cooled facility in Dubai, this expansion into the Kingdom seeks to position the company as a key operator deploying immersion cooling at scale for high-density compute across the GCC.

Joe Peck - 15 July 2025

Trane expands liquid cooling portfolio

Trane, an American manufacturer of heating, ventilation, and air conditioning (HVAC) systems, has announced enhanced liquid cooling capabilities for its thermal management systems, intended to help data centres become more future-ready. These include new, scalable Coolant Distribution Units (CDUs) ranging from 2.5MW to 10MW.

“We are a trusted innovator for mission-critical infrastructure, continuously co-innovating with our customers to design and develop the custom, integrated thermal management systems needed to support sustainable business growth,” claims Steve Obstein, Vice President and General Manager, Data Centers & High-Tech, Trane Technologies. “Through our scalable, modular approach to liquid cooling we can provide a platform for future sustainable capacity growth and thermal load requirements associated with rapidly escalating AI needs.”

The scalable 2.5MW to 10MW platform complements Trane's existing 1MW CDU, aiming to give data centres flexible, direct-to-chip cooling capacity for high-density computing environments.

The company says it supports operations and uptime throughout the data centre lifecycle through its service network of data-centre-qualified technicians located close to customers, as well as Smart Service options for monitoring, predictive maintenance, and energy management.

Key features of the new products include:

• Modular scalability — Supporting cooling capacities up to 10MW, adaptable to data centre sizes.

• Direct-to-chip liquid cooling technology — Optimised for high-density data centres.

• Compact footprint — Provides up to 10MW cooling capacity in a factory-skid-mounted design.

• Service and support — Access to resources and data-centre-qualified technicians from Trane.

Joe Peck - 12 June 2025

Aligned debuts its Advanced Cooling Lab

Aligned Data Centers, a technology infrastructure company, has announced the launch of its new Advanced Cooling Lab. The lab is dedicated to testing and developing Aligned’s air and liquid cooling solutions for Graphics Processing Units (GPUs) and emerging AI accelerators.

Aligned's Phoenix-based Advanced Cooling Lab has been designed to promote hybrid cooling environments and advance data centre infrastructure. The company's Delta Cube air-cooled system and DeltaFlow liquid-cooled system aim to ensure customers have the capacity and performance needed for AI and HPC workloads.

“Aligned has been innovating data centre cooling for more than a decade,” says Michael Welch, Chief Technology Officer at Aligned Data Centers. “The Advanced Cooling Lab is a testament to our commitment to delivering cutting-edge data centre solutions and our passion for innovation. By investing in research and development, we can continue to provide our customers with the most flexible and advanced infrastructure available, capable of handling the dynamic demands of AI workloads.”

Joe Peck - 5 June 2025

Castrol launches new fluid management service

Castrol, a British multinational lubricants company owned by BP and best known for its presence in the automotive industry, has launched a new fluid management service for data centre liquid cooling, addressing a critical gap as the industry transitions away from traditional air-cooling systems.

Announced at the Datacloud Global Congress 2025 in Cannes, France, Castrol’s new service model aims to cover all four phases of the data centre operation lifecycle: system start-up, ongoing maintenance, break-fix support, and fluid disposal. The approach is designed to help remove operational barriers to the adoption of liquid cooling in data centres.

"Data centre operators recognise the benefits of liquid cooling but need assurance around long-term fluid management," states Peter Huang, Global Vice President of Data Centre Thermal Management at Castrol. "Castrol has delivered fluid services for the automotive industry for decades – we're now bringing this proven expertise to data centres with a service model that supports optimal performance throughout the entire lifecycle.”

The four-phase service includes:

1. System start-up support with fluid installation, filtration, system flushing, and certificates of analysis.

2. Ongoing maintenance, such as laboratory testing, dynamic monitoring, predictive maintenance, and smart dosing capabilities.

3. ‘Break-fix’ service, including telephone assistance, virtual engineering support, on-site response, and spare fluid availability.

4. Support with fluid collection and disposal.

Castrol’s service launch comes at a time when the data centre industry faces mounting pressure to improve cooling efficiency. Recent industry research indicates that traditional air-cooling systems struggle to handle increased computing demands from AI and edge computing applications, with 74% of data centre experts believing immersion cooling is now essential to meet current power requirements.

"Our aim with this new service model is to remove the operational and technical uncertainties that have slowed liquid cooling adoption," says Andrea Zunino, Global Offer Development Manager at Castrol. "Within liquid cooling systems, the fluid represents a single point of failure – degraded conditions can reduce cooling capacity and lead to equipment failure. We're going beyond just fluid supply to deliver structured support at every stage, giving data centre operators the confidence they need to embrace liquid cooling.”

The new service model will be deployed globally through Castrol's partner network, with all services delivered in conjunction with third-party suppliers. The availability of certain services may vary by location, and some may be introduced at different times depending on regional factors.

Joe Peck - 5 June 2025

LiquidStack unveils GigaModular CDU

LiquidStack, a global company specialising in liquid cooling for data centres, unveiled its all-new GigaModular CDU at Datacloud Global Congress. The company describes it as the industry’s first modular, scalable Coolant Distribution Unit with up to 10MW of cooling capacity, made possible through the unit’s modular platform and 'pay-as-you-grow' installation approach.

Driven by dramatic increases in the adoption of AI, cloud computing, and other data-intensive technologies, the global data centre liquid cooling market is predicted to grow from $5.17 billion in 2025 to approximately $15.75 billion by 2030. At the same time, the demanding nature of AI workloads is pushing data centre thermal management requirements even further. With hardware such as Nvidia’s B300 and GB300 soon to arrive, and more powerful generations expected to follow, the need to future-proof cooling capacity has never been greater. These technologies generate far greater heat densities than traditional processing units, with rack power densities already exceeding 120kW and expected to reach 600kW by the end of 2027.
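The market forecast implies a compound annual growth rate of roughly 25%. This follows from CAGR = (end / start)^(1 / years) − 1 over the five years from 2025 to 2030. Below is a quick check in Python that uses only the two figures quoted above.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0

rate = cagr(5.17, 15.75, years=2030 - 2025)   # market size in $ billions
print(f"Implied CAGR: {rate:.1%}")
```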

"AI will keep pushing thermal output to new extremes, and data centres need cooling systems that can be easily deployed, managed, and scaled to match heat rejection demands as they rise,” says Joe Capes, CEO of LiquidStack. “With up to 10MW of cooling capacity at N, N+1, or N+2, the GigaModular is a platform like no other — we designed it to be the only CDU our customers will ever need. It future-proofs design selections for direct-to-chip liquid cooling without traditional limits or boundaries."

Key features of the LiquidStack GigaModular CDU platform include:

● Scalable cooling capacity: A modular platform supporting single-phase, direct-to-chip liquid cooling heat loads from 2.5MW to 10MW.

● Pump module: An IE5 pump and dual brazed plate heat exchangers (BPHx), alongside dual 25µm strainers.

● Control module: A centralised design with separate pump and control modules.

● Instrumentation kits: Centralised pressure, temperature, and electromagnetic (EM) flow sensors.

● Simplified service access: Serviceable from the front of the unit, with no rear or end access required, allowing the system to be placed against a wall.

● Optional configuration: Skid-mounted system with rail and overhead piping pre-installed or shipped as separate cabinets for on-site assembly.
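The N, N+1 and N+2 options describe how many spare modules sit on top of those needed to carry the load. Below is a minimal sketch of that sizing arithmetic. It assumes a hypothetical per-module capacity, since LiquidStack's actual module ratings are not given here, and it ignores derating.

```python
import math

def modules_required(heat_load_kw: float, module_capacity_kw: float, spares: int = 0) -> int:
    """Modules for an N+spares design, where N = ceil(load / module capacity)."""
    if heat_load_kw < 0 or module_capacity_kw <= 0 or spares < 0:
        raise ValueError("load must be non-negative, capacity positive, spares non-negative")
    n = math.ceil(heat_load_kw / module_capacity_kw)
    return n + spares

MODULE_KW = 1_250.0   # hypothetical module rating, for illustration only
for label, spares in (("N", 0), ("N+1", 1), ("N+2", 2)):
    print(label, modules_required(7_000.0, MODULE_KW, spares))
```

Under a pay-as-you-grow approach, the same calculation can be rerun as the heat load rises, adding modules only when N increases.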

LiquidStack will showcase the new GigaModular CDU at Datacloud Global Congress in Cannes, France, from 3-5 June at the Palais des Festivals. Attendees can visit LiquidStack at Booth #88 for a VR-driven demonstration.

Quoting for the GigaModular CDU will begin by September 2025, with production at LiquidStack’s manufacturing facilities in Carrollton, Texas, USA.

Joe Peck - 3 June 2025

Supermicro introduces new direct liquid-cooling innovation

Supermicro has announced several improvements to its Direct Liquid Cooling (DLC) solution that incorporate new technologies for cooling various server components, accommodate warmer liquid inflow temperatures, and introduce innovative mechanical designs that enhance AI per watt.

According to Supermicro, the DLC-2 solution reduces data centre power consumption by up to 40% compared to air-cooled installations and cuts total cost of ownership by up to 20%, while enabling faster deployment and reduced time-to-online for liquid-cooled AI infrastructure. Comprehensive cold plate coverage of components allows lower fan speeds and fewer fans, reducing data centre noise levels to approximately 50dB.

"With the expected demand for liquid-cooled data centres rising to 30% of all installations, we realised that current technologies were insufficient to cool these new AI-optimised systems," says Charles Liang, President and CEO of Supermicro. "Supermicro continues to remain committed to innovation, green computing, and improving the future of AI, by significantly reducing data centre power and water consumption, noise, and space. Our latest liquid-cooling innovation, DLC-2, saves data centre electricity costs by up to 40%."

Supermicro aims to cut data centre costs by 20% and to offer DLC-2 innovations as part of its data centre building block solutions, making liquid cooling more broadly available and accessible.

A significant component of the new liquid-cooling architecture is a GPU-optimised Supermicro server with eight NVIDIA Blackwell GPUs and two Intel Xeon 6 CPUs in just 4U of rack height. The system is designed to support higher supply coolant temperatures and incorporates cold plates for CPUs, GPUs, memory, PCIe switches, and voltage regulators. This reduces the need for high-speed fans and rear-door heat exchangers, lowering cooling costs for the data centre.

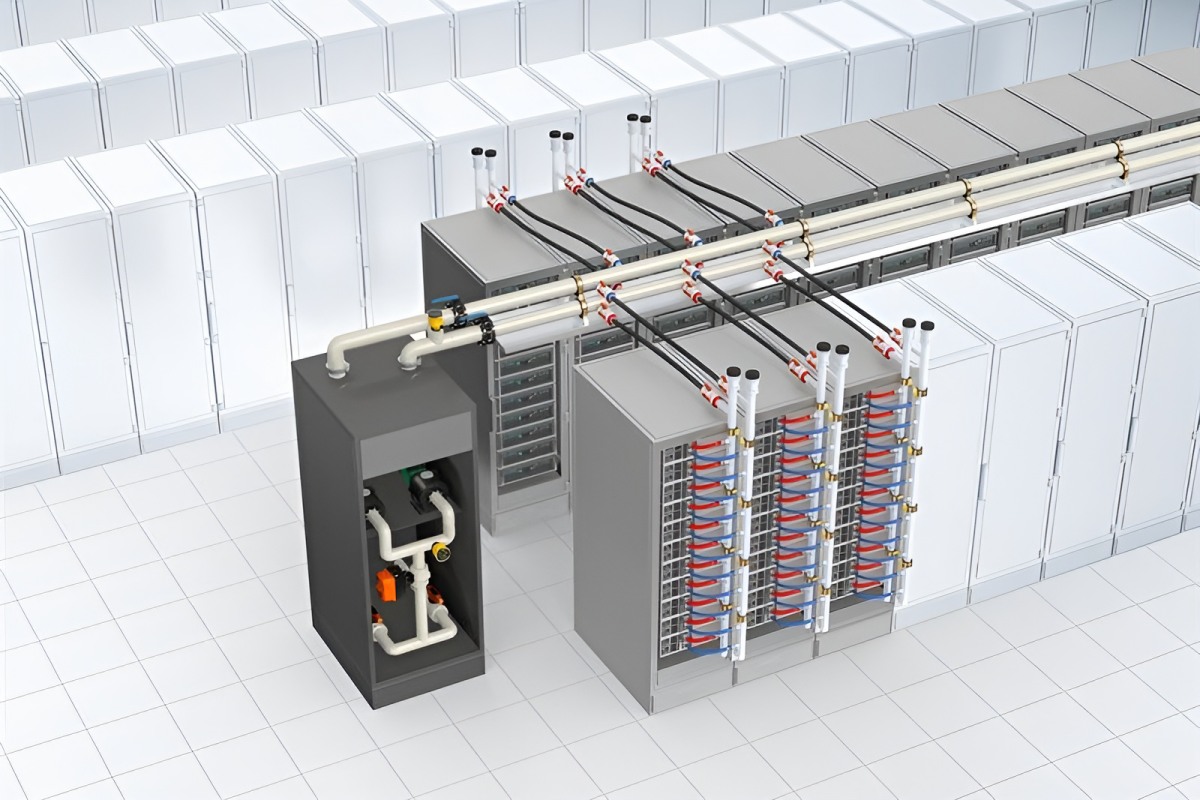

The new Supermicro DLC-2 stack supports the new 4U front I/O NVIDIA HGX B200 8-GPU system, and its in-rack Coolant Distribution Unit (CDU) has an increased capacity, removing up to 250kW of heat per rack. The DLC-2 solution also uses vertical coolant distribution manifolds (CDMs) that carry hot liquid away from, and return cooler liquid to, every server in the rack. The reduced rack space requirements enable more servers to be installed, increasing computing density per unit of floor space. The vertical CDM is available in various sizes to match the number of servers installed in the rack. The entire DLC-2 stack is fully integrated with Supermicro SuperCloud Composer software for data centre-level management and infrastructure orchestration.

Efficient liquid circulation and near-complete liquid heat capture, of up to 98% per server rack, allow inlet liquid temperatures of up to 45°C. The higher inlet temperature can eliminate the need for chilled water, along with the cost of chiller compressor equipment and its additional power usage, which Supermicro says saves up to 40% of data centre water consumption.
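The 98% capture figure also shows how much heat is still left for room air handling to remove. Below is a minimal sketch. For illustration only, it assumes a rack dissipating 250kW, which is the quoted in-rack CDU capacity rather than a stated rack load, with 98% of that heat captured by liquid.

```python
def split_rack_heat_kw(rack_heat_kw: float, liquid_capture: float) -> tuple[float, float]:
    """Return (heat removed by liquid, residual heat rejected to air) in kW."""
    if rack_heat_kw < 0 or not 0.0 <= liquid_capture <= 1.0:
        raise ValueError("heat must be non-negative and capture a fraction in [0, 1]")
    to_liquid = rack_heat_kw * liquid_capture
    return to_liquid, rack_heat_kw - to_liquid

liquid_kw, air_kw = split_rack_heat_kw(250.0, 0.98)   # hypothetical full-load rack
print(f"liquid: {liquid_kw:.1f} kW, air: {air_kw:.1f} kW")
```

Even at high capture ratios, the residual air load is not trivial at these densities: 2% of 250kW is about 5kW per rack.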

Alongside liquid-cooled server racks and clusters, DLC-2 also offers hybrid cooling towers and water towers as part of Supermicro's data centre building blocks. The hybrid cooling towers combine the features of standard dry and water towers in a single design, which is especially beneficial in locations with strong seasonal temperature variation, further reducing resource use and costs.

Supermicro describes itself as a comprehensive one-stop solution provider with global manufacturing scale, delivering data centre-level solution design, liquid-cooling technologies, networking, cabling, a full data centre management software suite, L11 and L12 solution validation, onsite deployment, and professional service and support. With production facilities in San Jose, Europe, and Asia, the company says it offers unmatched manufacturing capacity for liquid-cooled rack systems, ensuring timely delivery, reduced total cost of ownership (TCO), and consistent quality.

Simon Rowley - 15 May 2025

Head office & Accounts:

Suite 14, 6-8 Revenge Road, Lordswood

Kent ME5 8UD

T: +44 (0)1634 673163

F: +44 (0)1634 673173