Liquid Cooling Technologies Driving Data Centre Efficiency

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Aggreko expands liquid-cooled load banks for AI DCs

Aggreko, a British multinational temporary power generation and temperature control company, has expanded its liquid-cooled load bank fleet by 120MW to meet rising global demand for commissioning equipment used in high-density data centres.

The company plans to double this capacity in 2026, supporting deployments across North America, Europe, and Asia, as operators transition to liquid cooling to manage the growth of AI and high-performance computing.

Increasing rack densities, now reaching between 300kW and 500kW in some environments, have pushed conventional air-cooling systems to their limits. Liquid cooling is becoming the standard approach, offering far greater heat removal efficiency and significantly lower power consumption.
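To put the efficiency gap in rough numbers, the sketch below uses the sensible-heat relation Q = m × c_p × ΔT to compare the volume of air and of water needed to carry away the same rack load. The fluid properties are rounded textbook values and the 10 K temperature rise is an assumption chosen for illustration; neither comes from Aggreko.

```python
# Illustrative fluid properties (assumed round values near room temperature).
AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3, J/(kg*K)
WATER = {"density": 997.0, "cp": 4180.0}  # kg/m^3, J/(kg*K)


def volumetric_flow_m3_per_s(heat_w, delta_t_k, fluid):
    """Volume flow needed to carry heat_w with a temperature rise of delta_t_k."""
    if heat_w < 0 or delta_t_k <= 0:
        raise ValueError("heat must be >= 0 and delta_t must be > 0")
    mass_flow = heat_w / (fluid["cp"] * delta_t_k)  # kg/s, from Q = m * cp * dT
    return mass_flow / fluid["density"]


rack_heat_w = 300_000.0  # lower end of the 300-500 kW range quoted above
delta_t_k = 10.0         # assumed temperature rise of the cooling medium

air_flow = volumetric_flow_m3_per_s(rack_heat_w, delta_t_k, AIR)
water_flow = volumetric_flow_m3_per_s(rack_heat_w, delta_t_k, WATER)

print(f"air:   {air_flow:.1f} m^3/s")
print(f"water: {water_flow * 1000:.1f} L/s")
print(f"ratio: {air_flow / water_flow:.0f}x")
```

Under these assumptions, water needs a volume flow several thousand times smaller than air for the same heat load, which is why air handling becomes impractical at these rack densities.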

As these systems mature, accurate simulation of thermal and electrical loads has become essential during commissioning to minimise downtime and protect equipment.

The expanded fleet enables Aggreko to provide contractors and commissioning teams with equipment capable of testing both primary and secondary cooling loops, including chiller lines and coolant distribution units. The load banks also simulate electrical demand during integrated systems testing.

Billy Durie, Global Sector Head – Data Centres at Aggreko, says, “The data centre market is growing fast, and with that speed comes the need to adopt energy efficient cooling systems. With this comes challenges that demand innovative testing solutions.

“Our multi-million-pound investment in liquid-cooled load banks enables our partners - including those investing in hyperscale data centre delivery - to commission their facilities faster, reduce risks, and achieve ambitious energy efficiency goals.”

Supporting commissioning and sustainability targets

Liquid-cooled load banks replicate the heat output of IT hardware, enabling operators to validate cooling performance before systems go live. This approach can improve Power Usage Effectiveness and Water Usage Effectiveness while reducing the likelihood of early operational issues.
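For readers less familiar with the two metrics, the following is a minimal sketch of how they are commonly defined: PUE as total facility energy divided by IT energy, and WUE as site water use in litres divided by IT energy in kWh. The annual figures are hypothetical placeholders, not Aggreko or customer data.

```python
def pue(total_facility_kwh, it_kwh):
    """Power Usage Effectiveness: total facility energy / IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def wue(site_water_litres, it_kwh):
    """Water Usage Effectiveness: litres of water per kWh of IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return site_water_litres / it_kwh


# Hypothetical annual figures for a single site (illustration only).
it_energy_kwh = 10_000_000
total_energy_kwh = 11_500_000
water_litres = 18_000_000

print(f"PUE = {pue(total_energy_kwh, it_energy_kwh):.2f}")
print(f"WUE = {wue(water_litres, it_energy_kwh):.2f} L/kWh")
```

A PUE of 1.0 would mean every unit of energy reaches the IT equipment, so lower values are better for both metrics.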

Manufactured with corrosion-resistant materials and advanced control features, the equipment is designed for use in environments where reliability is critical.

Remote operation capabilities and simplified installation procedures are also intended to reduce commissioning timelines.

With global data centre power demand projected to rise significantly by 2030, driven by AI and high-performance computing, the ability to validate cooling systems efficiently is increasingly important.

Aggreko says it also provides commissioning support as part of project delivery, working with data centre teams to develop testing programmes suited to each site.

Billy continues, “Our teams work closely with our customers to understand their infrastructure, challenges, and goals, developing tailored testing solutions that scale with each project’s complexity.

“We’re always learning from projects, refining our design and delivery to respond to emerging market needs such as system cleanliness, water quality management, and bespoke, end-to-end project support.”

Aggreko states that the latest investment strengthens its ability to support high-density data centre construction and aligns with wider moves towards more efficient and sustainable operations.

Billy adds, “The volume of data centre delivery required is unprecedented. By expanding our liquid-cooled load bank fleet, we’re scaling to meet immediate market demand and to help our customers deliver their data centres on time.

“This is about providing the right tools to enable innovation and growth in an era defined by AI.”

Joe Peck - 5 December 2025

Why cooling design is critical to the cloud

In this article for DCNN, Ross Waite, Export Sales Manager at Balmoral Tanks, examines how design decisions today will shape sustainable and resilient cooling infrastructure for decades to come:

Running hot and running dry?

Driven by the surge in AI and cloud computing, new data centres are appearing at pace across Europe, North America, and beyond. Much of the debate has focused on how we power sites, yet there is another side to the story, one that determines whether those billions invested in servers actually deliver: cooling.

Servers run hot, 24/7, and without reliable water systems to manage that heat, even the best-connected facilities cannot operate as intended. In fact, cooling is fast becoming the next frontier in data centre design and the decisions made today will echo for decades.

A growing thirst

Data centres are rapidly emerging as one of the most significant commercial water consumers worldwide. Current global estimates suggest that facilities already use over 560 billion litres of water annually, with that figure set to more than double to 1,200 billion litres by 2030 as AI workloads intensify.

The numbers at an individual site are equally stark. A single 100 MW hyperscale centre can use up to 2.5 billion litres per year - enough to supply a city of 80,000 people. Google has reported daily use of more than 2.1 million litres at some sites, while Microsoft’s 2023 global consumption rose 34% year-on-year to reach 6.4 million cubic metres. Meta reported 95% of its 2023 water use - some 3.1 billion litres - came from data centres.

The majority of this is consumed in evaporative cooling systems, where 80% of drawn water is lost to evaporation and just 20% returns for treatment. While some operators are trialling reclaimed or non-potable sources, these currently make up less than 5% of total supply.
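The arithmetic behind these figures can be checked with a short sketch. It uses only numbers quoted in the article (2.5 billion litres per year, a city of 80,000 people, the 80/20 evaporation split, and the 560 to 1,200 billion litre projection); the variable names are illustrative.

```python
# Figures quoted in the article for a single 100 MW hyperscale site.
annual_draw_l = 2.5e9        # litres drawn per year (upper figure quoted)
evaporated_share = 0.80      # share lost to evaporation in evaporative systems
population = 80_000          # size of the city used for comparison

evaporated_l = annual_draw_l * evaporated_share
returned_l = annual_draw_l - evaporated_l
per_person_per_day_l = annual_draw_l / population / 365

# Global projection quoted in the article, in billions of litres per year.
growth_factor = 1200 / 560

print(f"evaporated: {evaporated_l / 1e9:.1f} billion L/year")
print(f"returned:   {returned_l / 1e9:.1f} billion L/year")
print(f"equivalent: {per_person_per_day_l:.0f} L per person per day")
print(f"projected global growth to 2030: {growth_factor:.2f}x")
```

The per-person figure is simply the quoted annual draw spread across the quoted population; it is a consistency check on the comparison, not an independent estimate of household water use.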

The headline numbers can sound bleak, but water use is not inherently unsustainable. Increasingly, facilities are moving towards closed-loop cooling systems that recycle water for six to eight months at a time, reducing continuous draw from mains supply. These systems require bulk storage capacity, both for the initial fill and for holding treated water ready for reuse.

Designing resilience into water systems

This is where design choices made early in a project pay dividends. Consultants working on new builds are specifying not only the volume of water storage or the type of system that should be used but also the standards to which they are built. Tanks that support fire suppression, potable water, and process cooling need to meet stringent criteria, often set by insurers as well as regulators.

Selecting materials and coatings that deliver 30-50 years of service life can prevent expensive retrofits and reassure both clients and communities that these systems are designed to last. Smart water management, in other words, begins not onsite, but on the drawing board.

For consultants who are designing the build specifications for data centres, water is more than a technical input; it is a reputational risk. Once a specification is signed off and issued to tender, it is rarely altered. Getting it right first time is essential. That means selecting partners who can provide not just tanks, but expertise: helping ensure that water systems meet performance, safety, and sustainability criteria across decades of operation.

The payback is twofold. First, consultants safeguard their client’s investment by embedding resilience from the start. Second, they position themselves as trusted advisors in one of the most scrutinised aspects of data centre development. In a sector where projects often run to tens or hundreds of millions of pounds, this credibility matters.

Power may dominate the headlines, but cooling - and by extension water - is the silent foundation of the digital economy. Without it, AI models do not train, cloud services do not scale, and data stops flowing. The future of data centres will be judged not only on how much power they consume, but on how intelligently they use water - and that judgement begins with design.

If data centres are the beating heart of the modern economy, then water is the life force that keeps them alive. Cooling the cloud is not an afterthought; it is the future.

Joe Peck - 17 November 2025

Products

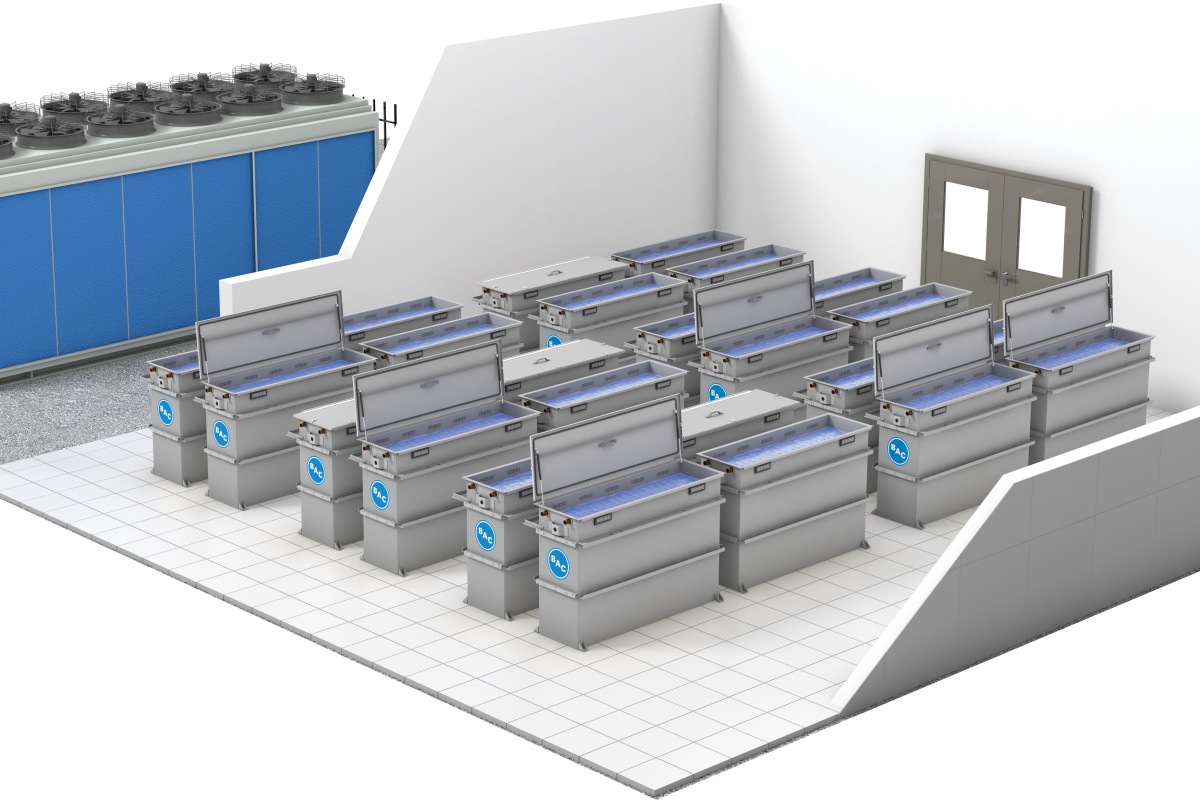

BAC releases upgraded immersion cooling tanks

Baltimore Aircoil Company (BAC), a provider of data centre cooling equipment, has introduced an updated immersion cooling tank for high-performance data centres, incorporating its CorTex technology to improve reliability, efficiency, and support for high-density computing environments.

The company says the latest tank has been engineered to provide consistent performance with minimal maintenance, noting its sealed design has no penetrations below the fluid level, helping maintain fluid integrity and reduce leakage risks.

Dual pumps are included for redundancy and the filter-free configuration removes the need for routine filter replacement.

Design improvements for reliability and ease of operation

The tanks are available in four sizes - 16RU, 32RU, 38RU, and 48RU - allowing operators to accommodate a range of immersion-ready servers. Air-cooled servers can also be adapted for immersion use.

Each unit supports server widths of 19 and 21 inches (~48 cm and ~53 cm) and depths up to 1,200 mm, enabling higher rack densities within a smaller footprint than traditional air-cooled systems.

BAC states that the design can support power usage effectiveness (PUE) levels as low as 1.05, depending on the wider installation.

The system uses dielectric fluid to transfer heat from servers to the internal heat exchanger, while external circuits can run on water or water-glycol mixtures.

Cable entry points, the lid, and heat-exchanger connections are fluid-tight to help prevent contamination.

The immersion tank forms the indoor component of BAC’s Cobalt system, which combines indoor and outdoor cooling technologies for high-density computing.

The system can be paired with BAC’s evaporative, hybrid, adiabatic, or dry outdoor equipment to create a complete cooling configuration for data centres managing higher-powered servers and AI-driven workloads.

Joe Peck - 17 November 2025

ZutaCore unveils waterless end-of-row CDUs

ZutaCore, a developer of liquid cooling technology, has introduced a new family of waterless end-of-row (EOR) coolant distribution units (CDUs) designed for high-density artificial intelligence (AI) and high-performance computing (HPC) environments.

The units are available in 1.2 MW and 2 MW configurations and form part of the company’s direct-to-chip, two-phase liquid cooling portfolio.

According to ZutaCore, the EOR CDU range is intended to support multiple server racks from a single unit while maintaining rack-level monitoring and control.

The company states that this centralised design reduces duplicated infrastructure and enables waterless operation inside the white space, addressing energy-efficiency and sustainability requirements in modern data centres.

The cooling approach uses ZutaCore’s two-phase, direct-to-chip technology and a dielectric fluid with low global warming potential. Heat is rejected into the facility without water inside the server hall, aiming to reduce condensation and leak risk while improving thermal efficiency.

My Truong, Chief Technology Officer at ZutaCore, says, “AI data centres demand reliable, scalable thermal management that provides rapid insights to operate at full potential. Our new end-of-row CDU family gives operators the control, intelligence, and reliability required to scale sustainably.

“By integrating advanced cooling physics with modern RESTful APIs for remote monitoring and management, we’re enabling data centres to unlock new performance levels without compromising uptime or efficiency.”

Centralised cooling and deployment models

ZutaCore states that the systems are designed to support varying availability requirements, with hot-swappable components for continuous operation.

Deployment options include a single-unit configuration for cost-effective scaling or an active-standby arrangement for enterprise environments that require higher redundancy levels.

The company adds that the units offer encrypted connectivity and real-time monitoring through RESTful APIs, aimed at supporting operational visibility across multiple cooling units.

The EOR CDU platform is set to be used in EGIL Wings’ 15 MW AI Vault facility, as part of a combined approach to sustainable, high-density compute infrastructure.

Leland Sparks, President of EGIL Wings, claims, “ZutaCore’s end-of-row CDUs are exactly the kind of innovation needed to meet the energy and thermal challenges of AI-scale compute.

“By pairing ZutaCore’s waterless cooling with our sustainable power systems, we can deliver data centres that are faster to deploy, more energy-efficient, and ready for the global scale of AI.”

ZutaCore notes that its cooling technology has been deployed across more than forty global sites over the past four years, with users including Equinix, SoftBank, and the University of Münster.

The company says it continues to expand through partnerships with organisations such as Mitsubishi Heavy Industries, Carrier, and ASRock Rack, including work on systems designed for next-generation AI servers.

Joe Peck - 14 November 2025

Products

Vertiv expands immersion liquid cooling portfolio

Vertiv, a global provider of critical digital infrastructure, has introduced the Vertiv CoolCenter Immersion cooling system, expanding its liquid cooling portfolio to support AI and high-performance computing (HPC) environments. The system is available now in Europe, the Middle East, and Africa (EMEA).

Immersion cooling submerges entire servers in a dielectric liquid, providing efficient and uniform heat removal across all components. This is particularly effective for systems where power densities and thermal loads exceed the limits of traditional air-cooling methods.

Vertiv has designed its CoolCenter Immersion product as a "complete liquid-cooling architecture", aiming to enable reliable heat removal for dense compute ranging from 25 kW to 240 kW per system.

Sam Bainborough, EMEA Vice President of Thermal Business at Vertiv, explains, “Immersion cooling is playing an increasingly important role as AI and HPC deployments push thermal limits far beyond what conventional systems can handle.

“With the Vertiv CoolCenter Immersion, we’re applying decades of liquid-cooling expertise to deliver fully engineered systems that handle extreme heat densities safely and efficiently, giving operators a practical path to scale AI infrastructure without compromising reliability or serviceability.”

Product features

The Vertiv CoolCenter Immersion is available in multiple configurations, including self-contained and multi-tank options, with cooling capacities from 25 kW to 240 kW.

Each system includes an internal or external liquid tank, coolant distribution unit (CDU), temperature sensors, variable-speed pumps, and fluid piping, all intended to deliver precise temperature control and consistent thermal performance.

Vertiv says that dual power supplies and redundant pumps provide high cooling availability, while integrated monitoring sensors, a nine-inch touchscreen, and building management system (BMS) connectivity simplify operation and system visibility.

The system’s design also enables heat reuse opportunities, supporting more efficient thermal management strategies across facilities and aligning with broader energy-efficiency objectives.

Joe Peck - 7 November 2025

Exclusive

CDUs: The brains of direct liquid cooling

As air cooling reaches its limits with AI and HPC workloads exceeding 100 kW per rack, hybrid liquid cooling is becoming essential. To this end, coolant distribution units (CDUs) could be the key enabler for next-generation, high-density data centre facilities.

In this article for DCNN, Gordon Johnson, Senior CFD Manager at Subzero Engineering, discusses further the importance of CDUs in direct liquid cooling:

Cooling and the future of data centres

Traditional air cooling has hit its limits, with rack power densities surpassing 100 kW due to the relentless growth of AI and high-performance computing (HPC) workloads. CPUs and GPUs already draw 700–1,000 W per socket, and projections suggest that figure will rise above 1,500 W.

Fans and heat sinks are just unable to handle these thermal loads at scale. Hybrid cooling strategies are becoming the only scalable, sustainable path forward.

Single-phase direct-to-chip (DTC) liquid cooling has emerged as the most practical and serviceable solution, delivering coolant directly to cold plates attached to processors and accelerators. However, direct liquid cooling (DLC) cannot be scaled safely or efficiently with plumbing alone. The key enabler is the coolant distribution unit (CDU), a system that integrates pumps, heat exchangers, sensors, and control logic into a coordinated package.

CDUs are often mistaken for passive infrastructure. In fact, they act as the brains of DLC, orchestrating isolation, stability, adaptability, and efficiency to make DTC viable at data centre scale. They serve as the intelligent control layer for the entire thermal management system.

Intelligent orchestration

CDUs do a lot more than just transport fluid around the cooling system; they think, adapt, and protect the liquid cooling portion of the hybrid cooling system. They maintain redundancy to ensure continuous operation, control flow and pressure using automated valves and variable-speed pumps, filter particulates to protect cold plates, and keep coolant temperature above the dew point to prevent condensation. They contribute to the precise, intelligent, and flexible coordination of the complete thermal management system.
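The dew-point constraint mentioned above can be illustrated with a minimal sketch. It uses the Magnus approximation with one commonly quoted set of coefficients; the 2 K safety margin and the function names are assumptions for illustration and do not describe any particular vendor's control logic.

```python
import math


def dew_point_c(air_temp_c, relative_humidity_pct):
    """Approximate dew point in deg C using the Magnus formula."""
    if not 0 < relative_humidity_pct <= 100:
        raise ValueError("relative humidity must be in (0, 100]")
    a, b = 17.62, 243.12  # Magnus coefficients (b in deg C)
    gamma = math.log(relative_humidity_pct / 100.0) + a * air_temp_c / (b + air_temp_c)
    return b * gamma / (a - gamma)


def min_coolant_supply_c(air_temp_c, relative_humidity_pct, margin_k=2.0):
    """Lowest coolant supply temperature that stays margin_k above the dew point."""
    return dew_point_c(air_temp_c, relative_humidity_pct) + margin_k


# Example: a data hall at 25 deg C and 50% relative humidity (assumed values).
print(f"dew point:          {dew_point_c(25.0, 50.0):.1f} deg C")
print(f"min coolant supply: {min_coolant_supply_c(25.0, 50.0):.1f} deg C")
```

Coolant supplied below the dew point would cause moisture to condense on pipes and cold plates, so a controller of this kind would hold the supply setpoint at or above the computed minimum.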

Because of their greater cooling capacity, CDUs are ideal for large HPC data centres. However, they can be complicated to deploy, because they must be connected to the facility's chilled water supply or another heat rejection source in order to supply liquid continuously to the cold plates.

CDUs typically fall into two categories:

• Liquid to Liquid (L2L): High-capacity L2L CDUs are well suited to large HPC facilities. Through heat exchangers, they move chip heat into an isolated chilled water loop, such as the facility water system (FWS).

• Liquid to Air (L2A): For smaller deployments, L2A CDUs are simpler but have a lower cooling capacity. Rather than relying on a chilled water supply or FWS, they use liquid-to-air heat exchangers to transfer heat from the coolant returning from the cold plates to the surrounding data centre air, where conventional HVAC systems remove it.

Isolation: Safeguarding IT from facility water

Acting as the bridge between the FWS and the dedicated technology cooling system (TCS), which provides filtered liquid coolant directly to the chips via cold plate, CDUs isolate sensitive server cold plates from external variability, ensuring a safe and stable environment while constantly adjusting to shifting workloads.

One of L2L CDUs' primary functions is to create a dual-loop architecture:

• Primary loop (facility side): Connects to building chilled water, district cooling, or dry coolers

• Secondary loop (IT side): Delivers conditioned coolant directly to IT racks

CDUs isolate the primary loop (which may carry contaminants, particulates, scaling agents, or chemical treatments like biocides and corrosion inhibitors - chemistry that is incompatible with IT gear) from the secondary loop. As well as preventing corrosion and fouling, this isolation gives operators the safety margin they need for board-level confidence in liquid cooling.

The integrity of the server cold plates is safeguarded by the CDU, which uses a heat exchanger to separate the two environments and maintain a clean, controlled fluid in the IT loop. Because CDUs are fitted with variable speed pumps, automated valves, and sensors, they can dynamically adjust the flow rate and pressure of the TCS to ensure optimal cooling even when HPC workloads change.

Stability: Balancing thermal predictability with unpredictable loads

HPC and AI workloads are not only high power; they are also volatile. GPU-intensive training jobs or changeable CPU workloads can cause high-frequency power swings, which - without regulation - would translate into thermal instability. The CDU mitigates this risk by controlling temperature, pressure, and flow across all racks and nodes, absorbing dynamic changes and delivering predictable thermal conditions.

It does so regardless of how erratic the workload is: sensor arrays keep the cooling loop within specification, variable-speed pumps adjust flow to match demand, and heat exchangers are calibrated to maintain an established approach temperature.
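As a rough illustration of how flow can follow load, the sketch below treats the approach temperature as the gap between secondary (IT-side) supply and primary (facility-side) supply, then picks a pump flow that keeps the return temperature under a limit. All temperatures, pump limits, and function names are assumptions, and the coolant is assumed to behave like water; a real CDU would use closed-loop control on measured values.

```python
WATER_CP = 4180.0  # J/(kg*K); assumes a water-like coolant


def secondary_supply_c(facility_supply_c, approach_k):
    """Secondary supply temperature for a given heat-exchanger approach."""
    return facility_supply_c + approach_k


def flow_setpoint_kg_s(load_w, supply_c, max_return_c, min_flow, max_flow):
    """Mass flow that keeps return temperature at the limit, clamped to pump range."""
    rise_k = max_return_c - supply_c
    if rise_k <= 0:
        raise ValueError("return limit must be above supply temperature")
    needed = load_w / (WATER_CP * rise_k)  # from Q = m * cp * dT
    return min(max(needed, min_flow), max_flow)


def return_temp_c(load_w, flow_kg_s, supply_c):
    """Return temperature for a given load and flow."""
    return supply_c + load_w / (WATER_CP * flow_kg_s)


supply = secondary_supply_c(facility_supply_c=30.0, approach_k=3.0)
for load_kw in (40, 100, 130):  # a load swinging between jobs (assumed values)
    flow = flow_setpoint_kg_s(load_kw * 1000, supply, 45.0, 0.5, 3.0)
    ret = return_temp_c(load_kw * 1000, flow, supply)
    print(f"{load_kw:>4} kW -> flow {flow:.2f} kg/s, return {ret:.1f} deg C")
```

When the required flow exceeds the pump's upper limit, the clamp means the return temperature rises above the target, which is the condition a monitoring system would flag.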

Adaptability: Bridging facility constraints with IT requirements

The thermal architecture of data centres varies widely, with some using warm-water loops that operate at temperatures between 20 and 40°C. The CDU accommodates these differences by adjusting secondary loop conditions to reconcile IT requirements with what the facility provides. It uses mixing or bypass control to temper supply water, can switch between tower-assisted cooling, free cooling, or dry cooler rejection depending on environmental conditions, and can adjust flow distribution amongst racks to match real-time demand.
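Mixing or bypass control can be sketched with a simple energy balance: blending a cold stream with a warmer bypass stream gives a temperature weighted by the flow fractions. The sketch assumes ideal mixing and equal specific heat for both streams; the function name and the example temperatures are illustrative.

```python
def cold_fraction(target_c, cold_c, warm_c):
    """Fraction of total flow to take from the cold stream to hit target_c.

    Ideal mixing: target = f * cold + (1 - f) * warm, solved for f and
    clamped to the physically possible range [0, 1].
    """
    if not cold_c < warm_c:
        raise ValueError("cold stream must be cooler than warm stream")
    f = (warm_c - target_c) / (warm_c - cold_c)
    return min(1.0, max(0.0, f))


cold_c, warm_c, target_c = 20.0, 40.0, 32.0  # assumed loop temperatures
f = cold_fraction(target_c, cold_c, warm_c)
mixed_c = f * cold_c + (1.0 - f) * warm_c

print(f"cold-stream fraction: {f:.2f}")
print(f"resulting supply:     {mixed_c:.1f} deg C")
```

If the target lies outside the range spanned by the two streams, the clamp returns 0 or 1, meaning the valve is fully at one end and the target cannot be reached by mixing alone.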

This adaptability makes DTC deployable in a variety of infrastructures without requiring extensive facility renovations. It also makes it possible for liquid cooling to be phased in gradually - ideal for operators who need to make incremental upgrades.

Efficiency: Enabling sustainable scale

Beyond risk and reliability, CDUs unlock possibilities that make liquid cooling a sustainable option.

By managing flow and temperature, CDUs eliminate the inefficiencies of over-pumping and over-cooling. They also maximise scope for free cooling and heat recovery integration, such as connecting to district heating networks and reclaiming waste heat as a revenue stream or sustainability benefit. This allows operators to lower PUE (Power Usage Effectiveness) to values below 1.1 and, at the same time, reduce WUE (Water Usage Effectiveness) by minimising evaporative cooling, all while meeting the extreme thermal demands of AI and HPC workloads.

CDUs as the thermal control plane

Viewed holistically, CDUs are far more than pumps and pipes; they are the control plane for thermal management, orchestrating safe isolation, dynamic stability, infrastructure adaptability, and operational efficiency.

They translate unpredictable IT loads into manageable facility-side conditions, ensuring that single-phase DTC can be deployed at scale and enabling HPC and AI data centres to evolve to multi-hundred-kilowatt racks without thermal failure.

Without CDUs, direct-to-chip cooling would be risky, uncoordinated, and inefficient. With CDUs, it becomes an intelligent and resilient architecture capable of supporting 100 kW (and higher) racks as well as the escalating thermal demands of AI and HPC clusters.

As workloads continue to climb and rack power densities surge, the industry’s ability to scale hinges on this intelligence. CDUs are not a supporting component; they are the enabler of single-phase DTC at scale and a cornerstone of the future data centre.

Joe Peck - 4 November 2025

Salute introduces DTC liquid cooling operations service

Salute, a US provider of data centre lifecycle services, has announced what it describes as the data centre industry’s first dedicated service for direct-to-chip (DTC) liquid cooling operations, launched at NVIDIA GTC in Washington DC, USA.

The service is aimed at supporting the growing number of data centres built for artificial intelligence (AI) and high-performance computing (HPC) workloads.

Several data centre operators, including Applied Digital, Compass Datacenters, and SDC, have adopted Salute’s operational model for DTC liquid cooling across new and existing sites.

Managing operational risks in high-density environments

AI and HPC facilities operate at power densities considerably higher than those of traditional enterprise or cloud environments. In these facilities, heat must be managed directly at the chip level using liquid cooling technologies.

Interruptions to coolant flow or system leaks can result in temperature fluctuations, equipment damage, or safety risks due to the proximity of electrical systems and liquids.

Erich Sanchack, Chief Executive Officer at Salute, says, “Salute has achieved a long list of industry firsts that have made us an indispensable partner for 80% of companies in the data centre industry.

“This first-of-its-kind DTC liquid cooling service is a major new milestone for our industry that solves complex operational challenges for every company making major investments in AI/HPC.”

Salute’s service aims to help operators establish and manage DTC liquid cooling systems safely and efficiently. It includes:

• Design and operational assessments to create tailored operational models for each facility

• Commissioning support to ensure systems are optimised for AI and HPC operations

• Access to a continuously updated library of best practices developed through collaborations with NVIDIA, CDU manufacturers, chemical suppliers, and other industry participants

• Operational documentation, including procedures for chemistry management, leak prevention, safety, and CDU oversight

• Training programmes for data centre staff through classroom, online, and lab-based sessions

• Optional operational support to help operators scale teams in line with AI and HPC demand

Industry comments

John Shultz, Chief Product Officer AI and Learning Officer for Salute, argues, “This service has already proven to be a game changer for the many data centre service providers who partnered with us as early adopters. By successfully mitigating the risks of DTC liquid cooling, Salute is enabling these companies to rapidly expand their AI/HPC operations to meet customer demand.

"These companies will rely on this service from Salute to support an estimated 260 MW of data centre capacity in the coming months and will expand that to an estimated 3,300 MW of additional data centre capacity by the end of 2027. This is an enormous validation of the impact of our service on their ability to scale. Now, other companies can benefit from this service to protect their investments in AI.”

Laura Laltrello, COO at Applied Digital, notes, “High-density environments that utilise liquid cooling require an entirely new operational model, which is why we partnered with Salute to implement operational methodologies customised for our facilities and our customers’ needs.”

Walter Wang, Founder at SDC, adds, “Salute is making it possible for SDC’s customers to accelerate AI deployments with zero downtime, thanks to the proven operational model, real-world training, and other best practices.”

Joe Peck - 28 October 2025

Products

Arteco introduces ECO coolants for data centres

Arteco, a Belgian manufacturer of heat transfer fluids and direct-to-chip coolants, has expanded its coolant portfolio with the launch of ECO versions of its ZITREC EC product line, designed for direct-to-chip liquid cooling in data centres.

Each product is manufactured using renewable or recycled feedstocks with the aim of delivering a significantly reduced product carbon footprint compared with fossil-based equivalents, while maintaining the same thermal performance and reliability.

Addressing growing thermal challenges

As demand for high-performance computing rises, driven by artificial intelligence (AI) and other workloads, operators face increasing challenges in managing heat loads efficiently.

Arteco’s ZITREC EC line was developed to support liquid cooling systems in data centres, enabling high thermal performance and energy efficiency.

The new ECO version incorporates base fluids, Propylene Glycol (PG) or Ethylene Glycol (EG), sourced from certified renewable or recycled materials. By moving away from virgin fossil-based resources, ECO products aim to help customers reduce scope 3 emissions without compromising quality.

Serge Lievens, Technology Manager at Arteco, says, “Our comprehensive life cycle assessment studies show that the biggest environmental impact of our coolants comes from fossil-based raw materials at the start of the value chain.

“By rethinking those building blocks and incorporating renewable and/or recycled raw materials, we are able to offer products with significantly lower climate impact, without compromising on high quality and performance standards.”

Certification and traceability

Arteco’s ECO coolants use a mass balance approach, ensuring that renewable and recycled feedstocks are integrated into production while maintaining full traceability. The process is certified under the International Sustainability and Carbon Certification (ISCC) PLUS standard.

Alexandre Moireau, General Manager at Arteco, says, “At Arteco, we firmly believe the future of cooling must be sustainable. Our sustainability strategy focuses on climate action, smart use of resources, and care for people and communities.

“This new family of ECO coolants is a natural extension of that commitment. Sustainability for us is a continuous journey, one where we keep researching, innovating, and collaborating to create better, cleaner cooling solutions.”

Joe Peck - 26 September 2025

GF partners with NTT Facilities on sustainable cooling

GF, a provider of piping systems for data centre cooling systems, has announced a collaboration with NTT Facilities in Japan to support the development of sustainable cooling technologies for data centres.

The partnership involves GF supplying pre-insulated piping for the 'Products Engineering Hub for Data Center Cooling', a testbed and demonstration site operated by NTT Facilities.

The hub opened in April 2025 and is designed to accelerate the move from traditional chiller-based systems to alternatives such as direct liquid cooling.

Focus on energy-efficient cooling

GF is providing its pre-insulated piping for the facility’s water loop. The system is designed to support efficient thermal management, reduce energy losses, and protect against corrosion. GF’s offering covers cooling infrastructure from the facility level through to rack-level systems.

Wolfgang Dornfeld, President Business Unit APAC at GF, says, “Our partnership with NTT Facilities reflects our commitment to working side by side with customers to build smarter, more sustainable data centre infrastructure.

“Cooling is a critical factor in AI-ready data centres, and our polymer-based systems ensure performance, reliability, and energy efficiency exactly where it matters most.”

While the current project focuses on water transport within the facility, GF says it also offers a wider range of polymer-based systems for cooling networks. The company notes that these systems are designed to help improve uptime, increase reliability, and support sustainability targets.

Joe Peck - 10 September 2025

Castrol and Airsys partner on liquid cooling

Castrol, a British multinational lubricants company owned by BP, and Airsys, a provider of data centre cooling systems, have formed a partnership to advance liquid cooling technologies for data centres, aiming to meet the growing demands of next-generation computing and AI applications.

The collaboration will see the companies integrate their technologies, co-develop new products, and promote greater industry awareness of liquid cooling. A recent milestone is the certification of Castrol’s Immersion Cooling Fluid DC 20 as fully compatible with Airsys’ LiquidRack systems.

Addressing rising cooling demands in the AI era

The partnership comes as traditional air-cooling methods struggle to keep pace with increasing power densities.

Research from McKinsey indicates that average rack power density has more than doubled in two years to 17kW. Racks running large language models (LLMs) such as ChatGPT can draw over 80kW each, while Nvidia’s latest chips may require up to 120kW per rack.

Castrol’s own research found that 74% of data centre professionals believe liquid cooling is now the only viable option to handle these requirements. Without effective cooling, systems face risks of overheating, failure, and equipment damage.

Industry expertise and collaboration

By combining Castrol’s 125 years of expertise in fluid formulation with Airsys’ 30 years of cooling system development, the companies aim to accelerate the adoption of liquid cooling.

Airsys has also developed spray cooling technology designed to address the thermal bottleneck of AI whilst reducing reliance on mechanical cooling.

"Liquid cooling is no longer just an emerging trend; it’s a strategic priority for the future of thermal management," says Matthew Thompson, Managing Director at Airsys United Kingdom.

"At Airsys, we’ve built a legacy in air cooling over decades, supporting critical infrastructure with reliable, high-performance systems. This foundation has enabled us to evolve and lead in liquid cooling innovation.

"Our collaboration with Castrol combines our engineering depth with their expertise in advanced thermal fluids, enabling us to deliver next-generation solutions that meet the demands of high-density, high-efficiency environments."

Peter Huang, Global President, Data Centre and Thermal Management at Castrol, adds, "Castrol has been working closely with Airsys for two years, and we’re excited to continue working together as we look to accelerate the adoption of liquid cooling technology and to help the industry support the AI boom.

"We have been co-engineering solutions with OEMs for decades, and the partnership with Airsys is another example of how Castrol leans into technical problems and supports its customers and partners in delivering optimal outcomes."

Joe Peck - 5 September 2025
