Data

Features

News

News in Cloud Computing & Data Storage

365 Data Centers collaborates with Liberty Center One

365 Data Centers (365), a provider of network-centric colocation, network, cloud, and other managed services, has announced a collaboration with Liberty Center One, an IT delivery solutions company focused on cloud services, data protection, and high-availability environments.

The collaboration aims to expand the companies' combined cloud capabilities.

Liberty Center One provides open-source-based public and private cloud services, disaster recovery resources, and colocation at the two data centres it operates.

365 currently operates 20 colocation data centres, and this relationship is set to enhance the company’s colocation, public cloud, multi-tenant, private cloud, and hybrid cloud offerings for enterprise clients, as well as its managed and dedicated services.

“This collaboration will have big implications for 365 as we continue to expand our offerings to the market,” says Derek Gillespie, CRO of 365 Data Centers.

“When it comes to solutions for enterprise, working with Liberty Center One will enable us to enhance our current suite of cloud capabilities and hosted services to give our customers what they need today to meet the demands of their business.”

Tim Mullahy, Managing Director of Liberty Center One, adds, “We’re looking forward to working with 365 Data Centers to be able to truly bring the best out of one another’s services through this agreement.

“Customer service has always been our number one priority, and this association will be instrumental in helping 365 reach its business goals.”

Joe Peck - 13 August 2025

Data

Features

News

News in Cloud Computing & Data Storage

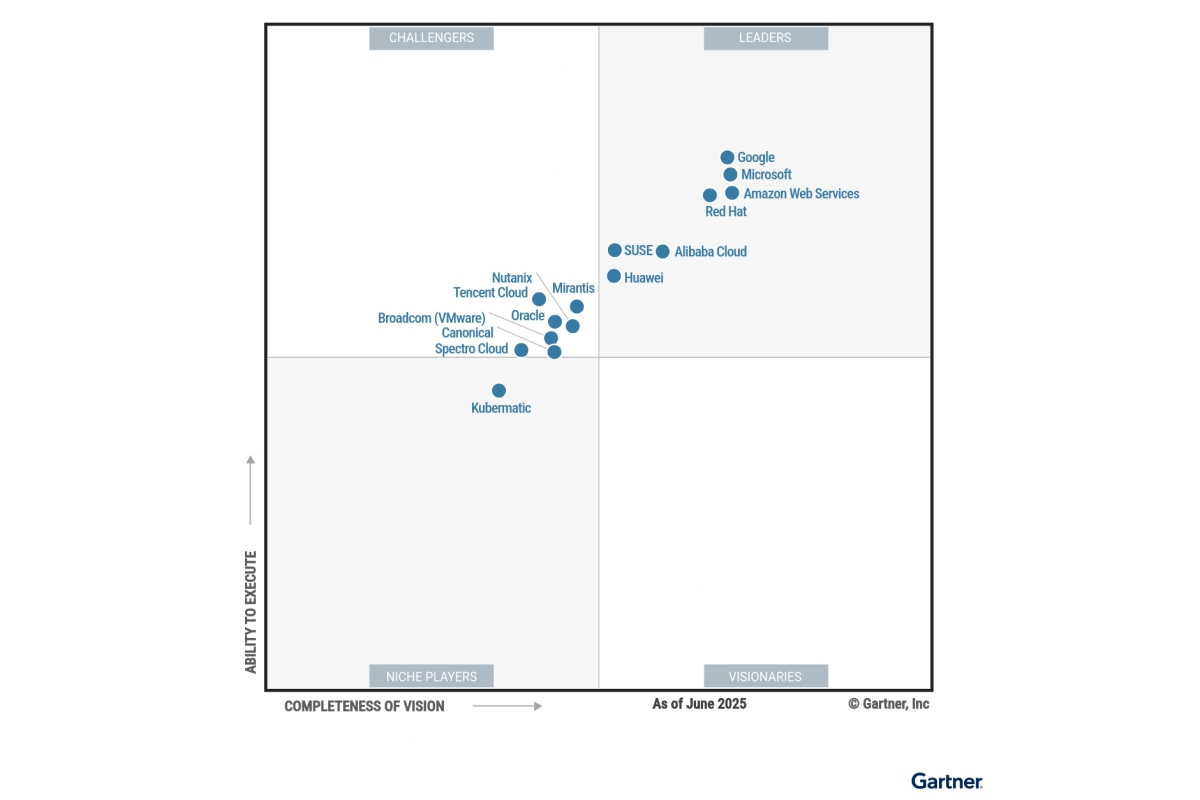

Huawei named a leader for container management

Chinese multinational technology company Huawei has been positioned in the 'Leaders' quadrant of the 2025 Magic Quadrant for Container Management, published by American IT research and advisory company Gartner, in recognition of its capabilities in cloud-native infrastructure and container management.

The company’s Huawei Cloud portfolio includes products such as CCE Turbo, CCE Autopilot, Cloud Container Instance (CCI), and the distributed cloud-native service UCS. These are designed to support large-scale containerised workloads across public, distributed, hybrid, and edge cloud environments.

Huawei Cloud’s offerings cover a range of use cases, including new cloud-native applications, containerisation of existing applications, AI container deployments, edge computing, and hybrid cloud scenarios. Gartner’s assessment also highlighted Huawei Cloud’s position in the AI container domain.

Huawei is an active contributor to the Cloud Native Computing Foundation (CNCF), having participated in 82 CNCF projects and holding more than 20 maintainer roles. It is currently the only Chinese cloud provider with a vice-chair position on the CNCF Technical Oversight Committee.

The company says it has donated multiple projects to the CNCF, including KubeEdge, Karmada, Volcano, and Kuasar, and contributed other projects such as Kmesh, openGemini, and Sermant in 2024.

Use cases and deployments

Huawei Cloud container services are deployed globally in sectors such as finance, manufacturing, energy, transport, and e-commerce. Examples include:

• Starzplay, an OTT platform in the Middle East and Central Asia, used Huawei Cloud CCI to transition to a serverless architecture, handling millions of access requests during the 2024 Cricket World Cup whilst reducing resource costs by 20%.

• Ninja Van, a Singapore-based logistics provider, containerised its services using Huawei Cloud CCE, enabling uninterrupted operations during peak periods and improving order processing efficiency by 40%.

• Chilquinta Energía, a Chilean energy provider, migrated its big data platform to Huawei Cloud CCE Turbo, achieving a 90% performance improvement.

• Konga, a Nigerian e-commerce platform, adopted CCE Turbo to support millions of monthly active users.

• Meitu, a Chinese visual creation platform, uses CCE and Ascend cloud services to manage AI computing resources for model training and deployment.

Cloud Native 2.0 and AI integration

Huawei Cloud has incorporated AI into its cloud-native strategy through three main areas:

1. Cloud for AI – CCE AI clusters form the infrastructure for CloudMatrix384 supernodes, offering topology-aware scheduling, workload-aware scaling, and faster container startup for AI workloads.

2. AI for Cloud – The CCE Doer feature integrates AI into container lifecycle management, offering diagnostics, recommendations, and Q&A capabilities. Huawei reports over 200 diagnosable exception scenarios with a root cause accuracy rate above 80%.

3. Serverless containers – Products include CCE Autopilot and CCI, designed to reduce operational overhead and improve scalability. New serverless container options aim to improve computing cost-effectiveness by up to 40%.

Huawei Cloud states it will continue working with global operators to develop cloud-native technologies and broaden adoption across industries.

Joe Peck - 12 August 2025

Artificial Intelligence in Data Centre Operations

Data

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Data Centres

Macquarie, Dell bring AI factories to Australia

Australian data centre operator Macquarie Data Centres, part of Macquarie Technology Group, is collaborating with US multinational technology company Dell Technologies with the aim of providing a secure, sovereign home for AI workloads in Australia.

Macquarie Data Centres will host the Dell AI Factory with NVIDIA within its AI and cloud data centres. This approach seeks to power enterprise AI, private AI, and neo cloud projects while achieving high standards of data security within sovereign data centres.

This development will be particularly relevant for critical infrastructure providers and highly regulated sectors such as healthcare, finance, education, and research, which have strict regulatory compliance conditions relating to data storage and processing.

The collaboration aims to give them the secure, compliant foundation needed to build, train, and deploy advanced AI applications in Australia, such as AI digital twins, agentic AI, and private LLMs.

Answering the Government’s call for sovereign AI

The Australian Government has linked the data centre sector to its 'Future Made in Australia' policy agenda. Data centres and AI also play an important role in the Australian Federal Government’s new push to improve Australia’s productivity.

“For Australia's AI-driven future to be secure, we must ensure that Australian data centres play a core role in AI, data, infrastructure, and operations,” says David Hirst, CEO, Macquarie Data Centres.

“Our collaboration with Dell Technologies delivers just that, the perfect marriage of global tech and sovereign infrastructure.”

Sovereignty meets scalability

Dell AI Factory with NVIDIA infrastructure and software will be supported by Macquarie Data Centres’ newest purpose-built AI and cloud data centre, IC3 Super West.

The 47MW facility is, according to the company, "purpose-built for the scale, power, and cooling demands of AI infrastructure." It is due to be ready in mid-2026, with its entire end-state power secured.

“Our work with Macquarie Data Centres helps bring the Dell AI Factory with NVIDIA vision to life in Australia,” comments Jamie Humphrey, General Manager, Australia & New Zealand Specialty Platforms Sales, Dell Technologies ANZ.

“Together, we are enabling organisations to develop and deploy AI as a transformative and competitive advantage in Australia in a way that is secure, sovereign, and scalable.”

Macquarie Technology Group and Dell Technologies have been collaborating for more than 15 years.

Joe Peck - 11 August 2025

Artificial Intelligence in Data Centre Operations

Data

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Products

Cloudera is bringing Private AI to data centres

Cloudera, a hybrid platform for data, analytics, and AI, today announced the latest release of Cloudera Data Services, bringing Private AI on premises and aiming to give enterprises secure, GPU-accelerated generative AI capabilities behind their firewall.

With built-in governance and hybrid portability, Cloudera says organisations can now build and scale their own sovereign data cloud in their own data centre, "eliminating security concerns."

The company claims it is the only vendor that delivers the full data lifecycle with the same cloud-native services on premises and in the public cloud.

Concerns about keeping sensitive data and intellectual property secure are a key factor holding back AI adoption for enterprises across industries.

According to Accenture, 77% of organisations lack the foundational data and AI security practices needed to safeguard critical models, data pipelines, and cloud infrastructure.

Cloudera argues that it directly addresses the biggest security and intellectual property risks of enterprise AI, allowing customers to "accelerate their journey from prototype to production from months to weeks."

Through this release, the company claims users could reduce infrastructure costs and streamline data lifecycles, boosting data team productivity, as well as accelerating workload deployment, enhancing security by automating complex tasks, and achieving faster time-to-value for AI deployment.

As part of this release, both Cloudera AI Inference Service and AI Studios are now available in data centres. Both of these tools are designed to tackle the barriers to enterprise AI adoption and have previously been available in cloud only.

Details of the products

• Cloudera AI Inference Service, accelerated by NVIDIA:

The company says this is one of the industry’s first AI inference services to provide embedded NVIDIA NIM microservice capabilities, and that it streamlines the deployment and management of large-scale AI models in data centres.

It adds that the engine helps deploy and manage the AI production lifecycle right in the data centre, where data already securely resides.

• Cloudera AI Studios:

The company claims this offering democratises the entire AI application lifecycle, offering "low-code templates that empower teams to build and deploy GenAI applications and agents."

Data and comments

According to an independent Total Economic Impact (TEI) study - conducted by Forrester Consulting and commissioned by Cloudera - a composite organisation representative of interviewed customers who adopted Cloudera Data Services on premises saw:

• An 80% faster time-to-value for workload deployment

• A 20% increase in productivity for data practitioners and platform teams

• Overall savings of 35% from the modern cloud-native architecture

The study also highlighted operational efficiency gains, with some organisations improving hardware utilisation from 30% to 70% and reporting they needed between 25% and 50% less capacity after modernising.

“Historically, enterprises have been forced to cobble together complex, fragile DIY solutions to run their AI on premises,” comments Sanjeev Mohan, an industry analyst.

“Today, the urgency to adopt AI is undeniable, but so are the concerns around data security. What enterprises need are solutions that streamline AI adoption, boost productivity, and do so without compromising on security.”

Leo Brunnick, Cloudera’s Chief Product Officer, claims, “Cloudera Data Services On-Premises delivers a true cloud-native experience, providing agility and efficiency without sacrificing security or control.

“This release is a significant step forward in data modernisation, moving from monolithic clusters to a suite of agile, containerised applications.”

Toto Prasetio, Chief Information Officer of BNI, states, "BNI is proud to be an early adopter of Cloudera’s AI Inference service.

"This technology provides the essential infrastructure to securely and efficiently expand our generative AI initiatives, all while adhering to Indonesia's dynamic regulatory environment.

"It marks a significant advancement in our mission to offer smarter, quicker, and more dependable digital banking solutions to the people of Indonesia."

This product is being demonstrated at Cloudera’s annual series of data and AI conferences, EVOLVE25, starting this week in Singapore.

Joe Peck - 8 August 2025

Data

Features

News in Cloud Computing & Data Storage

The hidden cost of overuse and misuse of data storage

Most organisations are storing far more data than they use and, while keeping it “just in case” might feel like the safe option, it’s a habit that can quietly chip away at budgets, performance, and even sustainability goals.

At first glance, storing everything might not seem like a huge problem. But when you factor in rising energy prices and ballooning data volumes, the cracks in that strategy start to show.

Over time, outdated storage practices, from legacy systems to underused cloud buckets, can become a surprisingly expensive problem.

Mike Hoy, Chief Technology Officer at UK edge infrastructure provider Pulsant, explores this growing challenge for UK businesses:

More data, more problems

Cloud computing originally promised a simple solution: elastic storage, pay-as-you-go, and endless scalability. But in practice, this flexibility has led many organisations to amass sprawling, unmanaged environments.

Files are duplicated, forgotten, or simply left idle – all while costs accumulate.

Many businesses also remain tied to on-premises legacy systems, either from necessity or inertia. These older infrastructures typically consume more energy, require regular maintenance, and provide limited visibility into data usage.

Put unmanaged cloud plus outdated on-prem systems together and you’ve got a recipe for inefficiency.

The financial sting of bad habits

Most leaders in IT understand storing and securing data costs money. But what often gets overlooked are the hidden costs: the backup of low-value data, the power consumption of idle systems, or the surprise charges that come from cloud services which are not being monitored properly.

Then there’s the operational cost. Disorganised or poorly labelled data makes access slower and compliance tougher. It also increases security risks, especially if sensitive information is spread across uncontrolled environments.

The longer these issues go unchecked, the more danger there is of a snowball effect.

Smarter storage starts with visibility

The first step towards resolving these issues isn’t deleting data indiscriminately; it’s understanding what’s there.

Carrying out an infrastructure or storage audit can shed light on what’s being stored, who’s using it, and whether it still serves a purpose.

Once that visibility is at your fingertips, you can start making smarter decisions about what stays, what goes, and what gets moved somewhere more cost-effective.
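As a rough illustration of what such an audit might look like on a single file system, the Python sketch below walks a directory tree and reports how much of each top-level folder has not been modified within a chosen period. It is not a Pulsant tool: the function name `audit_storage`, the 365-day threshold, and the use of modification time as a proxy for "still in use" are all assumptions made for the example.

```python
import os
import time
from collections import defaultdict


def audit_storage(root, stale_days=365, now=None):
    """Summarise total and stale bytes for each top-level folder under root.

    A file counts as stale when its last modification is older than
    stale_days. This threshold is an illustrative assumption.
    """
    now = time.time() if now is None else now
    cutoff = now - stale_days * 86400
    report = defaultdict(lambda: {"total_bytes": 0, "stale_bytes": 0, "files": 0})

    for dirpath, _dirnames, filenames in os.walk(root):
        rel = os.path.relpath(dirpath, root)
        # Group results by the first path component below root.
        top = "." if rel == "." else rel.split(os.sep)[0]
        for name in filenames:
            try:
                info = os.stat(os.path.join(dirpath, name))
            except OSError:
                continue  # File vanished or is unreadable; skip it.
            entry = report[top]
            entry["files"] += 1
            entry["total_bytes"] += info.st_size
            if info.st_mtime < cutoff:
                entry["stale_bytes"] += info.st_size
    return dict(report)


if __name__ == "__main__":
    for folder, stats in sorted(audit_storage(".").items()):
        print(folder, stats)
```

A report like this only shows what is there and how old it is; deciding what to keep, archive, or delete remains a business decision.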

This is where a hybrid approach of combining cloud, on-premises, and edge infrastructure comes into play. It lets businesses tailor their storage to the job at hand, reducing waste while improving performance.

Why edge computing is part of the solution

Edge computing isn’t just a tech buzzword; it’s an increasingly practical way to harness data where it’s generated.

By processing information at the edge, organisations can act on insights faster, reduce the volume of data stored centrally, and ease the load on core networks and systems.

Edge computing technologies make this approach practical. By using regional edge data centres or local processing units, businesses can filter and process data closer to its source, sending only essential information to the cloud or core infrastructure.

This reduces storage and transmission costs and helps prevent the build-up of redundant or low-value data that can silently increase expenses over time.

This approach is particularly valuable in data-heavy industries such as healthcare, logistics, and manufacturing, where large volumes of real-time information are produced daily.

Processing data locally enables businesses to store less, move less, and act faster.
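The filter-then-forward pattern described above can be sketched in a few lines of Python. This is a simplified illustration rather than any vendor's implementation: the function name `summarise_at_edge`, the temperature-style readings, and the choice of summary fields are hypothetical. The idea is that the edge site keeps the raw readings local and sends only a compact summary, plus any out-of-range values, to central storage.

```python
from statistics import mean


def summarise_at_edge(readings, low, high):
    """Reduce raw sensor readings to a small summary for central storage.

    Values outside the [low, high] range are forwarded individually as
    anomalies; everything else is represented only by aggregate figures.
    """
    if not readings:
        return {"count": 0, "mean": None, "min": None, "max": None, "anomalies": []}
    anomalies = [r for r in readings if r < low or r > high]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "min": min(readings),
        "max": max(readings),
        "anomalies": anomalies,
    }


if __name__ == "__main__":
    raw = [20.0, 21.0, 22.0, 35.0]  # hypothetical readings from one device
    print(summarise_at_edge(raw, low=15.0, high=30.0))
```

In this sketch, four raw readings become one small record; at real data volumes the same approach is what reduces the amount stored and transmitted centrally.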

The wider payoff

Cutting storage costs is an obvious benefit but it’s far from the only one.

A smarter, edge-driven strategy helps businesses build a more efficient, resilient, and sustainable digital infrastructure:

• Lower energy usage — By processing and filtering data locally, organisations reduce the energy demands of transmitting and storing large volumes centrally, supporting both carbon reduction targets and lower utility costs. As sustainability reporting becomes more critical, this can also help meet Scope 2 emissions goals.

• Faster access to critical data — When the most important data is processed closer to its source, teams can respond in real time, meaning improved decision-making, customer experience, and operational agility.

• Greater resilience and reliability — Local processing means organisations are less dependent on central networks. If there’s an outage or disruption, edge infrastructure can provide continuity, keeping key services running when they’re needed most.

• Improved compliance and governance — By keeping sensitive data within regional boundaries and only transmitting what’s necessary, businesses can simplify compliance with regulations such as GDPR, while reducing the risk of data sprawl and shadow IT.

Ultimately, it’s about creating a storage and data environment that’s fit for modern demands. It needs to be fast, flexible, efficient and aligned with wider business priorities.

Don’t let storage be an afterthought

Data is valuable - but only when it's well managed.

When storage becomes a case of “out of sight, out of mind,” businesses end up paying more for less. And what do they have to show for it? Ageing infrastructure and bloated cloud bills.

A little housekeeping goes a long way. By adopting modern infrastructure strategies, including edge computing and hybrid storage models, businesses can transform data storage from a hidden cost centre into a source of operational efficiency and competitive advantage.

Joe Peck - 1 August 2025

Data

Data Centre Infrastructure News & Trends

Enterprise Network Infrastructure: Design, Performance & Security

News

365 Data Centers, Megaport grow partnership

365 Data Centers (365), a provider of network-centric colocation, network, cloud, and other managed services, has announced a further expansion of its partnership with Megaport, a global Network-as-a-Service provider (NaaS).

Megaport has broadened its 365 footprint by adding Points of Presence (PoPs) at several of 365 Data Centers’ colocation facilities - namely Alpharetta, GA; Aurora, CO; Boca Raton, FL; Bridgewater, NJ; Carlstadt, NJ; and Spring Garden, PA - enhancing public cloud and other connectivity systems available to 365’s customers.

Said customers will now be able to access DIA, Transport, and direct-to-cloud connectivity options to all the major public cloud hyperscalers - such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), Oracle Cloud, and IBM Cloud - directly from 365 Data Centers.

“Integrating Megaport’s advanced connectivity solutions into our data centers is a natural progression of our partnership and network-centric strategy,” comments Derek Gillespie, CRO at 365 Data Centers.

“When we’ve added to Megaport’s presence in other facilities, the deployments [have] fortified our joint Infrastructure-as-a-Service (IaaS) and NaaS offerings and complemented our partnership in major markets.

“Megaport’s growing presence with 365 significantly enhances the public cloud connectivity options available to our customers.”

Michael Reid, CEO at Megaport, adds, “Our expanded partnership with 365 Data Centers is all about pushing boundaries and delivering more for our customers.

“Together, we’re making cutting-edge network solutions easier to access, no matter the size or location of the business, so customers can connect, scale, and innovate on their terms.”

Joe Peck - 1 August 2025

Cyber Security Insights for Resilient Digital Defence

Data

Data Centre Security: Protecting Infrastructure from Physical and Cyber Threats

Summer habits could increase cyber risk to enterprise data

As flexible work arrangements expand over the summer months, cybersecurity experts are warning businesses about the risks associated with remote and ‘workation’ models, particularly when employees access corporate systems from unsecured environments.

According to Andrius Buinovskis, Cybersecurity Expert at NordLayer - a provider of network security services for businesses - working from abroad or outside traditional office settings can increase the likelihood of data breaches if not properly managed. The main risks include use of unsecured public Wi-Fi, reduced vigilance against phishing scams, use of personal or unsecured devices, and exposure to foreign jurisdictions with weaker data protection regulations.

Devices used outside the workplace are also more susceptible to loss or theft, further raising the threat of data exposure.

Buinovskis recommends the following key measures to mitigate risk:

• Strong network encryption — It secures data in transit, transforming it into an unreadable format and safeguarding it from potential attackers.

• Multi-factor authentication — Access controls, like multi-factor authentication, make it more difficult for cybercriminals to access accounts with stolen credentials, adding a layer of protection.

• Robust password policies — Hackers can easily target and compromise accounts protected by weak, reused, or easy-to-access passwords. Enforcing strict password management policies requiring unique, long, and complex passwords, and educating employees on how to store them securely, minimises the possibility of falling victim to cybercriminals.

• Zero trust architecture — The constant verification process of all devices and users trying to access the network significantly reduces the possibility of a hacker successfully infiltrating the business.

• Network segmentation — If a bad actor does manage to infiltrate the network, ensuring it's segmented helps to minimise the potential damage. Not granting all employees access to the whole network and limiting it to the parts essential for their work helps reduce the scope of the data an infiltrator can access.
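As an illustration of the password policy measure in the list above, the Python sketch below checks a candidate password against a few simple rules. It is not NordLayer's tooling: the function name `password_issues`, the 14-character minimum, and the three-of-four character class rule are assumptions chosen for the example, and a real system would compare against hashes of previous passwords rather than plain text.

```python
import string


def password_issues(password, previous=(), min_length=14):
    """Return a list of policy problems; an empty list means the password passes.

    The thresholds here are illustrative assumptions, not a standard.
    """
    issues = []
    if len(password) < min_length:
        issues.append("too short")

    # Count how many character classes the password draws on.
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    if sum(classes) < 3:
        issues.append("not complex enough")

    # Plain-text comparison is for demonstration only.
    if password in previous:
        issues.append("reused")
    return issues


if __name__ == "__main__":
    print(password_issues("password"))
    print(password_issues("Correct-Horse-42-Battery"))
```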

He also highlights the importance of centralised security and regular staff training on cyber hygiene, especially when using personal devices or accessing systems while travelling.

“High observability into employee activity and centralised security are crucial for defending against remote work-related cyber threats,” he argues.

Joe Peck - 24 July 2025

Data

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

How the Internet of Things Is Shaping Data Centre Operations

Siemens enters collaboration with Microsoft

Siemens Smart Infrastructure, a digital infrastructure division of German conglomerate Siemens, today announced a collaboration agreement with Microsoft to transform access to Internet of Things (IoT) data for buildings. The collaboration will enable interoperability between Siemens' digital building platform, Building X, and Microsoft Azure IoT Operations, a component of Microsoft's adaptive cloud approach that provides tools and infrastructure to connect edge devices while integrating data.

The interoperability of Building X and Azure IoT Operations seeks to make IoT-based data more accessible for large enterprise customers across commercial buildings, data centres, and higher education facilities, and provide them with the information to enhance sustainability and operations. It enables automatic onboarding and monitoring by bringing datapoints such as temperature, pressure, or indoor air quality to the cloud for assets like heating, ventilation, and air conditioning (HVAC) systems, valves, and actuators. The system should also allow customers to develop their own in-house use cases such as energy monitoring and space optimisation.

The collaboration leverages known and established open industry standards, including World Wide Web Consortium (W3C) Web of Things (WoT), describing the metadata and interfaces of hardware and software, as well as Open Platform Communications Unified Architecture (OPC UA) for communication of data to the cloud. Both Siemens and Microsoft are members of the W3C and the OPC Foundation, which develop standards and guidelines that help build an industry based on accessibility, interoperability, privacy, and security.

“This collaboration with Microsoft reflects our shared vision of enabling customers to harness the full potential of IoT through open standards and interoperability,” claims Susanne Seitz, CEO, Siemens Smart Infrastructure Buildings. “The improved data access will provide portfolio managers with granular visibility into critical metrics such as energy efficiency and consumption. With IoT data often being siloed, this level of transparency is a game-changer for an industry seeking to optimise building operations and meet sustainability targets.”

“Siemens shares Microsoft’s focus on interoperability and open IoT standards. This collaboration is a significant step forward in making IoT data more actionable,” argues Erich Barnstedt, Senior Director & Architect, Corporate Standards Group, Microsoft. “Microsoft’s strategy underscores our commitment to partnering with industry leaders to empower customers with greater choice and control over their IoT solutions.”

The interoperability between Siemens’ Building X and Azure IoT Operations will be available on the market from the second half of 2025.

Joe Peck - 7 July 2025

Data

News

News in Cloud Computing & Data Storage

Nasuni achieves AWS Energy & Utilities Competency status

Nasuni, a unified file data platform company, has announced that it has achieved Amazon Web Services (AWS) Energy & Utilities Competency status. This designation recognises that Nasuni has demonstrated expertise in helping customers leverage AWS cloud technology to transform complex systems and accelerate the transition to a sustainable energy and utilities future.

To receive the designation, AWS Partners undergo a rigorous technical validation process, including a customer reference audit. The AWS Energy & Utilities Competency provides energy and utilities customers the ability to more easily select skilled partners to help accelerate their digital transformations.

“Our strategic collaboration with AWS is redefining how energy companies harness seismic data,” comments Michael Sotnick, SVP of Business & Corporate Development at Nasuni. “Together, we’re removing traditional infrastructure barriers and unlocking faster, smarter subsurface decisions. By integrating Nasuni’s global unified file data platform with the power of AWS solutions including Amazon Simple Storage Service (S3), Amazon Bedrock, and Amazon Q, we’re helping upstream operators accelerate time to first oil, boost capital efficiency, and prepare for the next era of data-driven exploration.”

AWS says it is enabling scalable, flexible, and cost-effective solutions from startups to global enterprises. To support the integration and deployment of these solutions, AWS established the AWS Competency Program to help customers identify AWS Partners with industry experience and expertise.

By bringing together Nasuni’s cloud-native file data platform with Amazon S3 and other AWS services, the company claims energy customers could eliminate data silos, reduce interpretation cycle times, and unlock the value of seismic data for AI-driven exploration.

Joe Peck - 26 June 2025

Data

Data Centres

News

Scalable Network Attached Solutions for Modern Infrastructure

Chemists create molecular magnet, boosting data storage by 100x

Scientists at The University of Manchester have designed a molecule that can remember magnetic information at the highest temperature ever recorded for this kind of material.

In a boon for the future of data storage technologies, the researchers have made a new single-molecule magnet that retains its magnetic memory up to 100 Kelvin (-173 °C) – around the temperature of the moon at night.

The finding, published in the journal Nature, is a significant advancement on the previous record of 80 Kelvin (-193 °C). While still a long way from working in a standard freezer, or at room temperature, data storage at 100 Kelvin could be feasible in huge data centres, such as those used by Google.

If perfected, these single-molecule magnets could pack vast amounts of information into incredibly small spaces – possibly more than three terabytes of data per square centimetre. That’s around half a million TikTok videos squeezed into a hard drive that’s the size of a postage stamp.
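The figures quoted above can be sanity-checked with some simple arithmetic, sketched here in Python. The function names are hypothetical, and the video calculation assumes the half-million figure refers to the full three terabytes, using decimal units (1 TB = 10^12 bytes); the article does not state how the comparison was derived.

```python
def kelvin_to_celsius(kelvin):
    """Convert a temperature from kelvin to degrees Celsius."""
    return kelvin - 273.15


def implied_video_size_mb(capacity_tb=3.0, videos=500_000):
    """Average size per video, in megabytes, implied by the comparison."""
    total_bytes = capacity_tb * 1e12  # decimal terabytes, an assumption
    return total_bytes / videos / 1e6


if __name__ == "__main__":
    # New record, previous record, and liquid nitrogen, as quoted in the article.
    for label, kelvin in [("new record", 100), ("previous record", 80), ("liquid nitrogen", 77)]:
        print(f"{label}: {kelvin} K = {kelvin_to_celsius(kelvin):.2f} °C")
    print(f"implied size per video: {implied_video_size_mb():.1f} MB")
```

Under those assumptions, the comparison works out to roughly 6 MB per video, and the conversions agree with the rounded Celsius values given in the text.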

The research was led by The University of Manchester, with computational modelling led by the Australian National University (ANU).

David Mills, Professor of Inorganic Chemistry at The University of Manchester, comments, “This research showcases the power of chemists to deliberately design and build molecules with targeted properties. The results are an exciting prospect for the use of single-molecule magnets in data storage media that is 100 times more dense than the absolute limit of current technologies.

“Although the new magnet still needs cooling far below room temperature, it is now well above the temperature of liquid nitrogen (77 Kelvin), which is a readily available coolant. So, while we won’t be seeing this type of data storage in our mobile phones for a while, it does make storing information in huge data centres more feasible.”

Magnetic materials have long played an important role in data storage technologies. Currently, hard drives store data by magnetising tiny regions made up of many atoms all working together to retain memory. Single-molecule magnets can store information individually and don’t need help from any neighbouring atoms to retain their memory, offering the potential for incredibly high data density. But, until now, the challenge has always been the incredibly cold temperatures needed in order for them to function.

The key to the new magnet’s success is its unique structure, with the element dysprosium located between two nitrogen atoms. These three atoms are arranged almost in a straight line – a configuration predicted to boost magnetic performance, but now realised for the first time.

Usually, when dysprosium is bonded to only two nitrogen atoms it tends to form molecules with more bent or irregular shapes. In the new molecule, the researchers added a chemical group called an alkene that acts like a molecular pin, binding to dysprosium to hold the structure in place.

The team at the Australian National University developed a new theoretical model to simulate the molecule’s magnetic behaviour, allowing them to explain why this particular molecular magnet performs so well compared to previous designs.

Now, the researchers will use these results as a blueprint to guide the design of even better molecular magnets.

Joe Peck - 25 June 2025
