Feature – Overcoming the DCI deluge

Author: Simon Rowley

Tim Doiron, VP Solution Marketing, Infinera, looks at ways of overcoming the DCI deluge in the era of artificial intelligence and machine learning.

In the Merriam-Webster dictionary, deluge is defined as “an overwhelming amount.” Recent discussions with communication service providers and internet content providers (ICPs) indicate that data centre interconnect (DCI) traffic growth is running hot at 50% or more per annum. At that rate, traffic doubles in less than two years, which surely qualifies as a deluge. So yes, DCI traffic is a deluge.
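The doubling claim is simple compound-growth arithmetic. As a minimal sketch, assuming a constant annual growth rate (the 50% figure is the one quoted above; the function names are illustrative only):

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years needed for traffic to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


def growth_factor(annual_growth: float, years: float) -> float:
    """Multiple of today's traffic after the given number of years."""
    return (1 + annual_growth) ** years


# 50% per annum is the growth rate quoted in the article.
print(f"{doubling_time_years(0.50):.2f} years to double")  # 1.71 years to double
print(f"{growth_factor(0.50, 2):.2f}x after two years")    # 2.25x after two years
```

At 50% per annum, traffic more than doubles within two years, and any higher growth rate shortens the doubling time further.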

But is DCI’s accelerated growth driven by artificial intelligence (AI) and machine learning (ML)? The short answer is yes, some of it is – but we are still in the early days of AI/ML, and in particular, generative AI. AI/ML’s contribution to DCI traffic will increase with time. With more applications, more people taking advantage of AI/ML capabilities (think about medical imaging analysis for disease detection), and generative AI creating new images and videos (consider collaboration with artists or marketing/branding), the north-south traffic to/from data centres will continue to grow.

And we know that data centres don’t exist in a vacuum. They need connectivity with other data centres – data centres that are increasingly modular and distributed to reduce their impact on local real estate and power grids and to be closer to end users for latency-sensitive applications. One estimate from several years ago holds that 9% of all data centre traffic is east-west – meaning DCI or connectivity to other data centres. Even if this percentage is high for today’s data centres, with more data centres coming online and more AI/ML traffic to/from data centres, AI/ML will help DCI sustain its hot growth rate for years to come.

So, how do we address increasing data centre modularity and distribution while also supporting the DCI deluge that is already here and will be sustained in part by accelerating AI/ML utilisation? The answer is threefold: speed up, spread out, and stack it.

Speed up with terabit waves

Today’s 800G embedded optical engines are moving into the terabit era with the development of 1.2+ Tb/s engines that can transmit 1.2 Tb/s wavelengths over hundreds of kilometres and 800G waves up to 3,000 kilometres. Data centre operators that lease fibre can utilise embedded optical engines with high spectral efficiency to maximise data transmission over a single fibre pair and thus avoid the cost of leasing additional fibres.

While 400G ZR coherent pluggables are increasingly common in metro DCI applications, 800G coherent pluggables are under development for delivery in early 2025. This latest generation of coherent pluggables based upon 3-nm digital signal processor technology expands capacity-reach significantly with the ability to deliver 800G wavelengths up to 2,000 kilometres. With such capabilities in small QSFP-DD or OSFP packages, IP over DWDM (IPoDWDM) will continue to be realised in some DCI applications, with pluggables deployed directly into routers and switches.

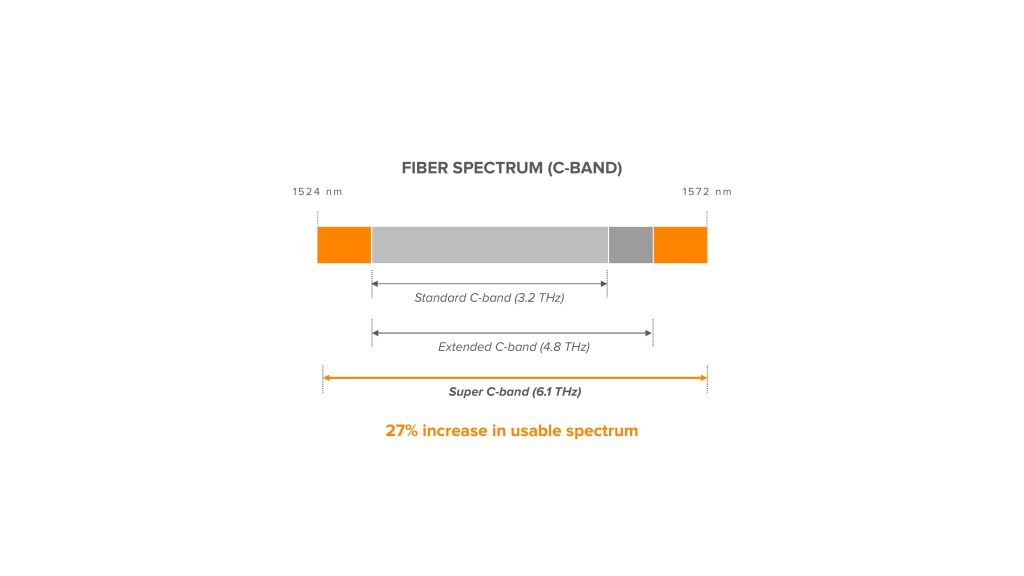

Spread out with Super C

With advancements in optical line system components like amplifiers and wavelength-selective switches, we can now cost-effectively increase the optical fibre transmission spectrum from 4.8 THz to 6.1 THz to create Super C transmission. With Super C we can realise 27% more spectrum, enabling up to 50 Tb/s transmission capacity per band. A similar approach can be applied to creating a Super L transmission band. Super C and Super L transmission are cost-effective ways to squeeze more out of existing fibre and to keep up with DCI capacity demands.
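The 27% figure follows directly from the two spectrum widths. The sketch below reproduces it and, assuming the quoted 50 Tb/s applies across the full 6.1 THz Super C band (an assumption, since the article says only "up to"), derives the average spectral efficiency that figure would imply. The function names are illustrative.

```python
C_BAND_THZ = 4.8    # conventional C-band spectrum, from the article
SUPER_C_THZ = 6.1   # Super C spectrum, from the article
SUPER_C_CAPACITY_TBPS = 50.0  # "up to" figure; assumed to span the full 6.1 THz


def spectrum_gain(base_thz: float, extended_thz: float) -> float:
    """Fractional increase in usable spectrum."""
    return extended_thz / base_thz - 1


def implied_spectral_efficiency(capacity_tbps: float, spectrum_thz: float) -> float:
    """Average spectral efficiency in b/s/Hz (Tb/s divided by THz)."""
    return capacity_tbps / spectrum_thz


print(f"{spectrum_gain(C_BAND_THZ, SUPER_C_THZ):.0%}")  # 27%
print(f"{implied_spectral_efficiency(SUPER_C_CAPACITY_TBPS, SUPER_C_THZ):.1f} b/s/Hz")  # 8.2 b/s/Hz
```

The implied efficiency is a back-of-envelope average, not a vendor specification; real capacity depends on reach, modulation, and channel plan.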

Super C expansion also benefits coherent pluggables in DCI deployments by reclaiming any reduction in total fibre capacity due to the lower spectral efficiency of pluggables. Combining coherent pluggables with Super C enables network operators to leverage the smaller space and power utilisation benefits of pluggables without sacrificing total fibre transmission capacity.
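To make the trade-off concrete, here is a rough sketch of how much spectral efficiency a pluggable could give up before Super C's extra spectrum no longer compensates. The two efficiency values are purely hypothetical placeholders, not measured or vendor figures; only the 4.8 THz and 6.1 THz widths come from the article.

```python
C_BAND_THZ = 4.8   # conventional C-band spectrum, from the article
SUPER_C_THZ = 6.1  # Super C spectrum, from the article


def fibre_capacity_tbps(spectrum_thz: float, efficiency_bps_per_hz: float) -> float:
    """Total fibre capacity in Tb/s: spectrum (THz) times spectral efficiency (b/s/Hz)."""
    return spectrum_thz * efficiency_bps_per_hz


def break_even_efficiency_ratio(base_thz: float, extended_thz: float) -> float:
    """Lowest pluggable-to-embedded efficiency ratio at which the extended
    band still matches the base band's total capacity."""
    return base_thz / extended_thz


ratio = break_even_efficiency_ratio(C_BAND_THZ, SUPER_C_THZ)
print(f"{ratio:.3f}")  # 0.787

# Hypothetical efficiencies, chosen only for illustration.
EMBEDDED_BPS_PER_HZ = 8.0
PLUGGABLE_BPS_PER_HZ = 6.5

embedded_c = fibre_capacity_tbps(C_BAND_THZ, EMBEDDED_BPS_PER_HZ)
pluggable_super_c = fibre_capacity_tbps(SUPER_C_THZ, PLUGGABLE_BPS_PER_HZ)
print(pluggable_super_c >= embedded_c)  # True
```

In other words, under these assumptions a pluggable can be roughly 21% less spectrally efficient than an embedded engine and still deliver the same total fibre capacity once the band is widened to Super C.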

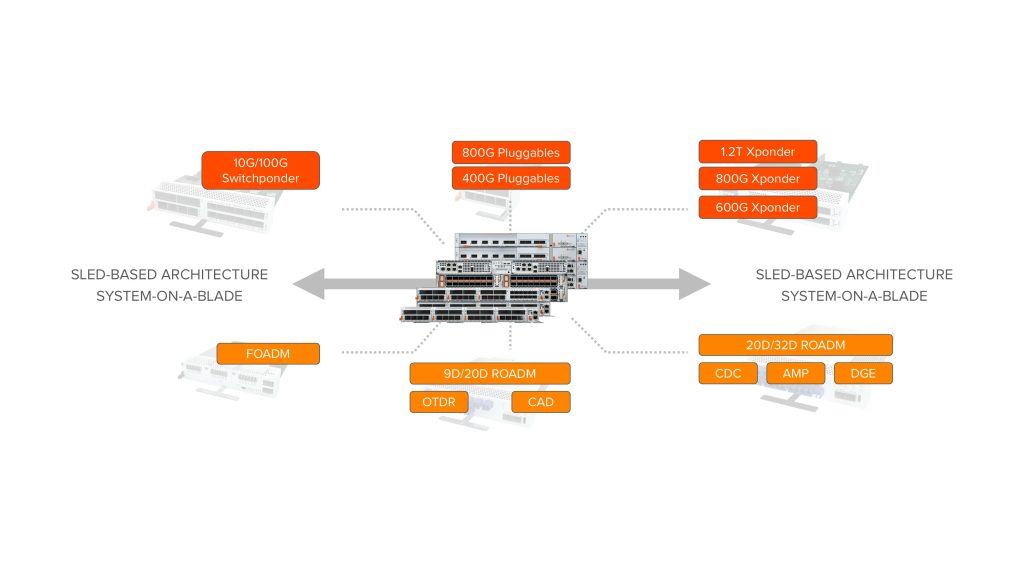

Stack it with compact modularity

Today’s next-generation compact modular optical platforms support mix-and-match optical line system functionality and embedded and pluggable optical engines. Network operators can start with a single 1RU, 2RU, or 3RU chassis and stack them as needed, matching cost to capacity while minimising complexity. By supporting both line system and optical engine functionality in a common platform, network operators can cost-effectively support small, medium, and large DCI capacity requirements while minimising the amount of equipment required versus dedicated per-function alternatives. As capacity demand grows, additional pluggables, sleds, and chassis can be easily added – all managed as a single network entity for operational simplicity.

Bringing it all together

While modest today, AI/ML-related DCI traffic will continue to grow – and help buoy an already hot DCI market. To accommodate the rapid connectivity growth between data centres, we will need to continue to innovate with pluggable and embedded optical engines that deliver more capacity with less power and a smaller footprint; with more transmission spectrum on the fibre; and with modular, stackable optical platforms. Commercially available generative AI applications like ChatGPT launched less than two years ago. We are literally just getting started. Hold on tight and grab an optical transmission partner that’s laser-focused on what’s next.
