23 January 2026

RWE sustainably powers Global Switch’s London DC

23 January 2026

McCarthy tops out NV12 project at Vantage’s campus

22 January 2026

Warnings of drone‑enabled cyber threats to critical infrastructure

22 January 2026

Report: How Slough became Europe's largest DC cluster

Latest News

Data Centre Infrastructure News & Trends

Enterprise Network Infrastructure: Design, Performance & Security

Products

Fluke Networks launches CertiFiber Max fibre tester

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Liquid Cooling Technologies Driving Data Centre Efficiency

Products

Motivair introduces scalable CDU for AI data centres

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Products

Vertiv expands perimeter cooling range in EMEA

Data Centre Infrastructure News & Trends

Enterprise Network Infrastructure: Design, Performance & Security

Sponsored

Molex turns infrastructure into advantage

Data Centre Build News & Insights

Events

Exploring Modern Data Centre Design

News

Sponsored

Datacloud Middle East comes to Dubai

Data Centre Infrastructure News & Trends

Enterprise Network Infrastructure: Design, Performance & Security

News

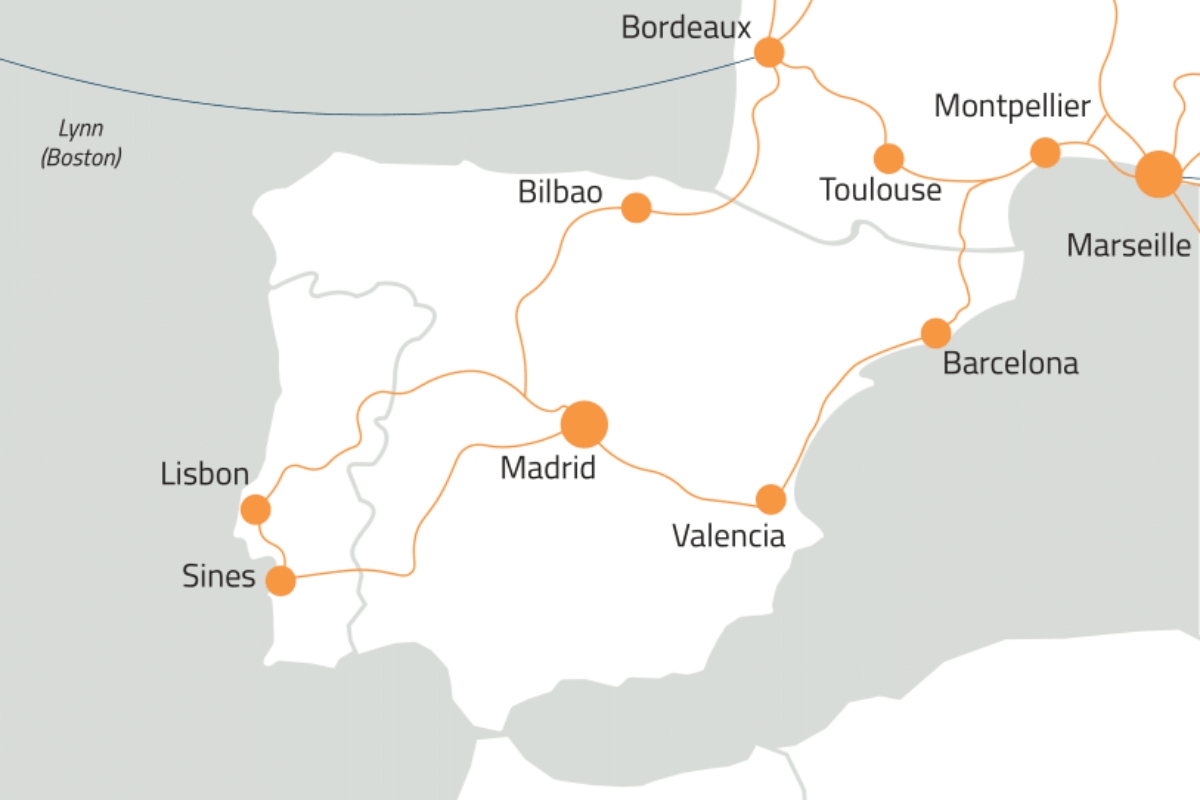

Zayo Europe partners with Reintel for network in Iberia

Data Centre Infrastructure News & Trends

Innovations in Data Center Power and Cooling Solutions

Liquid Cooling Technologies Driving Data Centre Efficiency

Sabey Data Centers partners with OptiCool Technologies

Data Centre Infrastructure News & Trends

Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Innovations in Data Center Power and Cooling Solutions

Modular Data Centres in the UK: Scalable, Smart Infrastructure

Prism expands into the US market

Data Centre Build News & Insights

Data Centre Infrastructure News & Trends

Enterprise Network Infrastructure: Design, Performance & Security

Sustainable Infrastructure: Building Resilient, Low-Carbon Projects

Huber+Suhner expands sustainable packaging drive

Data Centre Build News & Insights

Data Centre Infrastructure News & Trends

Data Centre Projects: Infrastructure Builds, Innovations & Updates

Innovations in Data Center Power and Cooling Solutions

Multi-million pound Heathrow data centre upgrade completed