Data Centre Operations: Optimising Infrastructure for Performance and Reliability

Enterprise Network Infrastructure: Design, Performance & Security

News

News in Cloud Computing & Data Storage

Latest version of StarlingX cloud platform now available

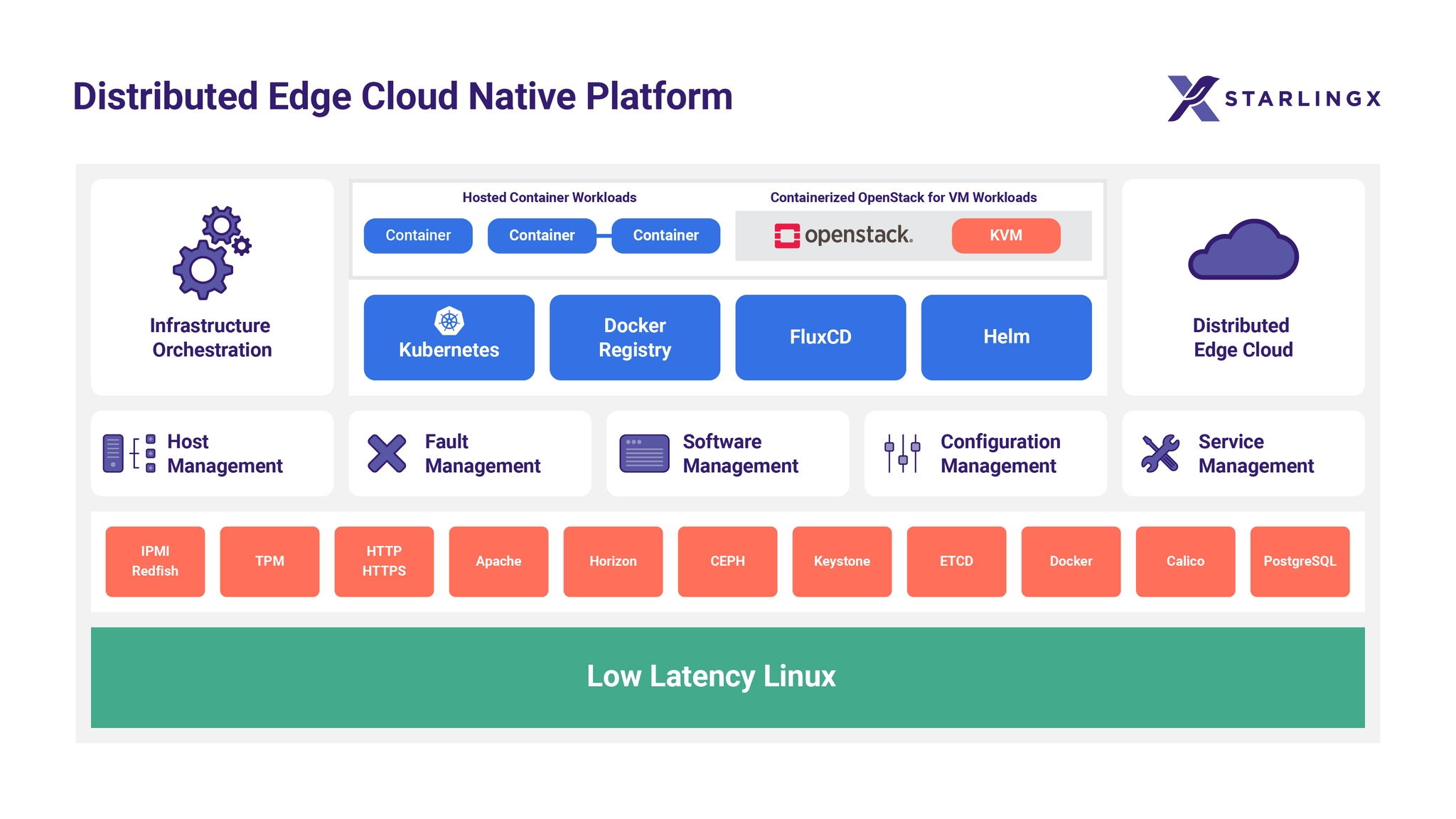

Version 9.0 of StarlingX - the open source distributed cloud platform for IoT, 5G, O-RAN and edge computing - is now available.

StarlingX combines Ceph, OpenStack, Kubernetes and more to create a full-featured cloud software stack that provides everything telecom carriers and enterprises need to deploy an edge cloud on a few servers or hundreds of them. Container-based and highly scalable, StarlingX is used by the most demanding applications in industrial IoT, telecom, video delivery and other ultra-low latency, high-performance use cases.

“StarlingX adoption continues to grow as more organisations learn of the platform’s unique advantages in supporting modern-day workloads at scale; in fact, one current user has documented its deployment of 20,000 nodes and counting,” says Ildikó Váncsa, Director, Community, for the Open Infrastructure Foundation. “Accordingly, this StarlingX 9.0 release prioritises enhancements for scaling, updating and upgrading the end-to-end environment.

“Also during this release cycle, StarlingX collaborated closely with Arm and AMD for a coordinated effort towards increasing the diversity of hardware supported,” Ildikó continues. “This collaboration also includes building out lab infrastructure to continuously test the project on a diverse set of hardware platforms.”

“Across cloud, 5G, and edge computing, power efficiency and lower TCO is critical for developers in bringing new innovations to market,” notes Eddie Ramirez, Vice President of Go-To-Market, Infrastructure Line of Business, Arm. “StarlingX plays a vital role in this mission and we’re pleased to be working with the community so that developers and users can leverage the power efficiency advantages of the Arm architecture going forward.”

Additional New Features and Upgrades in StarlingX 9.0

Transition to the Antelope version of OpenStack.

Redundant / HA PTP timing clock sources. Redundancy is an important requirement in many systems, including telecommunications. This feature makes it possible to set up multiple timing sources so that the system can synchronise from any of the available and valid hardware clocks, and to have an HA configuration down to system clocks.

AppArmor support. This security feature makes a Kubernetes deployment (and thus the StarlingX stack) more secure by restricting what containers and pods are allowed to do.

Configurable power management. Sustainability and optimal power consumption are becoming critical in modern digital infrastructures. This feature adds to the StarlingX platform the Kubernetes Power Manager, which allows power control mechanisms to be applied to processors.

Intel Ethernet operator integration. This feature allows for firmware updates and more granular interface adapter configuration on Intel E810 Series NICs.

Learn more about these and other features of StarlingX 9.0 in the community’s release notes.

A simple approach to scaling distributed clouds

StarlingX is widely used in production among large telecom operators around the globe, such as T-Systems, Verizon, Vodafone, KDDI and more. Operators are using the container-based platform for their 5G and O-RAN backbone infrastructures, and rely on the project's features to manage the lifecycle of infrastructure components and services.

Hardened by major telecom users, StarlingX is ideal for enterprises seeking a highly performant distributed cloud architecture. Organisations are evaluating the platform for use cases such as backbone network infrastructure for railway systems and high-performance edge data centre solutions. Managing forest fires is another new use case that has emerged and is being researched by a new StarlingX user and contributor.

OpenInfra Community Drives StarlingX Progress

The StarlingX project launched in 2018, with initial code for the project contributed by Wind River and Intel. Active contributors to the project include Wind River, Intel and 99Cloud. Well-known users of the software in production include T-Systems, Verizon and Vodafone. The StarlingX community is actively collaborating with several other groups such as the OpenInfra Edge Computing Group, ONAP, the O-RAN Software Community (SC), Akraino and more.

Simon Rowley - 12 April 2024

Vultr launches Sovereign Cloud and Private Cloud to boost digital autonomy

Vultr, the world’s largest privately-held cloud computing platform, today announced the launch of Vultr Sovereign Cloud and Private Cloud.

They have been introduced in response to the increased importance of data sovereignty and the growing volumes of enterprise data being generated, stored and processed in even more locations — from the public cloud to edge networks and IoT devices, to generative AI.

The announcement comes on the heels of the launch of Vultr Cloud Inference, which provides global AI model deployment and AI inference capabilities leveraging Vultr’s cloud-native infrastructure spanning six continents and 32 cloud data centre locations. With Vultr, governments and enterprises worldwide can ensure all AI training data is bound by local data sovereignty, data residency, and privacy regulations.

A significant portion of the world's cloud workloads are currently managed by a small number of cloud service providers in concentrated geographies, raising concerns particularly in Europe, the Middle East, Latin America, and Asia about digital sovereignty and control over data within their countries. Enterprises meanwhile must adhere to a growing number of regulations governing where data can be collected, stored, and used while retaining access to native GPU, cloud, and AI capabilities to compete on the global stage. 50% of European CXOs list data sovereignty as a top issue when selecting cloud vendors, with more than a third looking to move 25-75% of data, workloads, or assets to a sovereign cloud, according to Accenture.

Vultr Sovereign Cloud and Private Cloud are designed to empower governments, research institutions, and enterprises to access essential cloud-native infrastructure while ensuring that critical data, technology, and operations remain within national borders and comply with local regulations. At the same time, Vultr provides these customers with access to the advanced GPU, cloud, and AI technology powering today’s leading AI innovators. This enables the development of sovereign AI factories and AI innovations that are fully compliant, without sacrificing reach or scalability. By working with local telecommunications providers, such as Singtel, and other partners and governments around the world, Vultr is able to build and deploy clouds managed locally in any region.

Vultr Sovereign Cloud and Private Cloud guarantee data is stored locally, ensuring it is used strictly for its intended purposes and not transferred outside national borders or other in-country parameters without explicit authorisation.

Vultr also delivers technological independence through physical infrastructure, featuring air-gapped deployments and a dedicated control plane that is under the customer’s direct control, completely untethered from the central control plane governing resources across Vultr’s global data centres. This provides complete isolation of data and processing power from global cloud resources. To further ensure local governance and administration of these resources, Vultr Sovereign Cloud and Private Cloud are managed exclusively by nationals of the host country, resulting in an audit trail that complies with the highest standards of national security and operational integrity.

For enterprises, Vultr combines Sovereign and Private Cloud services with ‘train anywhere, scale everywhere’ infrastructure, including Vultr Container Registry, which enables models to be trained in one location but shared across multiple geographies, allowing customers to scale AI models on their own terms.

“To address the growing need for countries to control their own data, and to reduce their reliance on a small number of large global tech companies, Vultr will now deploy sovereign clouds on demand for national governments around the world,” says J.J. Kardwell, CEO of Vultr’s parent company, Constant. “We are actively working with government bodies, local telecommunications companies, and other in-country partners to provide Vultr Sovereign Cloud and Private Cloud solutions globally, paving the way for customers to deliver fully compliant AI innovation at scale.”

Simon Rowley - 11 April 2024

Nasuni launches Nasuni Edge for Amazon S3

Nasuni, a hybrid cloud storage solution, has announced the general availability of Nasuni Edge for Amazon Simple Storage Service (S3), a cloud-native, distributed solution that allows enterprises to accelerate data access and delivery times, while ensuring low-latency access that is crucial for edge workloads, including cloud-based artificial intelligence and machine learning (AI/ML) applications, all through a single, unified platform.

Amazon S3 is an object storage service from Amazon Web Services (AWS) that offers scalability, data availability, security, and performance. Nasuni Edge for Amazon S3 supports petabyte-sized workloads and allows customers to run S3-compatible storage that supports select S3 APIs on AWS Outposts, AWS Local Zones, and customers’ on-premises environments. The Nasuni cloud-native architecture is designed to improve performance and accelerate business processes. Immediate file access is essential across various industries, where remote facilities with limited bandwidth generate large volumes of data that must be quickly processed and ingested into Amazon S3. In addition, customers are increasingly looking for a unified platform for both file and object data access and protection, enabling them to address small and large-scale projects with on-prem and cloud-centric workloads through a single offering.

With an influx of large volumes of data, simplified storage is a priority for any business looking to collect and quickly process data at the edge in 2024. Forrester Research expects 80% of new data pipelines in 2024 will be built for ingesting, processing, and storing unstructured data. Nasuni Edge for Amazon S3 is specifically designed for IT professionals, infrastructure managers, IT architects, and ITOps teams who want to improve application performance and data-driven workflow processes. It enables application developers to read and write using the Amazon S3 API to a global namespace backed by an Amazon S3 bucket from multiple endpoints located within AWS Local Zones, AWS Outposts, and on-premises environments.

Nasuni Edge for Amazon S3 enhances data access by providing local performance at the edge, multi-protocol read/write scenarios and support for more file metadata.

“Nasuni has been a long-time AWS partner, and this latest collaboration delivers the simplest solution for modernising an enterprise’s existing file infrastructure. With Nasuni Edge for Amazon S3, enterprises can support legacy workloads and take advantage of modern Amazon S3-based applications,” says David Grant, President, Nasuni. “Nasuni Edge for Amazon S3 allows an organisation to make unstructured data easily available to cloud-based AI services.”

In addition to providing fast access to a single, globally accessible namespace, the ability to ingest large amounts of data into a single location drives potential new opportunities for customer innovation and powerful new insights via integration with third-party AI services. Importantly, Nasuni’s multi-protocol support means these new data workloads are accessible from a vast range of existing applications without having to rewrite them.

Isha Jain - 14 March 2024

Navigating enterprise approach on public, hybrid and private clouds

By Adriaan Oosthoek, Chairman at Portus Data Centers

With the rise of public cloud services offered by industry giants like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP), many organisations have migrated or are considering migrating their workloads to these platforms. However, the decision is not always straightforward, as factors like cost, performance, security, vendor lock-in and compliance come into play. Increasingly, enterprises must think about workloads in the public cloud, the costs involved and when a private cloud or a hybrid cloud setup might be a better fit.

Perceived benefits of public cloud

Enterprises view the public cloud as a versatile solution that offers scalability, flexibility, and accessibility. Workloads that exhibit variable demand patterns, such as certain web applications, mobile apps and development environments, are well-suited for the public cloud. The ability to quickly provision resources and pay only for what is used may make it an attractive option for some businesses and applications.

Public cloud offerings also typically provide a vast array of managed services, including databases, analytics, machine learning and AI, and can enable enterprises to innovate rapidly without the burden of managing underlying infrastructure. This is also a key selling point of public cloud offerings.

But how have enterprises’ real-life experiences of public cloud set-ups compared with these expectations? Many have found the ‘pay-as-you-go’ pricing model to be very expensive and to have led to unexpected cost increases, particularly if workloads and usage spike unexpectedly or if the customer packages have been provisioned inefficiently. If not very carefully managed, the costs of public cloud services tend to balloon quickly.

Public cloud providers and enterprises that have adopted public cloud strategies are naturally seeking to address these concerns. Enterprises are increasingly adopting cloud cost management strategies, including using cost estimation tools, implementing resource tagging for better visibility, optimising instance sizes, and utilising reserved instances or savings plans to reduce costs. Cloud providers offer pricing calculators and cost optimisation recommendations to help enterprises forecast expenses and increase efficiency. Despite these efforts, the public cloud has proved to be far more expensive for many organisations than originally envisaged and managing costs effectively in public cloud set-ups requires considerable oversight, ongoing vigilance and optimisation efforts.

When private clouds make sense

There are numerous situations where a private cloud environment is a more suitable and cost-effective option. Workloads with stringent security and compliance requirements, such as those in regulated industries like finance, healthcare or government, often necessitate the control and isolation provided by a private cloud environment - hosted in a local data centre on a server that is owned by the user.

Many workloads with predictable and steady resource demands, such as legacy applications or mission-critical systems, may not need the flexibility of the public cloud and could incur much higher costs there over time. In such cases, a private cloud infrastructure offers much greater predictability and cost control, allowing enterprises to optimise resources based on their specific requirements.

And last but not least, once workloads are in the public cloud, vendor lock-in occurs. It is notoriously expensive to repatriate workloads back out of the public cloud, mainly due to excessive data egress costs.
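To make this trade-off concrete, here is a minimal Python sketch of the arithmetic involved. Every figure in it (the hourly rate, the fixed private cost, the egress rate and the data volume) and every function name is hypothetical, chosen only to keep the sums easy to follow; real pricing varies by provider, region and contract.

```python
def public_monthly_cost(hours_used: float, hourly_rate: float) -> float:
    """Pay-as-you-go: the bill scales with the hours actually consumed."""
    return hours_used * hourly_rate


def break_even_hours(private_monthly_cost: float, hourly_rate: float) -> float:
    """Monthly usage above which a fixed private cost becomes cheaper."""
    return private_monthly_cost / hourly_rate


def egress_cost(data_gb: float, rate_per_gb: float) -> float:
    """One-off charge for moving data back out of the public cloud."""
    return data_gb * rate_per_gb


# Hypothetical figures in an arbitrary currency unit (assumptions, not quotes).
HOURLY_RATE = 0.50        # public cloud price per instance-hour
PRIVATE_MONTHLY = 200.0   # fixed monthly cost of equivalent private capacity
HOURS_IN_MONTH = 730      # approximate hours in a month

steady = public_monthly_cost(HOURS_IN_MONTH, HOURLY_RATE)  # always-on workload
bursty = public_monthly_cost(100, HOURLY_RATE)             # runs 100 hours a month
threshold = break_even_hours(PRIVATE_MONTHLY, HOURLY_RATE)
repatriation = egress_cost(50_000, 0.08)                   # moving 50 TB back out

print(f"always-on in public cloud: {steady:.2f} per month")
print(f"bursty in public cloud:    {bursty:.2f} per month")
print(f"break-even usage:          {threshold:.0f} hours per month")
print(f"one-off egress charge:     {repatriation:.2f}")
```

With these made-up numbers, the always-on workload costs more in the public cloud than the fixed private capacity, while the bursty workload costs far less. The egress charge is a separate one-off cost that only appears when data is moved back out.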

Hybrid cloud

It is becoming increasingly clear that most organisations will benefit most from a hybrid cloud setup. Simply put, ‘horses for courses’. Only put those workloads that will benefit from the specific advantages into the public cloud and keep the other workloads within their own control in a private environment.

Retaining a private environment does not require an enterprise to have or run its own data centre. Rather, it should take capacity in a professionally managed, third-party colocation data centre located in the vicinity of the enterprise’s own premises. Capacity in a colocation facility will generally be more resilient, efficient, sustainable, and cost effective for enterprises than operating their own facilities. The private cloud infrastructure can also be outsourced – in a private instance. This is where regional and edge data centre operators such as Portus Data Centers come to the fore.

In most cases, larger organisations will end up with hybrid cloud IT architecture to benefit from the best of both worlds. This will require careful consideration of how to seamlessly pull those workloads together through smart networking. Regional data centres with strong network and connectivity options will be crucial to serving this demand for local IT infrastructure housing.

The era where enterprises went all-in on the cloud is over. While the public cloud offers scalability, flexibility, and access to cutting-edge technologies, concerns about cost, security, vendor lock-in and compliance persist. To mitigate these concerns, enterprises must carefully evaluate their workloads and determine the most appropriate hosting environment.

Isha Jain - 14 March 2024

Own Company empowers customers to capture value from their data

Own Company, a SaaS data platform, has announced a new product, Own Discover, that reflects the company’s commitment to empower every company operating in the cloud to own their own data.

Own Discover expands the company’s product portfolio beyond its backup and recovery, data archiving, seeding, and security solutions to help customers activate their data and amplify their business. With Own Discover, businesses will be able to use their historical SaaS data to unlock insights, accelerate AI innovation, and more in an easy and intuitive way.

Own Discover is part of the Own Data Platform, giving customers quick and easy access to all of their backed up data in a time-series format so they can:

Analyse their historical SaaS data to identify trends and uncover hidden insights

Train machine learning models faster, enabling AI-driven decisions and actions

Integrate SaaS data with external systems while maintaining security and governance

“For the first time, customers can easily access all of their historical SaaS data to understand their businesses better, and I’m excited to see our customers unleash the potential of their backups and activate their data as a strategic asset,” says Adrian Kunzle, Chief Technology Officer at Own. “Own Discover goes beyond data protection to active data analysis and insights and provides a secure, fast way for customers to learn from the past and inform new business strategies and growth.”

Isha Jain - 7 March 2024

Vultr announces new CDN in race to be the next hyperscaler

Vultr, a privately-held cloud computing platform, has announced the launch of Vultr CDN. This content delivery service pushes content closer to the edge without compromising security. Vultr now enables global content and media caching, empowering its worldwide community with services for scaling websites and web applications.

Traditional content delivery networks are incredibly complex, leaving businesses and web developers needing help to configure, manage, and optimise infrastructure cost-effectively and in a timely manner. They require immediate access to a powerful, scalable, and global content delivery network to accelerate digital content distribution and keep up with customer demand.

The launch of Vultr CDN marks the next phase of the company’s growth as a leading cloud computing platform. By adding global content caching and delivery to Vultr’s existing cloud infrastructure, the service simplifies infrastructure operations with unbeatable price-to-performance starting at $10/month, with the industry’s lowest bandwidth costs. For those requiring the highest performance CPUs, Vultr also offers unique high-frequency plans powered by high clock speed CPUs and NVMe local storage, optimised for websites and content management systems.

Purpose-built for performance-driven businesses, Vultr CDN delivers a network for fast, secure, and reliable content distribution and is optimised for content acceleration, API caching, image optimisation and more. Seamless integrations with Vultr Cloud Compute enable it to scale automatically and intelligently by selecting the best location for content delivery, thereby optimising user requests to save time and money.

Vultr CDN is now available for use as a beta service with a full release in February.

Isha Jain - 7 February 2024

Aruba combines cloud potential with electric mobility

Aruba has announced that it is ready to enter the FIM Enel MotoE World Championship with the arrival of the Aruba Cloud MotoE Team. As both manager and title sponsor of the team, Aruba is embarking on a new journey into the world of sport. The project runs parallel to one undertaken with customers in the construction of a new cloud platform, which is now complete.

There are several challenges that unite cloud technologies and the motor industry. First and foremost is sustainability, a key topic for cloud technologies as businesses look for more innovative and environmentally friendly products. The virtualisation of computational resources that underlies cloud computing, for example, allows for a reduction in the use of servers and, therefore, a reduction in emissions or, when using clean energy, a saving of natural resources.

Furthermore, the continuous search for performance optimisation also unites the two industries. Cloud technologies are crucial across all spheres, both at a business level and in everyday life. For this reason, cloud developers are always looking to save energy through increasing the efficiency of infrastructure and optimising the use of resources. Similarly, the MotoE team is a starting point from where Ducati can experiment and develop technologies that could, in the future, be used on road motorbikes and offer customers increasingly sustainable and clean vehicles.

The international dimension of the project is also particularly exciting, as over the years, Aruba Cloud has consolidated a significant international presence, becoming a player with more than 200,000 customers served in over 150 countries. Thanks to continuous investments in the innovation of its technology stack, Aruba Cloud is also distributed across the European data centre network.

The riders of the Aruba Cloud MotoE Team will be Chaz Davies, who after retiring from Superbike in 2021, joined the Ducati ERC team in the Endurance World Championship, acting as coach for the Aruba riders in Superbike and Supersport at the same time, and Armando Pontone, who after a stint in the Moto3 category won the National Trophy SS600 in 2021.

The team's official presentation will be held on 7 March at the Aruba Auditorium in Ponte San Pietro.

Isha Jain - 6 February 2024

Amidata implements Quantum ActiveScale to launch new cloud storage service

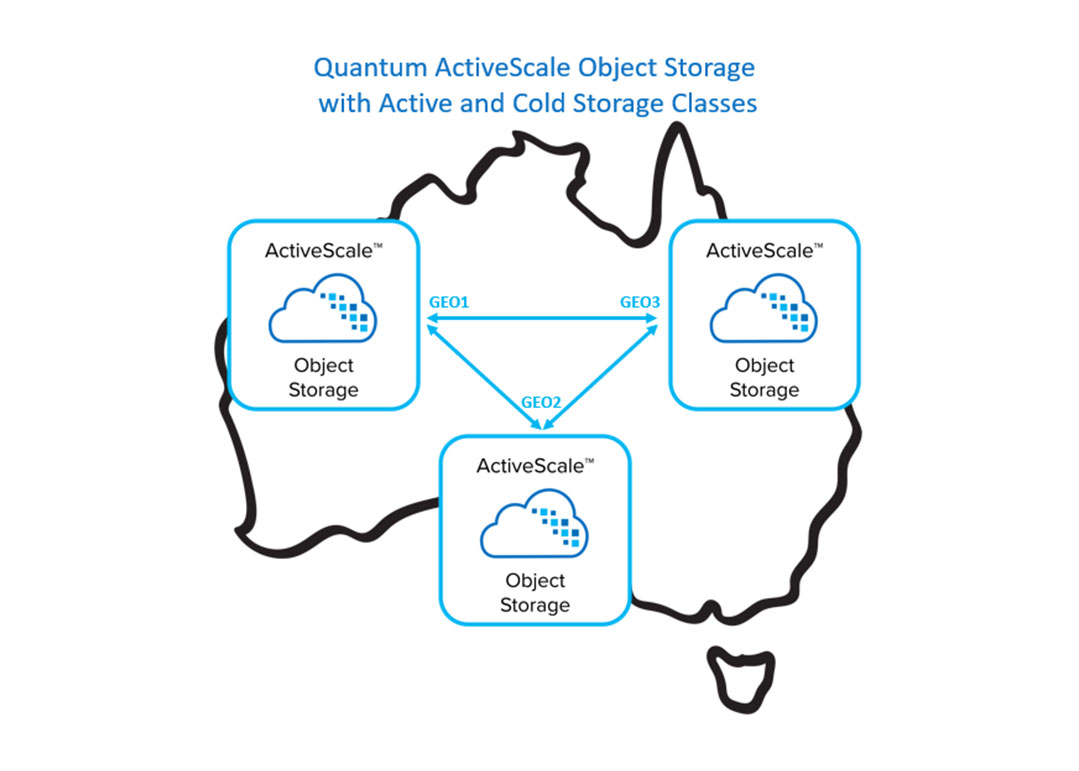

Quantum Corporation has announced that Amidata has implemented Quantum ActiveScale Object Storage as the foundation for its new Amidata Secure Cloud Storage service. Amidata has deployed ActiveScale object storage to build a secure, resilient set of cloud storage services accessible from across all of Australia, where the company is based. This way the company achieves simple operational efficiency, seamless scalability, and the ability to address customer needs across a wide range of use cases, workflows, and price points.

Amidata’s adoption of object storage also aligns with current IT trends. “More and more organisations are looking at object storage to create secure and massively scalable hybrid clouds,” says Michael Whelan, Managing Director, Amidata. “ActiveScale provides a durable, cost-effective approach for backing up and archiving fast-growing data volumes while also protecting data from ransomware attacks. Plus, by deploying the ActiveScale Cold Storage feature, we are delivering multiple storage classes as part of our service offerings, allowing us to target a wider set of customers and use cases. With our Secure Cloud cold storage option, customers can retain data longer and at a lower cost; that’s useful for offsite copies, data compliance, and increasingly, for storing the growing data sets that are fuelling AI-driven business analytics and insights.”

ActiveScale also supports multiple S3-compatible storage classes using flash, disk, and tape media, providing a seamless environment that can flexibly grow capacity and performance to any scale. Cold Storage, a key feature, integrates Quantum Scalar tape libraries as a lower cost storage class to efficiently store cold and archived data sets. Quantum’s tape libraries are nearline storage, where customers can easily access and retrieve cold or less used data with slightly longer latency (minutes instead of seconds) but at a low cost, leveraging the same infrastructure used by the major hyperscalers. ActiveScale intelligently stores and protects data across all storage resources using Quantum’s patented two-dimensional erasure coding to achieve extreme data durability, performance, availability, and storage efficiency.
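Quantum’s two-dimensional erasure coding is patented and its details are not described here. For readers unfamiliar with the general idea behind erasure coding, the Python sketch below uses single XOR parity, a far simpler scheme that is swapped in purely for illustration. The function names and sample data are hypothetical and are not part of ActiveScale.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(shards: List[bytes]) -> bytes:
    """Return one parity shard: the XOR of all equal-length data shards."""
    return reduce(xor_bytes, shards)


def recover(shards: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one lost shard (marked None) from the parity shard."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) > 1:
        raise ValueError("single parity can only repair one lost shard")
    repaired = list(shards)
    if missing:
        survivors = [shard for shard in shards if shard is not None]
        # XOR of the parity with every surviving shard yields the lost one.
        repaired[missing[0]] = reduce(xor_bytes, survivors, parity)
    return repaired


# Three data shards, imagined as living on three separate storage devices.
data = [b"cold", b"data", b"sets"]
parity = encode(data)

# Simulate losing the second device and rebuilding its shard.
restored = recover([b"cold", None, b"sets"], parity)
print(restored == data)
```

Single parity survives only one lost shard. Production erasure codes store several parity shards so that data remains recoverable after multiple simultaneous failures.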

For more information on Amidata’s implementation of ActiveScale, view the video case study.

Isha Jain - 1 February 2024

VAST Data forms strategic partnership with Genesis Cloud

VAST Data has announced a strategic partnership with Genesis Cloud. Together, VAST and Genesis Cloud aim to make AI and accelerated cloud computing more efficient, scalable and accessible to organisations across the globe.

Genesis Cloud helps businesses optimise their AI training and inference pipeline by offering performance and capacity for AI projects at scale while providing enterprise-grade features. The company is using the VAST Data Platform to build a comprehensive set of AI data services. With VAST, Genesis Cloud will lead a new generation of AI initiatives and Large Language Model (LLM) development by delivering highly automated infrastructure with exceptional performance and hyperscaler efficiency.

“To complement Genesis Cloud’s market-leading compute services, we needed a world-class partner at the data layer that could withstand the rigors of data-intensive AI workloads across multiple geographies,” says Dr Stefan Schiefer, CEO at Genesis Cloud. “The VAST Data Platform was the obvious choice, bringing performance, scalability and simplicity paired with rich enterprise features and functionality. Throughout our assessment, we were incredibly impressed not just with VAST’s capabilities and product roadmap, but also their enthusiasm around the opportunity for co-development on future solutions.”

Key benefits for Genesis Cloud with the VAST Data Platform include:

Multitenancy enabling concurrent users across public cloud: VAST allows multiple, disparate organisations to share access to the VAST DataStore, enabling Genesis Cloud to allocate orders for capacity as needed while delivering unparalleled performance.

Enhancing security in cloud environments: By implementing a zero trust security strategy, the VAST Data Platform provides superior security for the AI/ML and analytics workloads of Genesis Cloud customers, helping organisations achieve regulatory compliance and maintain the security of their most sensitive data in the cloud.

Simplified workloads: Managing the data required to train LLMs is a complex data science process. Using the VAST Data Platform’s high performance, single tier and feature rich capabilities, Genesis Cloud is delivering data services that simplify and streamline data set preparation to better facilitate model training.

Quick and easy to deploy: The intuitive design of the VAST Data Platform simplifies the complexities traditionally associated with other data management offerings, providing Genesis Cloud with a seamless and efficient deployment experience.

Improved GPU utilisation: By providing fast, real-time access to data across public and private clouds, VAST eliminates data loading bottlenecks to ensure high GPU utilisation, better efficiency and ultimately lower costs to the end customer.

Future-proof investment with robust enterprise features: The VAST Data Platform consolidates storage, database, and global namespace capabilities that offer unique productisation opportunities for service providers.

Isha Jain - 29 January 2024

Digital Realty to expand its service orchestration platform

Digital Realty has announced the continued momentum of ServiceFabric - its service orchestration platform that seamlessly interconnects workflow participants, applications, clouds and ecosystems on PlatformDIGITAL - its global data centre platform.

Following the recent introduction of Service Directory, a central marketplace that allows Digital Realty partners to highlight their offerings, over 70 members have joined the directory and listed more than 100 services, including secure and direct connections to over 200 global cloud on-ramps, creating a vibrant ecosystem for seamless interconnection and collaboration.

Service Directory is a core component of the ServiceFabric product family that underpins the organisation’s vision for interconnecting global data communities on PlatformDIGITAL and enabling customers to tackle the challenges of data gravity head-on.

Chris Sharp, Chief Technology Officer, Digital Realty, says, “ServiceFabric is redefining the way customers and partners interact with our global data centre platform. By fostering an open and collaborative environment, we're empowering businesses to build and orchestrate their ideal solutions with unparalleled ease and efficiency.”

An open interconnection and orchestration platform is a critical enabler for artificial intelligence (AI) and high-performance compute (HPC), especially as enterprises increasingly deploy private AI applications, which rely on the low-latency, private exchange of data between many members of an ecosystem. PlatformDIGITAL was chosen to be the home of many ground-breaking AI and HPC workloads and ServiceFabric was designed with the needs of cutting-edge applications in mind.

A key differentiator is Service Directory’s ‘click-to-connect’ capability, which allows customers to orchestrate and automate on-demand connections to the services they need, significantly streamlining workflows and removing manual configuration steps.

With ‘click-to-connect’, users can:

Generate secure service keys, granting controlled access to resources and partners with customisable security parameters.

Automate approval workflows and facilitate connections to Service Directory, paving the way for seamless interconnectivity.

Initiate service connections, significantly streamlining workflows between partners and customers.

Integrate seamlessly with Service Directory, creating a unified experience for discovery, connection, and orchestration.

Isha Jain - 25 January 2024
