Cyber attacks drop by nearly 10%

Four in 10 UK businesses (43%) and 30% of charities experienced cyber attacks or data breaches in the last 12 months, according to the latest Cyber Security Breaches Survey. While this marks a slight decrease from last year’s 50%, the threat level for medium and large businesses remains alarmingly high.

The average cost of the most disruptive breach was estimated at £1,600 for businesses and £3,240 for charities.

The drop in incidents is attributed mainly to fewer small businesses reporting breaches – but government officials warn against complacency. With cyber threats increasingly targeting critical infrastructure, the UK Government is introducing the Cyber Security and Resilience Bill, compelling organisations to strengthen their digital defences.

The survey found that 70% of large businesses now have a formal cyber strategy in place, compared to just 57% of medium-sized firms – exposing a potential gap in preparedness among mid-sized enterprises.

There has been a notable improvement in cyber hygiene practices among smaller businesses, with rising adoption of risk assessments, cyber insurance, formal cyber security policies and continuity planning.

These steps are seen as essential in building digital resilience across the UK economy.

However, the number of high-income charities implementing best practices such as risk assessments has declined. Insights suggest this may be linked to budgetary pressures, limiting their ability to invest in adequate cyber security measures.

Sawan Joshi, Group Director of Information Security at FDM Group, comments, “Keeping banking systems online is becoming more challenging, and technology alone isn’t enough. Skilled IT teams are crucial for spotting risks early and responding quickly to prevent disruptions. Organisations need to invest in ongoing training so their staff can strengthen system defences and recover fast when issues arise. A mix of advanced monitoring, backup systems, and a well-trained workforce is key to keeping services running and maintaining customer trust.”

The Government has also confirmed that UK data centres are now officially designated as critical national infrastructure. This means they will receive the same priority in the event of a major incident – such as a cyber attack – as essential services like water and energy.

Carly Weller - 11 April 2025

Juniper and Google Cloud enhance branch deployments

Juniper Networks has announced its collaboration with Google Cloud to accelerate new enterprise campus and branch deployments and optimise user experiences. With just a few clicks in the Google Cloud Marketplace, customers can subscribe to Google’s Cloud WAN solution alongside Juniper Mist wired, wireless, NAC, firewalls and secure SD-WAN solutions.

Unveiled at Google Cloud Next 25, the solution is designed to simply, securely and reliably connect users to critical applications and AI workloads whether on the internet, across clouds or within data centres.

“At Google Cloud, we’re committed to providing our customers with the most advanced and innovative networking solutions. Our expanded collaboration with Juniper Networks and the integration of its AI-native networking capabilities with Google’s Cloud WAN represent a significant step forward,” says Muninder Singh Sambi, VP/GM, Networking, Google Cloud. “By combining the power of Google Cloud’s global infrastructure with Juniper’s expertise in AI for networking, we’re empowering enterprises to build more agile, secure and automated networks that can meet the demands of today’s dynamic business environment.”

AIOps key to GenAI application growth

As the cloud expands and GenAI applications grow, reliable connectivity, enhanced application performance and low latency are paramount. Businesses are turning to cloud-based network services to meet these demands. However, many face challenges with operational complexity, high costs, security gaps and inconsistent application performance. Assuring the best user experience through AI-native operations (AIOps) is essential to overcoming these challenges and maximising efficiency.

Powered by Juniper’s Mist AI-Native Networking platform, Google’s Cloud WAN, a new solution from Google Cloud, delivers a fully managed, reliable and secure enterprise backbone for branch transformation. Mist is purpose-built to leverage AIOps for optimised campus and branch experiences, assuring that connections are reliable, measurable and secure for every device, user, application and asset.

“Mist has become synonymous with AI and cloud-native operations that optimise user experiences while minimising operator costs,” says Sujai Hajela, EVP, Campus and Branch, Juniper Networks. “Juniper’s AI-Native Networking Platform is a perfect complement to Google’s Cloud WAN solution, enabling enterprises to overcome campus and branch management complexity and optimise application performance through low latency connectivity, self-driving automation and proactive insights.”

Google’s Cloud WAN delivers high-performance connections for campus and branch

The campus and branch services on Google’s Cloud WAN driven by Mist provide a single, secure and high-performance connection point for all branch traffic. A variety of wired, wireless, NAC and WAN services can be hosted on Google Cloud Platform, enabling businesses to eliminate on-premises hardware, dramatically simplifying branch operations and reducing operational costs. By natively integrating Juniper and other strategic partners with Google Cloud, Google’s Cloud WAN solution enhances agility, enabling rapid deployment of new branches and services, while improving security through consistent policies and cloud-delivered threat protection.

Carly Weller - 11 April 2025

Compu Dynamics launches AI and HPC Services unit

Compu Dynamics has announced the launch of its full lifecycle AI and High-Performance Computing (HPC) Services unit, showcasing the company’s end-to-end capabilities.

The expanded portfolio encompasses the entire spectrum of data centre needs, from initial design and procurement to construction, operation and ongoing maintenance, with a particular emphasis on cutting-edge liquid cooling technologies for AI and HPC environments.

Compu Dynamics’ new AI and HPC service offerings build on the company’s expertise in white space deployment, including advanced liquid cooling and post-installation services. As a vendor-neutral solutions provider, the company is uniquely positioned to support equipment from virtually every manufacturer with no geographical limitations, ensuring clients receive unbiased recommendations and optimal solutions tailored to their specific requirements.

“Our advanced AI and HPC service offerings represent a significant evolution in data centre services,” says Steve Altizer, President and CEO of Compu Dynamics. “We have created this team to respond to the accelerating demand for highly-qualified technical support for high-density AI data centre infrastructure. By working with a variety of OEM partners and offering true end-to-end solutions, we are empowering our clients to focus on their core business while we handle the complexities of their modern critical infrastructure.”

The company’s holistic solutions portfolio addresses the growing need for specialised support in high-density computing environments. Compu Dynamics’ innovative liquid cooling solutions are said to offer superior efficiency and reduced energy consumption, making them essential for future-ready data centres. Key highlights of these service offerings include:

· Equipment evaluation, design consultation and procurement.

· Power distribution and liquid cooling system installation, startup, commissioning and quality assurance/quality control.

· Flexible maintenance service options designed for seamless, worry-free support including comprehensive fluid management, coolant sampling and contamination and corrosion prevention.

· Onsite staffing for day-to-day technical operations.

· Dedicated customer success manager.

· 24x7 emergency response team for technical issues and repair services.

“As AI and HPC workloads drive unprecedented demand on data centre infrastructure, our liquid cooling expertise has become increasingly crucial,” says Scott Hegquist, Director of AI/HPC Services at Compu Dynamics. “We’re committed to helping our clients navigate these challenges, providing cutting-edge solutions that optimise performance, efficiency and sustainability.”

Carly Weller - 11 April 2025

Data

Data Centres

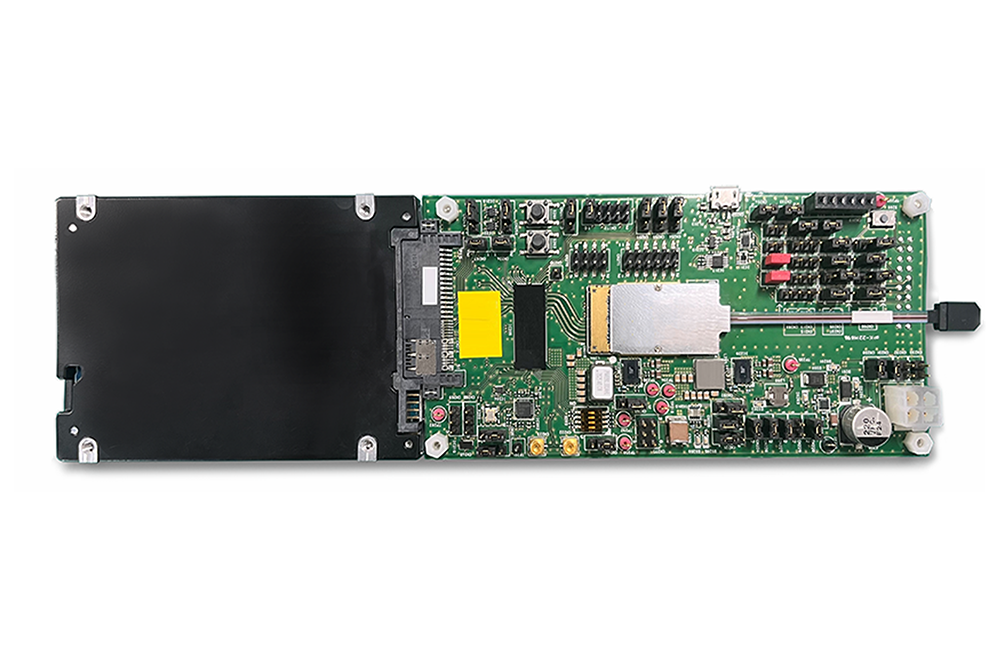

New PCIe 5.0-compatible broadband optical SSD

KIOXIA Corporation, AIO Core Co. and Kyocera Corporation have announced the development of a prototype PCIe 5.0-compatible broadband SSD with an optical interface (broadband optical SSD). The three companies will develop technologies for broadband optical SSDs to enhance their suitability for advanced applications that require high-speed transfer of large volumes of data, such as generative AI, and will also apply them to proof-of-concept (PoC) tests for future social implementation.

The new prototype achieved functional operation over the high-speed PCIe 5.0 interface, which offers twice the bandwidth of the previous PCIe 4.0 generation, by combining AIO Core’s IOCore optical transceiver with Kyocera’s OPTINITY optoelectronic integration module technologies.
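To put the "twice the bandwidth" figure into numbers, the sketch below uses the published PCIe per-lane signalling rates (16 GT/s for PCIe 4.0 and 32 GT/s for PCIe 5.0, both with 128b/130b encoding). The four-lane link width is an assumption for illustration only; the announcement does not state the prototype's lane count.

```python
# Per-lane raw signalling rates in gigatransfers per second (PCIe specifications).
RAW_GT_PER_S = {"PCIe 4.0": 16.0, "PCIe 5.0": 32.0}

# Both generations use 128b/130b line encoding: 128 payload bits per 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gbytes(generation: str, lanes: int) -> float:
    """Return the approximate one-direction link bandwidth in GB/s."""
    gbits_per_lane = RAW_GT_PER_S[generation] * ENCODING_EFFICIENCY
    return gbits_per_lane * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    lanes = 4  # assumed link width, common for NVMe SSDs; not stated in the article
    for generation in RAW_GT_PER_S:
        bandwidth = link_bandwidth_gbytes(generation, lanes)
        print(f"{generation} x{lanes}: {bandwidth:.2f} GB/s")
```

Whatever lane count is used, the ratio between the two generations stays at two, because only the per-lane signalling rate changes.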

In next-generation green data centres, replacing the electrical wiring interface with optical and utilising broadband optical SSD technology can significantly increase the physical distance between the compute and storage devices, while maintaining energy efficiency and high signal quality. It also contributes to the flexibility and efficiency of data centre system design, where digital diversification and the evolution of generative AI require complex, high-volume and high-speed data processing.

This achievement is the result of the Japanese ‘Next Generation Green Data Center Technology Development’ project JPNP21029. It is subsidised by the New Energy and Industrial Technology Development Organization (NEDO), which is under the ‘Green Innovation Fund Project: Construction of Next Generation Digital Infrastructure’.

In this project, companies will develop next-generation technologies with the goal of achieving more than 40% energy savings compared to current data centres. As part of this project, KIOXIA is developing broadband optical SSDs, AIO Core is developing optoelectronic fusion devices and Kyocera is developing optoelectronic device packages.

Axel Stoermann, Chief Technology Officer and Vice President at KIOXIA Europe, comments, “As we enter a new era where AI and high-performance data centres form the foundation of societal advancement, it's essential to address the challenge of power management to ensure our strides in technology align with global sustainability goals.

“This new prototype of a PCIe 5.0-compatible broadband SSD with an optical interface has the real potential to revolutionise data centres and to make them truly sustainable.”

Carly Weller - 10 April 2025

ZTE enhances all-optical networks with smart ODN solutions

Hans Neff, Senior Director of ZTE CTO Group, recently delivered a keynote speech entitled ‘Embracing the AI Era to Accelerate the Development of All-Optical Networks’ at the FTTH Conference 2025 in Amsterdam. He shared the company's solutions and experience in using AI to build an intelligent optical distribution network (ODN), improve the deployment efficiency of all-optical networks, and reduce deployment and operations and maintenance (O&M) costs.

The deployment of all-optical networks continues to accelerate, and rapidly developing AI is increasingly being integrated into broadband IT systems, significantly raising the level of intelligence in FTTx deployment, operation and maintenance. In Europe, the National Broadband Plan (NBP) and EU policies are actively advocating for an all-optical, gigabit and digitally intelligent society, and the demand for sustainable, efficient and future-oriented network infrastructure is growing.

Hans pointed out that operators are facing challenges such as high costs, complex network management and diverse deployment scenarios. AI and digital intelligence can provide end-to-end support for ODN construction in multiple aspects. Hans described how ZTE leverages AI technology to build intelligent ODNs across the entire process, enhancing efficiency and reducing operational complexity.

He elaborated on the specific applications and advantages of intelligent ODN across three phases: before, during and after deployment. Before deployment, one-click zero-touch site surveys, AI-based automatic planning and design, and paperless processes replace traditional manual methods, significantly reducing the time and human resources required. During deployment, real-time visualised and cost-effective solutions, along with tailored product combinations - such as pre-connectorised splice-free ODN, plug-and-play deployment and air-blowing solutions - ensure smooth construction while reducing both time and labour costs.

After deployment and delivery, intelligent real-time ODN network detection, analysis and risk warnings minimise fault location time, improving monitoring accuracy and troubleshooting efficiency. Additionally, new solutions like passive ID branch detection enable 24/7 real-time optical link monitoring, dynamic perception of link changes and automatic updates, ensuring 100% accuracy of end-to-end ODN resources. This proactive approach optimises network performance while streamlining maintenance and resource management.

Hans emphasised that collaboration among multiple parties is key to accelerating the construction of intelligent ODN networks. Suppliers play a vital role in driving digital and intelligent platforms, developing innovative product portfolios and delivering localised solutions through strategic partnerships. By leveraging AI and digital intelligence, operators and stakeholders can effectively address challenges such as high costs, complex construction and maintenance, and regional adaptability.

ZTE says it is committed to providing comprehensive intelligent ODN solutions and building end-to-end all-optical networks. Moving forward, ZTE will continue collaborating with global partners to harness its advanced network and intelligent capabilities. ZTE aims to help operators build and operate cutting-edge, efficient and intelligent broadband networks, unlocking new application scenarios and fostering a ubiquitous, green and efficient era of all-optical networks.

Carly Weller - 9 April 2025

Industry experts react to World Backup Day

Today, 31 March, marks this year's World Backup Day, and industry experts say that it once again offers a timely reminder of how vulnerable enterprise data can be.

Fred Lherault, Field CTO at Pure Storage, says that businesses cannot afford to think about backup on just one day a year, and predicts that 2025 could be a record-setting year for ransomware attacks.

Commenting on the day, Fred says, “31 March marks World Backup Day, serving as an important reminder for businesses to reassess their data protection strategies in the wake of an ever-evolving, and ever-growing threat landscape. However, cyber attackers aren’t in need of a reminder, and are probing for vulnerabilities 24/7 in order to invade systems. Given the valuable and sensitive nature of data, whether it resides in the public sector, healthcare, financial services or any other industry, businesses can’t afford to think about backup just one day per year.

“Malware is a leading cause of data loss, and ransomware, which locks down data with encryption rendering it useless, is among the most common forms of malware. In 2024, there were 5,414 reported global ransomware attacks, an 11% increase from 2023. Due to the sensitive nature of these kinds of breaches, it’s safe to assume that the real number is much higher. It’s therefore fair to suggest that 2025 could be a record-setting year for ransomware attacks. In light of these alarming figures, there is no place for an ‘it won’t happen to me’ mindset. Businesses need to be proactive, not reactive in their plans – not only for their own peace of mind, but also in the wake of new cyber resiliency regulations laid down by international governments.

“Unfortunately, while backup systems have provided an insurance policy against an attack in the past, hackers are now trying to breach these too. Once an attacker is inside an organisation’s systems, they will attempt to find credentials to immobilise backups. This will make it more difficult, time consuming and potentially expensive to restore.”

Meanwhile, Dr. Thomas King, CTO of global internet exchange operator DE-CIX, offers his own remarks about the occasion.

Thomas explains, “World Backup Day has traditionally carried a very simple yet powerful message for businesses: back up your data. A large part of this is 'data redundancy' – the idea that storing multiple copies of data in separate locations will offer greater resilience in the event of an outage or network security breach. Yet, as workloads have moved into the cloud, and AI and SaaS applications have become dominant vehicles for productivity, the concept of 'redundancy' has started to expand. Businesses not only need contingency plans for their data, but contingency plans for their connectivity. Relying on a single-lane, vendor-locked connectivity pathway is a bit like only backing your data up in one place – once that solution fails, it’s game over.

“In 2025, roughly 85% of software used by the average business is SaaS-based, with a typical organisation using 112 apps in their day-to-day operations. These cloud-based applications are wholly dependent on connectivity to function, and even minor slow-downs caused by congestion or packet loss on the network can kill productivity. This is even more true of AI-driven workloads, where businesses depend on low-latency, high-performance connectivity to generate real-time or near real-time calculations.

“Over the years, we have been programmed to believe that faster connectivity equals better connectivity, but the reality is far more nuanced. IT decision-makers frequently chase faster connections to improve their SaaS or AI performance, but 82% severely underestimate the impact of packet loss and the general performance of their connectivity. This is what some refer to as the 'Application Performance Trap' – expecting a single, lightning-fast connection to solve all performance issues. But what happens if that connectivity pathway becomes congested, or worse, fails entirely?

“This is why 'redundant' connectivity is essential. The main principle of redundancy in this context is that there should always be at least two paths leading to a destination – if one fails, the other can be used. This can be achieved by using a carrier-neutral Internet Exchange or IX, which facilitates direct peer-to-peer connectivity between businesses and their cloud-based workloads, essentially bypassing the public Internet. While IXs in the US were traditionally vendor-locked to a single carrier or data centre, neutral IXs allow businesses to establish multiple connections with different providers – sometimes to serve a particular use-case, but often in the interests of redundancy. Our research has shown that more than 80% of IXs in the US are now data centre and carrier neutral, presenting a perfect opportunity for businesses to not only back up their data, but also back up their connectivity this World Backup Day.”
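The "at least two paths" principle can be illustrated with a simple availability calculation. This is a sketch only: it assumes that path failures are independent of one another, and the 99.5% availability figure is a hypothetical value, not a DE-CIX measurement.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(path_availabilities: list[float]) -> float:
    """Probability that at least one path is up, assuming independent failures."""
    all_paths_down = 1.0
    for availability in path_availabilities:
        all_paths_down *= 1.0 - availability
    return 1.0 - all_paths_down


def expected_downtime_hours(availability: float) -> float:
    """Expected hours per year with no working path."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    path = 0.995  # hypothetical availability of a single connectivity path
    for label, paths in (("one path", [path]), ("two paths", [path, path])):
        availability = combined_availability(paths)
        downtime = expected_downtime_hours(availability)
        print(f"{label}: {availability:.6f} available, ~{downtime:.2f} h/year down")
```

In practice, two paths that share a carrier, a building entry point or a data centre do not fail independently, which is one reason the quote stresses carrier and data centre neutrality.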

Simon Rowley - 31 March 2025

Keysight and Coherent to enhance data transfer rates

Keysight Technologies and Coherent Corp have collaborated on a 200G multimode technology demonstration that will be showcased for the first time at the OFC Conference in San Francisco, California.

The 200G per lane vertical-cavity surface-emitting laser (VCSEL) technology provides higher data transfer rates and addresses industry demand for higher bandwidth in data centres. It will enable the industry to deliver AI/ML services while reducing power consumption and capital expense of short-reach data interconnects.

AI/ML deployment is driving extreme growth in the amount of data transfer in data centres. The cost of optical interconnects is an ever-growing portion of the Capex of the data centre, while the power consumption of the optical interconnects is an ever-growing portion of Opex. 200G multimode VCSELs revolutionise data transfer and network efficiency, offering the following benefits compared to single-mode transmission:

· Increased bandwidth: 200 Gbps/lane doubles the data throughput of the current highest speed multimode interconnects.

· Power efficiency: Significantly lower power-per-bit relative to single-mode alternatives driving down electrical power operational expense and helping large-scale data centres minimise their environmental impact.

· Cost efficiency: Multimode VCSELs are less costly to manufacture than single-mode technologies, providing lower capital outlay for short-reach data links.

· Compatible network architecture: AI pods and clusters require many high-speed, short-reach interconnects to share data amongst GPUs, aligning well with the strength of 200G multimode VCSELs.

The 200G multimode demonstration consists of Keysight’s new wideband multimode sampling oscilloscope technology, Keysight’s M8199B 256 GSa/s Arbitrary Waveform Generator (AWG) and Coherent’s 200G multimode VCSEL. The M8199B AWG drives a 106.25 GBaud PAM4 electrical signal into the Coherent VCSEL, and the PAM4 optical signal output from the VCSEL is measured on the wideband multimode scope, which displays the eye diagram. The demo showcases the feasibility and capability of Coherent’s new VCSEL technology, as well as Keysight’s ability to characterise and validate this technology.
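The link between the 106.25 GBaud signal and the "200G" label follows from PAM4 carrying two bits per symbol. The sketch below works through that arithmetic; treating the gap between the line rate and the nominal 200 Gbps as coding overhead is an assumption for illustration, since the announcement does not break it down.

```python
import math


def line_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Raw line rate for a PAM signal with the given number of amplitude levels."""
    bits_per_symbol = math.log2(levels)  # PAM4 has 4 levels, so 2 bits per symbol
    return symbol_rate_gbaud * bits_per_symbol


if __name__ == "__main__":
    raw = line_rate_gbps(106.25, levels=4)
    nominal = 200.0  # the "200G per lane" payload figure quoted in the article
    overhead = raw / nominal - 1.0  # assumed here to be coding overhead
    print(f"Raw line rate: {raw} Gbps, overhead vs nominal: {overhead:.2%}")
```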

Lee Xu, Executive Vice President and General Manager, Datacom Business Unit at Coherent, says, “Keysight has been a trusted partner and a leader in test instrumentation technology, providing advanced test solutions to us. We rely on Keysight products, such as the M8199B Arbitrary Waveform Generator, to validate our latest transceiver designs. We look forward to continuing our collaboration as we push the boundaries of optical communications with products based on 200G VCSEL, silicon photonics, and Electro-Absorption Modulated Laser (EML).”

Dr. Joachim Peerlings, Vice President and General Manager of Keysight’s Network and Data Centre Solutions Group, adds, “We are pleased with the progress the industry is making in bringing 200G multimode technology to market. Our close collaboration with Coherent enabled another milestone in high-speed connectivity. The industry will benefit from a more efficient and cost-effective technology to address their business-critical AI/ML infrastructure deployment in the data centre.”

Join Keysight experts at OFC, taking place 1-3 April in San Francisco, California, at Keysight's booth (stand 1301) for live demos on coherent optical innovations.

Simon Rowley - 28 March 2025

Broadband Forum launches trio of new open broadband projects

An improved user experience, including reduced latency and a wider choice of in-home applications, will be delivered to broadband consumers as the Broadband Forum launches three new projects.

The three new open broadband projects will provide open source software blueprints for application providers and Broadband Service Providers (BSPs) to follow. These will deliver a foundation for Artificial Intelligence (AI) and Machine Learning (ML) for network automation, additional tools for network latency and performance measurements, and on-demand connectivity for different applications.

“These new projects will play a key role in improving network performance measurement and monitoring and the end-user experience,” says Broadband Forum Technical Chair, Lincoln Lavoie. “Open source software is a crucial component in providing the blueprint for BSPs to follow and we invite interested companies to get involved.”

The new Open Broadband-CloudCO-Application Software Development Kit (OB-CAS), Open Broadband – Simple Two-Way Active Measurement Protocol (OB-STAMP), and Open Broadband – Subscriber Session Steering (OB-STEER) projects will bring together software developers and standards experts from the forum.

The projects will deliver open source reference implementations, which are examples of how Broadband Forum specifications can be implemented. They act as a starting point for application developers to base their designs on. In turn, those applications are available on platforms for BSPs to select and offer to their customers, shortening the path from the development of the specification to the first deployment of the technologies into the network.

“The development of open source software and open broadband standards are invaluable to the industry, laying the foundations for faster innovation through global collaboration,” says Broadband Forum CEO, Craig Thomas. “The Broadband Forum places the end-user experience at the forefront of all of our projects and is playing a crucial role in overcoming network issues.”

OB-CAS aims to simplify network monitoring and maintenance for BSPs, while also offering a wider selection of applications from various software vendors. Alongside this, network operations will be simplified and automated through existing Broadband Forum cloud standards that use AI and ML to improve the end-user experience.

OB-STAMP will build an easy-to-deploy component that simplifies network performance measurement between Customer Premises Equipment and IP Edge. The project will allow BSPs to proactively monitor their subscribers’ home networks to measure latency and, ultimately, avoid network failures. Four vendors have already signed up to join the efforts to reduce the cost and time associated with deploying infrastructure for measuring network latency.
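As a rough illustration of the kind of measurement OB-STAMP targets, the sketch below computes round-trip delay from the four timestamps used in two-way active measurement: sender transmit (t1), reflector receive (t2), reflector transmit (t3) and sender receive (t4). It does not implement the STAMP packet format or the OB-STAMP component itself; the class name and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoWaySample:
    """Timestamps (in seconds) from one hypothetical test-packet exchange."""

    t1: float  # sender transmit time (sender clock)
    t2: float  # reflector receive time (reflector clock)
    t3: float  # reflector transmit time (reflector clock)
    t4: float  # sender receive time (sender clock)

    def round_trip_delay(self) -> float:
        """Network round-trip time, excluding time spent inside the reflector."""
        return (self.t4 - self.t1) - (self.t3 - self.t2)


if __name__ == "__main__":
    # The reflector clock is deliberately offset by about 100 s to show that
    # round-trip delay does not depend on the two clocks being synchronised.
    sample = TwoWaySample(t1=0.000, t2=100.004, t3=100.005, t4=0.012)
    print(f"Round-trip delay: {sample.round_trip_delay() * 1000:.1f} ms")
```

Each subtraction uses timestamps from a single clock, so the result is unaffected by clock offset between the two ends. One-way delay, by contrast, would need synchronised clocks.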

Building on Broadband Forum’s upcoming technical report WT-474, OB-STEER will create a reference implementation of the Subscriber Session Steering architecture to deliver flexible, on-demand connectivity and simplify network management. Interoperability of Subscriber Session Steering is of high importance as it will be implemented in the access network equipment and edge equipment from various vendors.

Carly Weller - 5 March 2025

Five considerations when budgeting for enterprise storage

By Eric Herzog, Chief Marketing Officer at Infinidat.

Enterprise storage is fundamental to maintaining a strong enterprise data infrastructure. While storage has evolved over the years, the basic characteristics remain the same – performance, reliability, cost-effectiveness, flexibility, capacity, cyber resilience, and usability. The rule of thumb in enterprise storage is to look for faster, cheaper, easier and bigger capacity, but in a smaller footprint.

So, when you’re reviewing which storage solutions to entrust your enterprise to, what factors should you consider? What are the five key considerations that have risen to the top of enterprise storage buying decisions?

• Safeguard against cyber attacks, such as ransomware and malware, by increasing your enterprise’s cyber resilience and cyber recovery with automated cyber protection.

• Look to improve the performance of your enterprise storage infrastructure by up to 2.5x (or more), while simultaneously consolidating storage to save costs.

• Evaluate the optimal balance between your enterprise’s use of on-premises storage and the use of the public cloud (e.g. Microsoft Azure or Amazon AWS).

• Extend cyber detection across your storage estate.

• Initiate a conversation about infrastructure consumption services that are platform-centric, automated, and optimised for hybrid, multi-cloud environments.

The leading edge of enterprise storage has already moved into the next generation of storage arrays for all-flash and hybrid configurations. With cybercrime expected to cost enterprises in excess of £7.3 trillion in 2024, according to Cybersecurity Ventures, the industry has also seen a rise in cybersecurity capabilities being built into primary and secondary storage. Seamless hybrid multi-cloud support is now readily available. And enterprises are taking advantage of Storage-as-a-Service (STaaS) offerings with confidence and peace of mind.

When you’re buying enterprise storage for a refresh or for consolidation, it’s best to seek out solutions that are built from the ground up with cyber resilience and cyber recovery technology intrinsic to your storage estate, optimised by a platform-native architecture for data services. In today’s world of continuous cyber threats, enterprises are substantially extending cyber storage resilience and recovery, as well as real-world application performance, beyond traditional boundaries.

We have also seen our customers value scale-up architectures, such as 60%, 80% and 100% populated models of software-defined architected storage arrays. This can be particularly pertinent with all-flash arrays that are aimed at specific latency-sensitive applications and workloads. Having the option to utilise a lifecycle management controller upgrade program is also appealing when buying a next-generation storage solution. Thinking ahead, this option can extend the life of your data infrastructure.

In addition, adopting next-gen storage solutions that facilitate a GreenIT approach puts your enterprise in a position to both save money (better economics) and reduce your carbon emissions (better for the environment) by using less power, less rack space, and less cooling. I call this the “E2” approach to enterprise storage: better economics and a better environment together in one solution. It helps to have faster storage devices with massive bandwidth and blistering I/O speeds.

Storage is not just about storage arrays anymore

Traditionally, it was commonly known that if you needed more enterprise data storage capacity, you’d buy more storage arrays and throw them into your data centre. No more thought needed for storage, right? All done with storage, right? Well, not exactly.

Not only has this piecemeal approach caused small array storage 'sprawl' and complexity that can be exasperating for any IT team, but it doesn’t address the significant need to secure storage infrastructures or simplify IT operations.

Cyber storage resilience and recovery need to be a critical component of an enterprise’s overall cybersecurity strategy. You need to be sure that you can safeguard your data infrastructure with cyber capabilities, such as cyber detection, automated cyber protection, and near-instantaneous cyber recovery.

These capabilities are key to neutralising the effects of cyber attacks. They could mean the difference between you paying a ransom for your data that has been taken 'hostage' and not paying any ransom. When you can execute rapid cyber recovery of a known good copy of your data, then you can effectively combat the cybercriminals and beat them at their own sinister game.

One of the latest advancements in cyber resilience that you cannot afford to ignore is automated cyber protection, which helps you reduce the threat window for cyber attacks. With a strong automated cyber protection solution, you can seamlessly integrate your enterprise storage into your Security Operations Centre (SOC), Security Information and Event Management (SIEM), and Security Orchestration, Automation, and Response (SOAR) cyber security applications, as well as simple syslog functions for less complex environments. A security-related incident or event triggers immediate automated immutable snapshots of data, providing the ability to protect both block and file datasets. This is an extremely reliable way to ensure cyber recovery.
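To make the event-to-snapshot idea concrete, here is a minimal Python sketch of how a syslog-style security event might trigger immutable snapshots. It is an illustration under stated assumptions, not any vendor's implementation: `SnapshotStore`, `create_immutable_snapshot`, `handle_security_event`, the dataset names, and the seven-day retention lock are all hypothetical. The only real-world detail relied on is the syslog PRI field, where the priority value equals facility × 8 + severity and lower severity numbers are more urgent.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical illustration only: none of these names correspond to a real
# storage array API, SIEM product, or SOAR playbook format.


@dataclass(frozen=True)
class Snapshot:
    dataset: str
    taken_at: datetime
    locked_until: datetime  # retention lock: no deletion before this time


@dataclass
class SnapshotStore:
    snapshots: list = field(default_factory=list)

    def create_immutable_snapshot(self, dataset, now, retention_days=7):
        """Record a snapshot that is locked for the retention period."""
        snap = Snapshot(dataset, now, now + timedelta(days=retention_days))
        self.snapshots.append(snap)
        return snap

    def delete(self, snap, now):
        """Refuse deletion while the retention lock is still active."""
        if now < snap.locked_until:
            raise PermissionError("snapshot is still under retention lock")
        self.snapshots.remove(snap)


def parse_severity(message):
    """Extract the severity (0 = emergency ... 7 = debug) from a syslog PRI field."""
    if not message.startswith("<") or ">" not in message:
        raise ValueError("missing syslog PRI field")
    pri = int(message[1:message.index(">")])
    return pri % 8  # PRI = facility * 8 + severity


def handle_security_event(message, store, datasets, now=None, max_severity=2):
    """Snapshot every dataset when the event is 'critical' (2) or more urgent."""
    now = now or datetime.now(timezone.utc)
    if parse_severity(message) > max_severity:
        return []  # event not severe enough to act on
    return [store.create_immutable_snapshot(d, now) for d in datasets]
```

In this sketch a message such as `<34>ransomware pattern detected` (facility 4, severity 2) would snapshot both a block volume and a file share, while an informational message such as `<38>login ok` would be ignored. The retention lock stands in for immutability: an attacker who gains control of the management interface still cannot delete the known good copy before the lock expires.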

Another dimension of modern enterprise storage is seamless configurations of hybrid multi-cloud storage. The debate about whether an enterprise should put everything into the public cloud is over. There are very good use cases for the public cloud, but there continue to be very good use cases for on-prem storage, creating a hybrid multi-cloud environment that brings the greatest business and technical value to the organisation.

You can now harness a powerful on-prem storage solution in a cloud-like experience across the entire storage infrastructure, as if the storage array you love on-premises is sitting in the public cloud. Whether you choose Microsoft Azure, Amazon Web Services (AWS), or both, you can extend the data services usually associated with on-prem storage to the cloud, including ease of use, automation, and cyber storage resilience.

Purchasing new enterprise storage solutions is a journey. Why not set out on the journey to the future of enterprise storage, cyber security, and hybrid multi-cloud? If you use these top five considerations as a guidepost, you will end up in an infinitely better place for storage that transforms and transcends conventional thinking about the data infrastructure.

Infinidat at DTX 2025

Eric Herzog is a guest speaker at DTX 2025 and will be discussing “The New Frontier of Enterprise Storage: Cyber Resilience & AI” on the Advanced Cyber Strategies Stage. Join him for unique insights on 3 April 2025, from 11.15-11.40am.

DTX 2025 takes place on 2-3 April at Manchester Central. Infinidat will be located at booth #C81.


Simon Rowley - 21 February 2025

Data

News

Ataccama to deliver end-to-end visibility into data flows

Ataccama, the data trust company, has launched Ataccama Lineage, a new module within its Ataccama ONE unified data trust platform (V16).

Ataccama Lineage provides enterprise-wide visibility into data flows, offering organisations a clear view of their data’s journey from source to consumption. It helps teams trace data origins, resolve issues quickly, and ensure compliance - enhancing transparency and building confidence in data accuracy for business decision-making. Fully integrated with Ataccama’s data quality, observability, governance, and master data management capabilities, Ataccama Lineage enables organisations to make faster, more informed decisions while supporting goals such as audit readiness and regulatory compliance.

Data challenges are increasingly complex and, according to the Ataccama Data Trust Report 2025, 41% of Chief Data Officers are struggling with fragmented and inconsistent systems. Despite significant investments in integrations, AI, and cloud applications, enterprise data often remains siloed or poor in quality. This fractured landscape obscures visibility into data transformations and flows, creating inefficiencies and operational silos. Ataccama believes that the lack of clarity hampers collaboration, increases the risk of non-compliance with regulations like GDPR, erodes customer trust, drains resources, and slows decision-making - ultimately stifling organisational growth.

Ataccama Lineage is designed to simplify how organisations manage and trust their data. Its AI-powered capabilities automatically map data flows and transformations, saving time and reducing manual effort. For example, tracking customer financial data across fragmented systems is a common struggle in financial services. Ataccama Lineage provides clear, visual maps that trace issues like missing or duplicate records to their source. It also tracks sensitive data, such as PII, with audit-ready documentation to ensure compliance. By delivering reliable, trustworthy data, Ataccama Lineage establishes a strong foundation for AI and analytics, enabling organisations to make informed decisions and achieve long-term success.

Isaac Gabay, Senior Director, Data Management & Operations at Lennar, says, “As one of the nation’s leading homebuilders, Lennar is continually evolving our data foundation with best-in-class, cost-effective solutions to drive efficiency and innovation. Ataccama ONE Lineage’s detailed, visual map of data flows enables us to monitor data quality, trace issues through our ecosystem, and take a proactive approach to prevent and remediate quality concerns while maintaining centralised control. Ataccama ONE Lineage will provide unparalleled visibility, enhancing transparency, data literacy, and trust in our data. This partnership strengthens our ability to scale with confidence, deliver accurate insights, and adapt to the evolving needs of the homebuilding industry.”

Jessie Smith, VP of Data Quality at Ataccama, comments, "Managing today’s data pipelines means dealing with increasing sources, diverse data types, and transformations that impact systems upstream and downstream. The rise of AI and generative AI has amplified complexity while expanding data estates, and stricter audits demand greater transparency. Understanding how information flows across systems is no longer optional, it’s essential. Ataccama Lineage is part of the Ataccama ONE data trust platform which brings together data quality, lineage, observability and master data management into a unified solution for enterprise companies."

Key benefits of AI-powered Ataccama Lineage include:

- Faster resolution of data quality issues: Advanced anomaly detection identifies issues like missing records, unexpected values, or duplicates caused by transformation errors. For example, in retail operations with multiple sales channels, mismatched pricing or inventory discrepancies can disrupt business. Ataccama Lineage enables teams to quickly pinpoint root causes, assess downstream impacts, and resolve issues before they affect operations - ensuring continuity and reliability.

- Simplified compliance: Data classification and anomaly detection enhance visibility into sensitive data, such as PII, and track its transformations. Financial organisations benefit from audit-ready documentation that ensures PII is properly traced to authorised locations, reducing regulatory risks, meeting data privacy requirements, and fostering customer trust with transparent processes.

- Comprehensive visibility into data flows: Lineage maps provide a detailed, enterprise-wide view of data flows, from origin to dashboards and reports. Teams in sectors like manufacturing can analyse the lineage of key metrics, such as production efficiency or supply chain performance, identifying dependencies across ETL jobs, on-premises systems, and cloud platforms. Enhanced filtering focuses efforts on critical datasets, allowing faster issue resolution and better decision-making.

- Streamlined data modernisation efforts: During cloud migrations, Ataccama Lineage reduces risks by mapping redundant pipelines, dependencies, and critical datasets. Insurance companies transitioning to modern platforms can retire outdated systems and migrate only essential data, minimising disruption while maintaining compliance with regulations like Solvency II.
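
The root-cause and downstream-impact analysis described in these benefits can be pictured as traversal of a directed graph, where each edge points from a dataset to the datasets derived from it. The Python sketch below is a generic illustration of that idea and makes no claim about how Ataccama Lineage works internally; the dataset names and the `LINEAGE` mapping are invented for the example.

```python
from collections import deque

# Hypothetical lineage: each key is a dataset, each value lists the datasets
# derived directly from it. The names are made up for illustration.
LINEAGE = {
    "crm.customers": ["etl.clean_customers"],
    "erp.orders": ["etl.join_orders"],
    "etl.clean_customers": ["etl.join_orders"],
    "etl.join_orders": ["dw.sales_fact"],
    "dw.sales_fact": ["bi.revenue_dashboard", "bi.churn_report"],
}


def downstream(graph, start):
    """Impact analysis: every dataset that depends on `start`, directly or not."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def invert(graph):
    """Reverse every edge so that children point back to their parents."""
    inverted = {}
    for parent, children in graph.items():
        for child in children:
            inverted.setdefault(child, []).append(parent)
    return inverted


def upstream(graph, start):
    """Root-cause analysis: every dataset that feeds into `start`."""
    return downstream(invert(graph), start)
```

Calling `upstream(LINEAGE, "bi.revenue_dashboard")` returns the five datasets that feed the dashboard, which is the candidate list to inspect when a figure looks wrong. Calling `downstream(LINEAGE, "etl.clean_customers")` returns the reports and tables that would be affected if that cleaning step introduced duplicates.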


Simon Rowley - 13 February 2025
